Deploying Prometheus on Kubernetes

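Contents

- Preparation
- Creating a PV to store the TSDB data
- Deploying Prometheus
  - Preparation
  - Rolling out Prometheus
- Deploying Grafana
  - Preparation
  - Rolling out Grafana
- Deploying Ingress and proxying Prometheus and Grafana through it
  - Installing Ingress
  - Connecting Prometheus and Grafana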

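Preparation

First, create a namespace for Prometheus and Grafana, plus a directory to hold the YAML files written along the way so they do not get lost. I have made exactly that mistake before =_=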

root@master1:~# kubectl create namespace monitor

root@master1:~# mkdir k8s-prometheus && cd k8s-prometheus

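Creating a PV to store the TSDB data

I back the PV with NFS. I originally planned to set up a StorageClass but could not get it working, so I fell back to a statically provisioned volume.

Here come the YAML files. I accidentally deleted my original manifests, so what follows is exported from the live objects in the cluster (that is, the data stored in etcd). The export carries many fields that are not needed and have to be removed; applying it as copied may fail.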

root@master1:~/k8s-prometheus# kubectl get pv nfs -o yaml # my PV is named nfs; print it as YAML

apiVersion: v1
kind: PersistentVolume
metadata:
  annotations: # these annotations can be dropped
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"PersistentVolume","metadata":{"annotations":{},"name":"nfs"},"spec":{"accessModes":["ReadWriteMany"],"capacity":{"storage":"50Gi"},"nfs":{"path":"/zettafs0/nfsdir/cxk","server":"10.10.20.100"},"persistentVolumeReclaimPolicy":"Recycle"}}
    pv.kubernetes.io/bound-by-controller: "yes"
  creationTimestamp: "2022-10-31T15:35:50Z" # server-populated, not needed
  finalizers: # can also be removed
  - kubernetes.io/pv-protection
  name: nfs # name of the PV
  resourceVersion: "3292338" # remove
  uid: 7bb0f604-0984-412f-9f7f-fdda4a65a013 # remove
spec:
  accessModes:
  - ReadWriteMany # access mode; there are four (RWO - ReadWriteOnce, ROX - ReadOnlyMany, RWX - ReadWriteMany, RWOP - ReadWriteOncePod; the last one exists only in 1.22+ and only for CSI volumes), see the [official docs](https://kubernetes.io/docs/concepts/storage/persistent-volumes#access-modes) for details
  capacity:
    storage: 500Gi # capacity
  claimRef: # remove this block and the other server-populated fields below as well
    apiVersion: v1
    kind: PersistentVolumeClaim
    name: nfs
    namespace: monitor
    resourceVersion: "3288218"
    uid: 32d23baf-83e6-4834-83e6-192f2f941fc4
  nfs:
    path: /zettafs0/nfsdir/cxk
    server: 10.10.20.100
  persistentVolumeReclaimPolicy: Recycle
  volumeMode: Filesystem
status:
  phase: Bound
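With the server-populated fields stripped, the export above reduces to roughly the following. This is a minimal sketch rather than the lost original: the file name `pv.yaml` is an assumption, and the values are copied from the export's `spec` (so 500Gi, even though the last-applied annotation recorded 50Gi).

```shell
# Write a trimmed PersistentVolume manifest; pv.yaml is an assumed file name.
cat > pv.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs
spec:
  accessModes:
  - ReadWriteMany
  capacity:
    storage: 500Gi
  nfs:
    path: /zettafs0/nfsdir/cxk
    server: 10.10.20.100
  persistentVolumeReclaimPolicy: Recycle
  volumeMode: Filesystem
EOF
# Afterwards: kubectl apply -f pv.yaml
```

Only `metadata.name` and `spec` survive; `status`, `claimRef`, `uid`, `resourceVersion` and the annotations are all filled in again by the cluster.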

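The PVC follows the same pattern. It was also exported from the cluster, so it has the same kind of extra fields to remove; I will not annotate them again.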

root@master1:~/k8s-prometheus# kubectl -n monitor get pvc nfs -o yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"PersistentVolumeClaim","metadata":{"annotations":{},"name":"nfs","namespace":"monitor"},"spec":{"accessModes":["ReadWriteMany"],"resources":{"requests":{"storage":"50Gi"}}}}
    pv.kubernetes.io/bind-completed: "yes"
    pv.kubernetes.io/bound-by-controller: "yes"
  creationTimestamp: "2022-10-31T15:35:50Z"
  finalizers:
  - kubernetes.io/pvc-protection
  name: nfs
  namespace: monitor
  resourceVersion: "3288222"
  uid: 32d23baf-83e6-4834-83e6-192f2f941fc4
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 50Gi
  volumeMode: Filesystem
  volumeName: nfs
status:
  accessModes:
  - ReadWriteMany
  capacity:
    storage: 50Gi
  phase: Bound
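The same trimming applied to the PVC might look like this. It is a sketch under assumptions: the file name `pvc.yaml` is made up, and `volumeName: nfs` is kept from the export to pin the claim to the PV of that name. On a cluster that has a default StorageClass, an explicit `storageClassName: ""` would probably be needed as well; the export shows no class, so it is left out here.

```shell
# Write a trimmed PersistentVolumeClaim manifest; pvc.yaml is an assumed file name.
cat > pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs
  namespace: monitor
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 50Gi
  volumeName: nfs   # bind to the PV named nfs
EOF
# Afterwards: kubectl apply -f pvc.yaml
```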

Check that both were created and bound:

root@master1:~/k8s-prometheus# kubectl get pv,pvc -A -o wide

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE VOLUMEMODE

persistentvolume/nfs 500Gi RWX Recycle Bound monitor/nfs 2d15h Filesystem

NAMESPACE NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE VOLUMEMODE

monitor persistentvolumeclaim/nfs Bound nfs 50Gi RWX 2d15h Filesystem

Deploying Prometheus

Preparation

First, create a ConfigMap to hold the Prometheus configuration:

root@master1:~/k8s-prometheus# cat prometheus-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: monitor
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 15s
    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets: ['localhost:9090']

Next, set up RBAC:

root@master1:~/k8s-prometheus# cat rbac-setup.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups: [""]
  resources:
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs: ["get", "list", "watch"]
- apiGroups:
  - networking.k8s.io # Ingress is served from this group; the extensions group no longer serves it as of 1.22
  resources:
  - ingresses
  verbs: ["get", "list", "watch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: monitor
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: monitor

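Then write the Prometheus manifest.

I deploy Prometheus with a Deployment. If you need to pin it to particular nodes you can add a nodeSelector or affinity rules; I simply let it run on any worker node.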

root@master1:~/k8s-prometheus# cat prometheus_deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus
  namespace: monitor
  labels:
    app: prometheus
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      serviceAccountName: prometheus # must match the ServiceAccount defined in the RBAC manifest above
      containers:
      - image: bitnami/prometheus
        # I am not using the official image because I could not pull it. If you are in the same situation, search hub.docker.com for one you can pull, then change the image name here.
        # Sometimes Kubernetes cannot pull an image that a plain docker pull can. In that case pull it on the worker nodes first and set imagePullPolicy to IfNotPresent, or push it to a private registry.
        imagePullPolicy: IfNotPresent
        name: prometheus
        args:
        - "--config.file=/etc/prometheus/prometheus.yml"
        - "--storage.tsdb.path=/prometheus"
        - "--storage.tsdb.retention.time=2h" # keep 2h of data; this flag replaces the deprecated --storage.tsdb.retention
        - "--web.enable-admin-api" # enables the admin HTTP API, which includes operations such as deleting time series
        - "--web.enable-lifecycle" # enables hot reload: a POST to localhost:9090/-/reload takes effect immediately; be sure to set it, otherwise loading new configuration later is a hassle
        ports:
        - containerPort: 9090
          protocol: TCP
          name: http
        volumeMounts:
        - mountPath: "/prometheus"
          subPath: prometheus
          name: data # must match a volume name under volumes below
        - mountPath: "/etc/prometheus"
          name: prometheus-config # must match a volume name under volumes below
        resources:
          requests:
            cpu: 100m
            memory: 512Mi
          limits:
            cpu: 100m
            memory: 512Mi
      securityContext:
        runAsUser: 0
      volumes:
      - name: data
        persistentVolumeClaim:
          claimName: nfs
      - configMap:
          name: prometheus-config
        name: prometheus-config

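Next, write a Service manifest. I use NodePort here, although ClusterIP would work just as well (change type to ClusterIP and delete the nodePort line), since I put an Ingress in front of it later anyway. Note that nodePort 39090 lies outside the default NodePort range of 30000-32767, so it is only accepted when the API server's --service-node-port-range has been widened, as it has on my cluster.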

root@master1:~/k8s-prometheus# cat prometheus_svc.yaml

kind: Service
apiVersion: v1
metadata:
  labels:
    app: prometheus
  name: prometheus
  namespace: monitor
spec:
  type: NodePort
  ports:
  - port: 9090
    targetPort: 9090
    nodePort: 39090
  selector:
    app: prometheus
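Before copying a `nodePort` value like the one above, it can help to check it against the default NodePort range of 30000-32767. The helper below is a small sketch; the function name is made up for illustration.

```shell
# Succeed if the given port is inside the default NodePort range (30000-32767).
in_default_nodeport_range() {
    [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

if in_default_nodeport_range 39090; then
    echo "39090 is inside the default range"
else
    echo "39090 needs a widened --service-node-port-range on the API server"
fi
```

On a cluster with the default range, the API server rejects a Service that asks for 39090, so either pick a port inside the range or drop `nodePort` and let Kubernetes assign one.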

Rolling out Prometheus

Now for the part everyone enjoys: apply. The RBAC and ConfigMap manifests need to go in first:

root@master1:~/k8s-prometheus# kubectl apply -f rbac-setup.yaml

root@master1:~/k8s-prometheus# kubectl apply -f prometheus-configmap.yaml

root@master1:~/k8s-prometheus# kubectl apply -f prometheus_deploy.yaml

root@master1:~/k8s-prometheus# kubectl apply -f prometheus_svc.yaml
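The four commands above can be wrapped so that they stop at the first failure and then wait for the Deployment to become ready. This is only a sketch: the function name and the `KUBECTL` override variable are assumptions added for illustration, while the file names are the ones used in this post.

```shell
# KUBECTL can be overridden (for example KUBECTL=echo) to preview the commands.
KUBECTL="${KUBECTL:-kubectl}"

# Apply RBAC and the ConfigMap first, then the Deployment and the Service.
apply_prometheus() {
    for f in rbac-setup.yaml prometheus-configmap.yaml \
             prometheus_deploy.yaml prometheus_svc.yaml; do
        "$KUBECTL" apply -f "$f" || return 1
    done
    # Block until the Deployment reports its pod as ready.
    "$KUBECTL" rollout status deployment/prometheus -n monitor
}
```

Run `apply_prometheus` from the `k8s-prometheus` directory; with `KUBECTL=echo` it only prints what it would run.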

Check that everything is healthy:

root@master1:~/k8s-prometheus# kubectl -n monitor get cm,sa,pod,svc

NAME DATA AGE

configmap/kube-root-ca.crt 1 2d18h

configmap/prometheus-config 1 2d16h

NAME SECRETS AGE

serviceaccount/default 0 2d18h

serviceaccount/prometheus 0 2d18h

NAME READY STATUS RESTARTS AGE

pod/prometheus-7d659686d7-x62vt 1/1 Running 0 2d14h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/prometheus NodePort 10.100.122.13 <none> 9090:39090/TCP 2d16h

Prometheus is now reachable through the NodePort: open <node IP>:39090 in a browser.

Deploying Grafana

Preparation

Write the deployment manifest, again using a Deployment:

root@master1:~/k8s-prometheus# cat grafana-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana-core
  namespace: monitor
  labels:
    app: grafana
    component: core
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
        component: core
    spec:
      containers:
      - image: grafana/grafana
        name: grafana-core
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            cpu: 100m
            memory: 100Mi
          requests:
            cpu: 100m
            memory: 100Mi
        env:
        - name: GF_AUTH_BASIC_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "false"

Write a Service manifest. This time I use ClusterIP directly and let the Ingress proxy it later.

root@master1:~/k8s-prometheus# cat grafana_svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: monitor
  labels:
    app: grafana
    component: core
spec:
  type: ClusterIP
  ports:
  - port: 3000
  selector:
    app: grafana
    component: core

Rolling out Grafana

Apply time again:

root@master1:~/k8s-prometheus# kubectl apply -f grafana-deploy.yaml

root@master1:~/k8s-prometheus# kubectl apply -f grafana_svc.yaml

Check that it worked:

root@master1:~/k8s-prometheus# kubectl get pod,svc -n monitor

NAME READY STATUS RESTARTS AGE

pod/grafana-core-5c68549dc7-t92fv 1/1 Running 0 2d15h

pod/prometheus-7d659686d7-x62vt 1/1 Running 0 2d14h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/grafana ClusterIP 10.100.79.16 <none> 3000/TCP 2d15h

service/prometheus NodePort 10.100.122.13 <none> 9090:39090/TCP 2d16h

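Deploying Ingress and proxying Prometheus and Grafana through it

Installing Ingress

First, find the ingress-nginx deployment manifest on GitHub. If you cannot reach GitHub, no problem: I have already downloaded it and paste it here in full.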

apiVersion: v1

kind: Namespace

metadata:

name: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

---

# Source: ingress-nginx/templates/controller-serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

automountServiceAccountToken: true

---

# Source: ingress-nginx/templates/controller-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller

namespace: ingress-nginx

data:

allow-snippet-annotations: 'true'

---

# Source: ingress-nginx/templates/clusterrole.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

name: ingress-nginx

rules:

- apiGroups:

- ''

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

- namespaces

verbs:

- list

- watch

- apiGroups:

- ''

resources:

- nodes

verbs:

- get

- apiGroups:

- ''

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- events

verbs:

- create

- patch

- apiGroups:

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io

resources:

- ingressclasses

verbs:

- get

- list

- watch

---

# Source: ingress-nginx/templates/clusterrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

name: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-role.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

rules:

- apiGroups:

- ''

resources:

- namespaces

verbs:

- get

- apiGroups:

- ''

resources:

- configmaps

- pods

- secrets

- endpoints

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io

resources:

- ingressclasses

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- configmaps

resourceNames:

- ingress-controller-leader

verbs:

- get

- update

- apiGroups:

- ''

resources:

- configmaps

verbs:

- create

- apiGroups:

- ''

resources:

- events

verbs:

- create

- patch

---

# Source: ingress-nginx/templates/controller-rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-service-webhook.yaml

apiVersion: v1

kind: Service

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller-admission

namespace: ingress-nginx

spec:

type: ClusterIP

ports:

- name: https-webhook

port: 443

targetPort: webhook

appProtocol: https

selector:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-service.yaml

apiVersion: v1

kind: Service

metadata:

annotations:

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

type: NodePort

ipFamilyPolicy: SingleStack

ipFamilies:

- IPv4

ports:

- name: http

port: 80

protocol: TCP

targetPort: http

appProtocol: http

- name: https

port: 443

protocol: TCP

targetPort: https

appProtocol: https

selector:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

selector:

matchLabels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

revisionHistoryLimit: 10

strategy:

rollingUpdate:

maxUnavailable: 1

type: RollingUpdate

minReadySeconds: 0

template:

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

spec:

dnsPolicy: ClusterFirst

containers:

- name: controller

image: registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/nginx-ingress-controller:v1.1.1

imagePullPolicy: IfNotPresent

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

args:

- /nginx-ingress-controller

- --election-id=ingress-controller-leader

- --controller-class=k8s.io/ingress-nginx

- --configmap=$(POD_NAMESPACE)/ingress-nginx-controller

- --validating-webhook=:8443

- --validating-webhook-certificate=/usr/local/certificates/cert

- --validating-webhook-key=/usr/local/certificates/key

- --watch-ingress-without-class=true

- --publish-status-address=localhost

securityContext:

capabilities:

drop:

- ALL

add:

- NET_BIND_SERVICE

runAsUser: 101

allowPrivilegeEscalation: true

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: LD_PRELOAD

value: /usr/local/lib/libmimalloc.so

livenessProbe:

failureThreshold: 5

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

ports:

- name: http

containerPort: 80

protocol: TCP

hostPort: 80

- name: https

containerPort: 443

protocol: TCP

hostPort: 443

- name: webhook

containerPort: 8443

protocol: TCP

volumeMounts:

- name: webhook-cert

mountPath: /usr/local/certificates/

readOnly: true

resources:

requests:

cpu: 100m

memory: 90Mi

nodeSelector:

ingress-ready: 'true'

kubernetes.io/os: linux

tolerations:

- effect: NoSchedule

key: node-role.kubernetes.io/master

operator: Equal

serviceAccountName: ingress-nginx

terminationGracePeriodSeconds: 0

volumes:

- name: webhook-cert

secret:

secretName: ingress-nginx-admission

---

# Source: ingress-nginx/templates/controller-ingressclass.yaml

# We don't support namespaced ingressClass yet

# So a ClusterRole and a ClusterRoleBinding is required

apiVersion: networking.k8s.io/v1

kind: IngressClass

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: nginx

namespace: ingress-nginx

spec:

controller: k8s.io/ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml

# before changing this value, check the required kubernetes version

# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites

apiVersion: admissionregistration.k8s.io/v1

kind: ValidatingWebhookConfiguration

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

name: ingress-nginx-admission

webhooks:

- name: validate.nginx.ingress.kubernetes.io

matchPolicy: Equivalent

rules:

- apiGroups:

- networking.k8s.io

apiVersions:

- v1

operations:

- CREATE

- UPDATE

resources:

- ingresses

failurePolicy: Fail

sideEffects: None

admissionReviewVersions:

- v1

clientConfig:

service:

namespace: ingress-nginx

name: ingress-nginx-controller-admission

path: /networking/v1/ingresses

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: ingress-nginx-admission

namespace: ingress-nginx

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

rules:

- apiGroups:

- admissionregistration.k8s.io

resources:

- validatingwebhookconfigurations

verbs:

- get

- update

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

name: ingress-nginx-admission

namespace: ingress-nginx

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

rules:

- apiGroups:

- ''

resources:

- secrets

verbs:

- get

- create

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: ingress-nginx-admission

namespace: ingress-nginx

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml

apiVersion: batch/v1

kind: Job

metadata:

name: ingress-nginx-admission-create

namespace: ingress-nginx

annotations:

helm.sh/hook: pre-install,pre-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

spec:

template:

metadata:

name: ingress-nginx-admission-create

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

spec:

containers:

- name: create

image: dyrnq/kube-webhook-certgen:v1.1.1

imagePullPolicy: IfNotPresent

args:

- create

- --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc

- --namespace=$(POD_NAMESPACE)

- --secret-name=ingress-nginx-admission

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

securityContext:

allowPrivilegeEscalation: false

restartPolicy: OnFailure

serviceAccountName: ingress-nginx-admission

nodeSelector:

kubernetes.io/os: linux

securityContext:

runAsNonRoot: true

runAsUser: 2000

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml

apiVersion: batch/v1

kind: Job

metadata:

name: ingress-nginx-admission-patch

namespace: ingress-nginx

annotations:

helm.sh/hook: post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

spec:

template:

metadata:

name: ingress-nginx-admission-patch

labels:

helm.sh/chart: ingress-nginx-4.0.15

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

spec:

containers:

- name: patch

image: dyrnq/kube-webhook-certgen:v1.1.1

imagePullPolicy: IfNotPresent

args:

- patch

- --webhook-name=ingress-nginx-admission

- --namespace=$(POD_NAMESPACE)

- --patch-mutating=false

- --secret-name=ingress-nginx-admission

- --patch-failure-policy=Fail

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

securityContext:

allowPrivilegeEscalation: false

restartPolicy: OnFailure

serviceAccountName: ingress-nginx-admission

nodeSelector:

kubernetes.io/os: linux

securityContext:

runAsNonRoot: true

runAsUser: 2000

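Normally you can just copy this, paste it into a file, and apply it, but a few caveats are worth pointing out.

Open the YAML in vim and search for every image. Check on hub.docker.com whether each one exists and can be pulled; if so, pull it and update the image field in the YAML, otherwise the rollout may get stuck pulling images.

After a normal apply, the ingress-nginx-controller pod stayed Pending on my cluster. kubectl describe reported a node-selection problem, and the Deployment turned out to have a nodeSelector requiring ingress-ready: "true". Adding that label to a worker node fixed it.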
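The label fix can be scripted for one or more nodes. A sketch, with the function name and the `KUBECTL` override variable as assumptions; `node1.org` is one of the worker nodes shown later in this post.

```shell
# KUBECTL can be overridden (for example KUBECTL=echo) to preview the commands.
KUBECTL="${KUBECTL:-kubectl}"

# Add the ingress-ready=true label to every node name passed as an argument.
label_ingress_ready() {
    for node in "$@"; do
        "$KUBECTL" label node "$node" ingress-ready=true --overwrite || return 1
    done
}
```

For example, `label_ingress_ready node1.org` labels a single node; the Pending controller pod should then be scheduled without being recreated.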

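The upstream manifest runs the controller as a Deployment, but it can also run as a DaemonSet, as in the manifest below. This one is written for the much older 0.26.1 controller image, so treat it as a reference for the DaemonSet layout rather than something to apply unchanged on a recent cluster.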

---

apiVersion: v1

kind: Namespace

metadata:

labels:

name: ingress-nginx

name: ingress-nginx

---

apiVersion: v1

data:

use-forwarded-headers: "true"

kind: ConfigMap

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

name: nginx-configuration

namespace: ingress-nginx

---

apiVersion: v1

kind: ConfigMap

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

name: udp-services

namespace: ingress-nginx

---

apiVersion: v1

kind: ConfigMap

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

name: tcp-services

namespace: ingress-nginx

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

name: nginx-ingress-serviceaccount

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

name: nginx-ingress-clusterrole

rules:

- apiGroups:

- ""

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

- apiGroups:

- extensions

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- extensions

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

name: nginx-ingress-role

namespace: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- configmaps

- pods

- secrets

- namespaces

verbs:

- get

- apiGroups:

- ""

resourceNames:

- ingress-controller-leader-nginx

resources:

- configmaps

verbs:

- get

- update

- apiGroups:

- ""

resources:

- configmaps

verbs:

- create

- apiGroups:

- ""

resources:

- endpoints

verbs:

- get

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

name: nginx-ingress-role-nisa-binding

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: nginx-ingress-role

subjects:

- kind: ServiceAccount

name: nginx-ingress-serviceaccount

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

name: nginx-ingress-clusterrole-nisa-binding

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: nginx-ingress-clusterrole

subjects:

- kind: ServiceAccount

name: nginx-ingress-serviceaccount

namespace: ingress-nginx

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

name: nginx-ingress-controller

namespace: ingress-nginx

spec:

selector:

matchLabels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

template:

metadata:

annotations:

prometheus.io/port: "10254"

prometheus.io/scrape: "true"

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

spec:

containers:

- args:

- /nginx-ingress-controller

- --configmap=$(POD_NAMESPACE)/nginx-configuration

- --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services

- --udp-services-configmap=$(POD_NAMESPACE)/udp-services

- --publish-service=$(POD_NAMESPACE)/ingress-nginx

- --annotations-prefix=nginx.ingress.kubernetes.io

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:0.26.1

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

livenessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 10

name: nginx-ingress-controller

ports:

- containerPort: 80

name: http

- containerPort: 443

name: https

- containerPort: 10254

name: metrics

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 10

securityContext:

allowPrivilegeEscalation: true

capabilities:

add:

- NET_BIND_SERVICE

drop:

- ALL

runAsUser: 33

hostNetwork: true

serviceAccountName: nginx-ingress-serviceaccount

terminationGracePeriodSeconds: 300

---

apiVersion: v1

kind: Service

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

name: ingress-nginx

namespace: ingress-nginx

spec:

ports:

- name: http

port: 80

protocol: TCP

targetPort: 80

- name: https

port: 443

protocol: TCP

targetPort: 443

selector:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

type: NodePort

Check that Ingress is healthy:

# node1 carries more labels than node2 and node3; I added them while troubleshooting the Ingress install

root@master1:~# kubectl get node --show-labels

NAME STATUS ROLES AGE VERSION LABELS

master1.org Ready control-plane 22d v1.25.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master1.org,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=

master2.org Ready control-plane 21d v1.25.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master2.org,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=

master3.org Ready control-plane 21d v1.25.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master3.org,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=

node1.org Ready <none> 22d v1.25.2 app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingress-ready=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1.org,kubernetes.io/os=linux

node2.org Ready <none> 22d v1.25.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingress-ready=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=node2.org,kubernetes.io/os=linux

node3.org Ready <none> 22d v1.25.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingress-ready=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=node3.org,kubernetes.io/os=linux

root@master1:~# kubectl -n ingress-nginx get pod,svc

NAME READY STATUS RESTARTS AGE

pod/ingress-nginx-admission-create-5s9hh 0/1 Completed 0 2d23h # these two Job pods show Completed, which is expected and does not affect serving

pod/ingress-nginx-admission-patch-dscst 0/1 Completed 0 2d23h

pod/ingress-nginx-controller-5d55cd8c94-8m7x4 1/1 Running 0 2d23h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ingress-nginx-controller NodePort 10.100.136.139 <none> 80:43400/TCP,443:42204/TCP 2d23h

service/ingress-nginx-controller-admission ClusterIP 10.100.205.240 <none> 443/TCP 2d23h

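Proxying Prometheus and Grafana through Ingress

I hit a small pitfall here. My first plan was path-based routing, but the pages would not load in the browser that way, and Grafana explicitly suggested checking whether a reverse proxy was the cause. Switching to host-based routing worked.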

root@master1:~/k8s-prometheus# cat ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: prometheus-grafana
  namespace: monitor
spec:
  rules:
  - host: prometheus.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: prometheus
            port:
              number: 9090
  - host: grafana.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: grafana
            port:
              number: 3000
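Path-based routing tends to fail for these two apps because each assumes it is served from `/`. One possible way to make it work, untested against this cluster, is to tell the app which sub-path it lives under. The sketch below does that for Grafana through its `GF_SERVER_ROOT_URL` and `GF_SERVER_SERVE_FROM_SUB_PATH` settings; the `/grafana/` sub-path, the function name and the `KUBECTL` override variable are assumptions, and the Ingress rule would need a matching `/grafana` path.

```shell
# KUBECTL can be overridden (for example KUBECTL=echo) to preview the command.
KUBECTL="${KUBECTL:-kubectl}"

# Tell Grafana it is served under /grafana/ (a hypothetical sub-path).
grafana_subpath_env() {
    "$KUBECTL" set env deployment/grafana-core -n monitor \
        GF_SERVER_ROOT_URL=http://grafana.com/grafana/ \
        GF_SERVER_SERVE_FROM_SUB_PATH=true
}
```

Prometheus has a comparable `--web.external-url` flag. Host-based routing, as used in the manifest above, avoids all of this and is the simpler choice.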

Check that the Ingress is up:

root@master1:~/k8s-prometheus# kubectl -n monitor get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

prometheus-grafana <none> prometheus.com,grafana.com localhost 80 2d15h

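All that remains is to add name resolution to the hosts file, after which the browser can reach both sites directly. On Windows the file is C:\Windows\System32\drivers\etc\hosts; on Linux it is /etc/hosts. Append these two lines to the end, using the same host names as in the Ingress rules:

xxx prometheus.com # xxx is the IP of the node running the ingress-nginx-controller pod

xxx grafana.com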
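The two hosts entries can be generated from a single node IP so that they always match the host names in the Ingress rules (`prometheus.com` and `grafana.com`). A sketch; the function name is made up, and 192.0.2.10 is a placeholder documentation address, not a value from this cluster.

```shell
# Print hosts-file entries that map both Ingress host names to one node IP.
hosts_entries() {
    for name in prometheus.com grafana.com; do
        printf '%s %s\n' "$1" "$name"
    done
}

# Placeholder address; substitute the IP of the node running the controller pod.
hosts_entries 192.0.2.10
```

On Linux the output can be appended with `hosts_entries <node IP> | sudo tee -a /etc/hosts`. To test without touching the hosts file at all, send the host name as a header instead: `curl -H 'Host: prometheus.com' http://<node IP>/`.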

Connecting Prometheus and Grafana

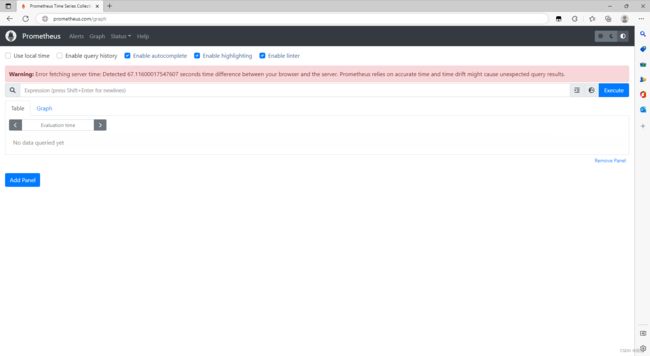

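Open a browser and check that both pages load.

Both are working. Grafana's default credentials are admin/admin. After logging in, add Prometheus as a data source to connect the two; for the URL, use the Prometheus Service's ClusterIP and port. Then find a suitable dashboard, or build your own, to get graphs like the one shown here.

What I actually monitor is basic server information such as CPU and memory, but the ConfigMap above declares no scrape job for that. So the ConfigMap YAML has to be edited and applied again, and then Prometheus reloaded.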
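The data source can also be declared in a file instead of being clicked together in the UI, using Grafana's data source provisioning format. The sketch below only writes such a file. The file name is an assumption, the URL uses the Service's in-cluster DNS name rather than its ClusterIP, and mounting the file into the Grafana pod under `/etc/grafana/provisioning/datasources/` (for example from a ConfigMap) is not shown.

```shell
# Write a Grafana data source provisioning file; the file name is an assumption.
cat > grafana-datasource.yaml <<'EOF'
apiVersion: 1
datasources:
- name: Prometheus
  type: prometheus
  access: proxy
  # In-cluster DNS name of the prometheus Service in the monitor namespace
  url: http://prometheus.monitor.svc:9090
  isDefault: true
EOF
```

Using the DNS name means the data source keeps working if the Service is ever recreated with a different ClusterIP.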

root@master1:~/k8s-prometheus# cat prometheus-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: monitor
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 15s
    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets: ['localhost:9090']
    - job_name: 'Linux Server'
      static_configs:
      - targets:
        - '10.10.21.170:9100' # the host to monitor; node_exporter must already be installed on it

root@master1:~/k8s-prometheus# kubectl apply -f prometheus-configmap.yaml

root@master1:~/k8s-prometheus# curl -XPOST 10.100.122.13:9090/-/reload # the address is the Service's ClusterIP plus port
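The reload call can be wrapped in a small helper that fails loudly on an HTTP error. A sketch; the function name and the `CURL` override variable are assumptions, and the endpoint only exists because `--web.enable-lifecycle` is set on the Deployment.

```shell
# CURL can be overridden (for example CURL=echo) to preview the command.
CURL="${CURL:-curl}"

# POST to the lifecycle endpoint of the Prometheus at host:port given as $1.
reload_prometheus() {
    "$CURL" --fail --silent --show-error --request POST "http://$1/-/reload"
}
```

Call it as `reload_prometheus 10.100.122.13:9090`. An updated ConfigMap takes a short while to reach the file mounted in the pod, so if the new job does not show up under Targets, wait a little and run the reload again.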