Apache Airflow

History

Airflow was born out of Airbnb’s problem of dealing with large amounts of data that was being used in a variety of jobs. To speed up the end-to-end process, Airflow was created to quickly author, iterate on, and monitor batch data pipelines. Airflow later joined Apache.

The Platform

Apache Airflow is a platform for programmatically authoring, scheduling, and monitoring workflows. It is completely open-source with wide community support.

Airflow as an ETL Tool

Airflow is written in Python, so we can interact with any third-party Python API to build a workflow. It follows the ETL flow (extract, transform, load) while holding that ETL steps are best expressed as code. As a result, Airflow is far more customisable than other ETL tools, most of which are user-interface heavy.

Apache Airflow is suited to tasks ranging from pinging specific API endpoints to data transformation to monitoring.

Directed Acyclic Graph

The workflow is designed as a directed acyclic graph (DAG). This is a graph whose edges each point in one direction only, and in which it is impossible to come back to the same node by following the edges. Each workflow takes the form of a DAG.
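
To make the "no way back to the same node" property concrete, here is a minimal sketch that uses Python's standard graphlib module (Python 3.9+) rather than Airflow itself. The task names extract, transform and load are made up for the illustration, and is_acyclic is a hypothetical helper, not an Airflow function.

```python
from graphlib import CycleError, TopologicalSorter

# Each key is a task; its set holds the tasks that must finish first.
# The task names are made up for this illustration.
workflow = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
}

# A valid DAG always has at least one ordering that respects every edge.
order = list(TopologicalSorter(workflow).static_order())
print(order)  # ['extract', 'transform', 'load']


def is_acyclic(graph):
    """Return True if the graph has no cycle, i.e. it is a valid DAG."""
    try:
        list(TopologicalSorter(graph).static_order())
        return True
    except CycleError:
        return False


# Making "extract" wait on "load" closes a loop, so this is no longer a DAG.
cyclic = {**workflow, "extract": {"load"}}
print(is_acyclic(workflow), is_acyclic(cyclic))  # True False
```

A workflow with a cycle could never finish, because every task in the loop would be waiting on another task in the same loop.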

Task

Each node in a DAG is a task.

Operators

A task is executed using an operator. An operator defines the actual work to be done: each one describes a single task, or one node of a DAG. The DAG makes sure that operators get called and executed in a particular order.
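
The split between "what to do" (the operator) and "when to do it" (the DAG) can be sketched in plain Python. This is a toy model, not Airflow's implementation: the classes Operator, CallableOperator and NoOpOperator are hypothetical names that only loosely mirror the idea behind Airflow's PythonOperator and DummyOperator.

```python
class Operator:
    """Toy stand-in for an operator: one unit of work with a unique id."""

    def __init__(self, task_id):
        self.task_id = task_id

    def execute(self):
        raise NotImplementedError


class CallableOperator(Operator):
    """Runs a Python function, loosely in the spirit of PythonOperator."""

    def __init__(self, task_id, python_callable):
        super().__init__(task_id)
        self.python_callable = python_callable

    def execute(self):
        return self.python_callable()


class NoOpOperator(Operator):
    """Does nothing, loosely in the spirit of DummyOperator."""

    def execute(self):
        return None


# The workflow decides the order; each operator only knows its own work.
tasks = [NoOpOperator("start"), CallableOperator("greet", lambda: "Hello world!")]
results = {task.task_id: task.execute() for task in tasks}
print(results)  # {'start': None, 'greet': 'Hello world!'}
```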

There are different types of operators available (given on the Airflow Website):

- airflow.operators.bash_operator: executes a bash command
- airflow.operators.docker_operator: implements the Docker operator
- airflow.operators.email_operator: sends an email
- airflow.operators.hive_operator: executes HQL code or a Hive script in a specific Hive database
- airflow.operators.mssql_operator: executes SQL code in a specific Microsoft SQL database
- airflow.operators.slack_operator.SlackAPIOperator: posts messages to a Slack channel
- airflow.operators.dummy_operator: an operator that does literally nothing; it can be used to group tasks in a DAG

And many more.

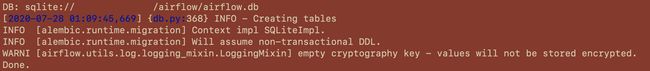

Installing Airflow

    # add the path to the airflow directory (~/airflow) under the variable
    # AIRFLOW_HOME in .bash_profile
    $ export AIRFLOW_HOME=~/airflow
    $ pip3 install apache-airflow
    $ airflow version
    # initialise the db
    $ airflow initdb
    # the database will be created in airflow.db by default

Create a dags directory inside airflow. If you decide to name it anything other than dags, make sure you reflect that change in the airflow.cfg file by changing the dags_folder path.

    # in the airflow directory, create a directory called dags
    $ mkdir dags

Quick note on airflow.cfg:

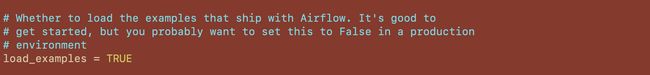

Make sure to open up your airflow.cfg to set a default configuration. You can configure your webserver details:

Set your time zone, the type of executor, and whether or not to load the example DAGs (definitely set load_examples to TRUE to explore them).

Another important variable to update is dag_dir_list_interval. This specifies the refresh time to scan for new DAGs in the dags folder. The default is set to 5 minutes.
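
Since airflow.cfg is an INI-style file, the settings mentioned above can be inspected with Python's standard configparser. The sketch below parses a small illustrative fragment instead of a real file: the dags_folder path is hypothetical, and the section names are my understanding of where these options live in an Airflow 1.x config, so check them against your own airflow.cfg.

```python
import configparser

# Illustrative fragment only; a real airflow.cfg has many more options,
# and the dags_folder path here is hypothetical.
SAMPLE_CFG = """
[core]
dags_folder = /home/user/airflow/dags
executor = SequentialExecutor
load_examples = True

[scheduler]
dag_dir_list_interval = 300
"""

# Interpolation is disabled so literal % characters are left untouched.
parser = configparser.ConfigParser(interpolation=None)
parser.read_string(SAMPLE_CFG)

executor = parser.get("core", "executor")
load_examples = parser.getboolean("core", "load_examples")
refresh_seconds = parser.getint("scheduler", "dag_dir_list_interval")

# 300 seconds is the 5-minute refresh interval described above.
print(executor, load_examples, refresh_seconds / 60)
```

To read your actual file, parser.read(path) can be used in place of read_string.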

Continuing with the setup…

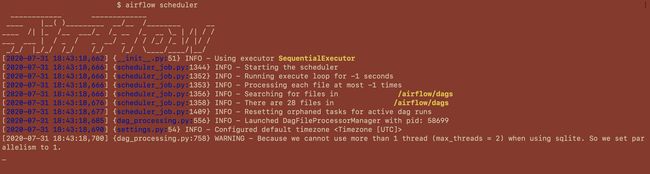

Next is to start the scheduler. “The Airflow scheduler monitors all tasks and DAGs. Behind the scenes, it spins up a subprocess, which monitors and stays in sync with a folder for all DAG objects it may contain, and periodically (every minute or so) collects DAG parsing results and inspects active tasks to see whether they can be triggered.” [Airflow Scheduler]
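
The idea of inspecting tasks "to see whether they can be triggered" can be sketched with the standard graphlib module (Python 3.9+). This is only a toy simulation under the assumption that every triggered task succeeds; it is not how the Airflow scheduler is implemented, and report_task is a made-up task name.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks mapped to the tasks they wait on.
dependencies = {
    "dummy_task": set(),
    "hello_task": {"dummy_task"},
    "report_task": {"dummy_task"},
}

sorter = TopologicalSorter(dependencies)
sorter.prepare()

rounds = []
while sorter.is_active():
    # Tasks whose upstream tasks have all finished can be triggered now.
    ready = sorted(sorter.get_ready())
    rounds.append(ready)
    # Pretend every triggered task succeeds before the next check.
    sorter.done(*ready)

print(rounds)  # [['dummy_task'], ['hello_task', 'report_task']]
```

Each inner list is one scheduling pass: the second pass only becomes possible once dummy_task has been marked as done.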

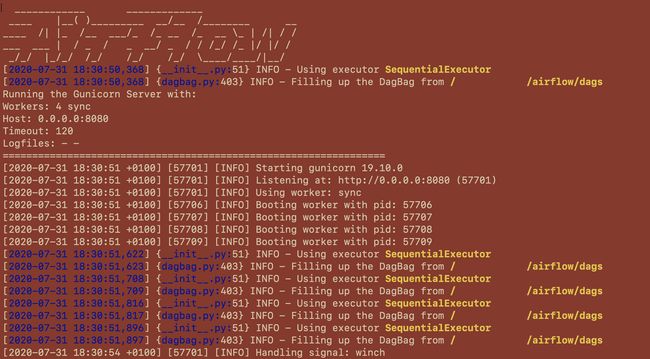

    $ airflow scheduler

The scheduler starts an instance of the executor specified in airflow.cfg. The default Airflow executor is SequentialExecutor.

Next up, open a new terminal tab and cd to the airflow directory. From here we’ll start the server.

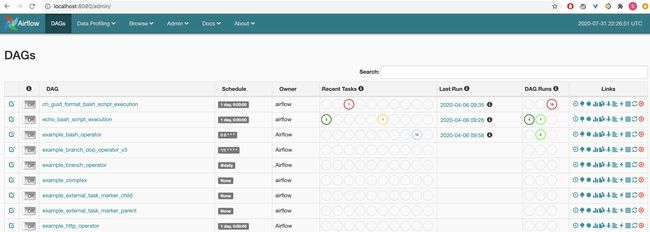

    $ airflow webserver
    # it is now running on http://localhost:8080/admin/

This is the home page that greets you. It lists all the DAGs in your dags folder, plus the pre-written examples if you set the load_examples option to TRUE. You can switch a DAG on or off from here, or after clicking on the DAG to open up its workflow.

Although the default configuration settings are stored in ~/airflow/airflow.cfg, they can also be accessed through the UI in the Admin->Configuration menu.

Example DAG

Creating a DAG

Let's look at a super simple "Hello World" DAG. It comprises two tasks, one using the DummyOperator and one using the PythonOperator.

The first step is to create a python script in the dags folder. This script defines the various tasks and operators.

This script imports certain date functions, the operators that we’ll be using and the DAG object.

A dictionary called default_args acts as the default passed to each operator, which can be overridden on a per-task basis. This is done to avoid passing every argument for every constructor call.
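
The precedence rule (shared defaults first, per-task values win) can be illustrated with plain dictionaries. This is a sketch of the effective behaviour only, not Airflow's internal mechanism, and resolve_args is a hypothetical helper invented for the example.

```python
from datetime import timedelta

default_args = {
    "owner": "airflow",
    "retries": 1,
    "retry_delay": timedelta(minutes=5),
}


def resolve_args(default_args, **task_args):
    """Combine shared defaults with per-task arguments; the task wins."""
    return {**default_args, **task_args}


# Inherits every default.
hello_args = resolve_args(default_args, task_id="hello_task")
# Overrides one default for this task only.
dummy_args = resolve_args(default_args, task_id="dummy_task", retries=0)

print(hello_args["retries"], dummy_args["retries"])  # 1 0
```

Note that the shared dictionary itself is left unchanged, so an override on one task never leaks into another.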

    from datetime import datetime as dt
    from datetime import timedelta

    from airflow.utils.dates import days_ago

    # The DAG object; we'll need this to instantiate a DAG
    from airflow import DAG

    # importing the operators required
    from airflow.operators.python_operator import PythonOperator
    from airflow.operators.dummy_operator import DummyOperator

    # these args will get passed to each operator
    # these can be overridden on a per-task basis during operator initialization
    # notice the start_date is any date in the past to be able to run it
    # as soon as it's created
    default_args = {
        'owner': 'airflow',
        'depends_on_past': False,
        'start_date': days_ago(2),
        'email': ['[email protected]'],
        'email_on_failure': False,
        'email_on_retry': False,
        'retries': 1,
        'retry_delay': timedelta(minutes=5)
    }

    dag = DAG(
        'hello_world',
        description='example workflow',
        default_args=default_args,
        schedule_interval=timedelta(days=1)
    )


    def print_hello():
        return "Hello world!"


    # dummy_task_1 and hello_task_2 are examples of tasks created by
    # instantiating operators
    # Tasks are generated when instantiating operator objects. An object
    # instantiated from an operator is called a task. The first
    # argument task_id acts as a unique identifier for the task.
    # A task must include or inherit the arguments task_id and owner,
    # otherwise Airflow will raise an exception
    dummy_task_1 = DummyOperator(
        task_id='dummy_task',
        retries=0,
        dag=dag)

    hello_task_2 = PythonOperator(
        task_id='hello_task',
        python_callable=print_hello,
        dag=dag)

    # setting up dependencies. hello_task_2 will run after the successful
    # run of dummy_task_1
    dummy_task_1 >> hello_task_2

Once you've created this Python script, save it in dags. If you don't have the webserver and scheduler running already, start them now. Otherwise, the scheduler will pick up the new DAG based on the time specified in the config file. Refresh your home page to see it there.
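
The >> syntax used to set the dependency in the script is ordinary Python operator overloading. The sketch below shows the general idea with a hypothetical Task class; it is a simplified illustration and not Airflow's own operator code.

```python
class Task:
    """Toy task that records which tasks must run before and after it."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.upstream = []
        self.downstream = []

    def __rshift__(self, other):
        # "a >> b" means b runs after a.
        self.downstream.append(other)
        other.upstream.append(self)
        return other  # returning the right-hand side allows a >> b >> c


dummy_task_1 = Task("dummy_task")
hello_task_2 = Task("hello_task")
dummy_task_1 >> hello_task_2

print([t.task_id for t in hello_task_2.upstream])  # ['dummy_task']
```

Because the method returns the right-hand task, longer chains such as a >> b >> c read left to right in execution order.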

DAGs show up on the home page under the names defined in DAG(), so this name must be unique for each workflow we create.

Running the DAG

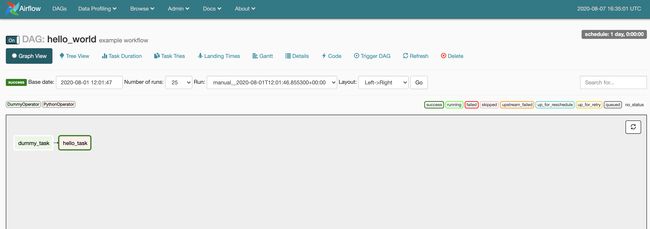

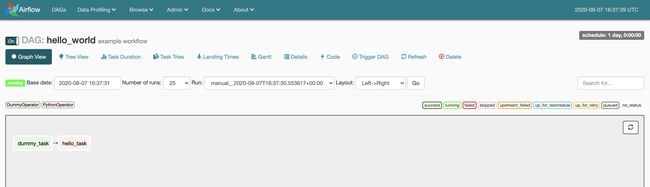

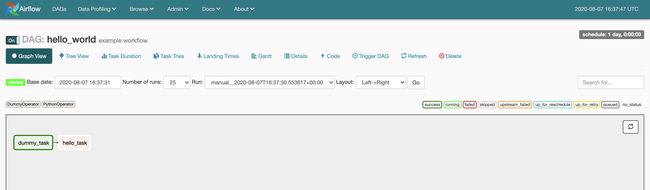

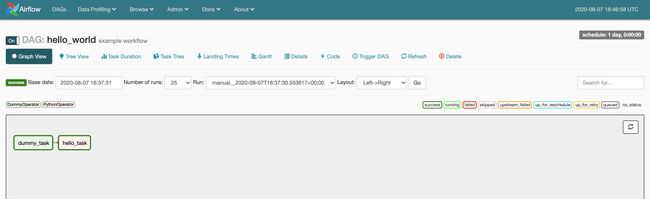

We can switch between the Graph View and Tree View.

To switch on the DAG, toggle the off/on button next to the name of the DAG. To run the DAG, click on the Trigger DAG button.

Refresh the page from time to time to see the progress of the tasks. The outline colours of the tasks have different meanings, which are described in the legend on the right-hand side.

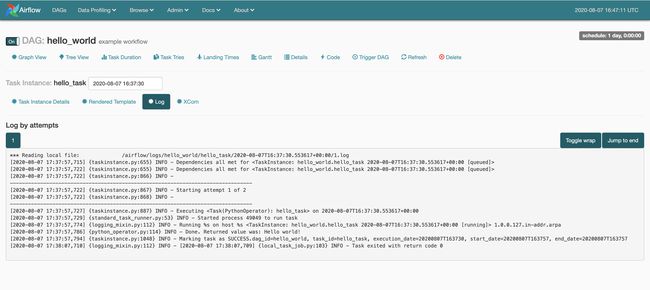

You'll see that no output is shown here. To access it, click on hello_task and go to View Log.

“Returned value was: Hello world!”

We’ve run our very first workflow!

Notes

- When updating the airflow.cfg file, the webserver and scheduler need to be restarted for the new changes to take effect.

- On deleting some DAGs (Python scripts) from the dags folder, the new number of DAGs will be picked up by the scheduler but may not be reflected in the UI.

- To properly shut down the webserver, or when you get an error on starting the webserver saying that its PID is now stale: run lsof -i tcp:<port number>, a command that LiSts Open Files on the specified port number. Note the PID that matches the one with which the Airflow webserver started and run kill <PID>. This kills the process. You can then restart your webserver with no problems.
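
Before killing anything, it can help to confirm whether a recorded PID really is stale. The sketch below is one way to check this from Python on a POSIX system (macOS or Linux); pid_is_running and read_pid are hypothetical helpers, and the location of the webserver's PID file is left to you because it depends on your setup.

```python
import os


def pid_is_running(pid):
    """Return True if a process with this PID currently exists (POSIX)."""
    try:
        os.kill(pid, 0)  # signal 0 checks for existence without killing
    except ProcessLookupError:
        return False  # no such process: the PID is stale
    except PermissionError:
        return True  # the process exists but belongs to another user
    return True


def read_pid(pid_file):
    """Read the integer PID stored in a PID file."""
    with open(pid_file) as handle:
        return int(handle.read().strip())


# The current process is certainly alive, so this prints True.
print(pid_is_running(os.getpid()))
```

If pid_is_running(read_pid(path)) returns False for the webserver's PID file, the recorded PID is stale and no process needs to be killed.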

Next Steps

- Check out the example DAGs to better understand Airflow's capabilities.

- Experiment with other operators.

Hope this gets you started!

Translated from: https://towardsdatascience.com/apache-airflow-547339588c29
