Setting Up MHA


Introduction to MHA

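MHA (Master High Availability) is a relatively mature solution for MySQL high availability. It was developed by Yoshinori Matsunobu of DeNA in Japan (who later joined Facebook) and is a well-regarded tool for failover and slave-to-master promotion in highly available MySQL environments. During a MySQL failover, MHA can complete the switch automatically within 0 to 30 seconds, and it preserves data consistency as far as possible while doing so, which is what makes it genuinely highly available.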

The software consists of two parts: MHA Manager (the management node) and MHA Node (the data node).

MHA Manager can be deployed on a dedicated machine to manage multiple master-slave clusters, or on one of the slave nodes.

How MHA works

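MHA Node runs on every MySQL server, while MHA Manager periodically probes the master of the cluster. When the master fails, Manager automatically promotes the slave with the most recent data to be the new master and then repoints all other slaves to it. The failover can be transparent to applications, provided their connections follow the new master, for example through a virtual IP moved by a master_ip_failover_script.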

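During automatic failover, MHA tries to save the binary logs from the crashed master to minimize data loss, but this is not always possible.

For example, if the master has a hardware failure or cannot be reached over SSH, MHA cannot save its binary logs; it performs the failover anyway and the most recent data is lost.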

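MHA can be combined with semi-synchronous replication. As long as at least one slave has received the latest binary log events, MHA can apply them to all the other slaves, which keeps every node consistent.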

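MHA's failover process can be summarized as follows:

(1) Save the binary log events (binlog events) from the crashed master;

(2) Identify the slave with the most recent updates;

(3) Apply the differential relay logs to the other slaves;

(4) Apply the binary log events saved from the master;

(5) Promote one slave to be the new master;

(6) Point the other slaves at the new master and resume replication.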

Overview of the MHA tools

Manager tools

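| Name | Purpose |
|---|---|
| masterha_check_ssh | Check MHA's SSH configuration |
| masterha_check_repl | Check the MySQL replication status |
| masterha_manager | Start MHA |
| masterha_check_status | Check the current MHA running status |
| masterha_master_monitor | Detect whether the master is down |
| masterha_master_switch | Control failover (automatic or manual) |
| masterha_conf_host | Add or remove server entries in the configuration |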

Node tools

These tools are normally triggered by MHA Manager's scripts and need no manual intervention. They are:

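| Name | Purpose |
|---|---|
| save_binary_logs | Save and copy the master's binary logs |
| apply_diff_relay_logs | Identify differential relay log events and apply them to the other slaves |
| filter_mysqlbinlog | Remove unnecessary ROLLBACK events (no longer used by MHA) |
| purge_relay_logs | Purge relay logs (without blocking the SQL thread) |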

MHA use cases

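MHA mainly supports a one-master, multi-slave architecture. An MHA replication cluster needs at least three database servers, one master and two slaves: one serves as the master, one as the standby (candidate) master, and one as a plain slave.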

A single MHA Manager node can manage one or more MySQL clusters and keep each of them highly available.

Deploying MHA

Environment

[root@Ripe ~]# cat /etc/redhat-release

CentOS Linux release 7.8.2003 (Core)

| Hostname | IP address | Role |
|---|---|---|
| server01 | 192.168.139.157 | master |
| server02 | 192.168.139.156 | slave |
| server03 | 192.168.139.158 | slave |

Disable the firewall and SELinux

[root@server01 ~]# systemctl stop firewalld

[root@server01 ~]# systemctl disable firewalld

[root@server01 ~]# setenforce 0

[root@server01 ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

# Repeat the same steps on the other two nodes
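The sed command above only rewrites SELINUX=enforcing. As a minimal sketch, assuming a node might already be set to permissive, the hypothetical helper below switches either mode to disabled and prints the resulting line. The config file is only read at boot, so setenforce 0 is still what affects the running system.

```shell
#!/bin/sh
# Sketch (hypothetical helper): set SELinux to "disabled" in a config file,
# whether it is currently "enforcing" or "permissive". Run as root on each node.
# The path is a parameter so the function can be tried on a copy first.
disable_selinux_config() {
  cfg="${1:-/etc/selinux/config}"
  # Only the active SELINUX= line changes; SELINUXTYPE= and comments are untouched.
  sed -i -E 's/^SELINUX=(enforcing|permissive)$/SELINUX=disabled/' "$cfg"
  # Print the resulting SELINUX= line so the change can be checked.
  grep -E '^SELINUX=' "$cfg"
}
```

Calling disable_selinux_config with no argument edits /etc/selinux/config itself.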

Set hostnames and name resolution

[root@Ripe ~]# hostnamectl set-hostname server01   # on 192.168.139.157

[root@Ripe ~]# hostnamectl set-hostname server02   # on 192.168.139.156

[root@Ripe ~]# hostnamectl set-hostname server03   # on 192.168.139.158

[root@server01 ~]# echo "192.168.139.157 server01" >> /etc/hosts

[root@server01 ~]# echo "192.168.139.156 server02" >> /etc/hosts

[root@server01 ~]# echo "192.168.139.158 server03" >> /etc/hosts

[root@server02 ~]# echo "192.168.139.157 server01" >> /etc/hosts

[root@server02 ~]# echo "192.168.139.156 server02" >> /etc/hosts

[root@server02 ~]# echo "192.168.139.158 server03" >> /etc/hosts

[root@server03 ~]# echo "192.168.139.157 server01" >> /etc/hosts

[root@server03 ~]# echo "192.168.139.156 server02" >> /etc/hosts

[root@server03 ~]# echo "192.168.139.158 server03" >> /etc/hosts
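Running the echo commands above twice leaves duplicate lines in /etc/hosts. A minimal sketch of an idempotent version, run once on each node; the helper name add_cluster_hosts is hypothetical, and the IP/hostname pairs are the ones from the host table.

```shell
#!/bin/sh
# Sketch (hypothetical helper): append the cluster's "IP hostname" pairs to a
# hosts file, skipping any line that is already present, so re-running is safe.
add_cluster_hosts() {
  hosts_file="${1:-/etc/hosts}"
  # One "IP hostname" pair per line, taken from the host table.
  printf '%s\n' \
    "192.168.139.157 server01" \
    "192.168.139.156 server02" \
    "192.168.139.158 server03" |
  while IFS= read -r entry; do
    # -x: whole-line match; -F: fixed string, so dots are not regex wildcards.
    grep -qxF "$entry" "$hosts_file" || printf '%s\n' "$entry" >> "$hosts_file"
  done
}
```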

Synchronize time

[root@server01 ~]# yum install ntpdate -y

[root@server01 ~]# ntpdate ntp1.aliyun.com

[root@server01 ~]# hwclock -w

# Same on the other two nodes

Configure MySQL master-slave replication (MySQL installed via yum)

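# To make sure the three databases start from the same state, reinitialize MySQL on all three nodes first (this deletes all existing data under /var/lib/mysql)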

[root@server01 ~]# systemctl stop mysqld

[root@server01 ~]# rm -rf /var/lib/mysql

# Configure the master (server01)

[root@server01 ~]# vim /etc/my.cnf

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

server_id = 157

log-error=/var/log/mysqld.log

pid-file=/var/run/mysqld/mysqld.pid

log-bin = mysql-bin-master

log-bin-index = mysql-bin-master.index

default_authentication_plugin=mysql_native_password # required; MySQL 8.0 otherwise defaults to caching_sha2_password

[root@server01 ~]# systemctl start mysqld

[root@server01 ~]# grep "password" /var/log/mysqld.log   # look up the temporary root password

[root@server01 ~]# mysql_secure_installation

...

...

# Configure slave01 (server02)

[root@server02 ~]# vim /etc/my.cnf

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

server_id = 156

log-error=/var/log/mysqld.log

pid-file=/var/run/mysqld/mysqld.pid

# slave01 must also enable binlog, because it may be promoted to master at any time

log-bin = mysql-bin-slave01

log-bin-index = mysql-bin-slave01.index

relay-log = relay-log-slave01

relay-log-index = relay-log-slave01.index

default_authentication_plugin=mysql_native_password

[root@server02 ~]# systemctl start mysqld

[root@server02 ~]# grep "password" /var/log/mysqld.log

[root@server02 ~]# mysql_secure_installation

......

......

# Configure slave02 (server03)

[root@server03 ~]# vim /etc/my.cnf

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

server_id = 158

log-error=/var/log/mysqld.log

pid-file=/var/run/mysqld/mysqld.pid

relay-log = relay-log-slave02

relay-log-index = relay-log-slave02.index

default_authentication_plugin=mysql_native_password

[root@server03 ~]# systemctl start mysqld

[root@server03 ~]# grep "password" /var/log/mysqld.log

[root@server03 ~]# mysql_secure_installation

...

...
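The three my.cnf files above differ only in server_id and in which binlog and relay-log names they set. The sketch below prints the fragment for one node, assuming server_id is the last octet of the node's IP (the convention this guide happens to follow: 157, 156, 158). The function name mysqld_fragment and the role labels master, slave01 and slave02 are hypothetical. It prints a [mysqld] header, so drop that line if the settings are merged into the existing [mysqld] section of the yum-installed /etc/my.cnf.

```shell
#!/bin/sh
# Sketch (hypothetical helper): print the [mysqld] settings this guide uses
# for one node. Assumption: server_id = last octet of the node's IP.
# Roles (labels used only here): master, slave01, slave02.
mysqld_fragment() {
  ip="$1"
  role="$2"
  server_id="${ip##*.}"   # strip everything up to the last dot
  cat <<EOF
[mysqld]
datadir=/var/lib/mysql
socket=/var/lib/mysql/mysql.sock
server_id = ${server_id}
log-error=/var/log/mysqld.log
pid-file=/var/run/mysqld/mysqld.pid
EOF
  case "$role" in
    master)
      printf '%s\n' 'log-bin = mysql-bin-master' 'log-bin-index = mysql-bin-master.index' ;;
    slave01)
      # slave01 also writes binlogs because it is the candidate master.
      printf '%s\n' 'log-bin = mysql-bin-slave01' 'log-bin-index = mysql-bin-slave01.index' \
        'relay-log = relay-log-slave01' 'relay-log-index = relay-log-slave01.index' ;;
    slave02)
      printf '%s\n' 'relay-log = relay-log-slave02' 'relay-log-index = relay-log-slave02.index' ;;
    *)
      echo "unknown role: $role" >&2
      return 1 ;;
  esac
  echo 'default_authentication_plugin=mysql_native_password'
}
```

For example, mysqld_fragment 192.168.139.156 slave01 prints the server02 settings listed above.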

# On the master, create the replication user copy and the root monitoring user

mysql> create user "copy"@"%" identified by "Centos@123";

mysql> grant replication slave on *.* to "copy"@"%";

mysql> flush privileges;

Create a remote root account for MHA monitoring (MHA's user needs full administrative privileges; this guide uses root)

mysql> create user "root"@"%" identified by "Centos@123";

mysql> grant all on *.* to "root"@"%" with grant option;

mysql> flush privileges;

# On the master, note the current binlog file and position

mysql> show master status;
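The master_log_file and master_log_pos values used in change master to on each slave must come from this output. A minimal sketch that extracts File and Position from the vertical (\G) form of SHOW MASTER STATUS and prints the statement; the function name change_master_sql is hypothetical, and the host, user and password are the values used in this guide.

```shell
#!/bin/sh
# Sketch (hypothetical helper): build the slaves' CHANGE MASTER TO statement
# from the text of "SHOW MASTER STATUS\G" captured on the master.
change_master_sql() {
  status_text="$1"
  # In \G output each field is "Name: value"; match on the field name.
  file=$(printf '%s\n' "$status_text" | awk '$1 == "File:" {print $2}')
  pos=$(printf '%s\n' "$status_text" | awk '$1 == "Position:" {print $2}')
  if [ -z "$file" ] || [ -z "$pos" ]; then
    echo "could not find File/Position in input" >&2
    return 1
  fi
  cat <<EOF
change master to
master_host="192.168.139.157",
master_user="copy",
master_password="Centos@123",
master_log_file="${file}",
master_log_pos=${pos};
EOF
}
```

One way to feed it, assuming the mysql client can log in as root on server01: change_master_sql "$(mysql -uroot -p -e 'show master status\G')".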

# On slave01 (server02), create the replication user copy and the root monitoring user

mysql> create user "copy"@"%" identified by "Centos@123";

mysql> grant replication slave on *.* to "copy"@"%";

mysql> flush privileges;

Create the remote root account for MHA monitoring, as on the master

mysql> create user "root"@"%" identified by "Centos@123";

mysql> grant all on *.* to "root"@"%" with grant option;

mysql> flush privileges;

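# Fill in master_log_file and master_log_pos from the File and Position columns of show master status on server01 (with log-bin = mysql-bin-master, the file name starts with mysql-bin-master.)

mysql> change master to

master_host="192.168.139.157",

master_user="copy",

master_password="Centos@123",

master_log_file="mysql-bin.000002",

master_log_pos=1632;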

mysql> start slave;

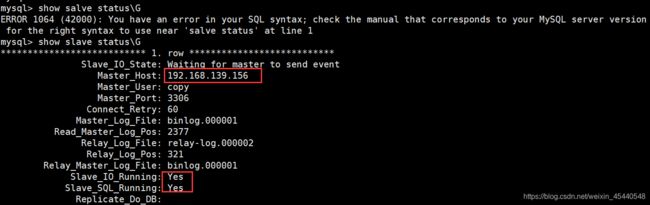

mysql> show slave status\G

# Replication works if both Slave_IO_Running and Slave_SQL_Running show Yes
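Checking for the two Yes values can be scripted. A sketch that takes the text of SHOW SLAVE STATUS\G, prints both thread states and returns success only when both are Yes; replication_ok is a hypothetical name. Slave_IO_Running and Slave_SQL_Running are the field names reported by MySQL 8.0.20, the version used in this guide.

```shell
#!/bin/sh
# Sketch (hypothetical helper): succeed only when both replication threads
# report "Yes" in the given "SHOW SLAVE STATUS\G" text.
replication_ok() {
  status_text="$1"
  # Split on ": " and anchor the field name so that e.g.
  # Slave_SQL_Running_State is not mistaken for Slave_SQL_Running.
  io=$(printf '%s\n' "$status_text" | awk -F': ' '$1 ~ /^ *Slave_IO_Running$/ {print $2}')
  sql=$(printf '%s\n' "$status_text" | awk -F': ' '$1 ~ /^ *Slave_SQL_Running$/ {print $2}')
  echo "Slave_IO_Running=${io:-missing} Slave_SQL_Running=${sql:-missing}"
  [ "$io" = "Yes" ] && [ "$sql" = "Yes" ]
}
```

For example: replication_ok "$(mysql -uroot -p -e 'show slave status\G')" && echo replication is running.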

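# On slave02 (server03), create the copy user as well (see the troubleshooting section below) and the root monitoring user

mysql> create user "copy"@"%" identified by "Centos@123";

mysql> grant replication slave on *.* to "copy"@"%";

mysql> create user "root"@"%" identified by "Centos@123";

mysql> grant all on *.* to "root"@"%" with grant option;

mysql> flush privileges;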

# Use the same File and Position values from show master status on server01 as on slave01

mysql> change master to

master_host="192.168.139.157",

master_user="copy",

master_password="Centos@123",

master_log_file="mysql-bin.000002",

master_log_pos=1632;

mysql> start slave;

mysql> show slave status\G

# Replication works if both Slave_IO_Running and Slave_SQL_Running show Yes

Configure passwordless SSH trust

ssh-keygen -t rsa

ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.139.157

ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.139.156

ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.139.158

# Run on all three nodes, sending the key to every node including itself
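The ssh-copy-id commands can be looped. A sketch, assuming root logins and the three IPs from the host table; the helper names run and distribute_ssh_key and the DRY_RUN switch are hypothetical. It generates a key pair only if none exists, copies it to every node including the local one, and then runs ssh with BatchMode=yes, which fails instead of prompting for a password if the key was not installed.

```shell
#!/bin/sh
# Sketch (hypothetical helpers): distribute this node's public key to all
# nodes and verify non-interactive login. Run once on each of the three nodes.
# With DRY_RUN=1 the commands are only printed, not executed.
NODES="192.168.139.157 192.168.139.156 192.168.139.158"

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

distribute_ssh_key() {
  # Create an RSA key pair with an empty passphrase only if none exists yet.
  [ -f /root/.ssh/id_rsa ] || run ssh-keygen -t rsa -N "" -f /root/.ssh/id_rsa
  for node in $NODES; do
    run ssh-copy-id -i /root/.ssh/id_rsa.pub "root@${node}"
    # BatchMode=yes: fail instead of prompting if key login does not work.
    run ssh -o BatchMode=yes "root@${node}" hostname
  done
}
```

Running DRY_RUN=1 distribute_ssh_key first shows exactly which commands would be executed.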

Install the Node package

# Install the EPEL repository first

[root@server01 ~]# yum install epel-release -y

[root@server01 ~]# yum install perl-DBD-MySQL ncftp -y

# Install the Perl dependencies and the Node package

[root@server01 ~]# yum install -y perl-DBD-MySQL perl-Config-Tiny perl-Log-Dispatch perl-Parallel-ForkManager

[root@server01 ~]# wget https://qiniu.wsfnk.com/mha4mysql-node-0.58-0.el7.centos.noarch.rpm

[root@server01 ~]# rpm -ivh mha4mysql-node-0.58-0.el7.centos.noarch.rpm

# Same on the other two nodes

Install the Manager package (on the manager host, server01 in this guide)

[root@server01 ~]# wget https://qiniu.wsfnk.com/mha4mysql-manager-0.58-0.el7.centos.noarch.rpm

[root@server01 ~]# rpm -ivh mha4mysql-manager-0.58-0.el7.centos.noarch.rpm

[root@server01 ~]# mkdir -p /etc/masterha

[root@server01 ~]# cd /etc/masterha/

[root@server01 masterha]# ls

[root@server01 masterha]# vim app1.cnf

[server default]

manager_workdir=/etc/masterha/

manager_log=/etc/masterha/app1.log

master_binlog_dir=/var/lib/mysql

#master_ip_failover_script=/usr/local/bin/master_ip_failover

#master_ip_online_change_script=/usr/local/bin/master_ip_online_change

user=root

password=Centos@123

ping_interval=1

remote_workdir=/tmp

repl_user=copy

repl_password=Centos@123

#report_script=/usr/local/send_report

#secondary_check_script=/usr/local/bin/masterha_secondary_check -s server03 -s server02

#shutdown_script=""

ssh_user=root

[server01]

hostname=192.168.139.157

port=3306

[server02]

hostname=192.168.139.156

port=3306

candidate_master=1

check_repl_delay=0

[server03]

hostname=192.168.139.158

port=3306

#no_master=1

Configuration file explained

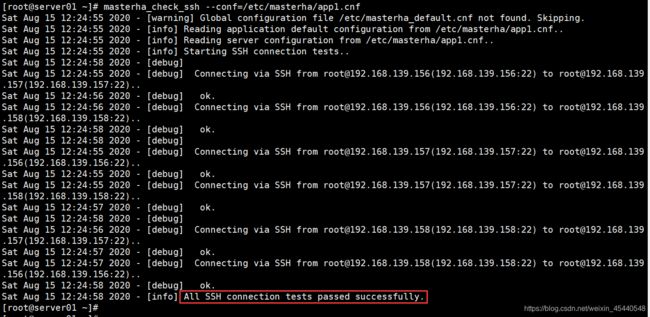

Check the SSH connectivity from MHA Manager to all MHA Nodes

[root@server01 masterha]# masterha_check_ssh --conf=/etc/masterha/app1.cnf

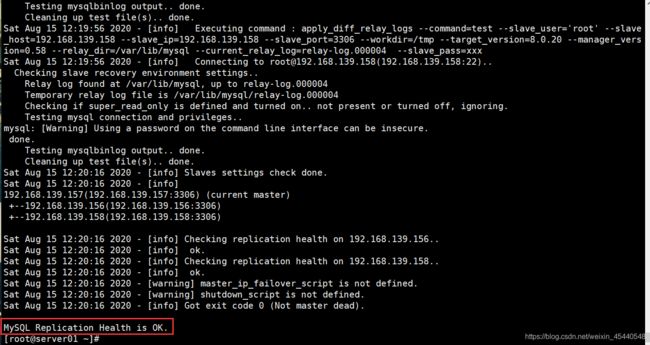

Check the state of the whole replication cluster with masterha_check_repl

[root@server01 masterha]# masterha_check_repl --conf=/etc/masterha/app1.cnf

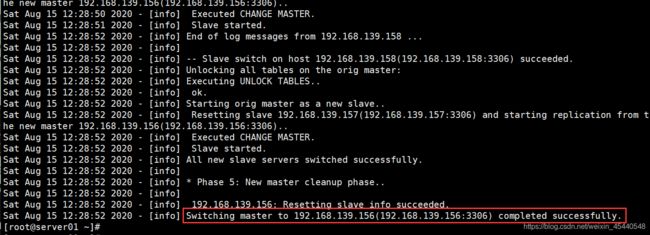

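Manual switchover: while the current master (server01) is still alive, promote server02 (192.168.139.156) to master and turn the old master into a slave of the new one

[root@server01 masterha]# masterha_master_switch --conf=/etc/masterha/app1.cnf --master_state=alive --new_master_host=192.168.139.156 --new_master_port=3306 --orig_master_is_new_slave

# Answer "yes" to every prompt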

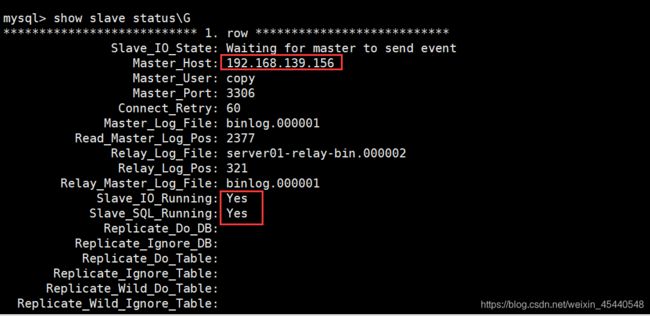

Log in to the databases and check the replication status

server02 is now the master, and server01 and server03 are its slaves. Create a test database on the master to verify replication (omitted here).

Automatic failover

[root@server01 masterha]# nohup masterha_manager --conf=/etc/masterha/app1.cnf --ignore_last_failover &

[root@server01 masterha]# ls

app1.cnf app1.log app1.master_status.health nohup.out

Simulate a failure

[root@server02 ~]# systemctl stop mysqld

Check the replication status on server03

mysql> show slave status\G

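# The master has now failed over to server01, and server03 replicates from it. server02, the stopped master, is not re-added automatically: repair it and point it at server01 with change master to before it rejoins as a slave. masterha_manager exits after completing a failover, so restart it once the cluster is repaired.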
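To confirm which node a slave now follows, read Master_Host from SHOW SLAVE STATUS\G. A sketch with the hypothetical name current_master; after the failover described above, running it against server03's output would be expected to print server01's address.

```shell
#!/bin/sh
# Sketch (hypothetical helper): print the Master_Host field from the text of
# "SHOW SLAVE STATUS\G"; fails if the field is absent (e.g. not a slave).
current_master() {
  printf '%s\n' "$1" |
    awk -F': ' '$1 ~ /^ *Master_Host$/ {print $2; found=1} END {exit !found}'
}
```

For example: current_master "$(mysql -uroot -p -e 'show slave status\G')".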

Troubleshooting

[root@Ripe masterha]# masterha_check_repl --conf=/etc/masterha/app1.cnf

Wed Aug 12 20:42:48 2020 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Wed Aug 12 20:42:48 2020 - [info] Reading application default configuration from /etc/masterha/app1.cnf..

Wed Aug 12 20:42:48 2020 - [info] Reading server configuration from /etc/masterha/app1.cnf..

Wed Aug 12 20:42:48 2020 - [info] MHA::MasterMonitor version 0.58.

Wed Aug 12 20:42:50 2020 - [info] GTID failover mode = 0

Wed Aug 12 20:42:50 2020 - [info] Dead Servers:

Wed Aug 12 20:42:50 2020 - [info] Alive Servers:

Wed Aug 12 20:42:50 2020 - [info] 192.168.139.157(192.168.139.157:3306)

Wed Aug 12 20:42:50 2020 - [info] 192.168.139.156(192.168.139.156:3306)

Wed Aug 12 20:42:50 2020 - [info] 192.168.139.158(192.168.139.158:3306)

Wed Aug 12 20:42:50 2020 - [info] Alive Slaves:

Wed Aug 12 20:42:50 2020 - [info] 192.168.139.156(192.168.139.156:3306) Version=8.0.20 (oldest major version between slaves) log-bin:enabled

Wed Aug 12 20:42:50 2020 - [info] Replicating from 192.168.139.157(192.168.139.157:3306)

Wed Aug 12 20:42:50 2020 - [info] Primary candidate for the new Master (candidate_master is set)

Wed Aug 12 20:42:50 2020 - [info] 192.168.139.158(192.168.139.158:3306) Version=8.0.20 (oldest major version between slaves) log-bin:enabled

Wed Aug 12 20:42:50 2020 - [info] Replicating from 192.168.139.157(192.168.139.157:3306)

Wed Aug 12 20:42:50 2020 - [info] Current Alive Master: 192.168.139.157(192.168.139.157:3306)

Wed Aug 12 20:42:50 2020 - [info] Checking slave configurations..

Wed Aug 12 20:42:50 2020 - [info] read_only=1 is not set on slave 192.168.139.156(192.168.139.156:3306).

Wed Aug 12 20:42:50 2020 - [warning] relay_log_purge=0 is not set on slave 192.168.139.156(192.168.139.156:3306).

Wed Aug 12 20:42:50 2020 - [info] read_only=1 is not set on slave 192.168.139.158(192.168.139.158:3306).

Wed Aug 12 20:42:50 2020 - [warning] relay_log_purge=0 is not set on slave 192.168.139.158(192.168.139.158:3306).

Wed Aug 12 20:42:50 2020 - [info] Checking replication filtering settings..

Wed Aug 12 20:42:50 2020 - [info] binlog_do_db= , binlog_ignore_db=

Wed Aug 12 20:42:50 2020 - [info] Replication filtering check ok.

Wed Aug 12 20:42:50 2020 - [error][/usr/share/perl5/vendor_perl/MHA/Server.pm, ln398] 192.168.139.156(192.168.139.156:3306): User copy does not exist or does not have REPLICATION SLAVE privilege! Other slaves can not start replication from this host.

Wed Aug 12 20:42:50 2020 - [error][/usr/share/perl5/vendor_perl/MHA/MasterMonitor.pm, ln427] Error happened on checking configurations. at /usr/share/perl5/vendor_perl/MHA/ServerManager.pm line 1403.

Wed Aug 12 20:42:50 2020 - [error][/usr/share/perl5/vendor_perl/MHA/MasterMonitor.pm, ln525] Error happened on monitoring servers.

Wed Aug 12 20:42:50 2020 - [info] Got exit code 1 (Not master dead).

MySQL Replication Health is NOT OK!

Solution:

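When building the replication setup, create the copy user on both slaves with the same grants as on the master. MHA checks this because any slave may become the new master, and the other slaves would then replicate from it as repl_user (copy).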

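(1) stop slave; # stop both slaves first

(2) Create the copy user and grant it REPLICATION SLAVE

(3) Flush privileges

(4) Start the slaves again (start slave;)

(5) Re-check the cluster status with masterha_check_repl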
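Step (5) means re-running masterha_check_repl and reading its verdict. A sketch that prints the [error] lines from the output and succeeds only when the output contains "MySQL Replication Health is OK"; the function name repl_health is hypothetical, and 2>&1 is used in the usage line on the assumption that MHA may write its messages to stderr.

```shell
#!/bin/sh
# Sketch (hypothetical helper): summarise a masterha_check_repl run read from
# stdin. Prints every [error] line; exit status 0 only if health is OK.
# Usage: masterha_check_repl --conf=/etc/masterha/app1.cnf 2>&1 | repl_health
repl_health() {
  log=$(cat)
  # Show the errors (if any) without failing when there are none.
  printf '%s\n' "$log" | grep -F '[error]' || true
  # "...is NOT OK!" does not contain "Health is OK", so it fails here.
  printf '%s\n' "$log" | grep -qF 'MySQL Replication Health is OK'
}
```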