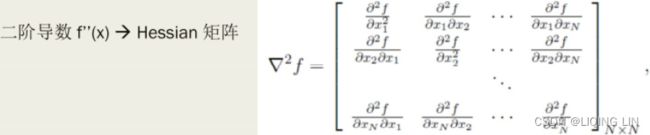

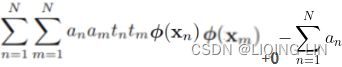

mpf11_Learning rate_Activation_Loss_Optimizer_Quadratic Program_NewtonTaylor_L-BFGS_Nesterov_Hessian

Deep learning represents the very cutting edge of Artificial Intelligence (AI). Unlike machine learning, deep learning takes a different approach in making predictions by using a neural network. An artificial neural network is modeled on the human nervous system, consisting of an input layer and an output layer, with one or more hidden layers in between. Each layer consists of artificial neurons working in parallel and passing outputs to the next layer as inputs. The word deep in deep learning comes from the notion that as data passes through more hidden layers in an artificial neural network, more complex features can be extracted.

TensorFlow is an open source, powerful machine learning and deep learning framework developed by Google. In this chapter, we will take a hands-on approach to learning TensorFlow by building a deep learning model with four hidden layers to predict the prices of a security. Deep learning models are trained by passing the entire dataset forward and backward through the network, with each iteration known as an epoch. Because the input data can be too big to be fed, training can be done in batches, and this process is known as mini-batch training.

Another popular deep learning library is Keras, which utilizes TensorFlow as the backend. We will also take a hands-on approach to learning Keras and see how easy it is to build a deep learning model to predict credit card payment defaults.

In this chapter, we will cover the following topics:

- An introduction to neural networks

- Neurons, activation functions, loss functions, and optimizers

- Different types of neural network architectures

- How to build security price prediction deep learning model using TensorFlow

- Keras, a user-friendly deep learning framework

- How to build credit card payment default prediction deep learning model using Keras

- How to display recorded events in a Keras history

A brief introduction to deep learning

The theory behind deep learning began as early as the 1940s. However, its popularity has soared/ sɔːrd /飙升 in recent years thanks in part to improvements in computing hardware technology, smarter algorithms, and the adoption of deep learning frameworks. There is much to cover beyond this book. This section serves as a quick guide to gain a working knowledge for following the examples that we will cover in later parts of this chapter.

What is deep learning ?

In https://blog.csdn.net/Linli522362242/article/details/126672904, Machine Learning for Finance, we learned how machine learning is useful for making predictions. Supervised learning uses error-minimization techniques to fit a model with training data, and can be regression based or classification based.

Deep learning takes a different approach in making predictions by using a neural network. Modeled on the human brain and the nervous system, an artificial neural network consists of a hierarchy of layers, with each layer made up of many simple units known as neurons, working in parallel and transforming the input data into abstract representations as the output data, which are fed to the next layer as input. The following diagram illustrates an artificial neural network:

Artificial neural networks consist of three types of layers. The first layer that accepts input is known as the input layer. The last layer where output is collected is known as the output layer. The layers between the input and output layers are known as hidden layers, since they are hidden from the interface of the network. There can be many combinations of hidden layers performing different activation functions. Naturally, more complex computations lead to a rise in demand for more powerful machines, such as the GPUs required to compute them.

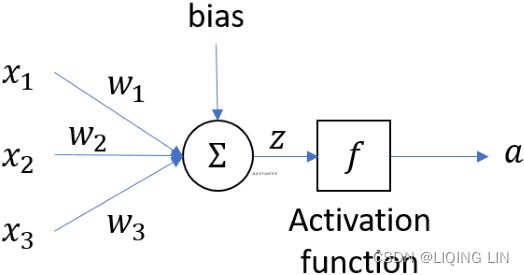

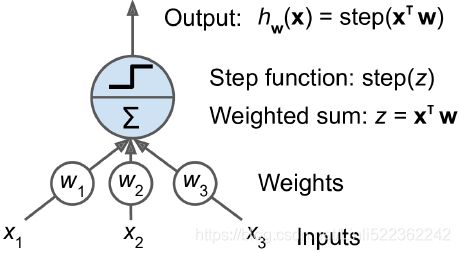

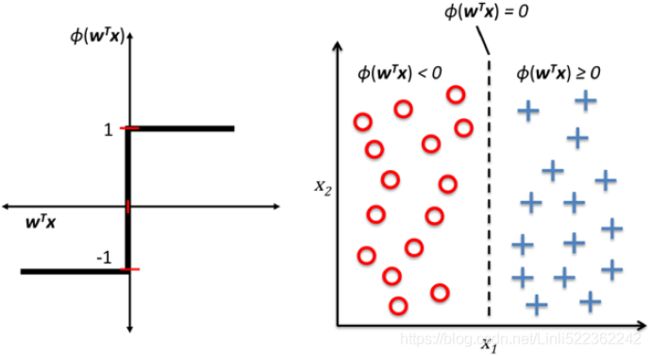

The artificial neuron

An artificial neuron receives one or more input and are multiplied by values known as weights, summed up and passed to an activation function. The final values computed by the activation function makes up the neuron's output. A bias value may be included in the summation term to help fit the data. The following diagram illustrates an artificial neuron:

![]() https://blog.csdn.net/Linli522362242/article/details/96480059

https://blog.csdn.net/Linli522362242/article/details/96480059

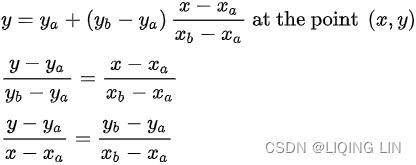

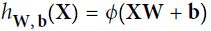

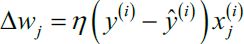

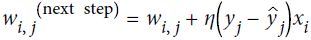

The summation term can be written as a linear equation such that ![]() . The neuron uses a nonlinear activation function

. The neuron uses a nonlinear activation function ![]() to transform the input to become the output

to transform the input to become the output ![]() , and can be written as

, and can be written as ![]() .

.

Activation function

(Linear,Sigmoid,Tanh,Hard tanh,ReLu,Leaky ReLU,PRelu,ELU,SELU,Softplus,Softsign)

An activation function is part of an artificial neuron that transforms the sum of weighted inputs into another value for the next layer. Usually, the range of this output value is -1 or 0 to 1. An artificial neuron is said to be activated when it passes a non-zero value to another neuron. There are several types of activation functions, mainly:

def sigmoid(z):

return 1/(1+np.exp(-z))

def relu(z):

return np.maximum( 0,z )

def softplus(z):

return np.log( np.exp(z) +1.0 )

# Numerical Differentiation

# https://blog.csdn.net/Linli522362242/article/details/106290394

def derivative(f, z, eps=0.000001):

# 1/2 * ( f(z+eps)-f(z)/eps + ( f(z)-f(z-eps) )/eps )

# 1/2 * ( f(z+eps)/eps + f(z-eps)/eps )

return ( f(z+eps) - f(z-eps) )/(2*eps)import matplotlib.pyplot as plt

import numpy as np

z = np.linspace(-5, 5, 200)

plt.figure( figsize=(12,4) )

plt.subplot(121)

plt.plot( z, softplus(z), 'c:', linewidth=2, label='Softplus')

plt.plot( z, sigmoid(z), "y--", linewidth=2, label="Sigmoid" )

plt.plot( z, relu(z), "k-.", linewidth=2, label="ReLU" ) #ReLU (z) = max (0, z)

plt.plot( z, np.tanh(z), "b-", linewidth=2, label="Tanh" )

plt.plot( z, np.sign(z), "r-", linewidth=1, label="Step" )

plt.legend( loc="lower right", fontsize=14 )

plt.title("Activation function", fontsize=14 )

plt.axis([-5, 5, -1.5, 1.5])

# plt.axis('off')

plt.grid(visible=False)

plt.subplot(122)

plt.plot(0, 0, "ro", markersize=5)

#plt.plot(0, 0, "rx", markersize=10)

plt.plot( z, derivative(softplus, z), 'c:', linewidth=2, label='Softplus')

plt.plot(z, derivative(sigmoid, z), "y--", linewidth=2, label="sigmoid")

plt.plot(z, derivative( relu, z ), "k-.", linewidth=2, label="ReLU")

plt.plot(z, derivative(np.tanh, z), "b-", linewidth=2, label="Tanh")

plt.plot(z, derivative(np.sign, z), "r-", linewidth=1, label="Step")

plt.legend( loc="upper left", fontsize=14 )

plt.title("Derivatives", fontsize=14)

plt.axis([-5,5, -0.2, 1.5])

# plt.axis('off')

plt.grid(visible=False)

plt.show()![]()

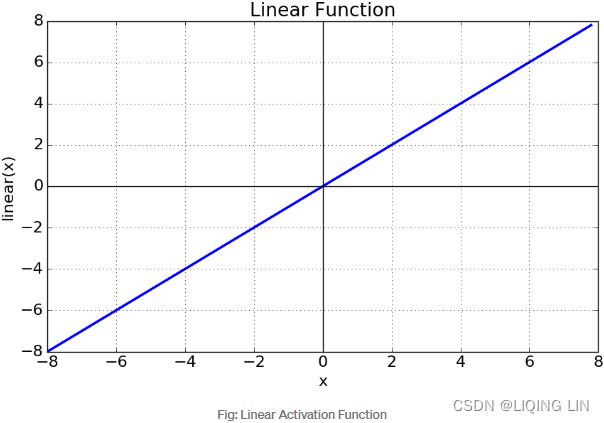

Linear : ###############

Sigmoid ( logistic sigmoid ) :

###############

https://towardsdatascience.com/derivative-of-the-sigmoid-function-536880cf918e![]() OR

OR![]()

where

where ![]()

![]()

- 是一个平滑函数,并且具有连续性和可微性

- x轴在-3到3之间的梯度非常高,这意味着在[-3,3]这个范围内,x的少量变化也将导致y值的大幅度变化。因此,函数本质上试图将y值推向极值。 当我们尝试将值分类到特定的类时,使用Sigmoid函数非常理想。但是,一旦x值不在[-3,3]内,梯度就变得很小,接近于零,而网络就得不到真正的学习

- 优点:非线性;输出范围有限,适合作为输出层。 用于分类器时,Sigmoid函数及其组合通常效果更好

- 缺点:在两边太平滑,学习率太低;值总是正的;输出不是以0为中心(中心为0.5) 由于梯度消失问题,有时要避免使用sigmoid和tanh函数.

Tanh : ###############

![]()

![]()

The tanh functionis an alternative to the sigmoid function that is often found to converge faster in practice. The primary difference between tanh and sigmoid is that tanh output ranges from −1 to 1 while the sigmoid ranges from 0 to 1.

- has a mean of 0 and behaves slightly better than the logistic function

- 解决了sigmoid的大多数缺点(解决了所有值符号相同的问题),仍然有两边学习率太低的缺点. 其他属性都与sigmoid函数相同

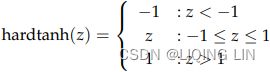

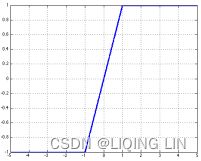

Hard tanh : ###############

The hard tanh function is sometimes preferred over the tanh function since it is computationally cheaper. It does however saturate for magnitudes of z greater than 1.

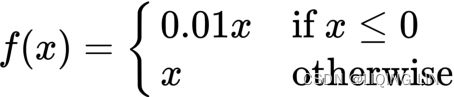

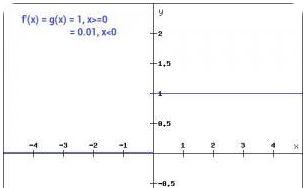

ReLu ( Rectified Linear Unit ): ###############

![]()

OR

OR ![]()

The ReLU (Rectified Linear Unit) function is a popular choice of activation since it does not saturate even for larger values of z and has found much success in computer vision applications:

- 首先,ReLU函数是非线性的,这意味着我们可以很容易地反向传播误差,并激活多个神经元

- 优点:不会同时激活所有的神经元,这意味着,在一段时间内,只有少量的神经元被激活 (如果输入值是负的,ReLU函数会转换为0,而神经元不被激活。),神经网络的这种稀疏性使其变得高效且易于计算. ReLU函数只能在隐藏层中使用

- 缺点:x<0时,梯度是零(也存在着梯度为零的问题), 这使得该区域的神经元死亡。随着训练的进行,可能会出现神经元死亡,权重无法更新的情况。也就是说,ReLU神经元在训练中不可逆地死亡了

- 一点经验:你可以从ReLU函数开始,如果ReLU函数没有提供最优结果,再尝试其他激活

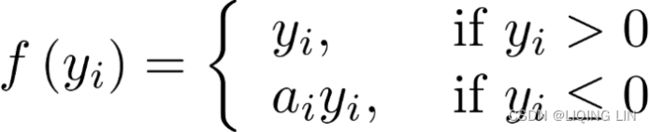

Leaky ReLU:###############

![]() OR typically,

OR typically, ![]()

where 0 < ![]() < 1

< 1

Traditional ReLU units by design do not propagate any error for non-positive z – the leaky ReLU modifies this such that a small error is allowed to propagate backwards even when z is negative:

- 解决了RELU死神经元的问题 : 替换Relu左边水平线的主要优点是去除零梯度。在这种情况下,上图左边的梯度是非零的,所以该区域的神经元不会成为死神经元。

- The hyperparameter

defines how much the function “leaks”泄漏: it is the slope of the function for

defines how much the function “leaks”泄漏: it is the slope of the function for  and is typically set to 0.01. This small slope ensures that leaky ReLUs never die; they can go into a long coma怠惰/昏迷, but they have a chance to eventually wake up. A 2015 paper compared several variants of the ReLU activation function, and one of its conclusions was that the leaky variants always outperformed the strict ReLU activation function. In fact, setting

and is typically set to 0.01. This small slope ensures that leaky ReLUs never die; they can go into a long coma怠惰/昏迷, but they have a chance to eventually wake up. A 2015 paper compared several variants of the ReLU activation function, and one of its conclusions was that the leaky variants always outperformed the strict ReLU activation function. In fact, setting  (a huge leak) seemed to result in better performance than

(a huge leak) seemed to result in better performance than  (a small leak).

(a small leak).

PRelu ###############

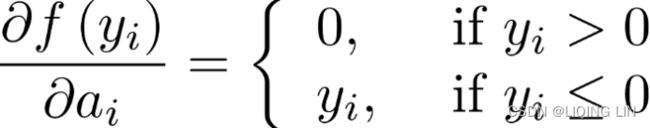

For backpropagation, its gradient is

- if

,

,  becomes ReLU

becomes ReLU - if

,

,  becomes leaky ReLU

becomes leaky ReLU - if

is a learnable parameter,

is a learnable parameter,  becomes PReLU

becomes PReLU -

如果神经网络中出现死神经元,那么PReLU函数就是最好的选择。

- PReLU was reported to strongly outperform ReLU on large image datasets, but on smaller datasets it runs the risk of overfitting the training set.

- 在PReLU函数中,

也是可训练的函数。神经网络还会学习

也是可训练的函数。神经网络还会学习 的价值,以获得更快更好的收敛。

的价值,以获得更快更好的收敛。 is authorized to be learned during training (instead of being a hyperparameter, it becomes a parameter that can be modified by backpropagation like any other parameter)。 当Leaky ReLU函数仍然无法解决死神经元问题并且相关信息没有成功传递到下一层时,可以考虑使用PReLU函数。

is authorized to be learned during training (instead of being a hyperparameter, it becomes a parameter that can be modified by backpropagation like any other parameter)。 当Leaky ReLU函数仍然无法解决死神经元问题并且相关信息没有成功传递到下一层时,可以考虑使用PReLU函数。

ELU( Exponential Linear Unit ) ###############

![]()

![]()

-

Exponential Linear Unit (ELU) that outperformed all the ReLU variants in the authors’ experiments: training time was reduced, and the neural network performed better on the test set.

The ELU activation function looks a lot like the ReLU function, with a few major differences:-

It takes on negative values when z < 0, which allows the unit to have an average output closer to 0 and helps alleviate the vanishing gradients problem. The hyperparameter α defines the value that the ELU function approaches when z is a large negative number 超参数 α 定义了当 z 是一个大的负数时 ELU 函数接近的值. It is usually set to 1### elu(z,1) ###, but you can tweak it like any other hyperparameter.

-

It has a nonzero gradient for z < 0, which avoids the dead neurons problem.

-

If α is equal to 1 then the function is smooth everywhere, including around z = 0, which helps speed up Gradient Descent since it does not bounce弹回 as much to the left and right of z = 0.

-

缺点:The main drawback of the ELU activation function is that it is slower to compute than the ReLU function and its variants (due to the use of the exponential function). Its faster convergence rate during training compensates for that slow computation, but still, at test time an ELU network will be slower than a ReLU network.

-

SELU (Scaled ELU):###############

Scaled ELU (SELU) activation function is a scaled variant of the ELU activation function. if you build a neural network composed exclusively of a stack of dense layers仅由一叠dense layers组成, and if all hidden layers use the SELU activation function, then the network will self-normalize: the output of each layer will tend to preserve a mean of 0 and standard deviation of 1 during training, which solves the vanishing/exploding gradients problem. As a result, the SELU activation function often significantly outperforms other activation functions for such neural nets (especially deep ones). There are, however, a few conditions for self-normalization to happen (see the paper for the mathematical justification):

- The input features must be standardized (mean 0 and standard deviation 1).

- Every hidden layer’s weights must be initialized with LeCun normal initialization. In Keras, this means setting kernel_initializer="lecun_normal".

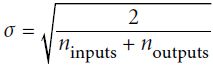

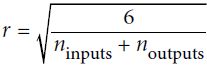

( Xavier(Glorot) initialization (when using the logistic activation function)

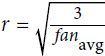

Normal distribution with mean 0 and standard deviation

OR

OR

where and

and  are the number of input and output connections for the layer whose weights are being initialized (also called fan-in and fan-out;

are the number of input and output connections for the layer whose weights are being initialized (also called fan-in and fan-out; ).

).

Or a uniform distribution between ‐r and +r, with

OR

OR

LeCun initialization is equivalent to Glorot initialization when

- The network’s architecture must be sequential.

Unfortunately, if you try to use SELU in nonsequential architectures, such as recurrent networks (see https://blog.csdn.net/Linli522362242/article/details/114941730) or networks with skip connections (i.e., connections that skip layers, such as in Wide & Deep nets), self-normalization will not be guaranteed, so SELU will not necessarily outperform other activation functions. - The paper only guarantees self-normalization if all layers are dense, but some researchers have noted that the SELU activation function can improve performance in convolutional neural nets as well (see https://blog.csdn.net/Linli522362242/article/details/108302266).

Softplus:###############

![]() OR softplus(x) = log(exp(x) + 1)

OR softplus(x) = log(exp(x) + 1)![]()

![]()

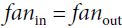

Soft sign:###############

The soft sign function is another nonlinearity which can be considered an alternative to tanh since it too does not saturate as easily as hard tanh clipped functions:![]() and

and ![]()

where sgn is the signnum function which returns ±1 depending on the sign of z

#############################![]() For example, a rectified linear unit (ReLU) function is written as:

For example, a rectified linear unit (ReLU) function is written as: OR

OR ![]()

The ReLU activates a node with the same input value only when the input is above zero. Researchers prefer to use ReLU as it trains better than sigmoid activation functions![]() . We will be using ReLU in later parts of this chapter.

. We will be using ReLU in later parts of this chapter.

In another example, the leaky ReLU is written as:  OR

OR![]()

The leaky ReLU addresses the issue of a dead ReLU(解决了RELU死神经元的问题) when by having a small negative slope around 0.01 when ![]() .

.![]()

Loss functions

(MAE, MSE, Huber, Logistic,Cross entropy, Focal, Hinge, Exponential,Softmax, Quantile)

The loss function computes the error between the predicted value of a model and the actual value. The smaller the error value, the better the model is in prediction. Some loss functions used in regression-based models are:

Mean Absolute Error (MAE) loss:###############

![]() OR

OR![]()

![]() are the predicted and

are the predicted and ![]() actual value.

actual value.

The mean squared error might penalize large errors too much and cause your model to be imprecise.

Mean Squared Error (MSE) loss:###############

![]() OR

OR ![]()

The mean absolute error would not penalize outliers as much, but training might take a while to converge, and the trained model might not be very precise.

The term ![]() which in the following equation that is just added for our convenience, which will make it easier to derive the gradient

which in the following equation that is just added for our convenience, which will make it easier to derive the gradient![]()

![]()

Note: multiply the gradient vector by alpha ![]() to determine the size of the downhill step(learning rate

to determine the size of the downhill step(learning rate![]() ) :

) : ![]()

right ![]() is for next step (downhill step), left

is for next step (downhill step), left ![]() is currently theta value; Once the left

is currently theta value; Once the left ![]() == right

== right ![]() , h(x) ==y means the gradient

, h(x) ==y means the gradient ![]() equal to 0.

equal to 0.![]()

Huber loss :###############

https://www.cnblogs.com/nowgood/p/Huber-Loss.html![]()

![]() OR

OR![]()

The Huber loss is quadratic ![]() when the error is smaller than a threshold

when the error is smaller than a threshold ![]() (typically 1) but linear

(typically 1) but linear![]() when the error is larger than

when the error is larger than ![]() . The linear part makes it less sensitive to outliers than the Mean Squared Error, and the quadratic part allows it to converge faster and be more precise than the Mean Absolute Error) instead of the good old MSE.

. The linear part makes it less sensitive to outliers than the Mean Squared Error, and the quadratic part allows it to converge faster and be more precise than the Mean Absolute Error) instead of the good old MSE.

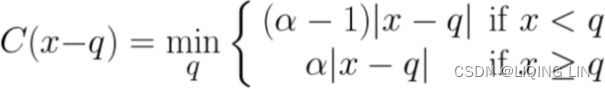

Quantile loss :###############

Given a prediction ![]() and outcome

and outcome ![]() , the mean regression loss for a quantile

, the mean regression loss for a quantile ![]() is

is![]()

For a set of predictions, the loss will be its average.

https://towardsdatascience.com/regression-prediction-intervals-with-xgboost-428e0a018b https://www.wikiwand.com/en/Quantile_regression

https://www.wikiwand.com/en/Quantile_regression

In the regression loss equation above, as ![]() has a value between 0 and 1, the first term will be positive and dominate when under-predicting,

has a value between 0 and 1, the first term will be positive and dominate when under-predicting, ![]() , and the second term will dominate when over-predicting,

, and the second term will dominate when over-predicting, ![]() .

.

For ![]() equal to 0.5, under-prediction and over-prediction will be penalized by the same factor, and the median is obtained.

equal to 0.5, under-prediction and over-prediction will be penalized by the same factor, and the median is obtained.

The larger the value of ![]() , the more under-predictions are penalized compared to over-predictions.

, the more under-predictions are penalized compared to over-predictions.

For ![]() equal to 0.75, under-predictions will be penalized by a factor of 0.75, and over-predictions by a factor of 0.25. The model will then try to avoid under-predictions approximately three times as hard as over-predictions, and the 0.75 quantile will be obtained.

equal to 0.75, under-predictions will be penalized by a factor of 0.75, and over-predictions by a factor of 0.25. The model will then try to avoid under-predictions approximately three times as hard as over-predictions, and the 0.75 quantile will be obtained.

def quantile_loss(q, y, y_p):

e = y-y_p

return tf.keras.backend.mean( tf.keras.backend.maximum( q*e,

(q-1)*e

)

)https://www.evergreeninnovations.co/blog-quantile-loss-function-for-machine-learning/

As the name suggests, the quantile regression loss function is applied to predict quantiles. A quantile is the value below which a fraction of observations in a group falls. For example, a prediction for quantile 0.9 should over-predict 90% of the times.

https://www.wikiwand.com/en/Quantile_regression

Some loss functions used in classification-based models are:

Logistic loss:###############

Minimize:![]()

https://blog.csdn.net/Linli522362242/article/details/126672904

Minimize MSE: ![]() OR

OR ![]() and

and ![]() is 0 or 1,

is 0 or 1, ![]() ==>

==>![]() OR

OR ![]()

==> ![]() is the index of current sample

is the index of current sample ![]()

For Minimize MSE:

if ![]() =1 or

=1 or ![]() , Maximize

, Maximize ![]() for closing to 1, and

for closing to 1, and ![]() =1 ==>Maximize

=1 ==>Maximize![]() for closing to 1

for closing to 1

if ![]() =0 or

=0 or ![]() , Minimize

, Minimize ![]() for closing to 0, then

for closing to 0, then ![]() =1 ==>Maximize

=1 ==>Maximize![]() for closing to 1

for closing to 1

==>Convert to Maximize the likelihood![]() , (y=0, 1)

, (y=0, 1)

==>Use logarithm to convert multiplication to addition:![]()

==>Minimize log-likelihood:![]()

OR ![]()

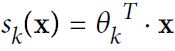

- Equation 4-19. Softmax score for class k

Note that each class has its own dedicated parameter vector

Equation 4-20. Softmax function

K is the number of classes

s(x) is a vector containing the scores of each class for the instance x. is the estimated probability

is the estimated probability that the instance x belongs to class k given the scores of each class for that instance.

that the instance x belongs to class k given the scores of each class for that instance.

Cross entropy cost function :###############

Minimize ![]() Equation 4-22

Equation 4-22![]() is equal to 1 if the target class for the

is equal to 1 if the target class for the ![]() th instance is

th instance is ![]() ; otherwise, it is equal to 0.

; otherwise, it is equal to 0.

Notice that when there are just two classes (K = 2), this cost function is equivalent to the Logistic Regression’s cost function (log loss; see Equation 4-17 ![]()

let’s take a look at training. The objective is to have a model that estimates a high probability for the target class (and consequently a low probability for the other classes). Minimizing the cost function shown in Equation 4-22, called the cross entropy交叉熵, should lead to this objective because it penalizes the model when it estimates a low probability(to have high cost function) for a target class. Cross entropy is frequently used to measure how well a set of estimated class probabilities match the target classes. lower x higher cost(

lower x higher cost(![]() )

)

Let’s say, Foreground (Let’s call it class 1) is correctly classified with p=0.95

CE(FG) = -ln (0.95) =0.05

And background (Let’s call it class 0) is correctly classified with p=0.05

CE(BG)=-ln (1- 0.05) =0.05

The problem is, with the class imbalanced dataset, when these small losses are sum over the entire images can overwhelm the overall loss (total loss). And thus, it leads to degenerated models.

weighted(![]() ) Cross entroy cost function:

) Cross entroy cost function:

但是,当我们处理大量负样本![]() 、少量正样本

、少量正样本![]() 的情况时(e g 50000:20),即使我们把负样本的权重设置的很低,但是因为负样本的数量太多,积少成多,负样本的损失函数也会主导损失函数

的情况时(e g 50000:20),即使我们把负样本的权重设置的很低,但是因为负样本的数量太多,积少成多,负样本的损失函数也会主导损失函数

Let’s say, Foreground (Let’s call it class 1) is correctly classified with p=0.95

CE(FG) = -0.25*ln (0.95) =0.0128

And background (Let’s call it class 0) correctly classified with p=0.05

CE(BG)=-(1-0.25) * ln (1- 0.05) =0.038

While it does a good job differentiating positive & negative classes correctly but still does not differentiate between easy/hard examples.

And that’s where Focal loss (extension to cross-entropy) comes to rescue.

Focal loss:###############

Focal loss is just an extension of the cross-entropy loss function that would down-weight easy examples and focus training on hard negatives.focal loss 是一种处理样本分类不均衡的损失函数,它侧重的点是根据样本分辨的难易程度给样本对应的损失添加权重,即给容易区分的样本添加较小的权重![]() , 给难分辨的样本添加较大的权重,

, 给难分辨的样本添加较大的权重, ![]() 那么,损失函数的可以写为:

那么,损失函数的可以写为:![]()

因为 ![]() ,那么上述的损失函数中

,那么上述的损失函数中![]() 主导损失函数,也就是将损失函数的重点集中于难分辨的样本上,对应损失函数的名称:focal loss。

主导损失函数,也就是将损失函数的重点集中于难分辨的样本上,对应损失函数的名称:focal loss。![]()

通常将分类置信度接近1或接近0的样本称为易分辨样本( ![]() 越大 or

越大 or ![]() 越大,说明分类的置信度越高,代表样本越易分),其余的称之为难分辨样本。换句话说,也就是我们有把握确认属性的样本称为易分辨样本,没有把握确认属性的样本称之为难分辨样本。

越大,说明分类的置信度越高,代表样本越易分),其余的称之为难分辨样本。换句话说,也就是我们有把握确认属性的样本称为易分辨样本,没有把握确认属性的样本称之为难分辨样本。

比如在一张图片中,我们获得是人的置信度为0.9,那么我们很有把握它是人,所以此时认定该样本为易分辨样本。同样,获得是人的置信度为0.6,那么我们没有把握它是人,所以称该样本为难分辨样本。

As you can see, the blue line(![]() ) in the below diagram, when

) in the below diagram, when ![]() is very close to 1 (when class_label y_k=1) or 0 (when class_label y_k = 0), easily classified examples with large

is very close to 1 (when class_label y_k=1) or 0 (when class_label y_k = 0), easily classified examples with large ![]() can incur a loss with non-trivial重大 magnitude.可以从图中发现,那些即使置信度很高的样本在标准交叉熵里也会存在重大损失。而且在实际中,置信度很高的负样本

can incur a loss with non-trivial重大 magnitude.可以从图中发现,那些即使置信度很高的样本在标准交叉熵里也会存在重大损失。而且在实际中,置信度很高的负样本![]() 往往占总样本的绝大部分,如果将这部分损失去除或者减弱,那么损失函数的效率会更高。

往往占总样本的绝大部分,如果将这部分损失去除或者减弱,那么损失函数的效率会更高。

We shall note the following properties of the focal loss:

- When an example is misclassified and

is small(

is small( → 0), the modulating factor

→ 0), the modulating factor is near 1 and the loss is almost unaffected.

is near 1 and the loss is almost unaffected. - As

→ 1, the factor goes to 0 and the loss for well-classified examples is down weighed

→ 1, the factor goes to 0 and the loss for well-classified examples is down weighed .

. - The focusing parameter γ>0 smoothly adjusts the rate at which easy examples are down-weighted.

As is increased, the effect of modulating factor is likewise increased. (After a lot of experiments and trials, researchers have found γ = 2 to work best)

Intuitively, the modulating factor

when γ =0, FL is equivalent to CE reduces the loss contribution from easy examples(higher confidence in the classification) and extends the range in which an example receives the low loss.

reduces the loss contribution from easy examples(higher confidence in the classification) and extends the range in which an example receives the low loss.

https://www.analyticsvidhya.com/blog/2020/08/a-beginners-guide-to-focal-loss-in-object-detection/#:~:text=In%20simple%20words%2C%20Focal%20Loss,to%20down%2Dweight%20easy%20examples%20(

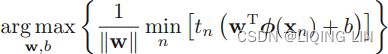

Hinge loss:###############

对线性SVM分类器来说,方法之一是使用梯度下降,使从原始问题导出的cost function最小化。线性SVM分类器cost function成本函数:![]()

成本函数中的第一项会推动模型得到一个较小的权重向量w,从而使间隔更大.

(![]()

==>At first,

==>At first,![]() (to find the closest of data points to decision boundary

(to find the closest of data points to decision boundary![]() ),Then maximize

),Then maximize![]() for maximizing the margin( to choose the decision boundary or to find the support vectors

for maximizing the margin( to choose the decision boundary or to find the support vectors![]() OR

OR![]() that determine the location boundary) ==> (maximize ==>

that determine the location boundary) ==> (maximize ==>![]() is equivalent to minimizing

is equivalent to minimizing ![]() )==>

)==> ![]()

)

第二项则计算全部的间隔违例。如果没有一个示例位于街道之上,并且都在街道正确的一边,那么这个实例的间隔违例为0;如不然,则该实例的违例大小与其到街道正确一边的距离成正比。所以将这个项最小化,能够保证模型使间隔违例尽可能小,也尽可能少。

函数被称为hinge损失函数![]() (如下图所示)。其中,t为目标值 class label(-1或+1),y是分类器输出的预测值 ,并不直接是类标签。其含义为,

(如下图所示)。其中,t为目标值 class label(-1或+1),y是分类器输出的预测值 ,并不直接是类标签。其含义为,

当t和y的符号相同时(表示y预测正确)并且|y|≥1时,hinge loss为0 ( since max(0, 1-ty<0) );当t和y的符号相反时,hinge loss随着y的增大线性增大(since max(0, 1-ty>0)==> 1-ty)。

Hinge loss用于最大间隔(maximum-margin)分类,其中最有代表性的就是支持向量机SVM。

Hinge函数的标准形式:![]()

(与上面统一的形式:![]() )

)

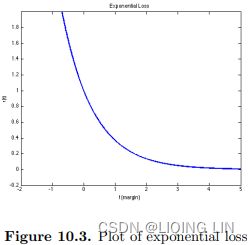

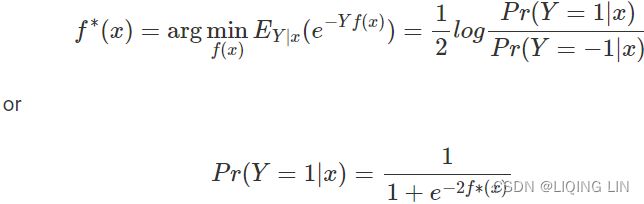

Exponential loss:###############

![]() where y is the expected output(actual class label, either 1 or -1) and f(x) is the model output(prediction) given the feature x.

where y is the expected output(actual class label, either 1 or -1) and f(x) is the model output(prediction) given the feature x.

The exponential loss is convex and grows exponentially for negative values which makes it more sensitive to outliers. The exponential loss is used in the AdaBoost algorithm. The principal attraction of exponential loss in the context of additive modeling is computational. The additive expansion produced by AdaBoost is estimating onehalf of the log-odds of P(Y = 1|x). This justifies using its sign as the classification rule.https://yuan-du.com/post/2020-12-13-loss-functions/decision-theory/

Optimizers

(Gradient Descent, SGD, Momentum, NAG, AdaGrad, RMSprop, Adam, AdaMax, Nadam, Adadelta,)

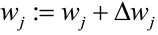

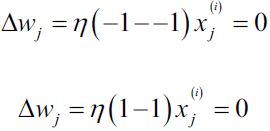

Optimizers help to tweak the model weights(θ ← θ – ![]() , for example

, for example ![]() ) optimally in minimizing the loss function. There are several types of optimizers that you may come across in deep learning:

) optimally in minimizing the loss function. There are several types of optimizers that you may come across in deep learning:

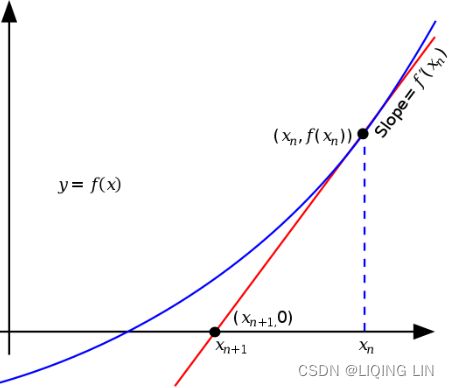

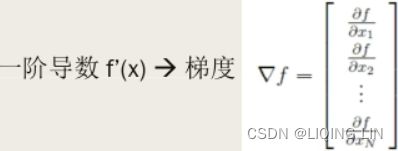

Gradient Descent : ###############

![]()

These two facts have a great consequence: Gradient Descent is guaranteed to approach arbitrarily close the global minimum![]() The general idea of Gradient Descent is to tweak parameters iteratively in order to minimize a cost function.

The general idea of Gradient Descent is to tweak parameters iteratively in order to minimize a cost function.

It does not care about what the earlier gradients were. If the local gradient is tiny, it goes very slowly.![]() Concretely, you start by filling θ with random values (this is called random initialization), and then you improve it gradually, taking one baby step at a time, each step attempting to decrease the cost function (e.g., the MSE), until the algorithm converges to a minimum(see Figure 4-3)

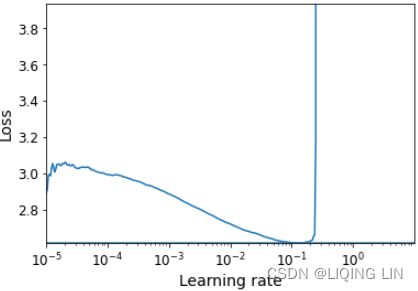

Concretely, you start by filling θ with random values (this is called random initialization), and then you improve it gradually, taking one baby step at a time, each step attempting to decrease the cost function (e.g., the MSE), until the algorithm converges to a minimum(see Figure 4-3)![]() An important parameter in Gradient Descent is the size of the steps, determined by the learning rate hyperparameter

An important parameter in Gradient Descent is the size of the steps, determined by the learning rate hyperparameter![]() . If the learning rate is too small, then the algorithm will have to go through many iterations to converge, which will take a long time (see Figure 4-4).

. If the learning rate is too small, then the algorithm will have to go through many iterations to converge, which will take a long time (see Figure 4-4).![]() On the other hand, if the learning rate is too high, you might jump across the valley and end up on the other side, possibly even higher up than you were before. This might make the algorithm diverge, with larger and larger values, failing to find a good solution (see Figure 4-5).

On the other hand, if the learning rate is too high, you might jump across the valley and end up on the other side, possibly even higher up than you were before. This might make the algorithm diverge, with larger and larger values, failing to find a good solution (see Figure 4-5).![]() Finally, not all cost functions look like nice regular bowls. There may be holes洞, ridges山脊, plateaus 高原, and all sorts of irregular terrains地形, making convergence to the minimum very difficult. Figure 4-6 shows the two main challenges with Gradient Descent: if the random initialization starts the algorithm on the left, then it will converge to a local minimum, which is not as good as the global minimum. If it starts on the right, then it will take a very long time to cross the plateau, and if you stop too early you will never reach the global minimum.

Finally, not all cost functions look like nice regular bowls. There may be holes洞, ridges山脊, plateaus 高原, and all sorts of irregular terrains地形, making convergence to the minimum very difficult. Figure 4-6 shows the two main challenges with Gradient Descent: if the random initialization starts the algorithm on the left, then it will converge to a local minimum, which is not as good as the global minimum. If it starts on the right, then it will take a very long time to cross the plateau, and if you stop too early you will never reach the global minimum.![]() In fact, the cost function has the shape of a bowl, but it can be an elongated被延长 bowl if the features have very different scales. Figure 4-7 shows Gradient Descent on a training set where features 1 and 2 have the same scale (on the left), and on a training set where feature 1 has much smaller values than feature 2 (on the right).Since feature 1 is smaller, it takes a larger change in θ1 to affect the cost function, which is why the bowl is elongated along the θ1 axis.

In fact, the cost function has the shape of a bowl, but it can be an elongated被延长 bowl if the features have very different scales. Figure 4-7 shows Gradient Descent on a training set where features 1 and 2 have the same scale (on the left), and on a training set where feature 1 has much smaller values than feature 2 (on the right).Since feature 1 is smaller, it takes a larger change in θ1 to affect the cost function, which is why the bowl is elongated along the θ1 axis.

As you can see, on the left the Gradient Descent algorithm goes straight toward the minimum, thereby reaching it quickly, whereas on the right it first goes in a direction almost orthogonal to the direction of the global minimum, and it ends with a long

march down an almost flat valley. It will eventually reach the minimum, but it will take a long time.

#########################

WARNING

When using Gradient Descent, you should ensure that all features have a similar scale (e.g., using Scikit-Learn’s StandardScaler class), or else it will take much longer to converge.

#########################![]()

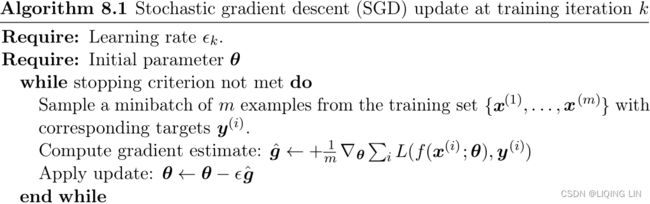

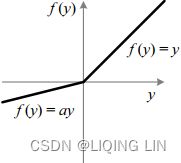

SGD (Stochastic Gradient Descent) :###############

https://blog.csdn.net/Linli522362242/article/details/104005906

The main problem with Batch Gradient Descent is the fact that it uses the whole training set to compute the gradients at every step, which makes it very slow when the training set is large. At the opposite extreme, Stochastic Gradient Descent just picks a random instance in the training set at every step and computes the gradients based only on that single instance. Obviously this makes the algorithm much faster since it has very little data to manipulate at every iteration. It also makes it possible to train on huge training sets, since only one instance needs to be in memory at each iteration (SGD can be implemented as an out-of-core algorithm.)

On the other hand, due to its stochastic (i.e., random) nature, this algorithm is much less regular than Batch Gradient Descent: instead of gently decreasing平缓的下降 until it reaches the minimum, the cost function will bounce跳 up and down, decreasing only on average大体上. Over time it will end up very close to the minimum, but once it gets there it will continue to bounce around, never settling down (see Figure 4-9). So once the algorithm stops, ![]() the final parameter values are good, but not optimal.

the final parameter values are good, but not optimal.![]()

![]() When the cost function is very irregular (as in the left figure), this can actually help the algorithm jump out of local minima, so Stochastic Gradient Descent has a better chance of finding the global minimum than Batch Gradient Descent does.

When the cost function is very irregular (as in the left figure), this can actually help the algorithm jump out of local minima, so Stochastic Gradient Descent has a better chance of finding the global minimum than Batch Gradient Descent does.

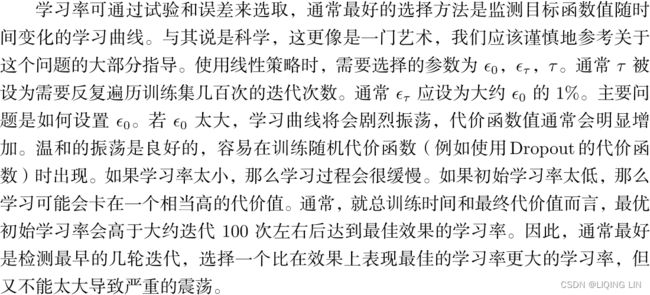

Therefore randomness is good to escape from local optima, but bad because it means that the algorithm can never settle at the minimum. One solution to this dilemma/dɪˈlemə/窘境is to gradually reduce the learning rate. The steps start out large (which helps make quick progress and escape local minima), then get smaller and smaller, allowing the algorithm to settle at the global minimum. This process is called simulated annealing/əˈniːlɪŋ/模拟退火, because it resembles类似于 the process of annealing in metallurgy冶金 where molten熔融 metal is slowly cooled down. The function that determines the learning rate at each iteration is called the learning schedule. If the learning rate is reduced too quickly, you may get stuck in a local minimum, or even end up frozen halfway to the minimum. If the learning rate is reduced too slowly, you may jump around the minimum for a long time and end up with a suboptimal solution if you halt training too early.

#################################

Note

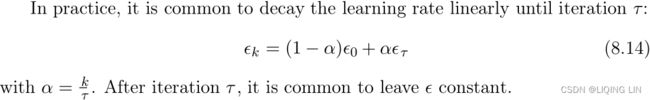

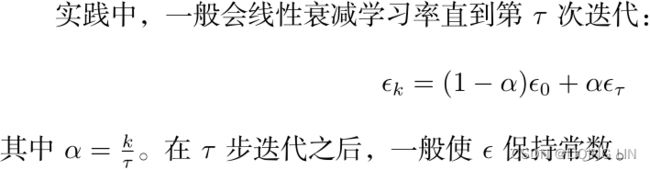

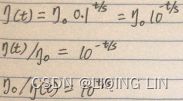

In stochastic gradient descent implementations, the fixed learning rate ![]() is often replaced by an adaptive learning rate that decreases over time, for example,

is often replaced by an adaptive learning rate that decreases over time, for example,![]() where

where ![]() and

and ![]() are constants. Note that stochastic gradient descent does not reach the global minimum but an area very close to it. By using an adaptive learning rate, we can achieve further annealing磨炼 to a better global minimum

are constants. Note that stochastic gradient descent does not reach the global minimum but an area very close to it. By using an adaptive learning rate, we can achieve further annealing磨炼 to a better global minimum

#################################

theta_path_sgd = []

m=len(X_b)

np.random.seed(42)

n_epochs = 50

t0,t1= 5,50

def learning_schedule(t):

return t0/(t+t1)

theta = np.random.randn(2,1)

for epoch in range(n_epochs): # n_epochs=50 replaces n_iterations=1000

for i in range(m): # m = len(X_b)

if epoch==0 and i<20:

y_predict = X_new_b.dot(theta)

style="b-" if i>0 else "r--"

plt.plot(X_new,y_predict, style)######

random_index = np.random.randint(m) ##### Stochastic

xi = X_b[random_index:random_index+1]

yi = y[random_index:random_index+1]

gradients = 2*xi.T.dot( xi.dot(theta) - yi ) ##### Gradient

eta=learning_schedule(epoch*m + i) ############## e.g. 5/( (epoch*m+i)+50)

theta = theta-eta * gradients ###### Descent

theta_path_sgd.append(theta)

plt.plot(X, y, "b.")

plt.xlabel("$x_1$", fontsize=18)

plt.ylabel("$y$", rotation=0, fontsize=18)

plt.title("Figure 4-10. Stochastic Gradient Descent first 10 steps")

plt.axis([0,2, 0,15])

plt.show()https://blog.csdn.net/Linli522362242/article/details/104005906![]() 04_TrainingModels_Normal Equation(正态方程,正规方程) Derivation_Gradient Descent_Polynomial Regression_LIQING LIN的博客-CSDN博客

04_TrainingModels_Normal Equation(正态方程,正规方程) Derivation_Gradient Descent_Polynomial Regression_LIQING LIN的博客-CSDN博客

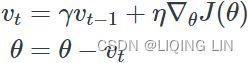

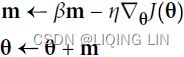

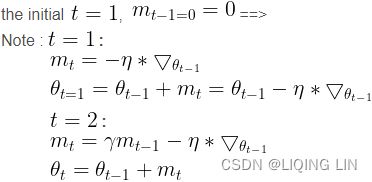

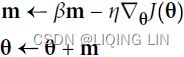

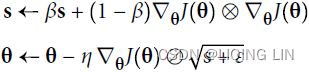

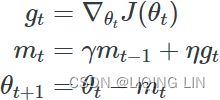

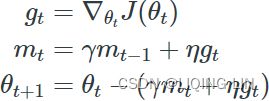

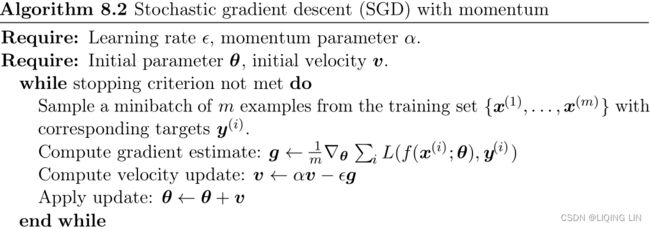

Momentum:###############

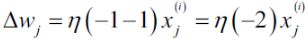

![]() # it subtracts the local gradient * η from the momentum vector m, m is negative

# it subtracts the local gradient * η from the momentum vector m, m is negative![]() # it updates the weights by adding this momentum vector m, note m is negative OR

# it updates the weights by adding this momentum vector m, note m is negative OR

#* 下降初期时,使用上一次参数更新,下降方向一致,乘上较大的β能够进行很好的加速

#* 下降中后期时,在局部最小值来回震荡的时候,![]() -->0,β使得更新幅度增大,跳出陷阱

-->0,β使得更新幅度增大,跳出陷阱

#* 在梯度改变方向的时候(梯度上升,梯度方向与βm相反),能够减少更新总而言之,momentum项能够在相关方向加速SGD,抑制振荡,从而加快收敛

The momentum term (βm) increases for dimensions whose gradients point in the same directions and reduces updates for dimensions whose gradients change directions. As a result, we gain faster convergence and reduced oscillation.

Momentum optimization cares a great deal about what previous gradients were: at each iteration, it subtracts the local gradient from the momentum vector m (multiplied by the learning rate η), and it updates the weights by adding this momentum vector m (see Equation 11-4). In other words, the gradient is used for acceleration, not for speed. To simulate some sort of friction摩擦 mechanism and prevent the momentum m from growing too large, the algorithm introduces a new hyperparameter β, called the momentum, which must be set between 0 (high friction) and 1 (no friction). A typical momentum value is 0.9.

You can easily verify that if the gradient remains constant, the terminal velocity m (i.e., the maximum size of the weight updates) is equal to that gradient multiplied by the learning rate η multiplied by 1/(1–β) (ignoring the sign).### ![]() VS

VS ![]() and 0<= β <1

and 0<= β <1

It is thus helpful to think of the momentum hyperparameter β in terms of

![]()

![]() For example, if β = 0.9, then the terminal velocity is equal to 10 times the gradient times the learning rate, so momentum optimization ends up going 10 times faster than Gradient Descent! This allows momentum optimization to escape from plateaus停滞时期 much faster than Gradient Descent. We saw in Chapter 4 that when the inputs have very different scales, the cost function will look like an elongated bowl (see Figure 4-7). Gradient Descent goes down the steep slope陡坡 quite fast, but then it takes a very long time to go down the valley深谷. In contrast, momentum optimization will roll down the valley faster and faster until it reaches the bottom (the optimum). In deep neural networks that don’t use Batch Normalization, the upper layers will often end up having inputs with very different scales, so using momentum optimization helps a lot. It can also help roll past local optima.

For example, if β = 0.9, then the terminal velocity is equal to 10 times the gradient times the learning rate, so momentum optimization ends up going 10 times faster than Gradient Descent! This allows momentum optimization to escape from plateaus停滞时期 much faster than Gradient Descent. We saw in Chapter 4 that when the inputs have very different scales, the cost function will look like an elongated bowl (see Figure 4-7). Gradient Descent goes down the steep slope陡坡 quite fast, but then it takes a very long time to go down the valley深谷. In contrast, momentum optimization will roll down the valley faster and faster until it reaches the bottom (the optimum). In deep neural networks that don’t use Batch Normalization, the upper layers will often end up having inputs with very different scales, so using momentum optimization helps a lot. It can also help roll past local optima.

Due to the momentum, the optimizer may overshoot超调 a bit, then come back, overshoot again, and oscillate[ˈɑsɪleɪt]使振荡 like this many times before stabilizing at the minimum. This is one of the reasons it’s good to have a bit of friction in the system(β): it gets rid of these oscillations and thus speeds up convergence. OR

OR

class MomentumGradientDescent(MiniBatchGradientDescent):

def __init__(self, gamma=0.9, **kwargs):

self.gamma = gamma # gammar also called momentum, 当gamma=0时,相当于小批量随机梯度下降

super(MomentumGradientDescent, self).__init__(**kwargs)

def fit(self, X, y):

X = np.c_[np.ones(len(X)), X]

n_samples, n_features = X.shape

self.theta = np.ones(n_features)

self.velocity = np.zeros_like(self.theta) ################

self.loss_ = [0]

self.i = 0

while self.i < self.n_iter:#n_iter: epochs

self.i += 1

if self.shuffle:

X, y = self._shuffle(X, y)

errors = []

for j in range(0, n_samples, self.batch_size):

mini_X, mini_y = X[j: j + self.batch_size], y[j: j + self.batch_size]

error = mini_X.dot(self.theta) - mini_y

errors.append(error.dot(error))

mini_gradient = 1/ self.batch_size * mini_X.T.dot(error)# without*2 since cost/loss

self.velocity = self.velocity * self.gamma + self.eta * mini_gradient

self.theta -= self.velocity

#loss*1/2 for convenient computing gradient

loss = 1 / (2 * self.batch_size) * np.mean(errors)

delta_loss = loss - self.loss_[-1] #当结果改善的变动低于某个阈值时,程序提前终止

self.loss_.append(loss)

if np.abs(delta_loss) < self.tolerance:

break

return selfIn keras:

tf.keras.optimizers.SGD(

learning_rate=0.01, momentum=0.0, nesterov=False, name="SGD", **kwargs

)* The update rule for θ with gradient g when momentum(β) is 0.0:

![]()

* The update rule when momentum is larger than 0.0(β>0):

the initial ![]() ,

, ![]() ==>

==> ![]()

Note : ![]() :

:

![]() 有方向 -gradent 是向下(negative)==>

有方向 -gradent 是向下(negative)==> ![]()

![]() # note usually initialize

# note usually initialize ![]()

![]() :

:

![]()

![]()

https://github.com/tensorflow/tensorflow/blob/v1.15.0/tensorflow/python/keras/optimizer_v2/gradient_descent.py#L29-L164

if `nesterov` is False, gradient is evaluated at theta(t).

# v(t+1) = momentum * v(t) - learning_rate * gradient

# theta(t+1) = theta(t) + v(t+1)

velocity = momentum * velocity - learning_rate * g

w = w + velocityOR keras

# https://github.com/tensorflow/tensorflow/blob/v2.2.0/tensorflow/python/keras/optimizers.py

#

class SGD(Optimizer):

"""Stochastic gradient descent optimizer.

Includes support for momentum,

learning rate decay, and Nesterov momentum.

Arguments:

lr: float >= 0. Learning rate.

momentum: float >= 0. Parameter that accelerates SGD in the relevant

direction and dampens oscillations.

decay: float >= 0. Learning rate decay over each update.

nesterov: boolean. Whether to apply Nesterov momentum.

"""

def __init__(self, lr=0.01, momentum=0., decay=0., nesterov=False, **kwargs):

super(SGD, self).__init__(**kwargs)

with K.name_scope(self.__class__.__name__):

self.iterations = K.variable(0, dtype='int64', name='iterations')

self.lr = K.variable(lr, name='lr')

self.momentum = K.variable(momentum, name='momentum')

self.decay = K.variable(decay, name='decay')

self.initial_decay = decay

self.nesterov = nesterov

def _create_all_weights(self, params):

shapes = [K.int_shape(p) for p in params]

moments = [K.zeros(shape) for shape in shapes] ##########################

self.weights = [self.iterations] + moments

return moments

def get_updates(self, loss, params):

grads = self.get_gradients(loss, params)

self.updates = [state_ops.assign_add(self.iterations, 1)]

lr = self.lr

if self.initial_decay > 0:

lr = lr * ( # pylint: disable=g-no-augmented-assignment

1. /

(1. +

self.decay * math_ops.cast(self.iterations, K.dtype(self.decay))))

# momentum

moments = self._create_all_weights(params)

for p, g, m in zip(params, grads, moments):

v = self.momentum * m - lr * g # velocity # m=0 ==> v = - lr * g #####

self.updates.append(state_ops.assign(m, v))

if self.nesterov:

new_p = p + self.momentum * v - lr * g

else:

new_p = p + v ############################ SGD with momentum ########

# Apply constraints.

if getattr(p, 'constraint', None) is not None:

new_p = p.constraint(new_p)

self.updates.append(state_ops.assign(p, new_p))

return self.updates When nesterov=True, this rule becomes: # velocity m < 0

if `nesterov` is True, gradient is evaluated at theta(t) + momentum * v(t),

and the variables always store theta + m v instead of theta

# do the momentum stage first for gradient descent part

velocity = momentum * velocity - learning_rate * g # for gradient descent part

# update the parameters ==> then do the gradient descent part ==> update weight

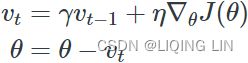

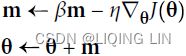

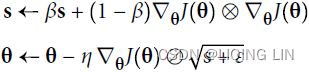

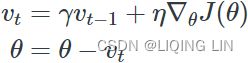

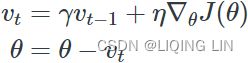

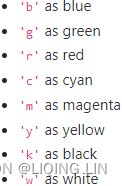

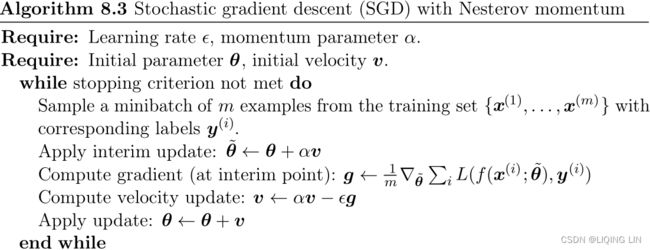

w = w + momentum * velocity - learning_rate * g # g:evaluated at theta(t)+momentum*v(t)Nesterov Accelerated Gradient( NAG ) :###############

![]()

![]()

the initial ![]() ,

, ![]() ==>

==> ![]()

Note : ![]() :

:

![]() 无方向 gradent >0 ==>

无方向 gradent >0 ==> ![]()

![]() # note usually initialize

# note usually initialize ![]()

#OR random_uniform(shape=[n_features,1],minval=-1.0, maxval=1.0,)

![]() :

:

![]()

![]()

#######Nesterov Accelerated Gradient and Momentum![]() http://proceedings.mlr.press/v28/sutskever13.pdf

http://proceedings.mlr.press/v28/sutskever13.pdf

the initial ![]() ,

, ![]() ==>

==> ![]()

Note : ![]() :

:

![]() 有方向 -gradent 是向下(negative)==>

有方向 -gradent 是向下(negative)==> ![]()

![]() # note usually initialize

# note usually initialize ![]()

#OR random_uniform(shape=[n_features,1],minval=-1.0, maxval=1.0,)

![]() :

:

![]()

![]()

![]()

![]()

class NesterovAccelerateGradient(MomentumGradientDescent):

def __init__(self, **kwargs):

super(NesterovAccelerateGradient, self).__init__(**kwargs)

def fit(self, X, y):

X = np.c_[np.ones(len(X)), X]

n_samples, n_features = X.shape #n_features: n_features+1

#OR random_uniform(shape=[n_features,1],minval=-1.0, maxval=1.0,)

self.theta = np.ones(n_features)

self.velocity = np.zeros_like(self.theta)#################

self.loss_ = [0]

self.i = 0

while self.i < self.n_iter:

self.i += 1

if self.shuffle:

X, y = self._shuffle(X, y)

errors = []

for j in range(0, n_samples, self.batch_size):

mini_X, mini_y = X[j: j + self.batch_size], y[j: j + self.batch_size]

# gamma also called momentum '-' since we use -self.velocity

error = mini_X.dot(self.theta - self.gamma * self.velocity) - mini_y############

errors.append(error.dot(error))

mini_gradient = 1 / self.batch_size * mini_X.T.dot(error)

self.velocity = self.velocity * self.gamma + self.eta * mini_gradient

self.theta -= self.velocity

loss = 1 / (2 * self.batch_size) * np.mean(errors)

delta_loss = loss - self.loss_[-1]

self.loss_.append(loss)

if np.abs(delta_loss) < self.tolerance:

break

return selfin keras:

if `nesterov` is True, gradient is evaluated at theta(t) + momentum * v(t),

and the variables always store theta + m v instead of theta

# do the momentum stage first for gradient descent part

velocity = momentum * velocity - learning_rate * g # for gradient descent part

# update the parameters ==> then do the gradient descent part ==> update weight

w = w + momentum * velocity - learning_rate * g#######

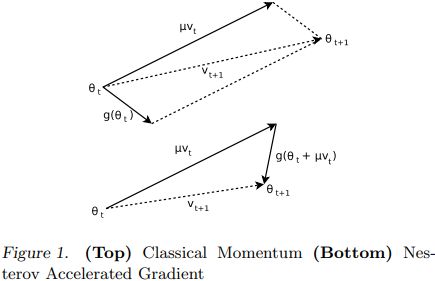

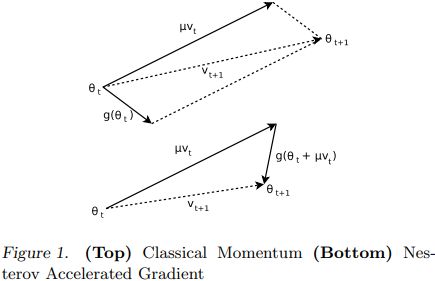

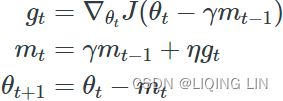

Nesterov momentum optimization measures the gradient of the cost function not at the local position θ(![]() ) but slightly ahead in the direction of the momentum, at θ + βm (

) but slightly ahead in the direction of the momentum, at θ + βm (![]() ).

).

This small tweak works because in general the momentum vector m will be pointing in the right direction (i.e., toward the optimum), so it will be slightly more accurate to use the gradient measured a bit farther in that direction![]() rather than using the gradient at the original position

rather than using the gradient at the original position![]() , as you can see in Figure 11-6 (where

, as you can see in Figure 11-6 (where ![]() represents the gradient of the cost function measured at the starting point θ, and

represents the gradient of the cost function measured at the starting point θ, and ![]() represents the gradient at the point located at θ + βm,

represents the gradient at the point located at θ + βm,

![]()

![]()

Figure 11-6. Regular versus Nesterov Momentum optimization(![]() ==β); the former applies the gradients

==β); the former applies the gradients![]() computed before the momentum step(βm), while the latter applies the gradients

computed before the momentum step(βm), while the latter applies the gradients![]() ######

######![]() computed after.

computed after.

While Momentum first computes the current gradient![]() (blue vector) and then takes a big jump(red vector,βm) in the direction of the updated accumulated gradient,

(blue vector) and then takes a big jump(red vector,βm) in the direction of the updated accumulated gradient,

NAG first makes a big jump in the direction of the previous accumulated gradient (red vector,βm), measures the gradient (![]() green vector) and then makes a correction

green vector) and then makes a correction![]() , which results in the complete NAG update. This anticipatory update prevents us from going too fast and results in increased responsiveness, which has significantly increased the performance of RNNs on a number of tasks.

, which results in the complete NAG update. This anticipatory update prevents us from going too fast and results in increased responsiveness, which has significantly increased the performance of RNNs on a number of tasks. <==

<==

As you can see, the Nesterov update ends up slightly closer to the optimum. After a while, these small improvements add up and NAG ends up being significantly faster than regular Momentum optimization. Moreover, note that when the momentum βm pushes the weights across a valley, ![]() continues to push further across the valley, while

continues to push further across the valley, while ![]() pushes back toward the bottom of the valley(更正方向). This helps reduce oscillations and thus converges faster.

pushes back toward the bottom of the valley(更正方向). This helps reduce oscillations and thus converges faster.

https://blog.csdn.net/Linli522362242/article/details/106982127

keras Nesterov Accelerated Gradient( NAG )

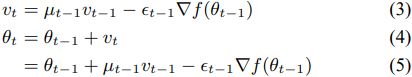

################### ###################

where ![]() are the model parameters,

are the model parameters, ![]() the velocity,

the velocity, ![]() ∈ [0, 1] the momentum (decay) coefficient and

∈ [0, 1] the momentum (decay) coefficient and ![]() > 0 the learning rate at iteration

> 0 the learning rate at iteration ![]() , f(θ) is the objective function and

, f(θ) is the objective function and ![]() is a shorthand notation for the gradient

is a shorthand notation for the gradient ![]() . These equations have a form similar to standard momentum updates:

. These equations have a form similar to standard momentum updates:  note

note ![]()

OR

OR

and differ only in the evaluation point of the gradient at each iteration. This important difference, thought to counterbalance too high velocities ![]() by “peeking ahead” actual objective values

by “peeking ahead” actual objective values![]() (更正方向) in the candidate search direction, results in significantly improved RNN performance on a number of tasks.

(更正方向) in the candidate search direction, results in significantly improved RNN performance on a number of tasks.

In this section, we derive a new formulation of Nesterov momentum differing from (3) and (5) only in the linear combination coefficients of the velocity and gradient contributions at each iteration, and we offer an alternative interpretation of the method. The key departure from (1) and (2) resides in committing to the “peekedahead” parameters ![]() and backtracking by the same amount before each update. Our new parameters

and backtracking by the same amount before each update. Our new parameters ![]() updates become:

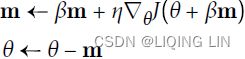

updates become:![]()

![]() (6)==>keras fomula

(6)==>keras fomula![]()

![]()

![]()

![]()

![]()

![]() ==>keras

==>keras ![]()

![]()

![]() (7)

(7)

Assuming a zero initial velocity ![]() = 0 and velocity at convergence of optimization

= 0 and velocity at convergence of optimization ![]() ≃ 0 (since

≃ 0 (since ![]() ), the parameters

), the parameters ![]() are a completely equivalent replacement of θ.

are a completely equivalent replacement of θ.

Note that equation (7) is identical to regular/classical momentum (5) with different linear combination coefficients. More precisely, for an equivalent velocity update (6), the velocity contribution to the new parameters ![]() is reduced relatively to the gradient contribution

is reduced relatively to the gradient contribution ![]() . This allows storing past velocities for a longer time with a higher

. This allows storing past velocities for a longer time with a higher ![]() , while actually using those velocities more conservatively during the updates. We suspect this mechanism is a crucial ingredient for good empirical performance. While the “peeking ahead” point of view suggests that a similar strategy could be adapted for regular gradient descent (misleadingly, because it would amount to a reduced learning rate

, while actually using those velocities more conservatively during the updates. We suspect this mechanism is a crucial ingredient for good empirical performance. While the “peeking ahead” point of view suggests that a similar strategy could be adapted for regular gradient descent (misleadingly, because it would amount to a reduced learning rate ![]() ), our derivation shows why it is important to choose search directions aligned with the current velocity to yield substantial improvement. The general case is also simpler to implement.

), our derivation shows why it is important to choose search directions aligned with the current velocity to yield substantial improvement. The general case is also simpler to implement.

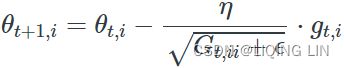

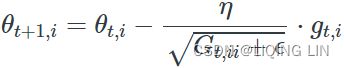

AdaGrad (adaptive gradient) : ###############

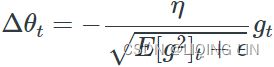

Consider the elongated bowl problem again: Gradient Descent starts by quickly going down the steepest slope, which does not point straight toward the global optimum, then it very slowly goes down to the bottom of the valley. It would be nice if the algorithm could correct its direction earlier to point a bit more toward the global optimum. The AdaGrad algorithm achieves this correction by scaling down the gradient vector along the steepest dimensions (see Equation 11-6).![]()

OR ![]() # accumulates s 约束项regularizer: 1/

# accumulates s 约束项regularizer: 1/![]()

![]()

###########

# ![]() to denote the gradient at time step

to denote the gradient at time step ![]()

# ![]() is then the partial derivative of the objective function w.r.t. to the parameter

is then the partial derivative of the objective function w.r.t. to the parameter ![]() at time step

at time step ![]() :

: ![]()

# the general learning rate η at each time step ![]() for every parameter

for every parameter ![]() based on the past gradients that have been computed for

based on the past gradients that have been computed for![]() :

:

![]() is a diagonal matrix where each diagonal element

is a diagonal matrix where each diagonal element ![]() is the sum of the squares of the gradients w.r.t.

is the sum of the squares of the gradients w.r.t. ![]() up to time step

up to time step ![]()

# As ![]() contains the sum of the squares of the past gradients w.r.t. to all parameters

contains the sum of the squares of the past gradients w.r.t. to all parameters ![]() along its diagonal, we can now vectorize our implementation by performing a matrix-vector product⊙between

along its diagonal, we can now vectorize our implementation by performing a matrix-vector product⊙between ![]() and

and ![]() :

:

###########

Adagrad is an algorithm for gradient-based optimization that does just this: It adapts the learning rate to the parameters, performing smaller updates (i.e. low learning rates) for parameters associated with frequently occurring features(bigger (accumulates the square of the gradients)![]() ==>

==>![]() is scaled down by a factor of

is scaled down by a factor of ![]() ), and larger updates (i.e. high learning rates) for parameters associated with infrequent features(lower

), and larger updates (i.e. high learning rates) for parameters associated with infrequent features(lower ![]() ). For this reason, it is well-suited for dealing with sparse data. Dean et al. have found that Adagrad greatly improved the robustness of SGD and used it for training large-scale neural nets at Google, which -- among other things -- learned to recognize cats in Youtube videos.

). For this reason, it is well-suited for dealing with sparse data. Dean et al. have found that Adagrad greatly improved the robustness of SGD and used it for training large-scale neural nets at Google, which -- among other things -- learned to recognize cats in Youtube videos.

特点:

it eliminates the need to manually tune the learning rate![]() ( It helps point the resulting updates more directly toward the global optimum). Most implementations use a default value of 0.01 and leave it at that.

( It helps point the resulting updates more directly toward the global optimum). Most implementations use a default value of 0.01 and leave it at that.

前期accumulates较小的时候,regularizer较大,能够放大梯度

后期accumulates较大的时候,regularizer较小,能够约束梯度

适合处理稀疏梯度

缺点:

由公式可以看出,仍依赖于人工设置一个全局学习率

learning rate设置过大的话,会使regularizer过于敏感,对梯度的调节太大

中后期,分母上梯度平方的累加将会越来越大,使![]() -->0,使gradent-->0,使得训练提前结束

-->0,使gradent-->0,使得训练提前结束

Adagrad's main weakness is its accumulation of the squared gradients in the denominator: Since every added term![]() is positive, the accumulated sum

is positive, the accumulated sum ![]() keeps growing during training. This in turn causes the learning rate

keeps growing during training. This in turn causes the learning rate![]() to shrink and eventually become infinitesimally small, at which point the algorithm is no longer able to acquire additional knowledge.

to shrink and eventually become infinitesimally small, at which point the algorithm is no longer able to acquire additional knowledge.

The first step accumulates the square of the gradients into the vector s (recall that the ⊗ symbol represents the element-wise multiplication![]() ). This vectorized form is equivalent to computing

). This vectorized form is equivalent to computing ![]() for each element

for each element ![]() of the vector s; in other words, each

of the vector s; in other words, each ![]() accumulates the squares of the partial derivative of the cost function with regard to parameter

accumulates the squares of the partial derivative of the cost function with regard to parameter ![]() . If the cost function is steep along the

. If the cost function is steep along the ![]() dimension, then

dimension, then![]() will get larger and larger at each iteration.

will get larger and larger at each iteration.

The second step is almost identical to Gradient Descent, but with one big difference: the gradient vector is scaled down by a factor of s + ε (the ⊘ symbol represents the element-wise division, and ε is a smoothing term to avoid division by zero, typically set to ![]() ). This vectorized form is equivalent to simultaneously computing

). This vectorized form is equivalent to simultaneously computing![]() for all parameters

for all parameters ![]() .

.

In short, this algorithm decays the learning rate, but it does so faster for steep陡峭 dimensions than for dimensions with gentler slopes平缓坡度. This is called an adaptive learning rate. It helps point the resulting updates more directly toward the global optimum (see Figure 11-7). One additional benefit is that it requires much less tuning of the learning rate hyperparameter η.

Figure 11-7. AdaGrad versus Gradient Descent: the former can correct its direction earlier to point to the optimum![]()

AdaGrad frequently performs well for simple quadratic problems, but it often stops too early when training neural networks. ![]() The learning rate gets scaled down so much that the algorithm ends up stopping entirely before reaching the global optimum. So even though Keras has an Adagrad optimizer, you should not use it to train deep neural networks (it may be efficient for simpler tasks such as Linear Regression, though). Still, understanding AdaGrad is helpful to grasp the other adaptive learning rate optimizers.

The learning rate gets scaled down so much that the algorithm ends up stopping entirely before reaching the global optimum. So even though Keras has an Adagrad optimizer, you should not use it to train deep neural networks (it may be efficient for simpler tasks such as Linear Regression, though). Still, understanding AdaGrad is helpful to grasp the other adaptive learning rate optimizers.![]()

OR ![]() # accumulates s 约束项regularizer: 1/

# accumulates s 约束项regularizer: 1/![]()

![]()

class AdaptiveGradientDescent(MiniBatchGradientDescent):

def __init__(self, epsilon=1e-6, **kwargs):

self.epsilon = epsilon

super(AdaptiveGradientDescent, self).__init__(**kwargs)

def fit(self, X, y):

X = np.c_[np.ones(len(X)), X]

n_samples, n_features = X.shape

self.theta = np.ones(n_features)

self.loss_ = [0]

gradient_sum = np.zeros(n_features)##############s

self.i = 0

while self.i < self.n_iter:

self.i += 1

if self.shuffle:

X, y = self._shuffle(X, y)

errors = []

for j in range(0, n_samples, self.batch_size):

mini_X, mini_y = X[j: j + self.batch_size], y[j: j + self.batch_size]

error = mini_X.dot(self.theta) - mini_y

errors.append(error.dot(error))

mini_gradient = 1 / self.batch_size * mini_X.T.dot(error)

gradient_sum += mini_gradient ** 2 ##############

adj_gradient = mini_gradient / (np.sqrt(gradient_sum + self.epsilon))

self.theta -= self.eta * adj_gradient

loss = 1 / (2 * self.batch_size) * np.mean(errors)

delta_loss = loss - self.loss_[-1]

self.loss_.append(loss)

if np.abs(delta_loss) < self.tolerance:

break

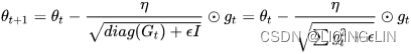

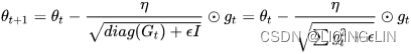

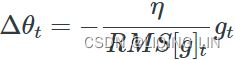

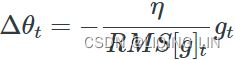

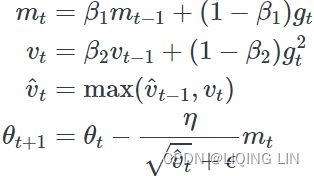

return selfRMSprop (Root Mean Square propagation) : ###############

As we’ve seen, AdaGrad runs the risk of slowing down a bit too fast and never converging to the global optimum. The RMSProp algorithm fixes this by accumulating only the gradients from the most recent iterations (as opposed to all the gradients since the beginning of training). It does so by using exponential decay in the first step (see Equation 11-7).

OR RMSprop as well divides the learning rate![]() by an exponentially decaying average of squared gradients.

by an exponentially decaying average of squared gradients.

Equation 11-7. RMSProp algorithm![]() OR

OR

The decay rate β (or ![]() )is typically set to 0.9. Yes, it is once again a new hyperparameter, but this default value often works well, so you may not need to tune it at all. while a good default value for the learning rate η is 0.001.

)is typically set to 0.9. Yes, it is once again a new hyperparameter, but this default value often works well, so you may not need to tune it at all. while a good default value for the learning rate η is 0.001.

###########AdaGrad![]()

# ![]() to denote the gradient at time step

to denote the gradient at time step ![]()

# ![]() is then the partial derivative of the objective function w.r.t. to the parameter

is then the partial derivative of the objective function w.r.t. to the parameter ![]() at time step

at time step ![]() :

: ![]()

# the general learning rate η at each time step ![]() for every parameter

for every parameter ![]() based on the past gradients that have been computed for

based on the past gradients that have been computed for![]() :

:

![]() is a diagonal matrix where each diagonal element

is a diagonal matrix where each diagonal element ![]() is the sum of the squares of the gradients w.r.t.

is the sum of the squares of the gradients w.r.t. ![]() up to time step

up to time step ![]()

# As ![]() contains the sum of the squares of the past gradients w.r.t. to all parameters

contains the sum of the squares of the past gradients w.r.t. to all parameters ![]() along its diagonal, we can now vectorize our implementation by performing a matrix-vector product⊙between

along its diagonal, we can now vectorize our implementation by performing a matrix-vector product⊙between ![]() and

and ![]() :

:

###########

![]() OR

OR

class RMSProp(MiniBatchGradientDescent):

def __init__(self, gamma=0.9, epsilon=1e-6, **kwargs):

self.gamma = gamma #called momenturm B

self.epsilon = epsilon

super(RMSProp, self).__init__(**kwargs)

def fit(self, X, y):

X = np.c_[np.ones(len(X)), X]

n_samples, n_features = X.shape

self.theta = np.ones(n_features)

self.loss_ = [0]

gradient_exp = np.zeros(n_features)

self.i = 0

while self.i < self.n_iter:

self.i += 1

if self.shuffle:

X, y = self._shuffle(X, y)

errors = []

for j in range(0, n_samples, self.batch_size):

mini_X, mini_y = X[j: j + self.batch_size], y[j: j + self.batch_size]

error = mini_X.dot(self.theta) - mini_y

errors.append(error.dot(error))

mini_gradient = 1 / self.batch_size * mini_X.T.dot(error)

###################################################

gradient_exp = self.gamma * gradient_exp + (1 - self.gamma) * mini_gradient**2

gradient_rms = np.sqrt(gradient_exp + self.epsilon)

self.theta -= self.eta / gradient_rms * mini_gradient

loss = 1 / (2 * self.batch_size) * np.mean(errors)

delta_loss = loss - self.loss_[-1]

self.loss_.append(loss)

if np.abs(delta_loss) < self.tolerance:

break

return selfin tensor:tensorflow/rmsprop.py at master · tensorflow/tensorflow · GitHub

"""One-line documentation for rmsprop module.

rmsprop algorithm [tieleman2012rmsprop]

A detailed description of rmsprop.

- maintain a moving (discounted) average of the square of gradients

- divide gradient by the root of this average

mean_square = decay * mean_square{t-1} + (1-decay) * gradient ** 2

# momentum Defaults to 0.0.

mom = momentum * mom{t-1} + learning_rate * g_t /

sqrt(mean_square + epsilon)

delta = - mom

This implementation of RMSProp uses plain momentum, not Nesterov momentum.

The centered version additionally maintains a moving (discounted) average of the

gradients, and uses that average to estimate the variance:

################

mean_grad = decay * mean_grad{t-1} + (1-decay) * gradient

################

mean_square = decay * mean_square{t-1} + (1-decay) * gradient ** 2

mom = momentum * mom{t-1} + learning_rate * g_t /

sqrt(mean_square - mean_grad**2 + epsilon)

############

delta = - mom

"""in kera:keras/rmsprop.py at v2.10.0 · keras-team/keras · GitHub

centered : Boolean. If True, gradients are normalized by the estimated variance of the gradient通过梯度的估计方差对梯度进行归一化;

if False, by the uncentered second moment.![]() OR

OR

Setting this to True may help with training, but is slightly more expensive in terms of computation and memory. Defaults to False.

######224

# mean_square: at iteration t, the mean of ( all gradients in mini-batch )

# mean_square = decay * mean_square{t-1} + (1-decay) * gradient ** 2

rms_t = coefficients["rho"] * rms

+ coefficients["one_minus_rho"] * tf.square(grad)

# tf.compat.v1.assign( ref, value, v

alidate_shape=None, use_locking=None, name=None )

# return : A Tensor that will hold the new value of ref

# after the assignment has completed

# rms : is a tensor

rms_t = tf.compat.v1.assign(

rms, rms_t, use_locking=self._use_locking

)# rms_t variable is refer to rms which was filled with new value

denom_t = rms_t

if self.centered:

mg = self.get_slot(var, "mg")

# mean: the mean of gradients from t=1 to t>1

# -minus mean_grad at iteration t

# mean_grad = decay * mean_grad{t-1} + (1-decay) * gradient

mg_t = (

coefficients["rho"] * mg

+ coefficients["one_minus_rho"] * grad

)

mg_t = tf.compat.v1.assign(

mg, mg_t, use_locking=self._use_locking

)

####### mean_square + epsilon - mean_grad**2

denom_t = rms_t - tf.square(mg_t)

######

# momentum: Defaults to 0.0.

# mom = momentum * mom{t-1} +

# learning_rate * g_t / sqrt(mean_square + epsilon)

# delta = - mom

var_t = var - coefficients["lr_t"] * grad / (

tf.sqrt(denom_t) + coefficients["epsilon"]

)

return tf.compat.v1.assign(

var, var_t, use_locking=self._use_locking

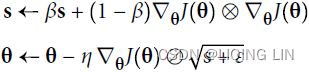

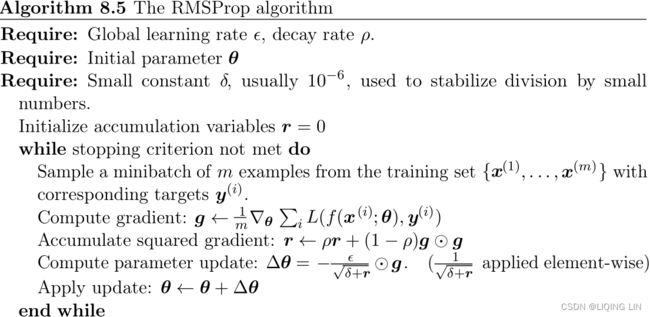

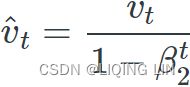

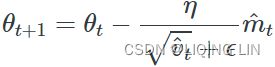

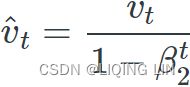

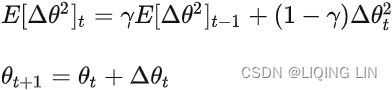

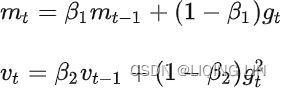

).opAdam (adaptive moment estimation) :###############

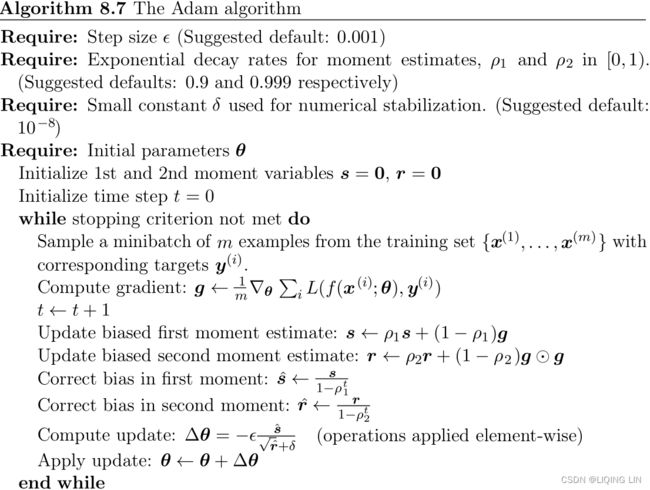

Adam, which stands for adaptive moment estimation自适应矩估计, combines the ideas of momentum optimization and RMSProp: just like momentum optimization, it keeps track of an exponentially decaying average of past gradients; and just like RMSProp, it keeps track of an exponentially decaying average of past squared gradients (see Equation 11-8).

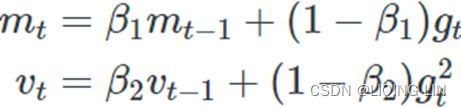

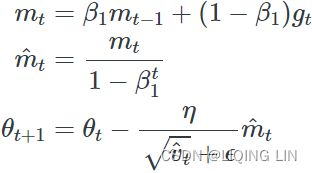

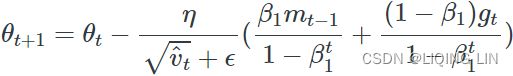

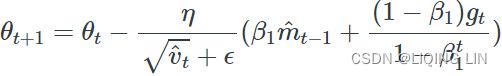

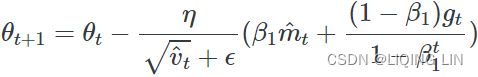

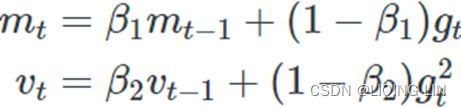

Equation 11-8. Adam algorithm

1. ![]() OR

OR![]()

2. ![]()

![]()

The only difference is that step 1 computes an exponentially decaying average rather than an exponentially decaying sum, but these are actually equivalent except for a constant factor (the decaying average is just ![]() times the decaying sum,

times the decaying sum, ![]() and

and ![]() are estimates of the first moment一阶矩 (the mean) of the gradient

are estimates of the first moment一阶矩 (the mean) of the gradient ![]() and the second moment二阶矩 (the uncentered variance) of the squared gradient

and the second moment二阶矩 (the uncentered variance) of the squared gradient ![]() at time step

at time step ![]() .

.

As ![]() and

and ![]() are initialized as vectors of 0's, the authors of Adam observe that they are biased towards zero, especially during the initial time steps, and especially when the decay rates are small (i.e.

are initialized as vectors of 0's, the authors of Adam observe that they are biased towards zero, especially during the initial time steps, and especially when the decay rates are small (i.e. ![]() and

and ![]() are close to 1)).

are close to 1)).

The momentum decay hyperparameter ![]() is typically initialized to 0.9, while the scaling decay hyperparameter

is typically initialized to 0.9, while the scaling decay hyperparameter ![]() is often initialized to 0.999

is often initialized to 0.999

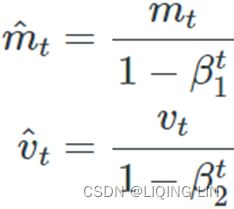

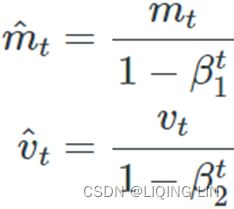

3. ![]() OR

OR![]() compute a bias correction

compute a bias correction ![]()

4.![]() OR

OR  compute a bias correction

compute a bias correction

Steps 3 and 4 are somewhat of a technical detail: since ![]() and

and ![]() are initialized at 0, they will be biased toward 0 at the beginning of training, so these two steps will help boost

are initialized at 0, they will be biased toward 0 at the beginning of training, so these two steps will help boost ![]() and

and ![]() at the beginning of training.

at the beginning of training.

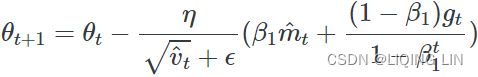

5.![]() (

(![]() )OR

)OR (unsigned

(unsigned ![]() )

)

If you just look at steps 1, 2, and 5, you will notice Adam’s close similarity to both momentum optimization and RMSProp. As earlier, the smoothing term ε is usually initialized to a tiny number such as ![]() . These are the default values for the Adam class (to be precise, epsilon defaults to None, which tells Keras to use keras.backend.epsilon(), which defaults to

. These are the default values for the Adam class (to be precise, epsilon defaults to None, which tells Keras to use keras.backend.epsilon(), which defaults to ![]() ; you can change it using keras.backend.set_epsilon()).

; you can change it using keras.backend.set_epsilon()).

Since Adam is an adaptive learning rate algorithm (like AdaGrad and RMSProp), it requires less tuning of the learning rate hyperparameter η. You can often use the default value η = 0.001, making Adam even easier to use than Gradient Descent.

可以看出,直接对梯度的矩估计对内存没有额外的要求,而且可以根据梯度进行动态调整,而![]() 对学习率形成一个动态约束,而且有明确的范围。

对学习率形成一个动态约束,而且有明确的范围。

特点:

结合了Adagrad善于处理稀疏梯度和RMSprop善于处理非平稳目标的优点

对内存需求较小

为不同的参数计算不同的自适应学习率

也适用于大多非凸优化- 适用于大数据集和高维空间

Note : in keras 偏差修正in learning rate:

# https://github.com/keras-team/keras/blob/b80dd12da9c0bc3f569eca3455e77762cf2ee8ef/keras/optimizers/optimizer_v2/adam.py

# 133

def _prepare_local(self, var_device, var_dtype, apply_state):

super()._prepare_local(var_device, var_dtype, apply_state)

local_step = tf.cast(self.iterations + 1, var_dtype)

beta_1_t = tf.identity(self._get_hyper("beta_1", var_dtype))

beta_2_t = tf.identity(self._get_hyper("beta_2", var_dtype))

beta_1_power = tf.pow(beta_1_t, local_step)

beta_2_power = tf.pow(beta_2_t, local_step)

# Correction

lr = apply_state[(var_device, var_dtype)]["lr_t"] * (

tf.sqrt(1 - beta_2_power) / (1 - beta_1_power)

)

apply_state[(var_device, var_dtype)].update(

dict(

lr=lr,

epsilon=tf.convert_to_tensor(self.epsilon, var_dtype),

beta_1_t=beta_1_t,

beta_1_power=beta_1_power,

one_minus_beta_1_t=1 - beta_1_t,

beta_2_t=beta_2_t,

beta_2_power=beta_2_power,

one_minus_beta_2_t=1 - beta_2_t,

)

)

Adam相对于RMSProp![]() 新增了两处改动。其一,Adam使用经过指数移动加权 平均的梯度值(in mini-batch)来替换原始的梯度值;其二,Adam对经指数加权后的梯度值

新增了两处改动。其一,Adam使用经过指数移动加权 平均的梯度值(in mini-batch)来替换原始的梯度值;其二,Adam对经指数加权后的梯度值 ![]() 和平方梯度值

和平方梯度值 ![]() 都进行了修正,亦即偏差修正(Bias Correction

都进行了修正,亦即偏差修正(Bias Correction![]() and

and )

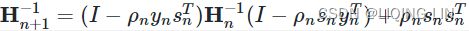

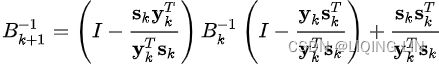

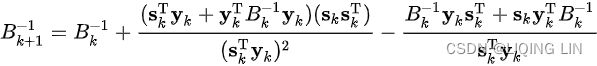

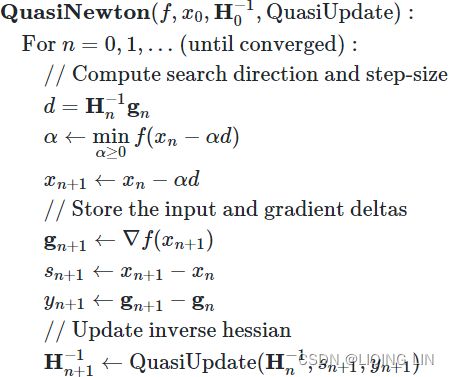

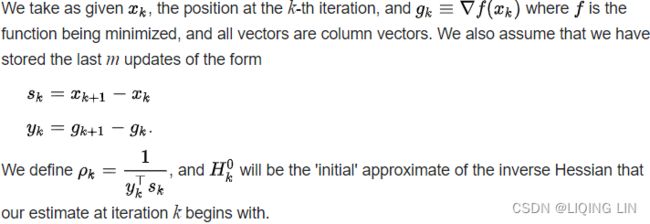

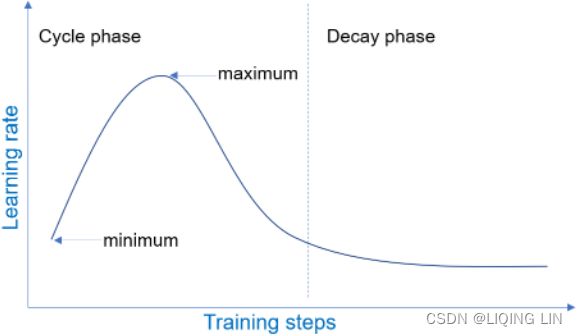

)