TensorFlow 2.0 from Scratch ---- Part 1, 2. A Hands-On Regression Model

every blog every motto: There’s only one corner of the universe you can be sure of improving, and that’s your own self.

0. Preface

The previous section covered classification models; this section focuses on regression models.

1. Code

1. Importing modules

import matplotlib as mpl

import matplotlib.pyplot as plt

%matplotlib inline

import numpy as np

import sklearn

import pandas as pd

import os

import sys

import time

import tensorflow as tf

from tensorflow import keras

print(tf.__version__)

print(sys.version_info)

for module in mpl, np, pd, sklearn, tf, keras:
    print(module.__name__, module.__version__)

2. Data

2.1 Loading the data

from sklearn.datasets import fetch_california_housing

# House price prediction (California housing dataset)

housing = fetch_california_housing()

print(housing.DESCR)

print(housing.data.shape)

print(housing.target.shape)

2.2 Preview

import pprint

pprint.pprint(housing.data[0:5])

pprint.pprint(housing.target[0:5])

2.3 Splitting the samples

# Split the samples into training, validation, and test sets

from sklearn.model_selection import train_test_split

x_train_all,x_test,y_train_all,y_test = train_test_split(housing.data,housing.target,random_state=7)

x_train,x_valid,y_train,y_valid = train_test_split(x_train_all,y_train_all,random_state=11)

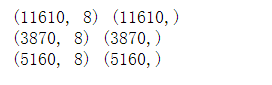

print(x_train.shape,y_train.shape)

print(x_valid.shape,y_valid.shape)

print(x_test.shape,y_test.shape)
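
As a rough check on the shapes printed above: when neither `test_size` nor `train_size` is given, `train_test_split` holds out 25% of the rows, so two successive splits leave a little over half of the data for training. The sketch below does that arithmetic in plain Python. It assumes the dataset has 20,640 rows (the size scikit-learn documents for California housing) and that the held-out count is rounded up; `split_sizes` is a hypothetical helper, not part of scikit-learn.

```python
import math

def split_sizes(n_samples, test_fraction=0.25):
    """Return (n_train, n_test) for a single hold-out split."""
    n_test = math.ceil(n_samples * test_fraction)  # held-out rows, rounded up
    return n_samples - n_test, n_test

n_total = 20640  # assumed row count of the California housing data
n_train_all, n_test = split_sizes(n_total)    # first split: train_all / test
n_train, n_valid = split_sizes(n_train_all)   # second split: train / valid
print(n_train, n_valid, n_test)  # 11610 3870 5160
```

Under these assumptions the three sets hold 11,610, 3,870, and 5,160 rows respectively.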

3. Data normalization

# Normalization: StandardScaler rescales each feature to zero mean and unit variance

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

x_train_scaled = scaler.fit_transform(x_train)

x_valid_scaled = scaler.transform(x_valid)

x_test_scaled = scaler.transform(x_test)
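
The reason `fit_transform` is called only on the training set, while the validation and test sets go through `transform`, is that the mean and standard deviation should be learned from training data alone and then reused. Here is a minimal sketch of that idea for a single feature column, using only the standard library. The helper names and the numbers are made up for illustration; this is not how `StandardScaler` is implemented internally.

```python
import statistics

def fit_scaler(column):
    """Learn the mean and population standard deviation of one feature."""
    return statistics.mean(column), statistics.pstdev(column)

def transform(column, mu, sigma):
    """Apply z = (x - mu) / sigma using statistics learned elsewhere."""
    return [(x - mu) / sigma for x in column]

train = [2.0, 4.0, 6.0, 8.0]  # made-up training values of one feature
valid = [5.0, 9.0]            # made-up validation values

mu, sigma = fit_scaler(train)                 # statistics from training data only
train_scaled = transform(train, mu, sigma)
valid_scaled = transform(valid, mu, sigma)    # reuses the training mean and std
```

After this, `train_scaled` has zero mean and unit variance, while `valid_scaled` is only approximately centered, which is expected.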

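4. Model

4.1 Building the model

# Build the model: one hidden layer of 30 ReLU units and a single linear output for regression

model = keras.models.Sequential([
    keras.layers.Dense(30, activation='relu', input_shape=x_train.shape[1:]),
    keras.layers.Dense(1),
])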

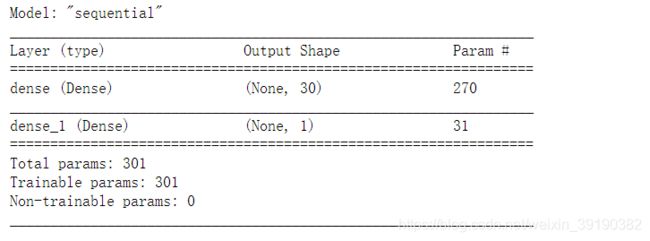

4.2 Printing model information

# Print the model summary

model.summary()
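
The parameter counts reported by `model.summary()` can be checked by hand: a fully connected layer has one weight per input-unit pair plus one bias per unit. The sketch below assumes 8 input features, which is the feature count of the California housing data; `dense_params` is a hypothetical helper, not a Keras function.

```python
def dense_params(n_inputs, n_units):
    """Weights plus one bias per unit in a fully connected layer."""
    return n_inputs * n_units + n_units

n_features = 8  # assumed number of input features
hidden = dense_params(n_features, 30)  # Dense(30) on the raw features
output = dense_params(30, 1)           # Dense(1) on the hidden layer
print(hidden, output, hidden + output)  # 270 31 301
```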

4.3 Compiling

# Compile with mean squared error as the loss and stochastic gradient descent as the optimizer

model.compile(loss='mean_squared_error',optimizer="sgd")
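
To make the two settings concrete, here is a small sketch of what mean squared error measures and what a single gradient-descent update does. It uses a toy one-parameter model `y = w * x` and the whole dataset in each step, whereas Keras updates every weight of the network on mini-batches, so treat it as an illustration of the idea only. The step size of 0.01 matches the default learning rate of the Keras `SGD` optimizer; the function names and data are made up.

```python
def mse(y_true, y_pred):
    """Mean of the squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def sgd_step(w, xs, ys, lr=0.01):
    """One gradient-descent update for the one-parameter model y = w * x."""
    # d/dw of mean((w*x - y)^2) is mean(2 * (w*x - y) * x)
    grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
    return w - lr * grad

xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # made-up targets generated by w = 2

w = 0.0
loss_before = mse(ys, [w * x for x in xs])
w = sgd_step(w, xs, ys)
loss_after = mse(ys, [w * x for x in xs])
print(loss_before > loss_after)  # True
```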

4.4 Callbacks

# Callbacks: stop early once val_loss has not improved by at least 1e-3 for 5 consecutive epochs

callbacks = [keras.callbacks.EarlyStopping(patience=5,min_delta=1e-3)]
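
The effect of `patience` and `min_delta` is easiest to see on a list of losses. The sketch below is a simplified model of the stopping rule, not the Keras implementation: an epoch counts as an improvement only if the loss beats the best value so far by more than `min_delta`, and training stops after `patience` epochs in a row without one. The function name and the loss values are made up.

```python
def early_stopping_epoch(val_losses, patience=5, min_delta=1e-3):
    """Return the 0-based epoch at which training would stop, or None."""
    best = float("inf")
    wait = 0  # consecutive epochs without a sufficient improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None

# Made-up validation losses: real progress for three epochs, then a plateau.
losses = [1.0, 0.8, 0.7, 0.6995, 0.6999, 0.7, 0.6998, 0.6996]
print(early_stopping_epoch(losses))  # 7
```

Changes smaller than `min_delta`, such as 0.7 to 0.6995, do not reset the counter, so the plateau ends the run well before the 100 epochs requested in `fit`.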

5. Training

# Train

history = model.fit(x_train_scaled,y_train,validation_data=(x_valid_scaled,y_valid),epochs=100,callbacks=callbacks)

6. Plotting the learning curves

# Learning curves: plot the per-epoch training and validation loss

def plot_learning_curves(history):
    pd.DataFrame(history.history).plot(figsize=(8, 5))
    plt.grid(True)
    plt.gca().set_ylim(0, 1)
    plt.show()

plot_learning_curves(history)

7. Evaluating on the test set

model.evaluate(x_test_scaled,y_test)
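
Because the model was compiled without extra metrics, `evaluate` returns a single number: the mean squared error on the test set. The target in this dataset is the median house value in units of $100,000, so taking the square root and rescaling gives an error in dollars that is easier to interpret. A small sketch, where `rmse_in_dollars` is a hypothetical helper and the loss value is made up rather than a result from this model:

```python
import math

def rmse_in_dollars(mse, unit=100_000):
    """Convert an MSE in (target units)^2 to a root-mean-square error in dollars."""
    return math.sqrt(mse) * unit

example_mse = 0.36  # made-up value, not a result from this model
print(round(rmse_in_dollars(example_mse)))  # 60000
```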
