1. Sample space Ω = "all possible outcomes".

Each outcome i in Ω has a probability P(i) >= 0.

Constraint: Sum(i in Ω) {P(i)} = 1.

2. Example: choosing a random pivot in the outermost QuickSort call:

Ω = {1, 2, ..., n}, where outcome i means the pivot is the ith smallest element, and P(i) = 1/n for all i in Ω.
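A minimal Python sketch of a finite probability space, assuming Ω is stored as a dict that maps each outcome to its probability (the helper names `uniform_pivot_space` and `is_valid_distribution` are made up for illustration). Exact `Fraction` arithmetic keeps the "probabilities sum to 1" check free of floating-point rounding.

```python
from fractions import Fraction

def uniform_pivot_space(n):
    """Sample space for the outermost QuickSort call: the pivot's rank
    i in {1, ..., n}, each chosen with probability 1/n."""
    return {i: Fraction(1, n) for i in range(1, n + 1)}

def is_valid_distribution(space):
    """Check the two requirements: P(i) >= 0 for every outcome, and the
    probabilities sum to exactly 1."""
    return all(p >= 0 for p in space.values()) and sum(space.values()) == 1

omega = uniform_pivot_space(8)
print(is_valid_distribution(omega))  # True
```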

3. An event is a subset S of Ω.

The probability of an event S is Sum(i in S) { P(i) }.

4. The event "the chosen pivot gives a 25-75 split or better" is S = {(n/4 + 1)th smallest element, ..., (3n/4)th smallest element}. S contains n/2 outcomes, each of probability 1/n, so P(S) = (n/2)/n = 1/2.
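The event probability can be checked by direct summation over the outcomes in S. This sketch assumes n is divisible by 4, so that n/4 and 3n/4 are integers; `prob` and `good_split_event` are hypothetical helper names.

```python
from fractions import Fraction

def prob(event, space):
    """P(S) = sum of P(i) over the outcomes i in the event S."""
    return sum((space[i] for i in event), Fraction(0))

def good_split_event(n):
    """Pivot ranks giving a 25-75 split or better: the (n/4 + 1)th through
    (3n/4)th smallest elements. Assumes n is divisible by 4."""
    return set(range(n // 4 + 1, 3 * n // 4 + 1))

n = 100
space = {i: Fraction(1, n) for i in range(1, n + 1)}
print(prob(good_split_event(n), space))  # 1/2
```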

5. A random variable X is a real-valued function defined on Ω (e.g., the size of the subarray passed to the first recursive call).

6. Let X be a random variable. The expectation E[X] of X, i.e. the average value of X, is Sum(i in Ω){X(i) P(i)}.

7. The expected size of the subarray passed to the first recursive call in QuickSort: if the pivot is the ith smallest element, that subarray has i - 1 elements, so

(1/n)·0 + (1/n)·1 + (1/n)·2 + ... + (1/n)·(n-1) = (1/n)·n(n-1)/2 = (n-1)/2
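A small sketch of the definition of expectation applied to item 7, assuming outcome i means the pivot is the ith smallest element (so the first recursive call receives i - 1 elements). `expectation` and `first_call_size` are illustrative names, not from any library.

```python
from fractions import Fraction

def expectation(X, space):
    """E[X] = sum over outcomes i of X(i) * P(i)."""
    return sum((X(i) * p for i, p in space.items()), Fraction(0))

def first_call_size(i):
    """If the pivot is the ith smallest element, the first recursive call
    receives the i - 1 elements smaller than it."""
    return i - 1

n = 10
space = {i: Fraction(1, n) for i in range(1, n + 1)}
print(expectation(first_call_size, space))  # 9/2, i.e. (n - 1) / 2
```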

8. Linearity of expectation: let X1, X2, ..., Xn be random variables defined on Ω (not necessarily independent). Then:

E[Sum(j=1~n) {Xj}] = Sum(j=1~n) {E[Xj]}

9. Proof of Linearity of Expectation:

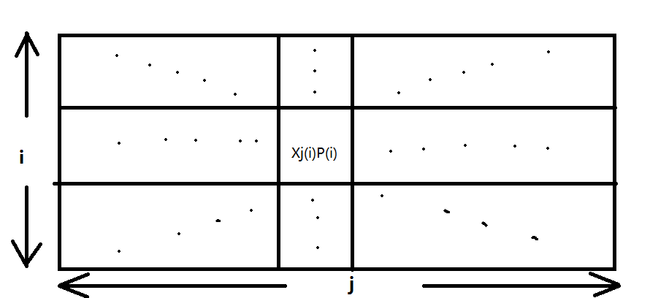

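Sum(j=1~n) { E[Xj] } = Sum(j=1~n) { Sum(i in Ω) { Xj(i) P(i) } }   (definition of expectation)

= Sum(i in Ω) { Sum(j=1~n) { Xj(i) P(i) } }   (swap the order of the two finite sums)

= Sum(i in Ω) { P(i) Sum(j=1~n) { Xj(i) } }   (P(i) does not depend on j)

= E[ Sum(j=1~n) {Xj} ]   (definition of expectation, applied to the random variable Sum(j=1~n) {Xj})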
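As a quick sanity check of linearity, the sketch below uses two random variables on the pivot sample space that are clearly not independent: the sizes of the left and right subarrays, which always add up to n - 1. The choice of variables is an illustrative assumption; linearity holds regardless.

```python
from fractions import Fraction

def expectation(X, space):
    """E[X] = sum over outcomes i of X(i) * P(i)."""
    return sum((X(i) * p for i, p in space.items()), Fraction(0))

n = 10
space = {i: Fraction(1, n) for i in range(1, n + 1)}

# Two dependent random variables on the pivot sample space:
# sizes of the left and right subarrays (they always sum to n - 1).
left = lambda i: i - 1
right = lambda i: n - i
total = lambda i: left(i) + right(i)

lhs = expectation(total, space)                             # E[X1 + X2]
rhs = expectation(left, space) + expectation(right, space)  # E[X1] + E[X2]
print(lhs, rhs)  # 9 9
```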

10. Problem: need to assign n jobs to n servers.

Proposed Solution: assign each job to a random server.

Question: what is the expected number of jobs assigned to a given server (say, the 1st server)?

Sample space: all n^n assignments of jobs to servers, each equally likely.

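Let Y = total number of jobs assigned to the 1st server.

Let Xj = 1 if the jth job is assigned to the 1st server, 0 otherwise.

Y = Sum(j=1~n) {Xj}, so by linearity of expectation E[Y] = Sum(j=1~n) {E[Xj]} = Sum(j=1~n) {P(Xj=1)} = n · (1/n) = 1. (Here E[Xj] = 0·P(Xj=0) + 1·P(Xj=1) = P(Xj=1), and each job goes to the 1st server with probability 1/n.)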
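For small n the answer E[Y] = 1 can be confirmed by brute force over all n^n assignments. This is only feasible for tiny n and is meant as a check of the calculation, not a practical method. Servers are numbered from 0 here, so server 0 plays the role of the 1st server; `expected_load_on_first_server` is a hypothetical name.

```python
from fractions import Fraction
from itertools import product

def expected_load_on_first_server(n):
    """Average, over all n**n equally likely job-to-server assignments,
    of the number of jobs landing on server 0 (the "1st server")."""
    total = 0
    count = 0
    for assignment in product(range(n), repeat=n):  # assignment[j] = server of job j
        total += sum(1 for server in assignment if server == 0)  # Y = sum of the Xj
        count += 1
    return Fraction(total, count)

for n in range(1, 6):
    print(n, expected_load_on_first_server(n))  # second column is 1 for every n
```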

11. Let X, Y be events on Ω with P(Y) > 0. The conditional probability of X given Y is P(X|Y) = P(X and Y) / P(Y).

12. Events X and Y are independent iff P(X and Y) = P(X)P(Y).

When P(X), P(Y) > 0: P(X and Y) = P(X)P(Y) <==> P(X|Y) = P(X) <==> P(Y|X) = P(Y)
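To make items 11 and 12 concrete, this sketch uses a hypothetical sample space of two fair six-sided dice (36 equally likely outcomes), which is not part of the notes. "First die is even" turns out to be independent of "sum is 7" but not of "sum is 8"; events are represented as predicates on outcomes.

```python
from fractions import Fraction
from itertools import product

# Hypothetical example space: two fair six-sided dice, 36 equally likely outcomes.
space = {(a, b): Fraction(1, 36) for a, b in product(range(1, 7), repeat=2)}

def prob(event):
    """P(S): sum of P(i) over outcomes i satisfying the predicate `event`."""
    return sum((p for i, p in space.items() if event(i)), Fraction(0))

def cond_prob(x, y):
    """P(X | Y) = P(X and Y) / P(Y); requires P(Y) > 0."""
    return prob(lambda i: x(i) and y(i)) / prob(y)

def independent(x, y):
    """Events are independent iff P(X and Y) = P(X) P(Y)."""
    return prob(lambda i: x(i) and y(i)) == prob(x) * prob(y)

first_even = lambda i: i[0] % 2 == 0
sum_is_7 = lambda i: i[0] + i[1] == 7
sum_is_8 = lambda i: i[0] + i[1] == 8

print(independent(first_even, sum_is_7), cond_prob(first_even, sum_is_7))  # True 1/2
print(independent(first_even, sum_is_8), cond_prob(first_even, sum_is_8))  # False 3/5
```

In the independent case P(X|Y) equals P(X) = 1/2, matching the equivalence in item 12.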

13. Random variables X and Y defined on Ω are independent iff, for all a and b, the events "X=a" and "Y=b" are independent, i.e. P(X=a and Y=b) = P(X=a) P(Y=b).
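The definition in item 13 can be checked exhaustively on a finite space by testing every pair of values (a, b). The two-dice space and the function names below are assumptions for illustration.

```python
from fractions import Fraction
from itertools import product

# Hypothetical example space: two fair six-sided dice, 36 equally likely outcomes.
space = {(a, b): Fraction(1, 36) for a, b in product(range(1, 7), repeat=2)}

def prob(event):
    """Probability of the set of outcomes satisfying the predicate `event`."""
    return sum((p for i, p in space.items() if event(i)), Fraction(0))

def independent_rvs(X, Y):
    """X and Y are independent iff P(X=a and Y=b) = P(X=a) P(Y=b)
    for every value a of X and every value b of Y."""
    xs = {X(i) for i in space}
    ys = {Y(i) for i in space}
    return all(
        prob(lambda i: X(i) == a and Y(i) == b)
        == prob(lambda i: X(i) == a) * prob(lambda i: Y(i) == b)
        for a in xs
        for b in ys
    )

first = lambda i: i[0]          # value of the first die
second = lambda i: i[1]         # value of the second die
total = lambda i: i[0] + i[1]   # sum of both dice

print(independent_rvs(first, second))  # True
print(independent_rvs(first, total))   # False
```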

14. If X and Y are independent random variables, then E[XY] = E[X] E[Y]. Proof:

E[XY] = Sum(for all a, b) { a b P(X=a and Y=b) } = Sum(for all a, b) { a b P(X=a) P(Y=b) }   (independence)

= Sum(for all a) { a P(X=a) } Sum(for all b) { b P(Y=b) }

= E[X] E[Y]
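A numeric check of item 14 on a hypothetical two-dice space: for the two independent dice the product rule holds, while pairing the first die with itself (clearly not independent) breaks it.

```python
from fractions import Fraction
from itertools import product

# Hypothetical example space: two fair six-sided dice, 36 equally likely outcomes.
space = {(a, b): Fraction(1, 36) for a, b in product(range(1, 7), repeat=2)}

def expectation(X):
    """E[X] = sum over outcomes i of X(i) * P(i)."""
    return sum((X(i) * p for i, p in space.items()), Fraction(0))

first = lambda i: i[0]   # value of the first die
second = lambda i: i[1]  # value of the second die (independent of the first)

# Independent: E[XY] equals E[X] E[Y].
pair_product = expectation(lambda i: first(i) * second(i))
print(pair_product, expectation(first) * expectation(second))  # 49/4 49/4

# Not independent (X with itself): E[X X] differs from E[X] E[X].
square = expectation(lambda i: first(i) ** 2)
print(square, expectation(first) ** 2)  # 91/6 49/4
```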

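15. Let X1, X2 be independent random variables, each equal to 0 or 1 with probability 1/2, and let X3 = X1 xor X2.

Then X1 and X3 are independent: for all a, b, P(X1=a and X3=b) = P(X1=a and X2=a xor b) = 1/4 = P(X1=a) P(X3=b). But X1X3 and X2 are not independent. If they were, item 14 would give E[X1X3X2] = E[X1X3] E[X2]; yet E[X1X2X3] = 0 (if X1 = X2 = 1 then X3 = 0), while E[X1X3] E[X2] = (1/4)(1/2) = 1/8.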
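The XOR example is small enough to enumerate completely: there are four equally likely outcomes (x1, x2). This sketch, assuming a dict-based representation of the finite probability space, checks both independence claims and the expectation gap that rules out independence of X1X3 and X2.

```python
from fractions import Fraction
from itertools import product

# X1, X2 independent fair bits: four equally likely outcomes (x1, x2).
space = {(x1, x2): Fraction(1, 4) for x1, x2 in product((0, 1), repeat=2)}

X1 = lambda w: w[0]
X2 = lambda w: w[1]
X3 = lambda w: w[0] ^ w[1]   # X3 = X1 xor X2
X1X3 = lambda w: X1(w) * X3(w)

def prob(event):
    """Probability of the outcomes satisfying the predicate `event`."""
    return sum((p for w, p in space.items() if event(w)), Fraction(0))

def expectation(X):
    """E[X] = sum over outcomes w of X(w) * P(w)."""
    return sum((X(w) * p for w, p in space.items()), Fraction(0))

def independent_rvs(X, Y):
    """P(X=a and Y=b) == P(X=a) P(Y=b) for all 0/1 values a, b."""
    return all(
        prob(lambda w: X(w) == a and Y(w) == b)
        == prob(lambda w: X(w) == a) * prob(lambda w: Y(w) == b)
        for a in (0, 1)
        for b in (0, 1)
    )

print(independent_rvs(X1, X3))    # True
print(independent_rvs(X1X3, X2))  # False
print(expectation(lambda w: X1X3(w) * X2(w)), expectation(X1X3) * expectation(X2))  # 0 1/8
```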