RK Camera HAL image processing

SoC: RK3568

System: Android 12

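Today I found that an external USB camera opened with the Camera2 API is displayed upside down. If the app uses Camera1 instead, there are few interfaces for handling this, so adjusting it at the app level is cumbersome.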

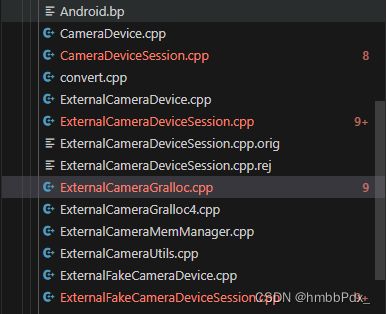

RK Camera HAL location: `hardware/interfaces/camera`

The core files are in:

At boot, the external camera provider service (`android.hardware.camera.provider@…`) is started.

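The service iterates over the `/dev/videoX` nodes and uses V4L2 to query each camera driver's frame widths, heights, pixel formats and frame rates, which decides whether the node is a usable camera. Each valid node is reported to CameraServer and registered under a camera ID.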
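Two pieces of that check are easy to isolate. The sketch below is plain C++ with no V4L2 calls; `fourccToString`, `CandidateSize` and `keepCandidate` are hypothetical names, not part of the HAL. It decodes a FourCC with the same low-byte-first order as the HAL's `%c%c%c%c` logging, and applies the same two size filters that `getCandidateSupportedFormatsLocked()` uses (landscape only, and at least `minStreamSize`).

```cpp
#include <cstdint>
#include <string>

// Decode a V4L2 FourCC into its four ASCII characters, lowest byte first,
// matching the byte order of the HAL's ALOGV("%c%c%c%c", ...) calls.
std::string fourccToString(uint32_t fourcc) {
    std::string s(4, ' ');
    for (int i = 0; i < 4; ++i) {
        s[i] = static_cast<char>((fourcc >> (8 * i)) & 0xFF);
    }
    return s;
}

// Hypothetical: one discrete size as reported by VIDIOC_ENUM_FRAMESIZES.
struct CandidateSize {
    uint32_t width;
    uint32_t height;
};

// Mirrors the two filters in the enumeration loop:
// portrait sizes (h > w) are dropped, and so is anything below minStreamSize.
bool keepCandidate(const CandidateSize& s, const CandidateSize& minStreamSize) {
    if (s.height > s.width) {
        return false;
    }
    if (s.width < minStreamSize.width || s.height < minStreamSize.height) {
        return false;
    }
    return true;
}
```

For example, `keepCandidate({640, 480}, {320, 240})` keeps the size, while a 480x640 portrait size is rejected.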

The main code, from `ExternalCameraDevice.cpp`:

```cpp
std::vector<SupportedV4L2Format> ExternalCameraDevice::getCandidateSupportedFormatsLocked(
        int fd, CroppingType cropType,
        const std::vector<ExternalCameraConfig::FpsLimitation>& fpsLimits,
        const std::vector<ExternalCameraConfig::FpsLimitation>& depthFpsLimits,
        const Size& minStreamSize,
        bool depthEnabled) {
    std::vector<SupportedV4L2Format> outFmts;
    if (!mSubDevice) {
        // VIDIOC_QUERYCAP: get device capabilities.
        // Zero-initialized so device_caps is 0 (not garbage) if the query fails.
        struct v4l2_capability capability{};
        int ret_query = ioctl(fd, VIDIOC_QUERYCAP, &capability);
        if (ret_query < 0) {
            // Fixed: the format has three %s, so the device path must be passed too
            ALOGE("%s v4l2 QUERYCAP %s failed: %s", __FUNCTION__,
                    mDevicePath.c_str(), strerror(errno));
        }
        struct v4l2_fmtdesc fmtdesc{};
        fmtdesc.index = 0;
        if (capability.device_caps & V4L2_CAP_VIDEO_CAPTURE_MPLANE)
            fmtdesc.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;
        else
            fmtdesc.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

        int ret = 0;
        while (ret == 0) {
            // Enumerate the pixel formats the camera supports
            ret = TEMP_FAILURE_RETRY(ioctl(fd, VIDIOC_ENUM_FMT, &fmtdesc));
            ALOGV("index:%d,ret:%d, format:%c%c%c%c", fmtdesc.index, ret,
                    fmtdesc.pixelformat & 0xFF,
                    (fmtdesc.pixelformat >> 8) & 0xFF,
                    (fmtdesc.pixelformat >> 16) & 0xFF,
                    (fmtdesc.pixelformat >> 24) & 0xFF);
            if (ret == 0 && !(fmtdesc.flags & V4L2_FMT_FLAG_EMULATED)) {
                auto it = std::find(
                        kSupportedFourCCs.begin(), kSupportedFourCCs.end(), fmtdesc.pixelformat);
                if (it != kSupportedFourCCs.end()) {
                    // Found supported format
                    v4l2_frmsizeenum frameSize {
                            .index = 0,
                            .pixel_format = fmtdesc.pixelformat};
                    // Enumerate the frame sizes for this format
                    for (; TEMP_FAILURE_RETRY(ioctl(fd, VIDIOC_ENUM_FRAMESIZES, &frameSize)) == 0;
                            ++frameSize.index) {
                        if (frameSize.type == V4L2_FRMSIZE_TYPE_DISCRETE) {
                            ALOGV("index:%d, format:%c%c%c%c, w %d, h %d", frameSize.index,
                                    fmtdesc.pixelformat & 0xFF,
                                    (fmtdesc.pixelformat >> 8) & 0xFF,
                                    (fmtdesc.pixelformat >> 16) & 0xFF,
                                    (fmtdesc.pixelformat >> 24) & 0xFF,
                                    frameSize.discrete.width, frameSize.discrete.height);
                            // Disregard h > w formats so all aspect ratio (h/w) <= 1.0
                            // This will simplify the crop/scaling logic down the road
                            if (frameSize.discrete.height > frameSize.discrete.width) {
                                continue;
                            }
                            // Discard all formats that are smaller than minStreamSize
                            if (frameSize.discrete.width < minStreamSize.width
                                    || frameSize.discrete.height < minStreamSize.height) {
                                continue;
                            }
                            SupportedV4L2Format format {
                                .width = frameSize.discrete.width,
                                .height = frameSize.discrete.height,
                                .fourcc = fmtdesc.pixelformat
                            };
                            // Query the supported frame rates for this format and size
                            if (format.fourcc == V4L2_PIX_FMT_Z16 && depthEnabled) {
                                updateFpsBounds(fd, cropType, depthFpsLimits, format, outFmts);
                            } else {
                                updateFpsBounds(fd, cropType, fpsLimits, format, outFmts);
                            }
                        }
                    }
#ifdef HDMI_ENABLE
                    if (strstr((const char*)capability.driver, "hdmi")) {
                        ALOGE("driver.find :%s", capability.driver);
                        struct v4l2_dv_timings timings;
                        if (TEMP_FAILURE_RETRY(ioctl(fd, VIDIOC_SUBDEV_QUERY_DV_TIMINGS, &timings)) == 0) {
                            char fmtDesc[5]{0};
                            sprintf(fmtDesc, "%c%c%c%c",
                                    fmtdesc.pixelformat & 0xFF,
                                    (fmtdesc.pixelformat >> 8) & 0xFF,
                                    (fmtdesc.pixelformat >> 16) & 0xFF,
                                    (fmtdesc.pixelformat >> 24) & 0xFF);
                            ALOGV("hdmi index:%d,ret:%d, format:%s", fmtdesc.index, ret, fmtDesc);
                            ALOGE("%s, hdmi I:%d, wxh:%dx%d", __func__,
                                    timings.bt.interlaced, timings.bt.width, timings.bt.height);
                            ALOGV("add hdmi index:%d,ret:%d, format:%c%c%c%c", fmtdesc.index, ret,
                                    fmtdesc.pixelformat & 0xFF,
                                    (fmtdesc.pixelformat >> 8) & 0xFF,
                                    (fmtdesc.pixelformat >> 16) & 0xFF,
                                    (fmtdesc.pixelformat >> 24) & 0xFF);
                            // Register the resolution reported by the HDMI source
                            SupportedV4L2Format formatGet {
                                .width = timings.bt.width,
                                .height = timings.bt.height,
                                .fourcc = fmtdesc.pixelformat
                            };
                            updateFpsBounds(fd, cropType, fpsLimits, formatGet, outFmts);
                            // Also register fixed 640x360 and 1920x1080 sizes
                            SupportedV4L2Format format_640x360 {
                                .width = 640,
                                .height = 360,
                                .fourcc = fmtdesc.pixelformat
                            };
                            updateFpsBounds(fd, cropType, fpsLimits, format_640x360, outFmts);
                            SupportedV4L2Format format_1920x1080 {
                                .width = 1920,
                                .height = 1080,
                                .fourcc = fmtdesc.pixelformat
                            };
                            updateFpsBounds(fd, cropType, fpsLimits, format_1920x1080, outFmts);
                        }
                    }
#endif
                }
            }
            fmtdesc.index++;
        }
        trimSupportedFormats(cropType, &outFmts);
    }
    // ... (rest of the function not shown)
```

If this runs through normally, the registered cameras can be listed with `dumpsys media.camera | grep map`:

```
rk3588_s:/ $ dumpsys media.camera | grep map
Device 0 maps to "0"
Device 1 maps to "1"
Device 2 maps to "112"
Device 3 maps to "201"
```

After that, when the Camera2 API opens a camera, the call goes through CameraServer and finally reaches `ExternalCameraDevice::open`.
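One detail of `open()` worth isolating is its retry loop: if the first `open()` of the device node fails, the HAL sleeps `OPEN_RETRY_SLEEP_US` and tries again until the open succeeds or the attempt counter exceeds `MAX_RETRY`. A minimal sketch of the same loop with plain POSIX calls; `openWithRetry` is a hypothetical helper, and it returns a raw fd that the caller must `close()` (the HAL wraps the fd in `unique_fd` instead).

```cpp
#include <fcntl.h>
#include <unistd.h>

// Open a device node read/write, retrying a bounded number of times.
// Returns the fd, or -1 if every attempt failed (errno is from the last try).
// Same bound as the HAL: up to maxRetry + 1 retries after the first failure.
int openWithRetry(const char* path, int maxRetry, useconds_t sleepUs) {
    int fd = ::open(path, O_RDWR);
    int numAttempt = 0;
    while (fd < 0 && numAttempt <= maxRetry) {
        usleep(sleepUs);  // give the driver time before the next attempt
        fd = ::open(path, O_RDWR);
        ++numAttempt;
    }
    return fd;
}
```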

1.openCamera

```cpp
Return<void> ExternalCameraDevice::open(
        const sp<ICameraDeviceCallback>& callback, ICameraDevice::open_cb _hidl_cb) {
    Status status = Status::OK;
    sp<ExternalCameraDeviceSession> session = nullptr;
    if (callback == nullptr) {
        ALOGE("%s: cannot open camera %s. callback is null!",
                __FUNCTION__, mCameraId.c_str());
        _hidl_cb(Status::ILLEGAL_ARGUMENT, nullptr);
        return Void();
    }
    // Get the camera characteristics (initialized on first use); bail out if that failed
    if (isInitFailed()) {
        ALOGE("%s: cannot open camera %s. camera init failed!",
                __FUNCTION__, mCameraId.c_str());
        _hidl_cb(Status::INTERNAL_ERROR, nullptr);
        return Void();
    }
    mLock.lock();
    ALOGV("%s: Initializing device for camera %s", __FUNCTION__, mCameraId.c_str());
    session = mSession.promote();
    if (session != nullptr && !session->isClosed()) {
        ALOGE("%s: cannot open an already opened camera!", __FUNCTION__);
        mLock.unlock();
        _hidl_cb(Status::CAMERA_IN_USE, nullptr);
        return Void();
    }
    // Open the V4L2 device node
    unique_fd fd(::open(mDevicePath.c_str(), O_RDWR));
#ifdef SUBDEVICE_ENABLE
    if (!mSubDevice) {
        if (fd.get() < 0) {
            int numAttempt = 0;
            do {
                ALOGW("%s: v4l2 device %s open failed, wait 33ms and try again",
                        __FUNCTION__, mDevicePath.c_str());
                usleep(OPEN_RETRY_SLEEP_US); // sleep and try again
                fd.reset(::open(mDevicePath.c_str(), O_RDWR));
                numAttempt++;
            } while (fd.get() < 0 && numAttempt <= MAX_RETRY);
            if (fd.get() < 0) {
                ALOGE("%s: v4l2 device open %s failed: %s",
                        __FUNCTION__, mDevicePath.c_str(), strerror(errno));
                mLock.unlock();
                _hidl_cb(Status::INTERNAL_ERROR, nullptr);
                return Void();
            }
        }
    }
#else
    if (fd.get() < 0) {
        int numAttempt = 0;
        do {
            ALOGW("%s: v4l2 device %s open failed, wait 33ms and try again",
                    __FUNCTION__, mDevicePath.c_str());
            usleep(OPEN_RETRY_SLEEP_US); // sleep and try again
            fd.reset(::open(mDevicePath.c_str(), O_RDWR));
            numAttempt++;
        } while (fd.get() < 0 && numAttempt <= MAX_RETRY);
        if (fd.get() < 0) {
            ALOGE("%s: v4l2 device open %s failed: %s",
                    __FUNCTION__, mDevicePath.c_str(), strerror(errno));
            mLock.unlock();
            _hidl_cb(Status::INTERNAL_ERROR, nullptr);
            return Void();
        }
    }
#endif
    // Create the device session
    session = createSession(
            callback, mCfg, mSupportedFormats, mCroppingType,
            mCameraCharacteristics, mCameraId, std::move(fd));
    if (session == nullptr) {
        ALOGE("%s: camera device session allocation failed", __FUNCTION__);
        mLock.unlock();
        _hidl_cb(Status::INTERNAL_ERROR, nullptr);
        return Void();
    }
    if (session->isInitFailed()) {
        ALOGE("%s: camera device session init failed", __FUNCTION__);
        session = nullptr;
        mLock.unlock();
        _hidl_cb(Status::INTERNAL_ERROR, nullptr);
        return Void();
    }
    mSession = session;
    mLock.unlock();
    _hidl_cb(status, session->getInterface());
    return Void();
}
```

Capture results are returned to the camera framework through `ExternalCameraDeviceSession::processCaptureResult(std::shared_ptr<HalRequest>&)`, which first hands the V4L2 buffer back to the driver with `enqueueV4l2Frame()`:

```cpp
void ExternalCameraDeviceSession::enqueueV4l2Frame(const sp<V4L2Frame>& frame) {
    ATRACE_CALL();
    frame->unmap();
    ATRACE_BEGIN("VIDIOC_QBUF");
    v4l2_buffer buffer{};
    if (mCapability.device_caps & V4L2_CAP_VIDEO_CAPTURE_MPLANE)
        buffer.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;
    else
        buffer.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    buffer.memory = V4L2_MEMORY_MMAP;
    if (V4L2_TYPE_IS_MULTIPLANAR(buffer.type)) {
        // planes / PLANES_NUM are defined elsewhere in the RK HAL (not shown)
        buffer.m.planes = planes;
        buffer.length = PLANES_NUM;
    }
    buffer.index = frame->mBufferIndex;
#ifdef SUBDEVICE_ENABLE
    if (!isSubDevice()) {
        if (TEMP_FAILURE_RETRY(ioctl(mV4l2Fd.get(), VIDIOC_QBUF, &buffer)) < 0) {
            ALOGE("%s: QBUF index %d fails: %s", __FUNCTION__,
                    frame->mBufferIndex, strerror(errno));
            return;
        }
    }
#else
    if (TEMP_FAILURE_RETRY(ioctl(mV4l2Fd.get(), VIDIOC_QBUF, &buffer)) < 0) {
        ALOGE("%s: QBUF index %d fails: %s", __FUNCTION__,
                frame->mBufferIndex, strerror(errno));
        return;
    }
#endif
    ATRACE_END();
    {
        std::lock_guard<std::mutex> lk(mV4l2BufferLock);
        mNumDequeuedV4l2Buffers--;
    }
    mV4L2BufferReturned.notify_one();
}
```
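The tail of `enqueueV4l2Frame()` decrements `mNumDequeuedV4l2Buffers` under `mV4l2BufferLock` and then calls `mV4L2BufferReturned.notify_one()`, presumably so that a thread waiting for a free V4L2 buffer can wake up. The pattern in isolation, as a sketch: `DequeuedBufferCounter`, `onDequeued()` and `waitForSlot()` are hypothetical names, and the "maximum dequeued buffers" limit is an assumption about how the waiting side uses the count.

```cpp
#include <chrono>
#include <condition_variable>
#include <mutex>

// Hypothetical stand-in for the HAL's mV4l2BufferLock / mNumDequeuedV4l2Buffers /
// mV4L2BufferReturned trio: counts buffers currently held outside the driver queue.
class DequeuedBufferCounter {
public:
    explicit DequeuedBufferCounter(int maxDequeued) : mMax(maxDequeued) {}

    // Would be called after a successful VIDIOC_DQBUF.
    void onDequeued() {
        std::lock_guard<std::mutex> lk(mLock);
        ++mNumDequeued;
    }

    // Mirrors the tail of enqueueV4l2Frame(): decrement under the lock, then wake one waiter.
    void onReturned() {
        {
            std::lock_guard<std::mutex> lk(mLock);
            --mNumDequeued;
        }
        mReturned.notify_one();
    }

    // Blocks until fewer than mMax buffers are dequeued or the timeout expires.
    // Returns true if a slot is available.
    bool waitForSlot(std::chrono::milliseconds timeout) {
        std::unique_lock<std::mutex> lk(mLock);
        return mReturned.wait_for(lk, timeout, [this] { return mNumDequeued < mMax; });
    }

    int dequeued() {
        std::lock_guard<std::mutex> lk(mLock);
        return mNumDequeued;
    }

private:
    std::mutex mLock;
    std::condition_variable mReturned;
    int mNumDequeued = 0;
    const int mMax;
};
```

Notifying after the lock is released, as the HAL does, means the woken thread does not immediately block on a mutex that the notifier still holds.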

```cpp
Status ExternalCameraDeviceSession::processCaptureResult(std::shared_ptr<HalRequest>& req) {
    ATRACE_CALL();
    // Return V4L2 buffer to V4L2 buffer queue
    sp<V4L2Frame> v4l2Frame =
            static_cast<V4L2Frame*>(req->frameIn.get());
    enqueueV4l2Frame(v4l2Frame);

    // NotifyShutter
    notifyShutter(req->frameNumber, req->shutterTs);

    // Fill output buffers
    hidl_vec<CaptureResult> results;
    results.resize(1);
    CaptureResult& result = results[0];
    result.frameNumber = req->frameNumber;
    result.partialResult = 1;
    result.inputBuffer.streamId = -1;
    result.outputBuffers.resize(req->buffers.size());
    for (size_t i = 0; i < req->buffers.size(); i++) {
        result.outputBuffers[i].streamId = req->buffers[i].streamId;
        result.outputBuffers[i].bufferId = req->buffers[i].bufferId;
        if (req->buffers[i].fenceTimeout) {
            result.outputBuffers[i].status = BufferStatus::ERROR;
            if (req->buffers[i].acquireFence >= 0) {
                native_handle_t* handle = native_handle_create(/*numFds*/1, /*numInts*/0);
                handle->data[0] = req->buffers[i].acquireFence;
                result.outputBuffers[i].releaseFence.setTo(handle, /*shouldOwn*/false);
            }
            notifyError(req->frameNumber, req->buffers[i].streamId, ErrorCode::ERROR_BUFFER);
        } else {
            result.outputBuffers[i].status = BufferStatus::OK;
            // TODO: refactor
            if (req->buffers[i].acquireFence >= 0) {
                native_handle_t* handle = native_handle_create(/*numFds*/1, /*numInts*/0);
                handle->data[0] = req->buffers[i].acquireFence;
                result.outputBuffers[i].releaseFence.setTo(handle, /*shouldOwn*/false);
            }
        }
    }

    // Fill capture result metadata
    fillCaptureResult(req->setting, req->shutterTs);
    const camera_metadata_t *rawResult = req->setting.getAndLock();
    V3_2::implementation::convertToHidl(rawResult, &result.result);
    req->setting.unlock(rawResult);

    // update inflight records
    {
        std::lock_guard<std::mutex> lk(mInflightFramesLock);
        mInflightFrames.erase(req->frameNumber);
    }

    // Callback into framework
    invokeProcessCaptureResultCallback(results, /* tryWriteFmq */true);
    freeReleaseFences(results);
    return Status::OK;
}
```

The session itself is set up in `initialize()`, which mainly starts the worker threads: `OutputThread` and the image format conversion thread `FormatConvertThread`.
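`OutputThread` (and, presumably, RK's `FormatConvertThread`) follows the `android::Thread` contract: after `run(name, priority)`, `threadLoop()` is called repeatedly on the new thread for as long as it returns `true`, and the thread exits once it returns `false` or exit is requested. The sketch below mimics that contract with `std::thread`; `LoopThread` is a hypothetical class, not the libutils API, and it ignores the name and priority arguments.

```cpp
#include <atomic>
#include <functional>
#include <thread>
#include <utility>

// Hypothetical analogue of android::Thread: run() keeps calling the loop body
// on a worker thread until it returns false or exit is requested.
class LoopThread {
public:
    explicit LoopThread(std::function<bool()> loop) : mLoop(std::move(loop)) {}
    ~LoopThread() { requestExitAndWait(); }

    void run() {
        mWorker = std::thread([this] {
            // Returning true means "call threadLoop() again".
            while (!mExitPending.load() && mLoop()) {
            }
        });
    }

    // Wait for the loop to finish on its own (loop body returned false).
    void join() {
        if (mWorker.joinable()) {
            mWorker.join();
        }
    }

    // Ask the loop to stop after the current iteration, then wait for it.
    void requestExitAndWait() {
        mExitPending.store(true);
        join();
    }

private:
    std::function<bool()> mLoop;
    std::atomic<bool> mExitPending{false};
    std::thread mWorker;
};
```

This is why the `threadLoop()` shown later returns `false` when the parent session has gone away: that return value is what ends the thread.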

```cpp
// Note: returns true on *failure* and false on success.
bool ExternalCameraDeviceSession::initialize() {
#ifdef SUBDEVICE_ENABLE
    if (!isSubDevice()) {
        if (mV4l2Fd.get() < 0) {
            ALOGE("%s: invalid v4l2 device fd %d!", __FUNCTION__, mV4l2Fd.get());
            return true;
        }
    }
#else
    if (mV4l2Fd.get() < 0) {
        ALOGE("%s: invalid v4l2 device fd %d!", __FUNCTION__, mV4l2Fd.get());
        return true;
    }
#endif
    struct v4l2_capability capability;
#ifdef SUBDEVICE_ENABLE
    int ret = -1;
    if (!isSubDevice()) {
        // Fixed: store the result; otherwise ret stays -1 and the EXIF
        // make/model always falls back to "Generic UVC webcam"
        ret = ioctl(mV4l2Fd.get(), VIDIOC_QUERYCAP, &capability);
    }
#else
    int ret = ioctl(mV4l2Fd.get(), VIDIOC_QUERYCAP, &capability);
#endif
    std::string make, model;
    if (ret < 0) {
        ALOGW("%s v4l2 QUERYCAP failed", __FUNCTION__);
        mExifMake = "Generic UVC webcam";
        mExifModel = "Generic UVC webcam";
    } else {
        // capability.card is UTF-8 encoded; keep only the ASCII bytes
        char card[32];
        int j = 0;
        for (int i = 0; i < 32; i++) {
            if (capability.card[i] < 128) {
                card[j++] = capability.card[i];
            }
            if (capability.card[i] == '\0') {
                break;
            }
        }
        if (j == 0 || card[j - 1] != '\0') {
            mExifMake = "Generic UVC webcam";
            mExifModel = "Generic UVC webcam";
        } else {
            mExifMake = card;
            mExifModel = card;
        }
    }

    initOutputThread();
    if (mOutputThread == nullptr) {
        ALOGE("%s: init OutputThread failed!", __FUNCTION__);
        return true;
    }
    mOutputThread->setExifMakeModel(mExifMake, mExifModel);
    mFormatConvertThread->createJpegDecoder();

    status_t status = initDefaultRequests();
    if (status != OK) {
        ALOGE("%s: init default requests failed!", __FUNCTION__);
        return true;
    }

    mRequestMetadataQueue = std::make_unique<RequestMetadataQueue>(
            kMetadataMsgQueueSize, false /* non blocking */);
    if (!mRequestMetadataQueue->isValid()) {
        ALOGE("%s: invalid request fmq", __FUNCTION__);
        return true;
    }
    mResultMetadataQueue = std::make_shared<ResultMetadataQueue>(
            kMetadataMsgQueueSize, false /* non blocking */);
    if (!mResultMetadataQueue->isValid()) {
        ALOGE("%s: invalid result fmq", __FUNCTION__);
        return true;
    }

    // TODO: check is PRIORITY_DISPLAY enough?
    mOutputThread->run("ExtCamOut", PRIORITY_DISPLAY);
    mFormatConvertThread->run("ExtFmtCvt", PRIORITY_DISPLAY);
#ifdef HDMI_ENABLE
#ifdef HDMI_SUBVIDEO_ENABLE
    sp<rockchip::hardware::hdmi::V1_0::IHdmi> client =
            rockchip::hardware::hdmi::V1_0::IHdmi::getService();
    if (client.get() != nullptr) {
        ::android::hardware::hidl_string deviceId;
        client->getHdmiDeviceId([&](const ::android::hardware::hidl_string &id) {
            deviceId = id.c_str();
        });
        ALOGE("getHdmiDeviceId:%s", deviceId.c_str());
        if (strstr(deviceId.c_str(), mCameraId.c_str())) {
            ALOGE("HDMI attach SubVideo %s", mCameraId.c_str());
            if (strlen(ExternalCameraDevice::kSubDevName) > 0) {
                sprintf(main_ctx.dev_name, "%s", ExternalCameraDevice::kSubDevName);
                ALOGE("main_ctx.dev_name:%s", main_ctx.dev_name);
            }
            mSubVideoThread = new SubVideoThread(0);
            mSubVideoThread->run("SubVideo", PRIORITY_DISPLAY);
        }
    }
#endif
#endif
    return false;
}
```

Every frame is format-converted and cropped in `bool ExternalCameraDeviceSession::OutputThread::threadLoop()`.

Each frame is processed with RGA there, so if the image is displayed incorrectly, this is the place to change it:

```cpp
bool ExternalCameraDeviceSession::OutputThread::threadLoop() {
    std::shared_ptr<HalRequest> req;
    auto parent = mParent.promote();
    if (parent == nullptr) {
        ALOGE("%s: session has been disconnected!", __FUNCTION__);
        return false;
    }
    ...
    } else if (req->frameIn->mFourcc == V4L2_PIX_FMT_NV12) {
        int handle_fd = -1, ret;
        const native_handle_t* tmp_hand = (const native_handle_t*)(*(halBuf.bufPtr));
        ret = ExCamGralloc4::get_share_fd(tmp_hand, &handle_fd);
        if (handle_fd == -1) {
            LOGE("convert tmp_hand to dst_fd error");
            return -EINVAL;  // threadLoop() returns bool, so this converts to true
        }
        ALOGV("%s(%d): halBuf handle_fd(%d)", __FUNCTION__, __LINE__, handle_fd);
        ALOGV("%s(%d) halbuf_wxh(%dx%d) frameNumber(%d)", __FUNCTION__, __LINE__,
                halBuf.width, halBuf.height, req->frameNumber);
        unsigned long vir_addr = reinterpret_cast<unsigned long>(req->inData);
        // Crop/scale the frame into the HAL output buffer with RGA;
        // display problems (orientation, mirroring) can be fixed in here.
        // Arguments: zoom_val = 100, mirror = false, isNeedCrop = false
        camera2::RgaCropScale::rga_scale_crop(
                tempFrameWidth, tempFrameHeight, vir_addr,
                HAL_PIXEL_FORMAT_YCrCb_NV12, handle_fd,
                halBuf.width, halBuf.height, 100, false, false,
                (halBuf.format == PixelFormat::YCRCB_420_SP), is16Align,
                true);
    } else if (req->frameIn->mFourcc == V4L2_PIX_FMT_NV16) {
        ...
    }
```
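The `isDstNV21` argument above is `(halBuf.format == PixelFormat::YCRCB_420_SP)`, and `YCRCB_420_SP` is Android's NV21. NV12 and NV21 share the same full-resolution Y plane followed by one interleaved chroma plane; they differ only in chroma byte order (Cb,Cr for NV12 versus Cr,Cb for NV21). RGA does the conversion in hardware; the CPU sketch below (hypothetical helpers `nv12FrameSize` and `nv12ToNv21InPlace`, assuming a tightly packed frame with even width and height and no stride padding) only illustrates that layout difference.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Bytes in a tightly packed NV12/NV21 frame (assumes even width and height):
// width*height luma bytes plus width*height/2 interleaved chroma bytes.
size_t nv12FrameSize(size_t width, size_t height) {
    return width * height * 3 / 2;
}

// Convert NV12 (Cb,Cr pairs) to NV21 (Cr,Cb pairs) in place by swapping each
// chroma byte pair. The Y plane is identical in both formats and is untouched.
void nv12ToNv21InPlace(std::vector<uint8_t>& frame, size_t width, size_t height) {
    const size_t ySize = width * height;
    const size_t end = std::min(frame.size(), nv12FrameSize(width, height));
    for (size_t i = ySize; i + 1 < end; i += 2) {
        std::swap(frame[i], frame[i + 1]);
    }
}
```

For a 1920x1080 frame, `nv12FrameSize` gives 3110400 bytes.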

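```cpp
int RgaCropScale::rga_scale_crop(
        int src_width, int src_height,
        unsigned long src_fd, int src_format, unsigned long dst_fd,  // the NV12 caller passes a virtual address as src_fd
        int dst_width, int dst_height,
        int zoom_val, bool mirror, bool isNeedCrop,
        bool isDstNV21, bool is16Align, bool isYuyvFormat)
{
    int ret = 0;
    rga_info_t src, dst;
    int zoom_cropW, zoom_cropH;
    int ratio = 0;
    ...
    // My image needed flipping, so this is where I changed it.
    // The NV12 path passes mirror = false, so the else branch applies:
    // rotating by 180 degrees turns the upside-down frame the right way up.
    if (mirror)
        src.rotation = HAL_TRANSFORM_ROT_90; //HAL_TRANSFORM_ROT_
    else
        src.rotation = HAL_TRANSFORM_ROT_180;
    ...
}
```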
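Why `HAL_TRANSFORM_ROT_180` fixes an upside-down picture: in Android's `system/graphics.h`, `HAL_TRANSFORM_FLIP_H` is `0x01`, `HAL_TRANSFORM_FLIP_V` is `0x02`, and `HAL_TRANSFORM_ROT_180` is `0x03`, i.e. both flips at once. A true left-right mirror would be `FLIP_H` alone. The sketch below applies those flag values to a single-plane image on the CPU to show the effect; `applyFlips` and the `k*` constants are hypothetical (the constants just copy the flag values), RGA does the real work in hardware, and 90/270-degree rotations, which also swap width and height, are deliberately not handled.

```cpp
#include <cstdint>
#include <vector>

// Same values as Android's HAL_TRANSFORM_FLIP_H / FLIP_V / ROT_180.
constexpr int kFlipH = 0x01;
constexpr int kFlipV = 0x02;
constexpr int kRot180 = kFlipH | kFlipV;  // == 0x03

// Apply FLIP_H, FLIP_V or ROT_180 to a single-plane width x height image.
// Any ROT_90 bit (0x04) in `transform` is ignored by this sketch.
std::vector<uint8_t> applyFlips(const std::vector<uint8_t>& src,
                                int width, int height, int transform) {
    std::vector<uint8_t> dst(src.size());
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const int sx = (transform & kFlipH) ? width - 1 - x : x;
            const int sy = (transform & kFlipV) ? height - 1 - y : y;
            dst[y * width + x] = src[sy * width + sx];
        }
    }
    return dst;
}
```

With a 2x2 image `{1, 2, 3, 4}`, `kFlipH` gives `{2, 1, 4, 3}`, `kFlipV` gives `{3, 4, 1, 2}`, and `kRot180` gives `{4, 3, 2, 1}`.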