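inotify and File Synchronization

Preface

File synchronization can be done with rsync, either between a local host and a remote host or between different paths on the same machine.

But as a C developer, I would rather build my own wheel. Let's go.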

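Preparation

Project requirements

- Only files directly under one path are synchronized; subdirectories are excluded.

- All files under the path are small, and only full (whole-file) sync is needed.

- Files are not created without bound; file contents change many times, and every change rewrites the whole file.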

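Technology choices (2021/05/25)

- Use inotify to watch for file modifications in the directory. Only the directory's own files are watched, not its subdirectories, so no recursive watch is needed.

- The server watches the directory and sends the data; the client receives the data and writes it to disk.

- Server and client communicate in a push-pull model, i.e. the server pushes data and the client pulls it, over a custom protocol loosely modeled on WebSocket.

- Each client, and how far each client has synced, is recorded in sqlite3 for persistence.

- Use Python's asynchronous framework, asyncio.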

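Writing the project (2021/05/26)

One-to-many file synchronization basically works now.

Rethinking, bug fixes, and optimization (2021/05/27-2021/05/28)

- The tables went back and forth through several revisions; this is the final schema: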

CREATE TABLE IF NOT EXISTS FILE_INFO (FILE_NAME TEXT NOT NULL, FILE_TIME INTEGER NOT NULL, COUNTER INTEGER NOT NULL UNIQUE, PRIMARY KEY(FILE_NAME));

CREATE INDEX IF NOT EXISTS COUNTER_INDEX ON FILE_INFO (COUNTER);

CREATE INDEX IF NOT EXISTS FILE_TIME_INDEX ON FILE_INFO (FILE_TIME);

CREATE TABLE IF NOT EXISTS USER (NAME TEXT NOT NULL, FILE_TIME INTEGER, COUNTER INTEGER, PRIMARY KEY(NAME));

Two tables: one records file information, with an index on every column; the other records user (client) information.
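To make the role of COUNTER concrete, here is a minimal sketch, assuming an in-memory database and two hypothetical helpers (`record_file`, `next_file`) that are not part of the original server. COUNTER behaves like a position in a change log: a client that remembers the last counter it received can resume from there. The sketch uses parameterized queries and `INSERT OR REPLACE` rather than the update-then-insert approach in the server code.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS FILE_INFO (
    FILE_NAME TEXT NOT NULL,
    FILE_TIME INTEGER NOT NULL,
    COUNTER   INTEGER NOT NULL UNIQUE,
    PRIMARY KEY(FILE_NAME)
);
CREATE INDEX IF NOT EXISTS FILE_TIME_INDEX ON FILE_INFO (FILE_TIME);
"""

def record_file(conn, file_name, file_time, counter):
    """Insert the row for file_name, or overwrite it if it already exists."""
    conn.execute(
        "INSERT OR REPLACE INTO FILE_INFO (FILE_NAME, FILE_TIME, COUNTER) "
        "VALUES (?, ?, ?)",
        (file_name, file_time, counter),
    )
    conn.commit()

def next_file(conn, counter):
    """Return the first row whose COUNTER is greater than `counter`, or None."""
    return conn.execute(
        "SELECT FILE_NAME, FILE_TIME, COUNTER FROM FILE_INFO "
        "WHERE COUNTER > ? ORDER BY COUNTER LIMIT 1",
        (counter,),
    ).fetchone()

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
record_file(conn, "tmp/a", 100, 1)
record_file(conn, "tmp/b", 200, 2)
record_file(conn, "tmp/a", 300, 3)  # tmp/a modified again: its row moves to counter 3

# A client that has seen nothing yet (counter 0) walks the log in order.
rows = []
position = 0
while (row := next_file(conn, position)) is not None:
    rows.append(row)
    position = row[2]
print(rows)
```

Because a modified file gets a fresh counter, a client only ever receives the latest version of each file, in the order the changes happened.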

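- The wire protocol looks like this:

# Client registers

user_name\r\n

# Server accepts the registration

# Server sends a file

filename\r\n

filetime\r\n

filesize\r\n

filedata\r\n

# Client acknowledges

filename\r\n

filetime\r\n

That is all there is for now; some of these fields could still be trimmed.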

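When using asyncio.StreamWriter, a tcpdump capture showed that the data was actually being sent as separate packets:

# ...

writer.write(filename)

writer.write(b'\r\n')

writer.write(filetime)

writer.write(b'\r\n')

# ...

await writer.drain()

Now everything is concatenated into one large buffer and sent with a single write: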

# ...

data = '{0}\r\n{1}\r\n{2}\r\n'.format(file_name,file_time,file_size).encode()

# ...

writer.write(data)

await writer.drain()
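The single-buffer approach can be sketched as a small encoder. This is a minimal sketch, assuming a hypothetical helper named `encode_frame`; the field order follows the protocol described above, and the size field is taken from the payload itself so the two cannot disagree.

```python
def encode_frame(file_name: str, file_time: int, payload: bytes) -> bytes:
    """Build one complete frame: three header lines, the payload, a trailing CRLF."""
    header = "{0}\r\n{1}\r\n{2}\r\n".format(file_name, file_time, len(payload)).encode()
    return header + payload + b"\r\n"

frame = encode_frame("tmp/aaaaaa", 1622000000000000000, b"aaaaaa\n")
print(frame)
```

The caller then does one `writer.write(frame)` followed by `await writer.drain()`. Handing the transport a single buffer avoids one small send per field, although how TCP finally segments the bytes is still up to the kernel.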

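- Persistence has to be handled for both normal and abnormal program exit.

- Every inotify event triggers a database update that records the file. While the server is down, however, new files go unrecorded, so on startup the server has to scan the path for files it has not seen;

- Originally the database was updated after every sync with a client; since this is only file sync, each client's progress is now written out once per second instead;

- Expired file records are purged on a schedule: data older than one day no longer needs syncing, so a timed task deletes those records from the database.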
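The startup scan from the list above can be sketched as follows. This is a minimal sketch, assuming a hypothetical helper `files_newer_than` and a saved "last seen" modification time in nanoseconds; it reads `st_mtime_ns` directly instead of multiplying the float `st_mtime`, which avoids rounding.

```python
import os
import tempfile

def files_newer_than(directory, last_mtime_ns):
    """Return (mtime_ns, path) for regular files directly inside `directory`
    that were modified after last_mtime_ns, oldest first. Subdirectories are skipped."""
    found = []
    with os.scandir(directory) as entries:
        for entry in entries:
            if not entry.is_file():
                continue
            mtime_ns = entry.stat().st_mtime_ns
            if mtime_ns > last_mtime_ns:
                found.append((mtime_ns, entry.path))
    return sorted(found)

# Demo: one file older than the saved timestamp, one newer, plus a subdirectory.
with tempfile.TemporaryDirectory() as d:
    old = os.path.join(d, "old.txt")
    new = os.path.join(d, "new.txt")
    for path in (old, new):
        with open(path, "w") as f:
            f.write("x")
    os.mkdir(os.path.join(d, "sub"))
    os.utime(old, ns=(1_000_000_000, 1_000_000_000))
    os.utime(new, ns=(3_000_000_000, 3_000_000_000))
    missed = files_newer_than(d, last_mtime_ns=2_000_000_000)
    names = [os.path.basename(path) for _, path in missed]
    print(names)
```

Sorting by modification time means the missed files are assigned counters in the order they were written, which matches what inotify would have produced had the server been running.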

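- Keep-alive: the server spends all its time waiting for inotify events, so it cannot notice when a client connection has dropped and keeps holding resources for it. A keep-alive mechanism is therefore needed, in which the server periodically sends the client a heartbeat packet (a frame with an empty file name, time 0, and size 0):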

\r\n

0\r\n

0\r\n

\r\n
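On the receiving side, a frame reader has to tell a heartbeat apart from a real file. The following is a minimal sketch in Python, assuming a hypothetical coroutine `read_frame`; the real client is a separate program, so this only illustrates the framing. The payload is read by length rather than by line, so file contents may themselves contain `\r\n`.

```python
import asyncio

async def read_frame(reader):
    """Read one frame. Return (file_name, file_time, payload),
    or None when the frame is a keep-alive (empty name, size 0)."""
    file_name = (await reader.readline()).decode().rstrip("\r\n")
    file_time = int((await reader.readline()).decode())
    file_size = int((await reader.readline()).decode())
    payload = await reader.readexactly(file_size)
    await reader.readexactly(2)  # consume the trailing \r\n
    if file_name == "" and file_size == 0:
        return None
    return file_name, file_time, payload

async def demo():
    # Feed a keep-alive followed by one real frame into an in-memory reader.
    reader = asyncio.StreamReader()
    reader.feed_data(b"\r\n0\r\n0\r\n\r\n")
    reader.feed_data(b"tmp/a\r\n42\r\n3\r\nabc\r\n")
    reader.feed_eof()
    return [await read_frame(reader), await read_frame(reader)]

frames = asyncio.run(demo())
print(frames)
```

In both cases the client would answer with the file name and file time, each terminated by `\r\n`, since the server waits for two lines after every frame.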

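- Register signal handlers

- Asynchronous file reads and writes

async with aiofiles.open(file_name,'rb') as fd:

    data += await fd.read()

The asynchronous file read turned out to be very slow in practice, so I switched back to a synchronous read. There is also sendfile (zero-copy), but I have not used it yet.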
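For reference, the standard library exposes sendfile through `socket.sendfile()`, which uses `os.sendfile` where available and otherwise falls back to plain `send()`. The following is a minimal sketch on a blocking socket pair, assuming a Unix-like system and a hypothetical helper `send_file`; it is not wired into the asyncio server (asyncio has its own `loop.sendfile()` for transports).

```python
import socket
import tempfile

def send_file(sock, path):
    """Send the whole file over a blocking stream socket; return the bytes sent."""
    with open(path, "rb") as f:
        return sock.sendfile(f)

# Demo over a local socket pair instead of a real client connection.
a, b = socket.socketpair()
with tempfile.NamedTemporaryFile() as tmp:
    tmp.write(b"hello inotify\n")
    tmp.flush()
    sent = send_file(a, tmp.name)
a.close()
received = b.recv(1024)
b.close()
print(sent, received)
```

With this approach the header lines would still be written normally, and only the file body would go through sendfile. For the small files in this project the gain is probably negligible.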

(There should be a link here.)

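- sqlite3 tuning: besides the settings below, SQL can also be committed in transactions. I do not use the database heavily here, so I left that alone.

-- Do not sync to disk after handing the data to the operating system

PRAGMA synchronous = OFF;

-- Turn off the journal, so there is no rollback or atomic commit

PRAGMA journal_mode = OFF;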
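The transaction approach mentioned above could look like this. It is a minimal sketch, assuming a hypothetical helper `save_progress` that writes every client's progress in one transaction instead of committing once per row. The demo uses an in-memory database with default settings; note that with `journal_mode = OFF`, SQLite documents rollback as undefined, so the rollback half of `with conn:` should not be relied on in that mode.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE IF NOT EXISTS USER "
    "(NAME TEXT NOT NULL, FILE_TIME INTEGER, COUNTER INTEGER, PRIMARY KEY(NAME))"
)

def save_progress(conn, progress):
    """Persist {name: (file_time, counter)} for all clients in a single transaction."""
    rows = [(name, file_time, counter)
            for name, (file_time, counter) in progress.items()]
    with conn:  # commits once on success, rolls back if an exception escapes
        conn.executemany(
            "INSERT OR REPLACE INTO USER (NAME, FILE_TIME, COUNTER) VALUES (?, ?, ?)",
            rows,
        )

save_progress(conn, {"client-a": (100, 1), "client-b": (200, 2)})
save_progress(conn, {"client-a": (300, 3)})
saved = conn.execute(
    "SELECT NAME, FILE_TIME, COUNTER FROM USER ORDER BY NAME"
).fetchall()
print(saved)
```

One commit per batch matters most when there are many clients, since each commit is a separate write to the database file.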

(There should be a link here.)

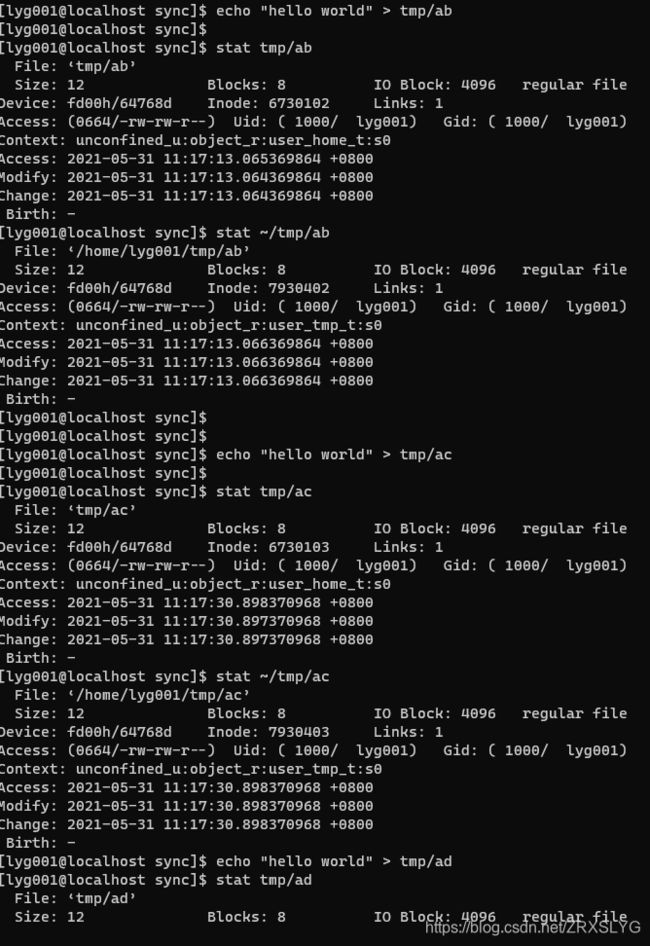

- inotify parameter settings

sysctl -a | grep inotify

>> fs.inotify.max_queued_events = 16384

>> fs.inotify.max_user_instances = 128

>> fs.inotify.max_user_watches = 8192

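When files are created too quickly and inotify's limits are exceeded, events start getting lost. I tried this on an Optane drive; once I reduced the number of files in the stress test, the problem went away.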
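The same limits can be read from a program through procfs, which is handy for logging them at server startup. This is a minimal sketch, assuming Linux and a hypothetical helper `read_inotify_limits`; on systems without `/proc/sys/fs/inotify` every value comes back as `None`.

```python
from pathlib import Path

INOTIFY_DIR = Path("/proc/sys/fs/inotify")
LIMIT_NAMES = ("max_queued_events", "max_user_instances", "max_user_watches")

def read_inotify_limits(base=INOTIFY_DIR):
    """Return {limit_name: int or None} for the three inotify sysctl values."""
    limits = {}
    for name in LIMIT_NAMES:
        try:
            limits[name] = int((base / name).read_text())
        except (OSError, ValueError):
            limits[name] = None  # not Linux, or the file is unreadable
    return limits

limits = read_inotify_limits()
print(limits)
```

The limit most relevant to lost events is `max_queued_events`: when the event queue fills up, the kernel drops further events and reports an `IN_Q_OVERFLOW` event. Raising the value with `sysctl -w fs.inotify.max_queued_events=<n>` is one option; rescanning the directory after an overflow is another.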

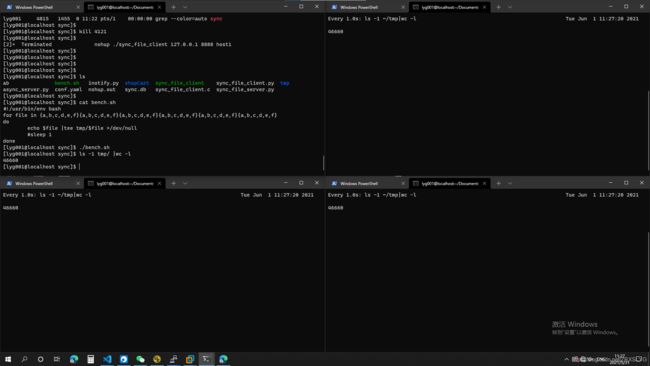

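Final result

#!/usr/bin/env bash

for file in {a,b,c,d,e,f}{a,b,c,d,e,f}{a,b,c,d,e,f}{a,b,c,d,e,f}{a,b,c,d,e,f}{a,b,c,d,e,f}
do
    echo $file |tee tmp/$file >/dev/null
    #sleep 1
done

My server uses a mechanical hard disk; two of the clients use SSDs and one uses a mechanical hard disk. Sync speed is limited by how fast the server side can create the files and write them.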

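Afterword

The project still has room for optimization:

- sendfile could be used for large files;

- implement incremental sync;

- add a file cache: when several clients sync the same file, read it into memory once and then send it to each client;

- would Go do better?

- no budget.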
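The file-cache idea from the list above can be sketched as a cache keyed by path and modification time. This is a minimal sketch, assuming a hypothetical `FileCache` class with no eviction; the `disk_reads` counter exists only to make the effect visible in the demo.

```python
import os
import tempfile

class FileCache:
    """Keep file contents in memory until the file's mtime changes."""

    def __init__(self):
        self._entries = {}   # path -> (mtime_ns, data)
        self.disk_reads = 0  # how many times a file was actually read from disk

    def get(self, path):
        mtime_ns = os.stat(path).st_mtime_ns
        cached = self._entries.get(path)
        if cached is not None and cached[0] == mtime_ns:
            return cached[1]
        with open(path, "rb") as f:
            data = f.read()
        self.disk_reads += 1
        self._entries[path] = (mtime_ns, data)
        return data

# Demo: three "clients" ask for the same file, then the file changes.
cache = FileCache()
with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "a")
    with open(path, "wb") as f:
        f.write(b"v1")
    os.utime(path, ns=(1_000_000_000, 1_000_000_000))
    first = [cache.get(path) for _ in range(3)]
    reads_after_first = cache.disk_reads

    with open(path, "wb") as f:
        f.write(b"v2")
    os.utime(path, ns=(2_000_000_000, 2_000_000_000))
    second = cache.get(path)
print(first, reads_after_first, second, cache.disk_reads)
```

Since old records are already purged daily, the same schedule would be a natural place to drop stale cache entries.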

Code

Server code

import asyncio
import pyinotify
import aiofiles
import sqlite3
import time
import datetime
import os
from sys import argv
import signal
from yaml import load
try:
    from yaml import CLoader as Loader
except ImportError:
    from yaml import Loader

db = None
counter = 1
timer = 0
queue_list = []   # one queue per client, used to wake it up. True: inotify fired, False: keepalive
client_name = {}  # dict that keeps client names unique, client_name[name] = (timer,counter)
default_name = 'root@@123_'

def create_db():
    global db
    cursor = db.cursor()
    # Do not sync to disk after handing the data to the operating system
    cursor.execute('PRAGMA synchronous = OFF;')
    # Turn off the journal, so there is no rollback or atomic commit
    cursor.execute('PRAGMA journal_mode = OFF;')
    sql = 'CREATE TABLE IF NOT EXISTS FILE_INFO (FILE_NAME TEXT NOT NULL, FILE_TIME INTEGER NOT NULL, COUNTER INTEGER NOT NULL UNIQUE, PRIMARY KEY(FILE_NAME));'
    print(sql)
    cursor.execute(sql)
    sql = 'CREATE INDEX IF NOT EXISTS COUNTER_INDEX ON FILE_INFO (COUNTER);'
    print(sql)
    cursor.execute(sql)
    sql = 'CREATE INDEX IF NOT EXISTS FILE_TIME_INDEX ON FILE_INFO (FILE_TIME);'
    print(sql)
    cursor.execute(sql)
    sql = 'CREATE TABLE IF NOT EXISTS USER (NAME TEXT NOT NULL, FILE_TIME INTEGER, COUNTER INTEGER, PRIMARY KEY(NAME));'
    print(sql)
    cursor.execute(sql)
    db.commit()
    cursor.close()

def update_db_file(filename,file_time,counter):
    global db
    rowcount = 0
    cursor = db.cursor()
    # Parameterized statements, so a quote in a file name cannot break the SQL
    sql_insert = 'INSERT INTO FILE_INFO (FILE_NAME,FILE_TIME,COUNTER) VALUES(?,?,?);'
    sql_update = 'UPDATE FILE_INFO set FILE_TIME=?,COUNTER=? where FILE_NAME=?;'
    # Try UPDATE first; if no row was touched, fall through to INSERT
    try:
        cursor.execute(sql_update,(file_time,counter,filename))
        db.commit()
        rowcount = cursor.rowcount
    except Exception as e:
        #print(e)
        rowcount=0
    if rowcount==1:
        cursor.close()
        return cursor
    try:
        cursor.execute(sql_insert,(filename,file_time,counter))
        db.commit()
        rowcount = cursor.rowcount
    except Exception as e:
        #print(e)
        rowcount=0
    cursor.close()
    return cursor

def select_db_file_next(counter):
    # Return the first file record after `counter`, or None if there is none
    global db
    cursor = db.cursor()
    sql = 'SELECT FILE_NAME, FILE_TIME, COUNTER FROM FILE_INFO WHERE COUNTER > ? ORDER BY COUNTER LIMIT 1;'
    cursor.execute(sql,(counter,))
    column = cursor.fetchone()
    cursor.close()
    return column

def delete_db_file_timeout(timer):
    # Delete file records whose FILE_TIME is older than `timer`
    global db
    cursor = db.cursor()
    sql = 'DELETE FROM FILE_INFO WHERE FILE_TIME < ?;'
    cursor.execute(sql,(timer,))
    db.commit()
    cursor.close()

def select_db_name(name):
    # Return (COUNTER, FILE_TIME) for a client, or (0, 0) for an unknown client
    global db
    cursor = db.cursor()
    # `name` comes from the network, so it is passed as a parameter
    sql = 'SELECT COUNTER,FILE_TIME FROM USER WHERE NAME = ?;'
    cursor.execute(sql,(name,))
    column = cursor.fetchone()
    cursor.close()
    if column is None:
        return 0,0
    return column

def update_db_name_counter(name,file_time,counter):
    global db
    rowcount = 0
    cursor = db.cursor()
    # Parameterized statements: `name` is supplied by the client
    sql_insert = 'INSERT INTO USER (NAME,FILE_TIME,COUNTER) VALUES(?,?,?);'
    sql_update = 'UPDATE USER set FILE_TIME=?, COUNTER=? where NAME=?;'
    # Try UPDATE first; if no row was touched, fall through to INSERT
    try:
        cursor.execute(sql_update,(file_time,counter,name))
        db.commit()
        rowcount = cursor.rowcount
    except Exception as e:
        #print(e)
        rowcount=0
    if rowcount==1:
        cursor.close()
        return rowcount
    try:
        cursor.execute(sql_insert,(name,file_time,counter))
        db.commit()
        rowcount = cursor.rowcount
    except Exception as e:
        #print(e)
        rowcount=0
    cursor.close()
    return rowcount

class MyEventHandler(pyinotify.ProcessEvent):
    def process_IN_MODIFY(self,event):
        global counter,timer
        #print('MODIFY',event.pathname)
        if not os.path.isfile(event.pathname):
            return
        file_name = event.pathname
        timer = round(os.stat(event.pathname).st_mtime * 1000000000)
        update_db_file(file_name,timer,counter)
        counter+=1
        client_name[default_name] = (timer,counter)
        # Wake up every client. The try sits inside the loop so that one
        # full queue does not stop the remaining clients from being notified.
        for queue in queue_list:
            try:
                queue.put_nowait(True)
            except Exception as e:
                pass

async def client_connected_cb(reader,writer):
    global client_name,queue_list
    name = '' # client name
    queue = asyncio.Queue(maxsize = 2)
    file_name = ''
    file_time = 0
    file_size = 0
    counter = 0
    flag = True
    try:
        name = await reader.readline()
        name = name.decode().strip()
        print('name {name} start sync'.format(name=name))
    except Exception as e:
        writer.close()
        return
    # Reject a client whose name is already connected
    if name in client_name:
        await asyncio.sleep(5)
        writer.close()
        return
    # Look up this client's record in the database by name
    counter,file_time = select_db_name(name)
    client_name[name] = (file_time,counter)
    queue_list.append(queue)
    try:
        while True:
            while True:
                column = select_db_file_next(counter)
                # No newer data in the database: go wait on the queue
                if column is None:
                    break
                file_name,file_time,counter = column
                #print('name {name}, file_name {file_name}, file_time {file_time}'.format(name=name,file_name=file_name,file_time=file_time))
                file_size = os.stat(file_name).st_size
                data = '{0}\r\n{1}\r\n{2}\r\n'.format(file_name,file_time,file_size).encode()
                if file_size>0:
                    # The aiofiles version was too slow, so the read is synchronous:
                    #   async with aiofiles.open(file_name,'rb') as fd:
                    #       data += await fd.read()
                    with open(file_name,'rb') as fd:
                        data += fd.read(file_size)
                data += b'\r\n'
                writer.write(data)
                await writer.drain()
                # Client acknowledges with two lines: filename, filetime
                await reader.readline()
                await reader.readline()
                #update_db_name_counter(name,file_time,counter)
                client_name[name] = (file_time,counter)
            while True:
                # The queue yields True for an inotify event
                # and False for a keepalive tick
                flag = await queue.get()
                if flag:
                    break
                # keepalive
                writer.write(b'\r\n0\r\n0\r\n\r\n')
                await writer.drain()
                await reader.readline()
                await reader.readline()
    except Exception as e:
        print('client_connected_cb',e)
    writer.close()
    update_db_name_counter(name,file_time,counter)
    del client_name[name]
    queue_list.remove(queue)
    print('name {name} finish sync'.format(name=name))

async def server_handler(port):
    server = await asyncio.start_server(client_connected_cb,'0.0.0.0',port)
    await server.serve_forever()

async def keepalive(seconds):
    # Periodic heartbeat check
    while True:
        await asyncio.sleep(seconds)
        # The try sits inside the loop so that one full queue
        # does not stop the remaining clients from getting a heartbeat.
        for queue in queue_list:
            try:
                queue.put_nowait(False)
            except Exception as e:
                pass

async def clean_db_timeout():
    # Periodically purge expired records from the database
    # Runs at 2 a.m. by default
    # Removes data older than 1 day
    while True:
        t = time.time()
        t_datetime = datetime.datetime.fromtimestamp(t)
        t2 = t + 86400
        t2_datetime = datetime.datetime.fromtimestamp(t2)
        t3_datetime = datetime.datetime(year=t2_datetime.year,month=t2_datetime.month,day=t2_datetime.day,hour=2)
        diff_datetime = t3_datetime-t_datetime
        diff_second = diff_datetime.total_seconds()
        # Sleep until 2 a.m. tomorrow
        await asyncio.sleep(diff_second)
        now = time.time_ns()
        # Delete db records older than 1 day (86400 s in nanoseconds)
        now -= 86400000000000
        delete_db_file_timeout(now)

async def db_dump():
    # Periodic persistence: write every client's progress once per second
    global db,client_name
    while True:
        await asyncio.sleep(1)
        for name in client_name:
            timer,counter = client_name[name]
            update_db_name_counter(name,timer,counter)

def signal_handler(signum, frame):
    # On SIGTERM/SIGINT, flush every client's progress before exiting
    global db,client_name
    for name in client_name:
        timer,counter = client_name[name]
        update_db_name_counter(name,timer,counter)
    db.close()
    exit(0)

if __name__ == '__main__':
    if len(argv) < 2:
        print("python3 sync_file_server.py conf.yaml")
        exit(0)
    conf = argv[1]
    conf_data = {}
    with open(conf,'r',encoding='UTF-8') as conf_fd:
        conf_data = load(conf_fd.read(),Loader=Loader)
    db = conf_data['db']
    port = conf_data['port']
    notify_path = conf_data['notify_path']
    second = conf_data['keep_alive']
    print('start server port{port}, watch path{notify_path}, db{db}, keep_alive{second}'.
          format(port=port,notify_path=notify_path,db=db,second=second))
    db = sqlite3.connect(db)
    create_db()
    # Load the counter and timer saved under default_name
    counter,timer = select_db_name(default_name)
    if counter==0:
        counter += 1
    client_name[default_name] = (timer,counter)
    print('default_name={default_name}, counter={counter}, timer={timer}'.format(default_name=default_name,counter=counter,timer=timer))
    signal.signal(signal.SIGTERM,signal_handler)
    signal.signal(signal.SIGINT,signal_handler)
    # Check for new files that were never recorded in the database
    timer_tmp = timer
    for file in os.listdir(notify_path):
        file = notify_path + '/' + file
        if not os.path.isfile(file):
            continue
        file_time = round(os.stat(file).st_mtime * 1000000000)
        if file_time > timer: # strictly greater; in extreme cases two files can share the same time
            update_db_file(file,file_time,counter)
            counter += 1
            timer_tmp = max(timer_tmp,file_time)
    timer = timer_tmp
    client_name[default_name] = (timer,counter)
    # Create the loop explicitly; relying on get_event_loop() to create one is deprecated
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)
    # Register the inotify events on the loop
    wm = pyinotify.WatchManager()
    notifier = pyinotify.AsyncioNotifier(wm, loop, default_proc_fun=MyEventHandler())
    wm.add_watch(notify_path, pyinotify.IN_MODIFY)
    # Register the server and the timed jobs on the loop.
    # They are wrapped in tasks because asyncio.wait() no longer accepts
    # bare coroutines since Python 3.11.
    tasks = [
        loop.create_task(server_handler(port)),
        loop.create_task(keepalive(second)),
        loop.create_task(clean_db_timeout()),
        loop.create_task(db_dump()),
    ]
    loop.run_until_complete(asyncio.wait(tasks))

Client code

#include