Optional Lab: Linear Regression using Scikit-Learn Ⅰ

scikit-learn is an open-source, commercially usable machine learning toolkit. It contains implementations of many of the algorithms used in this course.

Goals

In this lab you will use scikit-learn to implement linear regression using gradient descent.

Tools

You will utilize functions from scikit-learn as well as matplotlib and NumPy.

import numpy as np

np.set_printoptions(precision=2)

from sklearn.linear_model import LinearRegression, SGDRegressor

from sklearn.preprocessing import StandardScaler

from lab_utils_multi import load_house_data

import matplotlib.pyplot as plt

dlblue = '#0096ff'; dlorange = '#FF9300'; dldarkred='#C00000'; dlmagenta='#FF40FF'; dlpurple='#7030A0';

plt.style.use('./deeplearning.mplstyle')

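np.set_printoptions() controls how NumPy prints arrays and floating-point numbers, including how many decimal places are shown. Its most commonly used parameters are listed below (the full signature has a few more, such as edgeitems, sign and floatmode):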

np.set_printoptions(precision=None, threshold=None, linewidth=None, suppress=None, formatter=None)

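precision: the number of digits printed after the decimal point for floats. Default 8.

threshold: the total number of array elements above which NumPy summarizes the output. If an array has more elements than threshold, only the first and last few entries along each axis are printed (3 on each side by default, controlled by edgeitems, so 6 in total) with an ellipsis in between; if it has threshold elements or fewer, every element is printed. Default 1000. Setting it to sys.maxsize (requires import sys) prints all elements.

linewidth: the maximum number of characters per line (default 75); longer output wraps onto the next line.

suppress: if True, floats are always printed in fixed-point notation rather than scientific notation, and values that are zero at the current precision print as zero. If False (the default), NumPy switches to scientific notation when the smallest absolute value is below 1e-4 or the ratio of the largest to the smallest absolute value exceeds 1e3.

formatter: a dict mapping type keys (for example 'float' or 'int') to functions that turn each element into a string, for fully custom output.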
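A short sketch of these options in action. It uses np.printoptions, the context-manager counterpart of np.set_printoptions: it accepts the same keyword arguments and restores the previous settings when the with-block exits, so trying it out does not undo the precision=2 setting used in the rest of this lab. The array values are arbitrary illustration values.

```python
import sys
import numpy as np

# Arbitrary illustration values: one tiny entry and one large entry
x = np.array([3.14159265, 0.00001234, 250.0])

with np.printoptions(precision=2):
    # suppress defaults to False and the smallest value is < 1e-4,
    # so NumPy switches to scientific notation
    print(x)

with np.printoptions(precision=2, suppress=True):
    # fixed-point notation; 0.00001234 rounds to zero at 2 decimals
    print(x)

big = np.arange(2000)
with np.printoptions(threshold=1000):
    # 2000 elements > threshold, so only the first and last 3 are shown
    print(big)

with np.printoptions(threshold=sys.maxsize, linewidth=120):
    # never summarize; wrap lines at 120 characters
    full_text = np.array2string(big)

with np.printoptions(formatter={"float": lambda v: f"{v:8.3f}"}):
    # custom per-element format for float arrays
    print(x)
```

np.array2string picks up the active print options, which is why full_text contains every element.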

Gradient Descent

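Scikit-learn has a gradient descent regression model, sklearn.linear_model.SGDRegressor. "SGD" stands for stochastic gradient descent: instead of computing the gradient over the whole training set, it updates the parameters after each individual training example. Like your previous implementation of gradient descent, this model performs best with normalized inputs.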
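To make the "stochastic" part concrete, here is a minimal NumPy sketch of per-example SGD for linear regression with squared-error loss. It is a simplification, not scikit-learn's implementation: it uses a constant learning rate and no regularization, whereas SGDRegressor by default adds a small L2 penalty (alpha=0.0001) and decays the learning rate (learning_rate='invscaling'). The function name sgd_linear_regression and the synthetic data are hypothetical.

```python
import numpy as np


def sgd_linear_regression(X, y, lr=0.01, epochs=50, seed=0):
    """Per-example SGD on the squared error 0.5 * (x @ w + b - y) ** 2.

    Hypothetical helper: constant learning rate and no regularization,
    unlike SGDRegressor's defaults.
    """
    rng = np.random.default_rng(seed)
    m, n = X.shape
    w = np.zeros(n)
    b = 0.0
    for _ in range(epochs):
        for i in rng.permutation(m):     # visit examples in a new random order each epoch
            err = X[i] @ w + b - y[i]    # prediction error on a single example
            w -= lr * err * X[i]         # gradient of 0.5 * err**2 with respect to w
            b -= lr * err                # gradient of 0.5 * err**2 with respect to b
    return w, b


# Synthetic, noise-free data: y = 3*x0 - 2*x1 + 5 (made up for illustration)
rng = np.random.default_rng(1)
X = rng.standard_normal((200, 2))
y = X @ np.array([3.0, -2.0]) + 5.0

w, b = sgd_linear_regression(X, y)
print(w, b)  # close to [3, -2] and 5
```

Each inner-loop step uses the gradient of a single example, which is noisy but cheap; batch gradient descent from the earlier labs would instead average the gradient over all m examples before each update.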

sklearn.preprocessing.StandardScaler performs z-score normalization, as in a previous lab. Here it is referred to as the "standard score".
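A sketch showing that StandardScaler computes the same z-score as doing it by hand, (x - mean) / std per column, using the population standard deviation (ddof=0). The small matrix is made up for illustration and is not the lab's data set.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Illustrative values only: rows are examples, columns are features
X = np.array([[2100.0, 5.0, 1.0, 45.0],
              [1400.0, 3.0, 2.0, 40.0],
              [ 850.0, 2.0, 1.0, 35.0],
              [1500.0, 3.0, 2.0, 20.0]])

# Manual z-score: subtract the column mean, divide by the column standard deviation
mu = X.mean(axis=0)
sigma = X.std(axis=0)              # population std (ddof=0), as StandardScaler uses
X_manual = (X - mu) / sigma

scaler = StandardScaler()
X_sklearn = scaler.fit_transform(X)  # learns mean_ and scale_, then transforms

print(np.allclose(X_manual, X_sklearn))                                  # True
print(np.allclose(scaler.mean_, mu), np.allclose(scaler.scale_, sigma))  # True True

# New data must be scaled with the statistics learned from the training set
x_new = np.array([[1200.0, 3.0, 1.0, 40.0]])
print(scaler.transform(x_new))
```

Calling transform (not fit_transform) on new examples keeps them on the same scale the model was trained on.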

Load the data set

X_train, y_train = load_house_data()

X_features = ['size(sqft)','bedrooms','floors','age']

Scale/Normalize the training data

scaler = StandardScaler()

X_norm = scaler.fit_transform(X_train)

print(f"Peak to Peak range by column in Raw X:{np.ptp(X_train,axis=0)}")

print(f"Peak to Peak range by column in Normalized X:{np.ptp(X_norm,axis=0)}")

Output:

Peak to Peak range by column in Raw X:[2.41e+03 4.00e+00 1.00e+00 9.50e+01]

Peak to Peak range by column in Normalized X:[5.85 6.14 2.06 3.69]
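np.ptp ("peak to peak") returns the maximum minus the minimum along an axis. Because z-score normalization divides each column by its standard deviation, each normalized range equals the raw range divided by that column's standard deviation, so the normalized output reads as "how many standard deviations each feature spans". A quick check on hypothetical data (not the lab's data set):

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical raw features on very different scales
rng = np.random.default_rng(0)
X_raw = np.column_stack([
    rng.uniform(800, 3200, size=100),            # size-like feature
    rng.integers(1, 6, size=100).astype(float),  # bedroom-count-like feature
])
X_scaled = StandardScaler().fit_transform(X_raw)

ptp_raw = np.ptp(X_raw, axis=0)
# np.ptp is simply max - min per column
print(np.allclose(ptp_raw, X_raw.max(axis=0) - X_raw.min(axis=0)))        # True
# after z-scoring, each range is measured in standard deviations
print(np.allclose(np.ptp(X_scaled, axis=0), ptp_raw / X_raw.std(axis=0)))  # True
```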

Create and fit the regression model

sgdr = SGDRegressor(max_iter=1000)

sgdr.fit(X_norm, y_train)

print(sgdr)

print(f"number of iterations completed: {sgdr.n_iter_}, number of weight updates: {sgdr.t_}")

Output:

SGDRegressor()

number of iterations completed: 122, number of weight updates: 12079.0
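The two numbers in this output are related. In SGDRegressor, one iteration (n_iter_) is one full pass over the training set, and t_ counts individual weight updates starting from 1; scikit-learn documents t_ as n_iter_ * n_samples + 1. Here 122 * 99 + 1 = 12079, which suggests the training set has 99 examples. Training stopped well before max_iter=1000 because, with the defaults tol=1e-3 and n_iter_no_change=5, fitting ends once the training loss fails to improve by at least tol for 5 consecutive epochs. The training data is shuffled each epoch, so the exact counts (and the fitted parameters) can differ between runs unless random_state is fixed. The sketch below checks the relationship on synthetic data; the data and random_state=0 are assumptions for illustration.

```python
import numpy as np
from sklearn.linear_model import SGDRegressor

# Synthetic data (made up for illustration): 99 samples, 4 standardized features
rng = np.random.default_rng(0)
X = rng.standard_normal((99, 4))
y = X @ np.array([110.0, -21.0, -32.0, -38.0]) + 363.0 + rng.normal(0, 5, 99)

sgdr = SGDRegressor(max_iter=1000, random_state=0)  # fixed seed for repeatable shuffling
sgdr.fit(X, y)

# One iteration = one pass over all samples, with one weight update per sample;
# t_ starts counting at 1
print(sgdr.n_iter_, sgdr.t_)
print(sgdr.t_ == sgdr.n_iter_ * X.shape[0] + 1)  # True
```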

View parameters

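Note that these parameters apply to the normalized input data. The fitted parameters are very close to those found in the previous lab that used this data.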

b_norm = sgdr.intercept_

w_norm = sgdr.coef_

print(f"model parameters: w: {w_norm}, b:{b_norm}")

print(f"model parameters from previous lab: w: [110.56 -21.27 -32.71 -37.97], b: 363.16")

Output:

model parameters: w: [110.13 -21.06 -32.48 -38.05], b:[363.16]

model parameters from previous lab: w: [110.56 -21.27 -32.71 -37.97], b: 363.16
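Because the model was trained on normalized features, w_norm and b_norm only apply to scaled inputs. Writing each normalized feature as (x_j - mu_j) / sigma_j and expanding the linear model gives equivalent parameters for the raw features: w_j = w_norm_j / sigma_j and b = b_norm - sum_j(w_norm_j * mu_j / sigma_j). The sketch below checks this identity using StandardScaler's fitted mean_ and scale_; the raw data and the parameter values are hypothetical stand-ins, not the lab's results.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical raw features with size-, bedroom-, floor- and age-like ranges
rng = np.random.default_rng(0)
X_raw = rng.uniform(low=[500, 1, 1, 5], high=[3000, 5, 2, 100], size=(50, 4))

scaler = StandardScaler().fit(X_raw)
mu, sigma = scaler.mean_, scaler.scale_

# Hypothetical parameters learned on the normalized features
w_norm = np.array([110.0, -21.0, -32.0, -38.0])
b_norm = 363.0

# Fold the scaling into the parameters so they apply to raw features
w_raw = w_norm / sigma
b_raw = b_norm - np.sum(w_norm * mu / sigma)

pred_from_norm = scaler.transform(X_raw) @ w_norm + b_norm
pred_from_raw = X_raw @ w_raw + b_raw
print(np.allclose(pred_from_norm, pred_from_raw))  # True
```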

Make predictions

Predict the targets of the training data, using both the predict routine and a direct computation with w and b.

# make a prediction using sgdr.predict()

y_pred_sgd = sgdr.predict(X_norm)

# make a prediction using w,b.

y_pred = np.dot(X_norm, w_norm) + b_norm

print(f"prediction using np.dot() and sgdr.predict match: {np.allclose(y_pred, y_pred_sgd)}")

print(f"Prediction on training set:\n{y_pred[:4]}" )

print(f"Target values \n{y_train[:4]}")

Output:

prediction using np.dot() and sgdr.predict match: True

Prediction on training set:

[295.19 485.88 389.58 492.04]

Target values

[300. 509.8 394. 540. ]

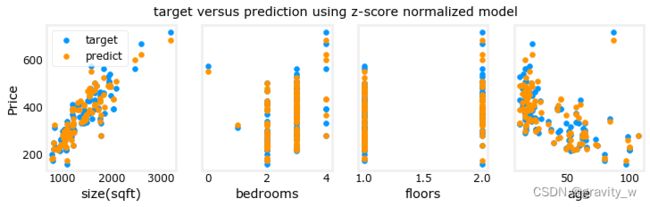

Plot Results

Plot the predictions and the targets against each original feature.

# plot predictions and targets vs original features

fig,ax=plt.subplots(1,4,figsize=(12,3),sharey=True)

for i in range(len(ax)):
    ax[i].scatter(X_train[:,i],y_train, label = 'target')
    ax[i].set_xlabel(X_features[i])
    ax[i].scatter(X_train[:,i],y_pred,color=dlorange, label = 'predict')

ax[0].set_ylabel("Price"); ax[0].legend();

fig.suptitle("target versus prediction using z-score normalized model")

plt.show()

Congratulations!

In this lab you:

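- used scikit-learn, an open-source machine learning toolkit

- implemented linear regression using the toolkit's gradient descent model and feature normalization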