Nutanix Hybrid Cloud

Flexibility

–

The findings also make it clear that enterprise IT teams highly value having the flexibility to choose the optimum IT infrastructure for each of their business applications on a dynamic basis, with 61% of respondents saying that application mobility across clouds and cloud types is “essential.” Cherry-picking infrastructure in this way to match the right resources to each workload as needs change results in a growing mixture of on- and off-prem cloud resources, a.k.a. the hybrid cloud.

Security

–

Security is driving deployment decisions, according to the research findings, and respondents overwhelmingly chose the hybrid cloud model as the one they believe to be the most secure, even over private clouds and traditional datacenters.

Expanding Cloud Options

–

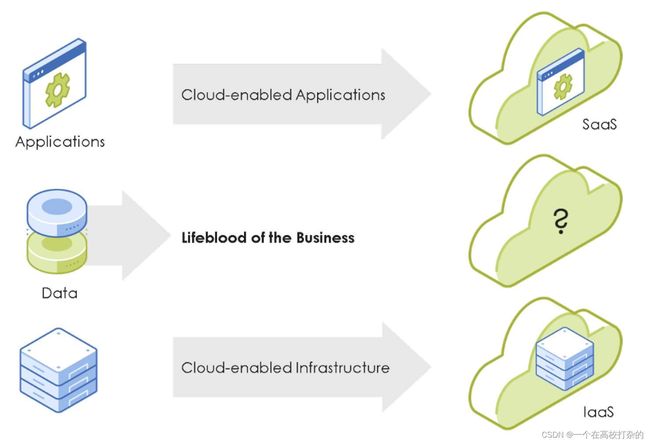

The proverbial “cloud” is no longer the simple notion it once was. There was a time when IT made a fairly straightforward decision about whether to run an application in its on-prem datacenter or in the public cloud. However, with the growth of additional cloud options, such as managed on-premises private cloud services, decision-making has become much more nuanced. Instead of facing a binary cloud-or-no-cloud choice, IT departments today more often decide which cloud(s) to use, often on an application-by-application basis.

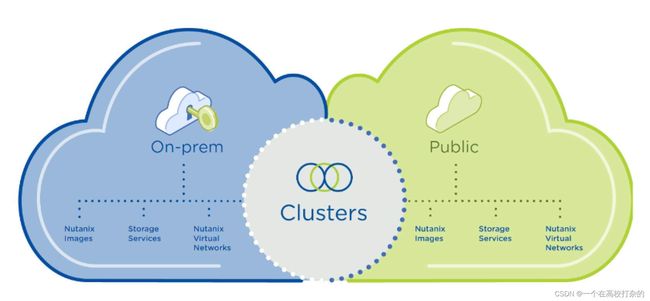

Reducing Friction in the Hybrid Cloud

Many organizations would like to operate their applications and data in a hybrid environment spanning on-prem datacenters and public clouds. However, expanding from private to public cloud environments poses many challenges, including the need to learn new technology skills, the need to rearchitect applications, and the multiple tools and silos required to manage cloud accounts.

There is a pressing need for a single platform that can span private, distributed, and public clouds so that operators can manage their traditional and modern applications using a consistent cloud platform.

Running the same platform in both clouds dramatically reduces the operational complexity of extending, bursting, or migrating your applications and data between clouds. Operators can conveniently use the same skill sets, tools, and practices used on-prem to manage applications running in public clouds such as AWS. Nutanix Clusters integrates with public cloud accounts such as AWS, so you can run applications within existing Virtual Private Clouds (VPCs), eliminating network complexity and improving performance.

Maintain cloud optionality with portable software that can move with your applications and data across clouds. With a consistent consumption model that spans private and public clouds, you can confidently plan your long-term hybrid and multicloud strategy, maximizing the benefits of each environment.

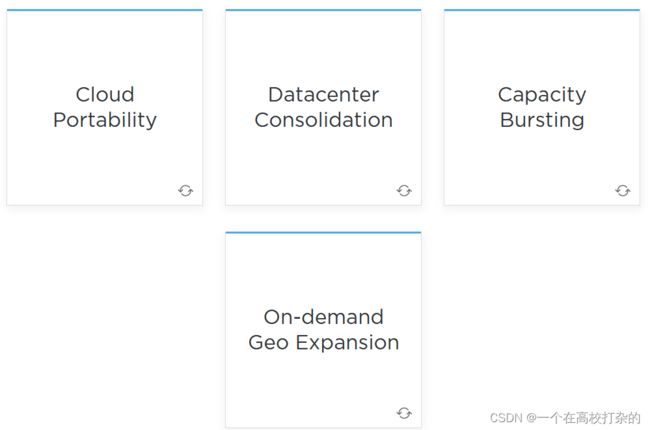

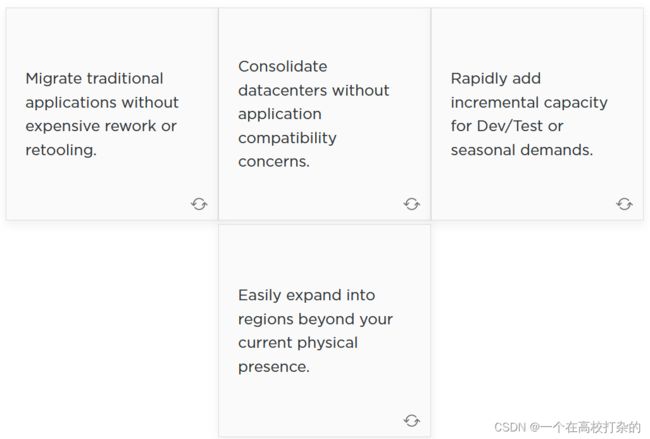

Hybrid Cloud Key Use Cases

State in Data and Applications

In a hybrid world, where cost efficiency is of utmost importance, you must distinguish between the items that must exist and persist and those that need to be used only when called upon. Items that must persist are said to “have state”: a database may be required to be online 24x7 and replicated across multiple geographies to ensure data availability and accessibility, while the application front-end used to access that data may only need to serve a given set of users during their local working hours.

In this example, the data can be considered ever-present, managed, and stateful. These data are groomed, protected, and correlated with other data sets. The application front-end, by contrast, may be ephemeral, running only the number of instances required to serve the customer’s needs, plus some spare capacity to absorb incoming sessions.

If these application instances ebb and flow with user demand, cost rises and falls with them, much like adjusting the flow of water through a faucet: you only use what you need at any given time. This optimizes cloud resource utilization, limits the size and security footprint of the environment at scale, and ultimately preserves cost efficiency for the business while still providing the desired agility.
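To make the stateful/ephemeral split concrete, here is a minimal sketch of the sizing logic, assuming a fixed, always-on database tier and a front-end that scales with concurrent sessions plus a headroom margin. The sessions-per-instance figure, headroom percentage, and replica count are hypothetical values chosen for illustration, not Nutanix or cloud-provider defaults, and this is not how any particular autoscaler is implemented.

```python
# Hypothetical sizing parameters: illustrative assumptions, not product defaults.
SESSIONS_PER_INSTANCE = 100  # concurrent sessions one front-end instance can serve
HEADROOM_PCT = 20            # spare capacity kept for incoming sessions, in percent
MIN_FRONTEND = 1             # the front-end never scales below one instance
DB_REPLICAS = 3              # stateful tier: always on, fixed replica count


def frontend_instances(active_sessions: int) -> int:
    """Ephemeral tier: size to current demand plus headroom (integer ceiling division)."""
    demand = active_sessions * (100 + HEADROOM_PCT)
    capacity = SESSIONS_PER_INSTANCE * 100
    return max(MIN_FRONTEND, -(-demand // capacity))


def plan(sessions_by_hour):
    """Return (hour, front-end instances, database replicas) for each hour."""
    return [(hour, frontend_instances(s), DB_REPLICAS) for hour, s in enumerate(sessions_by_hour)]


if __name__ == "__main__":
    # Demand over a working day in one region: quiet overnight, busy mid-day.
    for hour, fe, db in plan([0, 50, 250, 1000, 250, 0]):
        print(f"hour {hour}: front-end={fe}, db replicas={db}")
```

For the sample day the front-end runs 1, 1, 3, 12, 3, 1 instances while the database stays at three replicas throughout: only the ephemeral tier follows the faucet. Integer arithmetic is used for the ceiling so that boundary values (exactly 250 sessions, say) are not nudged up by floating-point rounding.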

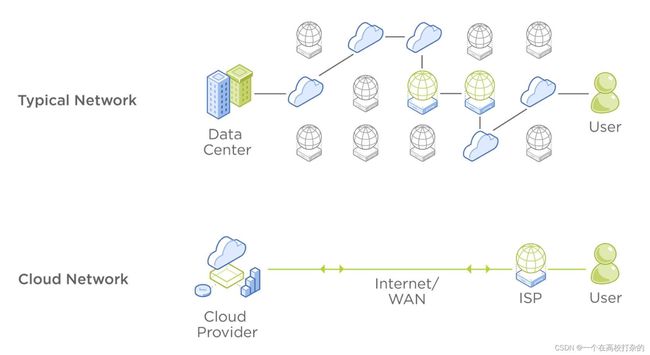

Network: Pipes of Friction

The hybrid cloud model presents new challenges with access to these data as well: can you access the data you need, in the timeframe you require, via the platform of your choice?

Here, data locality is a differentiating advantage: keeping data close to where it is consumed provides faster access and shorter turnaround times when delivering requested resources to end users. If data locality reduces friction between users and their services, then the way that data is transmitted across the network can strengthen or weaken that advantage, increasing or decreasing performance and speed of access.

The more direct the path data requests can take through the cloud, the faster the response times services can maintain, and the fewer points of failure the network introduces into the data path. Ultimately, this reduces friction in the cloud and provides agility for businesses and customers alike.

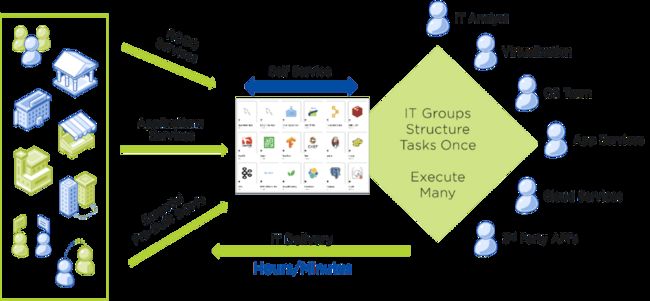

Reducing Friction Through Automation

Disparate systems within a company, siloed organizations, and unoptimized, manual workflows cause inefficiencies in any business. These factors are what led decision makers toward the cloud adoption model in the first place, a model delivered “as-a-Service” through streamlined processes, homogeneous platforms, and automated workflows.

By automating repeatable, predictable, manual tasks and providing a self-service portal through which service consumers can satisfy their own requests without a chain of human handoffs, we can reduce the time IT workers spend on each task from days to potentially zero input. This allows IT workers to streamline their processes even further and shift their effort to more proactive work that builds on this model. Each iteration that lets the business consume IT services in a more streamlined fashion builds more value and enables faster innovation cycles for IT as a whole.

This organization and automation further drive home the desired goal of “Write Once, Read Many”: expected, repeatable work is automated once and then delivered at the speed of business, without reactive firefighting or interruptions to the flow of IT innovation. Innovation thereby becomes the default state for your IT department, rapidly growing the capabilities of the business and further differentiating it from its competitors.

Nutanix Zero Trust Architecture

Nutanix approaches Zero Trust in multiple ways:

- Role-Based Access Control (RBAC) and the principle of least privilege applied in Prism Central.

- Microsegmentation with Nutanix Flow.

- Data-at-rest encryption with Nutanix’s native key management.

At each of these layers, Nutanix helps enforce a Zero Trust Architecture so that only the right user, on the right device and network, has access to the right applications and data.

Identity

Authentication

–

Prism supports three user authentication options:

- Local user authentication: Users can authenticate if they have a local Prism account.

- Active Directory or OpenLDAP authentication: Users can authenticate using their Active Directory or OpenLDAP credentials when the required authentication option is enabled in Prism.

- SAML authentication: Users can authenticate through a qualified identity provider when SAML support is enabled in Prism. The Security Assertion Markup Language (SAML) is an open standard for exchanging authentication and authorization data between two parties, for example ADFS as the identity provider (IdP) and Prism as the service provider.

Authorization: RBAC

–

Prism supports role-based access control (RBAC) that you can configure to provide customized access permissions for users based on their assigned roles. The roles dashboard allows you to view information about all defined roles and the users and groups assigned to those roles.

- Prism includes a set of predefined roles.

- You can also define additional custom roles.

- Configuring authentication confers default user permissions that vary depending on the type of authentication (full permissions from a directory service or no permissions from an identity provider). You can configure role maps to customize these user permissions.

- You can refine access permissions even further by assigning roles to individual users or groups that apply to a specified set of entities.

- With RBAC, user roles do not depend on project membership. You can use RBAC and log in to Prism even without a project membership.
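The permission model described above (predefined or custom roles, role maps for directory groups, and assignments optionally scoped to a set of entities) can be sketched as a small deny-by-default check. This is a conceptual illustration only, assuming hypothetical role names, permission strings, and entity names; it does not reproduce Prism's internal data model or API.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Optional


@dataclass(frozen=True)
class Role:
    """A named bundle of permissions (role and permission names are hypothetical)."""
    name: str
    permissions: FrozenSet[str]


@dataclass(frozen=True)
class Assignment:
    """A role granted to a user or group, optionally scoped to specific entities."""
    role: Role
    entities: Optional[FrozenSet[str]] = None  # None means "all entities"


@dataclass
class RbacPolicy:
    # Role map: directory group -> assignments granted to every member of that group.
    group_roles: Dict[str, List[Assignment]] = field(default_factory=dict)
    # Direct assignments to individual users.
    user_roles: Dict[str, List[Assignment]] = field(default_factory=dict)

    def is_allowed(self, user: str, groups: List[str], permission: str, entity: str) -> bool:
        assignments = list(self.user_roles.get(user, []))
        for group in groups:
            assignments.extend(self.group_roles.get(group, []))
        for a in assignments:
            in_scope = a.entities is None or entity in a.entities
            if permission in a.role.permissions and in_scope:
                return True
        # Least privilege: anything not explicitly granted is denied.
        return False


viewer = Role("Viewer", frozenset({"vm.view"}))
vm_operator = Role("VM Operator", frozenset({"vm.view", "vm.power"}))

policy = RbacPolicy(
    group_roles={"all-staff": [Assignment(viewer)]},
    user_roles={"alice": [Assignment(vm_operator, frozenset({"web-01", "web-02"}))]},
)

print(policy.is_allowed("alice", ["all-staff"], "vm.power", "web-01"))  # True
print(policy.is_allowed("alice", ["all-staff"], "vm.power", "db-01"))   # False
print(policy.is_allowed("bob", ["all-staff"], "vm.view", "db-01"))      # True
```

Here "alice" can power-cycle only the two web VMs her scoped assignment names, while every member of the hypothetical "all-staff" group gets read-only access everywhere through the role map. Nothing in the check refers to project membership, mirroring the last point in the list.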

Encrypt Everything, Everywhere

Evolution of SEDs and external key management into software-based storage encryption and Native KMS.

Data-at-rest encryption can be delivered through self-encrypting drives (SEDs) installed in your hardware. This provides strong data protection by encrypting user and application data for FIPS 140-2 Level 2 compliance. With SEDs, keys are not stored in the cluster; instead, external key management servers are accessed using the industry-standard Key Management Interoperability Protocol (KMIP). Nutanix also provides the option of a native data-at-rest encryption feature that does not require specialized self-encrypting drive hardware.

Hardware encryption using SEDs and external key management software is costly to configure and maintain, as well as complex to operate. Nutanix recognized the need to reduce this cost and complexity by enabling software-based storage encryption, introduced in AOS 5.5. The introduction of Native Key Management also brought key management, previously handled by external key management servers, into the Nutanix cluster itself. This further reduced cost and complexity, and this software-defined solution helps companies drive further simplicity and automation in their security domains.

Nutanix solutions support SAML integration and optional two-factor authentication for system administrators in environments requiring additional layers of security. When implemented, administrator logins require a combination of a client certificate and username and password.

Governance and Compliance

Configuration Baselines

–

Nutanix publishes custom security baseline documents, based on United States Department of Defense (DoD) Security Technical Implementation Guides (STIGs) that cover the entire infrastructure stack and prescribe steps to secure deployment in the field.

Nutanix baselines are based on common National Institute of Standards and Technology (NIST) standards that can be applied to multiple regulatory concerns, e.g., for government, healthcare (HIPAA), or retail and finance (PCI-DSS).

Automated Validation and Self-Healing

–

Nutanix baselines are published in a machine-readable format, allowing for automated validation and ongoing monitoring of the security baseline for compliance.

Nutanix has implemented Security Configuration Management Automation (SCMA) to efficiently check security entities in the security baselines that cover both HCI and AHV virtualization.

Nutanix automatically logs and reports configuration inconsistencies and reverts them to the baseline. With SCMA, systems can self-heal from any deviation and remain in compliance, with checks run at hourly, daily, weekly, or monthly intervals.
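The check-and-revert loop described above can be illustrated with a minimal sketch: a machine-readable baseline is compared against the current settings, each deviation is logged, and the setting is reset to the baseline value. The setting names and values below are hypothetical, and the sketch performs a single pass; it is not SCMA's implementation, which runs on the cluster on its configured schedule.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("baseline")

# Hypothetical machine-readable baseline: setting name -> required value.
BASELINE = {
    "ssh.password_auth": "disabled",
    "audit.logging": "enabled",
    "password.min_length": 15,
}


def find_drift(current: dict, baseline: dict) -> dict:
    """Return {setting: (actual, expected)} for every setting that deviates from the baseline."""
    return {
        key: (current.get(key), expected)
        for key, expected in baseline.items()
        if current.get(key) != expected
    }


def self_heal(current: dict, baseline: dict) -> dict:
    """Log each deviation, revert it to the baseline value in place, and return what was found."""
    drift = find_drift(current, baseline)
    for key, (actual, expected) in drift.items():
        log.warning("drift on %s: found %r, reverting to %r", key, actual, expected)
        current[key] = expected
    return drift


if __name__ == "__main__":
    node_config = {"ssh.password_auth": "enabled", "password.min_length": 15}
    found = self_heal(node_config, BASELINE)
    print(sorted(found))                      # ['audit.logging', 'ssh.password_auth']
    print(find_drift(node_config, BASELINE))  # {}
```

Keeping the baseline as data rather than prose is what makes this loop possible: the same document drives both the compliance report (`find_drift`) and the remediation (`self_heal`).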

Capital Expenditures (Capex) vs. Operating Expenditures (Opex)

Businesses seek to be as agile and competitive as possible in their respective markets, while at the same time maximizing cost efficiency and ensuring their efforts are appropriate for the demand in those markets. To that end, every business initiative ultimately comes down to financial expense and the value realized from any purchase. There are two models to be aware of when proposing or making purchases in a hybrid cloud world: capital expenditures and operating expenditures.

Capex

Capex are any large purchases a business makes for a project or initiative, normally paid up-front for goods or services to be delivered. Capex costs for IT are normally allocated for purchases of software and hardware, or one-time costs of service provided for large projects.

An easy way to think of capex costs is a bottle of water: when you are thirsty, you simply purchase a bottle of water and drink it. The water consumed represents the utility of the asset, or usefulness of the product (you are no longer thirsty), and the bottle itself is also an asset that can be repurposed (recycled, refilled with more water, or used for an art project). In this example, we fully own the bottle, the water inside, and the utility or value of that water.

Businesses have traditionally used capex cost models to purchase assets because of two major financial benefits: tax write-offs and financial reporting. Capex costs are sometimes seen as a necessary business expense and, as such, are written off against taxes on a depreciation schedule. This means that a purchase of one million dollars ($1M) can be used to reduce corporate taxes either in a single fiscal year or spread out over the lifetime of the asset itself (the depreciation schedule). Capex purchases also usually result in one or more assets that grow the company’s balance sheet while the assets’ cost is depreciated over multiple years. This approach can ultimately show an increase in the company’s valuation that is reflected directly in its stock price, an outcome that is clearly desirable from a business perspective.
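The $1M example can be made concrete with a straight-line depreciation schedule, the simplest way to spread a purchase over an asset's lifetime. This sketch assumes a five-year useful life and zero salvage value purely for illustration; actual tax treatment (including accelerated depreciation methods or expensing in a single year) depends on jurisdiction and accounting policy.

```python
from typing import List, Tuple


def straight_line(cost: int, salvage: int, years: int) -> List[Tuple[int, int, int]]:
    """Return (year, depreciation expense, end-of-year book value) for each year.

    Amounts are whole dollars; any rounding remainder is taken in the final year
    so that exactly (cost - salvage) is depreciated over the schedule.
    """
    if years <= 0:
        raise ValueError("years must be positive")
    base, remainder = divmod(cost - salvage, years)
    rows, book_value = [], cost
    for year in range(1, years + 1):
        expense = base + (remainder if year == years else 0)
        book_value -= expense
        rows.append((year, expense, book_value))
    return rows


if __name__ == "__main__":
    # Assumed: $1M purchase, 5-year useful life, no salvage value.
    for year, expense, book in straight_line(1_000_000, 0, 5):
        print(f"year {year}: expense ${expense:,}  book value ${book:,}")
```

Under these assumptions the business records $200,000 of depreciation expense each year while the asset's book value on the balance sheet steps down from $800,000 to $0, which is the "spread out over the lifetime of the asset" effect described above.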

The capex cost model also brings added responsibilities. Assets are now owned and require upkeep and maintenance. Large capital costs in the enterprise need to be planned at least a year (or multiple years) in advance and budgeted by management to ensure responsible spending. Capital costs may also be forecast inaccurately and become a drag on corporate finances (through inappropriately sized hardware or software, lack of training built into projects, unknown or undocumented software costs and renewals, etc.). Capital expenditures are normally budgeted yearly and follow a schedule of approvals at various levels of the management chain, giving tight control over corporate costs at each stage.

Opex

In contrast to capex, opex are regular payments made at repeatable intervals (daily, weekly, monthly, etc.) for subscriptions and services that support the normal daily operation of the business. Normal operational expenses include payroll for employees, utility expenses such as electricity and cellular provider usage, and SaaS subscription fees. In our water example, opex means that you “pay by the drink” and do not own the bottle. This simplifies purchasing for many businesses, which can “buy the value” without owning and managing the asset.

Public cloud expenses fall under this category of operational expenses: they follow a “pay as you go” model, and none of the physical assets in the cloud are owned by the subscriber. The financial advantage here is that subscriptions purchased with opex funds are fully tax deductible at the time of purchase, which may mean more real-time margin for the company and, ideally, greater returns for shareholders.

One downside to opex is that there are no assets to add to the corporate balance sheet; the value lies in the utility of a service rather than in ownership of the item used. Another risk is that the variable nature of cloud usage translates into an unpredictable monthly expense. Unlike capex payments, which are up-front costs budgeted yearly, opex costs follow a utility model: they are paid after usage, which can strain finances if not forecast appropriately. Cloud sprawl, and hence cost sprawl, is quite common when adopting a new cloud operating model and can be detrimental to the business if not monitored carefully.

Ultimately, both financial expense models have benefits and drawbacks, and every business uses some combination of capex and opex. Knowing the different options and how to optimize them will help IT departments deliver business value while effectively managing costs at scale.

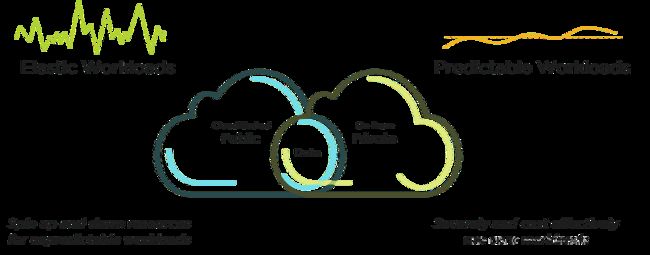

Predictable vs. Unpredictable Workloads

Every business has applications it relies on for normal operations: database servers that store and retrieve valuable data; web servers for eCommerce sites or simply the company’s online presence; massively complex and intricate ERP systems that manage the flow from order to delivery; and AI workloads used to formulate and model predictive algorithms. This diversity of applications requires hardware and software capacity to serve them, and to make things more interesting, the usage of any given application can vary wildly depending on business needs!

Predictable workloads are applications, together with the hardware and software that support them, that can be expected to operate in an observable and well-understood fashion. We can “predict” how an application is going to be used simply by knowing which business needs it serves. An email server is a good example of this predictability. An IT department knows how many users it has across the company, sets limits on attachment sizes per email, and knows how many additional users to expect per month, quarter, or year when planning for growth. Individual usage will vary, but the total expected performance needs and usage of an email server can be sized and predicted successfully.

Unpredictable workloads can be viewed as the opposite: any set of hardware and software supporting a business application whose usage cannot be quantified or expected to follow a known pattern. A good example is an eCommerce site. Businesses regularly run marketing and sales campaigns to drive potential customers to their websites to purchase products and services. While we can (hopefully) expect customers to purchase our offerings, we cannot know how many users each campaign will attract to the eCommerce site to buy something. This lack of visibility is compounded by the seasonality of workloads: holidays can bring a sharp uptrend in purchasing, while the rest of the year may be more predictable. Similarly, particular events may bring large inflows of user demand: the World Cup drives billions of page views for FIFA every four years, while the three years in between may place much less public demand on its IT infrastructure.

Before cloud computing, both predictable and unpredictable workloads were hosted on the same platforms in the datacenter. Businesses would capitalize costs, own the assets needed to perform the work, and serve customer needs. The problem with this operating model is that when an asset was purchased to serve an unpredictable workload, the business bore the cost of owning it while reaping little benefit whenever demand fell below its capacity. The opposite was also true: when capacity fell short of customer demand, businesses lost revenue from customers who were unable to purchase goods or services during the spike in demand.

How do we serve both predictable and unpredictable workloads while still optimizing cost and meeting customer demand? By combining the best of both worlds, the hybrid cloud approach lets predictable workloads attain efficiency and scale while making the most of capital expenditure, and simultaneously lets unpredictable workloads achieve the dynamic webscale they need to meet seasonal user demand with the cost benefits of “pay as you go”. The elastic nature of the cloud meets the needs of unpredictable workloads, while the steady-state nature of predictable workloads makes them well suited to datacenter consumption.
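The trade-off can be illustrated with a toy cost model: own enough capacity for the steady baseline and burst anything above it to the public cloud, versus owning enough capacity for the peak all year. The unit prices and the demand curve below are hypothetical assumptions chosen only to show the shape of the calculation; real costs depend on hardware, licensing, and cloud pricing that this article does not specify.

```python
from typing import List

# Hypothetical unit prices: illustrative assumptions, not real quotes.
ONPREM_PER_UNIT_MONTH = 100  # amortized cost of one unit of owned capacity per month
CLOUD_PER_UNIT_MONTH = 180   # pay-as-you-go price of one unit of cloud capacity per month


def all_onprem_cost(demand: List[int]) -> int:
    """Own enough capacity for the peak month and carry it all year."""
    return max(demand) * ONPREM_PER_UNIT_MONTH * len(demand)


def hybrid_cost(demand: List[int], baseline: int) -> int:
    """Own `baseline` units year-round; burst anything above it to the public cloud."""
    owned = baseline * ONPREM_PER_UNIT_MONTH * len(demand)
    burst_units = sum(max(0, d - baseline) for d in demand)
    return owned + burst_units * CLOUD_PER_UNIT_MONTH


def best_baseline(demand: List[int]) -> int:
    """Brute-force the owned capacity that minimizes total hybrid cost."""
    return min(range(max(demand) + 1), key=lambda b: hybrid_cost(demand, b))


if __name__ == "__main__":
    # Steady demand of 10 units, with a holiday spike to 30 in the last two months.
    demand = [10] * 10 + [30, 30]
    b = best_baseline(demand)
    print("all on-prem:", all_onprem_cost(demand))  # 36000
    print("best baseline:", b)                      # 10
    print("hybrid:", hybrid_cost(demand, b))        # 19200
```

With these assumed prices, owning a unit costs 1,200 per year, while renting it costs 180 for each month it is actually needed, so ownership pays off only for capacity used more than about 6.7 months a year. The ten steady units clear that bar and stay on-prem; the twenty holiday-only units do not and go to the cloud, which is exactly the predictable/unpredictable split described above.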