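- IK Word Segmentation

初心myp

Implements simple word segmentation with smart segmentation. Add the dependency configuration: lucene.version 4.10.4; org.apache.lucene:lucene-core:${lucene.version}; org.apache.lucene:lucene-analyzers-common:${lucene.version}; org.apache.lucene:lucene-queryparser:${lucene.version}; org.apache.lucene:l...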

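- SpringMVC Execution Flow (How It Works), Explained Simply

国服冰

SpringMVC, springmvc

SpringMVC Execution Flow (How It Works), Explained Simply. Contents: I. The SpringMVC flow in a diagram; II. Understanding the SpringMVC execution flow further: 1. Import dependencies, 2. Create the view to display, 3. web.xml, 4. Spring configuration file springmvc-servlet, 5. Controller, 6. Tomcat configuration, 7. The URL to visit, 8. The view page. I. The SpringMVC flow in a diagram: the figure is a fairly complete flowchart of SpringMVC; the solid lines represent what the SpringMVC framework...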

- An Analysis of the Real-Time Stream Computing Engines Flink and Spark

程小舰

flink, spark, database, kafka, hadoop

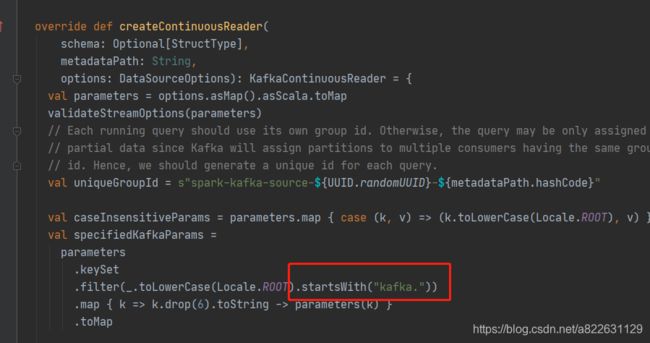

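Over the past few years, most mainstream stream computing engines in the industry were built on Spark Streaming. With Flink's rapid growth over the last two years, Flink has also become increasingly widely used. Meanwhile, Spark has responded to the shortcomings of Spark Streaming by introducing a new stream computing component. This article analyzes the internal mechanisms and features of the different stream computing engines in depth, as a reference for choosing an engine for stream processing scenarios. (Produced by the DLab数据实验室 WeChat official account.) I. Spark Streaming: SparkStreamin...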

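- Linux System Configuration (Applications)

1风天云月

Linux, linux, applications, compile and install, rpm, http

Contents: Preface; I. Application overview: 1. The relationship between commands and programs, 2. What a program consists of, 3. Package types; II. RPM: 1. RPM overview, 2. RPM usage; III. Compiling and installing: 1. Unpack, 2. Configure, 3. Compile, 4. Install, 5. Enable the httpd service; Conclusion. Preface: On Linux, an application is regarded as the set of files produced once a software package is installed on the system, including executables, configuration files, user manuals, and so on. These files are organized into a coherent whole that provides specific functionality to the user, so for "installing a software package" and "installing an application", these two...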

- High Availability and Load Balancing with LVS+Keepalived

2401_84412895

programmer, lvs, load balancing, ops

2. Enable a NIC sub-interface and configure the VIP: [root@a ~]# cd /etc/sysconfig/network-scripts/ [root@a network-scripts]# cp -a ifcfg-ens32 ifcfg-ens32:0 [root@a network-scripts]# cat ifcfg-ens32:0 BOOTPROTO=static DEVICE=ens32:0 ONBOOT=yes IPADDR=10.1...

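- Java Concurrency Core: Thread Pool Tips and Best Practices! | Multithreading (Part 5)

bug菌¹

Java in Practice (Advanced), java, Java for Absolute Beginners, Java concurrency, thread pool, multithreading

This article is part of the "Java进阶实战" (Advanced Java in Practice) column, which aims to help you level up quickly; feel free to follow, bookmark, and subscribe. Updates are ongoing. Environment: Windows 10 + IntelliJ IDEA 2021.3.2 + JDK 1.8. Contents: Preface; Abstract; Main text: What is a thread pool? Why do we need thread pools? Benefits of thread pools; Thread pool use cases; How to create a thread pool; Common thread pool configurations; Source code walkthrough; Case study; Case code demo; Case run...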

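- [Making Money with Coze in Practice] 3. Pitfall Guide: 6 Fatal Mistakes in Conversation Flow Design (Real Cases)

AI_DL_CODE

Coze platform, conversation flow design, customer-service Bot pitfalls, user churn, account-ban risk, intelligent customer-service configuration, troubleshooting guide

Abstract: Conversation flow design is the key step that determines whether an intelligent customer-service Bot can go into production, and it directly affects user experience and business safety. Based on an analysis of failures in 50+ enterprise Bot deployments, this article focuses on 6 fatal mistakes that lead to user churn, complaints, and even account bans: infinite follow-up question loops, human handoff timeouts, missing sensitive-word filtering, knowledge base conflicts, unhandled negative intent, and cross-platform adaptation failures. Real cases are used to break down how each mistake shows up, its technical root cause, and an industrial-grade solution, with directly reusable Coze configuration code, workflow templates, and detection tools. The article includes a conversation flow health-check tool...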

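- Pktgen-DPDK: An In-Depth Look at an Open-Source Network Testing Tool and Its Uses

艾古力斯

Overview: Pktgen-DPDK is a high-performance traffic generator built on DPDK, suited to network performance testing, hardware validation, and protocol stack development. It supports multiple network protocols and can simulate high-throughput packet transmission. By using DPDK's fast packet processing, the project lets users customize packet contents and manage and transmit packets efficiently. The article explains how to install DPDK, build Pktgen, configure the tool, and use it, ultimately helping developers and network administrators understand and optimize network...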

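- Environment Setup | Installing, Configuring, and Using Python + Anaconda / Miniconda + PyCharm

This article covers the installation, configuration, and use of Python, Anaconda/Miniconda, and PyCharm in turn, walking through the whole process of setting up a Python environment, including Python, Pip, Python Launcher, Anaconda, Miniconda, and PyCharm. It follows the official documentation and supplements it with practical experience. Because there are too many images, a simplified image-free version is posted here first; for details see Python+Anaconda/Minicond...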

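- MySQL Review Questions

I. Fill in the blanks. 1. The standard language for relational databases is SQL. 2. Of the three stages of database development, the one with the highest data independence is the database system stage. 3. The three basic relationships in the conceptual model are one-to-one, one-to-many, and many-to-many. 4. The MySQL configuration file is named my.ini or my.cnf. 5. In the MySQL configuration file, datadir specifies the directory where database files are stored. 6. Adding IF NOT EXISTS prevents an error when the database being created already exists. 7. MySQL's SHOW CREA...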

- Installing and Configuring Anaconda3 on CentOS 7

Anaconda is a Python distribution for scientific computing; Anaconda is to Python what CentOS is to Linux. Download: [root@test src]# mwget https://mirrors.tuna.tsinghua.edu.cn/anaconda/archive/Anaconda3-5.2.0-Linux-x86_64.sh Begin to download: Anaconda3-5.2.0-L...

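- Spark SQL Architecture and Advanced Usage

Aurora_NeAr

spark, sql, architecture

Spark SQL architecture overview. Core architectural components. API layer (user interface): input methods are SQL queries and the DataFrame/Dataset API. Uniformity: all interfaces are ultimately converted into a logical plan tree (LogicalPlan) and enter the optimization pipeline. Compiler layer (Catalyst optimizer): the core engine is a rule-based optimizer (Rule-Based Optimizer, RBO) plus a cost-based optimizer (Cost-Based Optimizer, CBO). Processing flow (table columns): stage, input, output, key...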

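- qemu virt-manager: Creating a Virtual Machine and Setting Up Bridged Networking

三希

network, php, programming language

To set up bridged networking for a virtual machine in virt-manager: Confirm that a network bridge is configured on the host. Open a terminal and run brctl show to check whether a bridge interface already exists (usually named br0 or similar). If there is none, create one first: sudo nano /etc/netplan/01-netcfg.yaml. Add a configuration like the following (adjust for your actual NIC): network: version: 2 renderer: networkd ethernets: en...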

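- Ubuntu: Checking the Firewall and Related Operations

三希

windows

On Ubuntu, firewall status and configuration are mainly inspected with ufw (Uncomplicated Firewall), Ubuntu's default firewall configuration front end. Commonly used commands: I. Check the firewall status. To see whether the firewall is running, use: sudo ufw status. For a more detailed version, use: sudo ufw status verbose. In the output, Status: active means the firewall is runn...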

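- How Beginners Can Build a Personal Website and Project Sites with GitHub Pages Static Hosting

vvandre

Web technology, github

I. Introduction to GitHub Pages static hosting. GitHub Pages is a free, fast static website hosting service. What advantages does it have over building a site the traditional way? Traditionally, you first rent a server, run external programs on it, buy a domain name, set up an SSL certificate, and finally configure DNS to resolve the domain to the server. This tedious sequence is usually enough to put beginners off. graph TD A[Rent a server] --> B[Deploy the web application (run external programs such as Nginx)] B --> C[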

- mac os 10.9 mysql_MAC OSX 10.9 apache php mysql Environment Setup

AY05

macos 10.9, mysql

#Run in Terminal sudo apachectl start #start Apache sudo apachectl restart #restart Apache sudo apachectl stop #stop Apache #Configure Apache sudo vi /private/etc/apache2/httpd.conf #remove the leading # from the following line #LoadModule php5_module libexec/apache2/libphp5.so #Configure P...

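- Subdomain Distribution System, Commercial Edition, Fully Open Source, v3

CloseAi论坛

program source code, subdomain distribution system, commercial edition, open source

Introduction: the 快乐 (Happy) subdomain distribution source code is mainly source code for a subdomain distribution website; you will need to work out the domain API configuration yourself. Netdisk download: https://zijiewangpan.com/NbX6950sYLn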

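- Changing the SSH Login Port on CentOS (Port 22)

❀͜͡傀儡师

centos, ssh, linux

To change the default port (22) of the SSH service on CentOS, follow these steps. Back up the SSH configuration file: sudo cp /etc/ssh/sshd_config /etc/ssh/sshd_config.bak. Edit the SSH configuration file: sudo vi /etc/ssh/sshd_config. Find and change the port setting. Locate the following line (around line 13): #Port 22. Uncomment it and add the new port (for example 56001): #Port 22 Port 56...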

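- Subdomain Distribution Website Source Code, Commercial Edition, Fully Open Source

lskelasi

program source code, subdomain distribution source code, subdomain distribution website source code, source code

Introduction: the 快乐 (Happy) subdomain distribution source code is mainly source code for a subdomain distribution website; if you are not familiar with this kind of system, do not download it. The source code can be set up for paid use and has a top-up interface; you will need to work out the domain API configuration yourself. Netdisk download: https://zijiewangpan.com/jsX0JAuRE01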

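- Microservice Log Tracing: Adding TraceId Support with SkyWalking

Victor刘

microservices, skywalking, java

Contents: I. Appending the traceId with SkyWalking: logback, log4j2, result; II. Showing log content in SkyWalking: version differences, logback configuration file, log4j2 configuration file. I. Appending the traceId with SkyWalking. Background: in microservices or multi-replica deployments it is hard to follow the logs of a single request chain, so a unique traceId is needed to look them up. The following describes how to configure the SkyWalking traceId in Java. Two Java logging configuration...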

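- Changing GitLab 14's Default Language to Chinese Globally

After a successful install, GitLab defaults to English. The language can only be set to Chinese manually after logging in, and that setting applies only to your own account. After some research, here is how to change GitLab 14's default language to Chinese globally. 0. Enter the container: if GitLab was deployed with Docker, enter the container with sudo docker exec -it gitlab /bin/bash. 1. Edit the Rails configuration file: open /opt/gitlab/embedded/service/gitlab-...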

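- Configuring HTTPS in Charles

Monkey_猿

http, Charles HTTPS configuration

Charles. I. iPhone (Mac version; Windows users can follow along): First, configure Charles. Menu: Proxy -> Proxy Settings... -> check Enable transparent HTTP proxying (Proxy -> Proxy Settings). Then find your computer's LAN IP address (look this up yourself). Next, on your iPhone: Settings -> Wi-Fi -> connect to a Wi-Fi network on the same router as the computer, then tap the i on the right to open its configuration...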

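- Claude Code: A Very Detailed, Complete Guide (Latest 2025 Edition)

笙囧同学

python

Terminal AI coding assistant | Frequently used features + ecosystem tools + full command reference + latest MCP configuration. Contents: Quick start (up and running in 5 minutes); Detailed installation guide: system requirements, Windows installation (WSL approach), macOS installation, Linux installation, verifying the installation; Configuration and authentication: first-time authentication, environment variables, proxy configuration; Basic commands in detail: launch commands, session management, file operations; Complete guide to Think mode; MCP server configuration in detail: MCP basics, adding MCP servers, 10 essential MCP servers, MCP troubleshooting; Memory system in detail; Advanced usage tips; Cost control...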

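- Qt Error: no suitable kits found

It looks like Qt Creator tried to find a "kit" to build your program but could not find a suitable one. A kit is how Qt Creator describes a build environment; it includes the compiler, the compile options, the Qt library to use, and so on. When Qt Creator looks for a kit to build your program and finds no suitable one, this error appears. To fix it, configure a suitable kit in Qt Creator. Specifically, you need to install the Qt libraries and a compiler...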

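- Understanding Cache-Control Thoroughly

qu木木

network, http, cache

Contents: I. What is it? II. Core purpose. III. Directives in detail (common ones). IV. Configuration examples for common scenarios. V. Important notes. I. What is it? Cache-Control is the most important and most flexible HTTP header field for controlling caching; it defines the caching policy on clients (browsers) and proxy servers (such as CDNs). It supersedes the simpler Expires and Pragma headers from the HTTP/1.0 era and offers finer-grained control. II. Core purpose. Whether to cache: states explicitly whether a response may be cached and by whom (brow...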

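- 001 Constructing the Configuration Struct

盖世灬英雄z

DramSys, c++, artificial intelligence

Contents: DramSys code analysis: 1 Constructing the Configuration struct: 1.1 The `from_path` function in detail, 1.2 Summary of the construction process, Benefits of this design; 2 Simulator instantiation: 2.1 instantiateInitiator. DramSys code analysis. 1 Constructing the Configuration struct. This section explains in detail how the from_path function in DRAMSysConfiguration.cpp builds the configuration. This file is DRAM...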

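- Two Ways to Integrate Druid with Spring Boot 2

玩代码

spring boot, backend, java, Druid

I. Custom Druid integration (non-Starter approach). Suitable when you need full manual control over the configuration. Add dependencies (pom.xml): com.alibaba:druid:1.2.8; org.springframework.boot:spring-boot-starter-jdbc. Create a configuration class: @Configuration public class DruidConfig { @Bean @ConfigurationProperties(prefix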

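- Installing a Samba Server on Linux and Its Configuration File

長樂.-

linux, ops, server

A brief introduction to Samba. Samba is an open-source software suite built on TCP/IP that runs on Linux, Windows, macOS, and other operating systems. It lets computers running different operating systems share files and printers. Samba provides a service that lets Windows access shared files on Linux, Mac, and other systems as if they were local files, enabling cross-platform file sharing and making office environments more efficient and convenient. Samba also supports the Windows Network Neighborhood proto...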

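- Installing Redis with Docker on Ubuntu

LLLL96

Ubuntu, docker, redis, ubuntu

Contents: Introduction: 1. Rich data structures, 2. High performance, 3. Persistence; 1. Pull the Redis image; 2. Create mount directories (optional); 3. Configure Redis persistence (optional); 4. Run the container with a configuration file; 5. View the Redis logs. Introduction. 1. Rich data structures. Redis supports many data structures, including: String: can store any kind of data, such as text, numbers, or binary data. Hash: stores a mapping of fields to values, well suited to representing objects. List: an ordered list of strings, which can be used...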

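- Understanding Core AI Concepts with No Math Background: Visualizing Gradient Descent in Practice

九章云极AladdinEdu

artificial intelligence, gpu compute, deep learning, pytorch, python, language models, opencv

Click "AladdinEdu, the H-card compute platform students can afford": H-card-class compute, pay-as-you-go, flexible and elastic, top-tier configuration, with student-only discounts. An animated Python demonstration of how a loss function is optimized, making the math concrete. What readers gain: an intuitive grasp of what model training really is, and an end to "math anxiety". When a blind hiker feels their way down a mountain, they are instinctively applying gradient descent. This article uses dynamic visualization to let you understand the core principle of AI training as naturally as you feel gravity, with no formula derivations required. I. Gradient descent: the AI world's "universal...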
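The downhill-walking idea in this teaser can be sketched in a few lines of Java. This is only an illustration under assumptions: the article itself uses Python animations, and the function f(x) = (x - 3)^2, the learning rate, the step count, and the names `GradientDescentSketch`, `gradient`, and `minimize` are all chosen here for the example.

```java
// Minimal sketch: gradient descent on the assumed example function f(x) = (x - 3)^2.
class GradientDescentSketch {

    // Derivative of f(x) = (x - 3)^2; it points uphill, so we step against it.
    static double gradient(double x) {
        return 2.0 * (x - 3.0);
    }

    // Repeatedly take a small step downhill, like the hiker feeling for the slope.
    static double minimize(double start, double learningRate, int steps) {
        double x = start;
        for (int i = 0; i < steps; i++) {
            x -= learningRate * gradient(x);
        }
        return x;
    }

    public static void main(String[] args) {
        // Start far from the minimum at x = 3 and let the updates pull x toward it.
        double result = minimize(10.0, 0.1, 100);
        System.out.println("x after 100 steps: " + result);
    }
}
```

With a learning rate of 0.1, each step shrinks the distance to the minimum by a constant factor, which is why the loop converges; a rate that is too large would overshoot instead.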

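- Garbled JSP Request Parameters on a Linux Server

3213213333332132

java, jsp, linux, windows, xml

While fixing a character-encoding problem, I found that encoding with native JS methods in a JSP worked fine on Windows but failed on Linux; escape, encodeURI, encodeURIComponent, and the like did not solve it.

Then I thought: if the native methods do not work, surely I can encode and decode the Chinese parameters using EL tags instead. So I used Java's java.net.URLDecoder, but the result was still garbled. Finally, in desperation, I solved it with the method below.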

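- Spring Annotations: Differences and Usage

BlueSkator

spring

1. @Autowired

@Autowired performs autowiring by type. If the Spring context contains more than one bean of type UserDao, or none at all, a BeanCreationException is thrown. This can be resolved by adding a @Qualifier annotation to the property to specify a unique id.

2. @Qualifier

When Spring contains at least one match...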

- Using printf and sprintf

dcj3sjt126com

PHP, sprintf, printf

<?php

printf('b: %b <br>c: %c <br>d: %d <br>f: %f', 80, 80, 80, 80);

echo '<br />';

printf('%0.2f <br>%+d <br>%0.2f <br>', 8, 8, 1235.456);

printf('th

- config.getInitParameter

171815164

parameter

web.xml

<servlet>

<servlet-name>servlet1</servlet-name>

<jsp-file>/index.jsp</jsp-file>

<init-param>

<param-name>str</param-name>

- Ant Tags in Detail: Basic Operations

g21121

ant

Some core Ant concepts:

build.xml: the build file is described in XML; the default build file name is build.xml. project: each build fi...

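- [Simple] Code Snippet: Merging Data

53873039oycg

code

Merge rules: delete records whose parent phone is empty; if one parent corresponds to several children, keep a single parent record; change the parent id to the phone; and update the parent-child mappings accordingly.

The code is as follows: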
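The post's own code is not included in this listing. As a stand-in, the sketch below shows one way the merge rules could be applied in Java. Everything in it is an assumption made for illustration: the record shapes `Parent` and `Relation`, the `merge` method, and the choice to drop mappings that point at a deleted parent.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class ParentMergeSketch {

    // Hypothetical record shapes; the original post does not show its data model.
    record Parent(String id, String phone) {}
    record Relation(String parentId, String childId) {}
    record Result(List<Parent> parents, List<Relation> relations) {}

    static Result merge(List<Parent> parents, List<Relation> relations) {
        Map<String, String> oldIdToPhone = new LinkedHashMap<>();
        Map<String, Parent> byPhone = new LinkedHashMap<>();
        for (Parent p : parents) {
            if (p.phone() == null || p.phone().isBlank()) {
                continue; // rule: delete parents whose phone is empty
            }
            oldIdToPhone.put(p.id(), p.phone());
            // rule: keep one record per parent, and use the phone as the new id
            byPhone.putIfAbsent(p.phone(), new Parent(p.phone(), p.phone()));
        }
        List<Relation> merged = new ArrayList<>();
        for (Relation r : relations) {
            String phone = oldIdToPhone.get(r.parentId());
            if (phone != null) {
                // rule: re-point the mapping at the new phone-based id
                merged.add(new Relation(phone, r.childId()));
            }
            // assumption: mappings to a deleted parent are dropped
        }
        return new Result(new ArrayList<>(byPhone.values()), merged);
    }

    public static void main(String[] args) {
        List<Parent> parents = List.of(
                new Parent("p1", "13800000000"),
                new Parent("p2", "13800000000"), // same parent registered twice
                new Parent("p3", ""));           // no phone: removed
        List<Relation> relations = List.of(
                new Relation("p1", "c1"),
                new Relation("p2", "c2"),
                new Relation("p3", "c3"));
        Result result = merge(parents, relations);
        System.out.println(result.parents());
        System.out.println(result.relations());
    }
}
```

Building the old-id-to-phone map in the first pass is what lets the second pass rewrite the mappings without looking at the parent list again.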

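- Java Communication Technologies

云端月影

Java Remote Communication Technologies

In a distributed service framework, one of the most basic questions is how remote services communicate. The Java world has many technologies for remote communication, such as RMI, MINA, ESB, Burlap, Hessian, SOAP, EJB, and JMS. How do these terms relate to each other, and what principles are they built on? Understanding this is foundational for building a distributed service framework, and if performance requirements are high, a deep understanding of the mechanisms behind these technologies is a must. In this blog post we will...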

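- How Big Is the Performance Gap Between string and StringBuilder?

aijuans

I had read some performance analyses of string versus StringBuilder before, but I always felt the difference would not have much impact on overall performance, so I never paid attention to it!

Because string concatenation was the first thing I came across when learning to program, I never changed the habit!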
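The gap the author is asking about can be measured with a small experiment. The sketch below is a Java analogue written for this listing, not the author's benchmark (the post may well be about another language); the class name `ConcatSketch`, the loop size, and the crude `System.nanoTime` timing are assumptions, and the timings vary by machine and JVM.

```java
class ConcatSketch {

    // Builds "0,1,2,..." with +=; every += allocates a new String and copies the old content.
    static String withString(int n) {
        String s = "";
        for (int i = 0; i < n; i++) {
            s += i + ",";
        }
        return s;
    }

    // Produces the same text by appending into one growable buffer.
    static String withBuilder(int n) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < n; i++) {
            sb.append(i).append(',');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        int n = 20_000;
        long t0 = System.nanoTime();
        String a = withString(n);
        long t1 = System.nanoTime();
        String b = withBuilder(n);
        long t2 = System.nanoTime();
        System.out.println("same result: " + a.equals(b));
        System.out.println("String +=     : " + (t1 - t0) / 1_000_000 + " ms");
        System.out.println("StringBuilder : " + (t2 - t1) / 1_000_000 + " ms");
    }
}
```

The reason the difference grows with the loop size is that repeated `+=` copies everything built so far on each iteration, so the total work grows roughly quadratically, while the builder only appends.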

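- Ran Into java.util.ConcurrentModificationException Today

antonyup_2006

java, multithreading, work, IBM

While fixing a bug today, one piece of code had to loop over a map and delete entries along the way. The code was:

Iterator<ListItem> iter = ItemMap.keySet().iterator();

while(iter.hasNext()){

ListItem it = iter.next();

//... some logic

ItemMap.remove(it); // removing through the map while iterating is what triggers the exception

}

Running it threw Con...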
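The teaser is cut off before the author's fix. A common way to avoid the exception, sketched here as an assumption rather than as the author's solution, is to delete through the iterator itself. The class `SafeRemovalSketch`, the method `removeNegative`, and the sample map are made up for illustration.

```java
import java.util.HashMap;
import java.util.Iterator;
import java.util.Map;

class SafeRemovalSketch {

    // Removes every entry with a negative value by calling the iterator's own remove().
    static void removeNegative(Map<String, Integer> items) {
        Iterator<Map.Entry<String, Integer>> iter = items.entrySet().iterator();
        while (iter.hasNext()) {
            Map.Entry<String, Integer> entry = iter.next();
            if (entry.getValue() < 0) {
                iter.remove(); // safe: the iterator updates its own modification count
            }
        }
    }

    public static void main(String[] args) {
        Map<String, Integer> items = new HashMap<>();
        items.put("a", 1);
        items.put("b", -2);
        items.put("c", 3);
        removeNegative(items);
        System.out.println(items.keySet()); // "b" is gone, no exception is thrown
    }
}
```

On Java 8 and later, `items.values().removeIf(v -> v < 0)` expresses the same removal in one line.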

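- PL/SQL Types and JDBC Database Operations

百合不是茶

PL/SQL tables, scalar types, cursors, PL/SQL records

PL/SQL scalar types:

Five types: character, number, date/time, Boolean, and %type

-- Scalar: a variable of a type predefined in the database

-- Define a variable-length string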

v_ename varchar2(10);

-- Define a decimal with range -9999.99 to 9999.99

v_sal number(6,2);

-- Define a decimal with an initial value of 5.4; := is the PL/SQL assignment operator

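- Mockito: Examples of a Powerful Mocking Framework for Java Development

bijian1013

mockito, unit testing

The Mockito framework:

Mockito is an open-source Java testing framework released under the MIT license. What chiefly sets Mockito apart from other mocking frameworks is that it lets developers verify the behavior of the system under test without setting up "expectations" beforehand. One criticism of mock objects is that the test code of the system under te...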

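- Mastering Oracle10 Programming SQL (10): Handling Exceptions

bijian1013

oracle, database, plsql

/*

* Handling exceptions

*/

-- Introduction to exceptions

-- Handling exceptions: propagating exceptions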

declare

v_ename emp.ename%TYPE;

begin

SELECT ename INTO v_ename FROM emp

where empno=&no;

dbms_output.put_line('Employee name: '||v_ename);

exceptio

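- [Java] Running Linux Commands on a Remote Machine from Java

bit1129

linux commands

Java uses ethz over ssh2 to run commands on a remote Linux machine.

A class that encapsulates the Linux machine's environment information: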

package com.tom;

import java.io.File;

public class Env {

private String hostaddr; // IP address of the Linux machine

private Integer po

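- Java Communication: Socket Basics

白糖_

java, socket, network protocols

Two programs running on a network exchange data over a two-way connection. Each endpoint of such a connection is called a Socket. A complete Socket program should include the following steps:

① Create the Socket;

② Open the input and output streams attached to the Socket;

③ Read from and write to the Socket according to an agreed protocol;

④ Close the Socket.

Socket communication has two sides: the server and the client. The server must start first and then wait for soc...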
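The four steps and the server-first ordering can be sketched as a one-shot echo exchange on the local machine. This is a minimal illustration under assumptions, not the article's code: the class `EchoSocketSketch`, its method names, the "echo: " reply format, and the use of port 0 (letting the OS pick a free port) are all choices made here.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

class EchoSocketSketch {

    // Server side: accept one client, echo one line back, then close everything.
    static void serveOnce(ServerSocket server) throws IOException {
        try (Socket client = server.accept();                               // step 1: create the Socket
             BufferedReader in = new BufferedReader(new InputStreamReader(
                     client.getInputStream(), StandardCharsets.UTF_8));     // step 2: open the streams
             PrintWriter out = new PrintWriter(new OutputStreamWriter(
                     client.getOutputStream(), StandardCharsets.UTF_8), true)) {
            out.println("echo: " + in.readLine());                          // step 3: read and write
        }                                                                   // step 4: close (try-with-resources)
    }

    // Client side: connect, send one line, and return the server's reply.
    static String request(int port, String message) throws IOException {
        try (Socket socket = new Socket("127.0.0.1", port);
             BufferedReader in = new BufferedReader(new InputStreamReader(
                     socket.getInputStream(), StandardCharsets.UTF_8));
             PrintWriter out = new PrintWriter(new OutputStreamWriter(
                     socket.getOutputStream(), StandardCharsets.UTF_8), true)) {
            out.println(message);
            return in.readLine();
        }
    }

    // Starts the server first, then runs the client against it.
    static String roundTrip(String message) {
        try (ServerSocket server = new ServerSocket(0)) { // already listening once constructed
            Thread serverThread = new Thread(() -> {
                try {
                    serveOnce(server);
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
            serverThread.start();
            String reply = request(server.getLocalPort(), message);
            serverThread.join();
            return reply;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("hello"));
    }
}
```

The "agreed protocol" of step 3 is deliberately trivial here: one text line in, one text line out. A real service would define message framing and error handling on top of the same four steps.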

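- angular.bind

boyitech

AngularJS, angular.bind, AngularJS API, bind

angular.bind. Description: dynamically binds a context, a function, and arguments; the return value is the bound function. args are optional dynamic arguments, and self is what this refers to inside fn. Usage: angular.bind(se...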

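- java: 13 bad guys and 13 good guys stand in a circle; counting around, every 7th person is kicked out. All the bad guys must be kicked out and all the good guys must stay in the circle. Find the bad guys' starting positions.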

bylijinnan

java

import java.util.ArrayList;

import java.util.List;

public class KickOutBadGuys {

/**

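* Problem: 13 bad guys and 13 good guys stand in a circle; counting around, every 7th person is kicked out. All the bad guys must be kicked out and all the good guys must stay in the circle. Find the bad guys' starting positions.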

* Maybe you can find out

- Redis.conf Configuration File and Related Options Explained (for My Own Reference)

Kai_Ge

redis

Redis.conf configuration file and related options explained

# Redis configuration file example

# Note on units: when memory size is needed, it is possible to specify

# it in the usual form of 1k 5GB 4M and so forth:

#

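- [Strong AI] Large-Scale Topology Analysis Is a Prelude to Strong AI

comsci

artificial intelligence

Apologies, friends... the blog has been updated again...

With too few nodes, the network's analysis and processing capacity is bound to be insufficient, and it falls short of what robot control requires....

But with too many nodes, the demands on topology data processing are high, the design goals are ambitious, and implementation is quite difficult...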

- Notes on Some Commonly Used Functions

dai_lm

java

public static String convertInputStreamToString(InputStream is) {

StringBuilder result = new StringBuilder();

if (is != null)

try {

InputStreamReader inputReader = new InputStreamRead

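- Parallel Computing Shortcomings of Small and Medium Hadoop Clusters

datamachine

mapreduce, hadoop, parallel computing

Note: I wrote this article because Hadoop has been somewhat overhyped. The data volumes many users currently have are not a good fit for Hadoop, yet they try it anyway under pressure to scale out and to move off IOE (Hadoop's cheap scalability is indeed very attractive). Trying things out is always right, but sometimes there is no need to rush: tuning the system or adjusting requirements to get the most out of the existing system is the better strategy.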

-----------------------------------------------------------------

- Grade 4 English Vocabulary Memorization, Lesson 2

dcj3sjt126com

english, word

egg 蛋

twenty 二十

any 任何

well 健康的,好

twelve 十二

farm 农场

every 每一个

back 向后,回

fast 快速的

whose 谁的

much 许多

flower 花

watch 手表

very 非常,很

sport 运动

Chinese 中国的

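- Trying Out GitHub Webhooks Myself: Watch the Permissions on Linux

dcj3sjt126com

github, webhook

Environment: Alibaba Cloud server

1. Create the project locally and push it to GitHub

2. Generate an SSH key for the www user

sudo -u www ssh-keygen -t rsa -C "[email protected]"

3. Add the key to the SSH keys of the GitHub account

4. Run the clone as the www user; the source us...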

- Bubble Sort in Java

蕃薯耀

bubble sort, Java bubble sort, Java sorting

Bubble sort

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

蕃薯耀, Tuesday, June 23, 2015, 10:40:14

http://fanshuyao.iteye.com/

- Reading Excel Data into a List of Entities [Based on apache-poi]

hanqunfeng

apache

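1. Depends on apache-poi

2. Supports xls and xlsx

3. Supports binding data values by property name

4. Supports reading from a specified row and column

5. Supports reading multiple sheets at once

6. For usage details see org.cpframework.utils.excelreader.CP_ExcelReaderUtilTest.java

For example: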

Str

- Introducing 3 JavaScript APIs Still at the Draft Stage

jackyrong

JavaScript

Original article:

http://www.sitepoint.com/3-new-javascript-apis-may-want-follow/?utm_source=html5weekly&utm_medium=email

This article introduces 3 JavaScript APIs that are still at the draft stage but are worth watching.

1) Web Alarm API

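- 6 Efficient PHP Frameworks for Building Web Applications

lampcy

Web, framework, PHP

The following PHP frameworks for building web applications were compiled and recommended by the coder bay website:

1. CakePHP

CakePHP is a rapid development framework for PHP that provides an extensible architecture for developing, maintaining, and deploying applications. CakePHP uses well-known design patterns such as MVC and ORM, lowering development costs and reducing the amount of code developers have to write.

2. CodeIgniter

CodeIgniter is a very small yet powerful PHP framework, suitable for...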

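- A Comment on the Rumor "New Chaos Emerges in China's Stock Market After the Bailout"

nannan408

First, consider this news piece by an author surnamed Yi on Baidu Baijia:

For more than three weeks the stock market has kept plunging, leaving investors and listed companies in extreme panic and anxiety, all looking for ways to protect themselves and avoid risk. Facing the crisis, the government suddenly stepped into the market to rescue it, hoping to rebuild market confidence and reverse expectations of continued plunges. But once the government entered, the way the market operates changed dramatically, and as investors and listed companies tried to protect themselves and adapt to the change, new chaos naturally emerged in China's stock market.

First, over the past two days China's stock market...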

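- Ways to Implement a Full-Page Overlay

Rainbow702

html, css, overlay, mask

I previously built a page where clicking a certain button had to bring up a full-screen overlay, and I initially implemented it with position:absolute. Because the view size was fixed and could not be resized, no problem showed up at the time.

Recently I built another overlay the same way, but this time the view could be resized, which revealed a problem: once the view was resized to the point where the browser showed scrollbars, the absolute approach broke. After switching to fixed positioning it...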

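- Bits and Pieces About AngularJS

tntxia

AngularJS

Angular is an emerging JS framework. Unlike earlier frameworks, AngularJS focuses more on modeling and managing JS, and it also provides a large number of components to help users build commercial applications, making it a JS framework worth studying.

AngularJS lets us write JS using the MVC pattern. AngularJS is currently maintained by Google.

Here we take a brief look at how it is used.

First, to use AngularJS we...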

- Nutz--->>The Consequences of Repeatedly Creating New IoC Containers

xiaoxiao1992428

DAO, mvc, IOC, nutz

Problem:

public class DaoZ {

public static Dao dao() { // fetched anew every time a dao is needed

Ioc ioc = new NutIoc(new JsonLoader("dao.js"));

return ioc.get(