Learning K8S: Kubeadm (7), Ansible Binary Deployment


1.1 K8S manual binary deployment

https://github.com/easzlab/kubeasz # deployment reference

1.1.1 Installation notes

Note:

Disable swap, SELinux, and the firewall (ufw/iptables).

# ufw status

Status: inactive

# getenforce

Disabled

# swapoff -a # temporarily disable swap

# free -h

total used free shared buff/cache available

Mem: 1.9G 149M 1.4G 9.0M 349M 1.6G

Swap: 0B 0B 0B # swap size is 0

# vim /etc/fstab

#/swapfile none swap sw 0 0

Comment out the /swapfile entry so that swap stays disabled after a reboot.
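The manual edit of /etc/fstab can also be scripted. The sketch below is one possible way to do it, assuming GNU sed (as shipped with Ubuntu). The function name `disable_swap_entries` is a hypothetical helper, not part of any tool used in this article. It prefixes every active fstab line whose third field is `swap` with `#` and leaves all other lines alone.

```shell
#!/bin/bash
# Hypothetical helper: comment out every active swap entry in an
# fstab-style file passed as $1. Assumes GNU sed (-i without a suffix).
disable_swap_entries() {
    local fstab="$1"
    # Match lines that do not start with '#' or whitespace and whose
    # third whitespace-separated field is exactly "swap"; prefix them with '#'.
    sed -i -E 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' "$fstab"
}
```

On a real host it would be called as `disable_swap_entries /etc/fstab`, ideally after backing up the file.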

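1.1.2 Base environment preparation

Install a minimal base system on every server, then disable the firewall, SELinux, and swap, update the package sources, synchronize the clocks, and install commonly used tools. Reboot and verify the base configuration.

#apt-get update && apt-get upgrade -y

#ntpdate ntp1.aliyun.com

#ntpdate time1.aliyun.com && hwclock -w # either of the two time servers is enough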

# cat /etc/security/limits.conf|grep -v ^#

root soft core unlimited

root hard core unlimited

root soft nproc 1000000

root hard nproc 1000000

root soft nofile 1000000

root hard nofile 1000000

root soft memlock 32000

root hard memlock 32000

root soft msgqueue 8192000

root hard msgqueue 8192000

* soft core unlimited

* hard core unlimited

* soft nproc 1000000

* hard nproc 1000000

* soft nofile 1000000

* hard nofile 1000000

* soft memlock 32000

* hard memlock 32000

* soft msgqueue 8192000

* hard msgqueue 8192000

# cat /etc/sysctl.conf|grep -v ^#

net.ipv4.conf.default.rp_filter = 1

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1 # this parameter must be enabled

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 0

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

net.bridge.bridge-nf-call-ip6tables = 0

net.bridge.bridge-nf-call-iptables = 0

net.bridge.bridge-nf-call-arptables = 0

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.shmmax = 68719476736

kernel.shmall = 4294967296

net.ipv4.tcp_mem = 786432 1048576 1572864

net.ipv4.tcp_rmem = 4096 87380 4194304

net.ipv4.tcp_wmem = 4096 16384 4194304

net.ipv4.tcp_window_scaling = 1

net.ipv4.tcp_sack = 1

net.core.wmem_default = 8388608

net.core.rmem_default = 8388608

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.core.netdev_max_backlog = 262144

net.core.somaxconn = 20480

net.core.optmem_max = 81920

net.ipv4.tcp_max_syn_backlog = 262144

net.ipv4.tcp_syn_retries = 3

net.ipv4.tcp_retries1 = 3

net.ipv4.tcp_retries2 = 15

net.ipv4.tcp_timestamps = 0

net.ipv4.tcp_tw_reuse = 0

net.ipv4.tcp_tw_recycle = 0

net.ipv4.tcp_fin_timeout = 1

net.ipv4.tcp_max_tw_buckets = 20000

net.ipv4.tcp_max_orphans = 3276800

net.ipv4.tcp_synack_retries = 1

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_keepalive_time = 300

net.ipv4.tcp_keepalive_intvl = 30

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.ip_local_port_range = 10001 65000

vm.overcommit_memory = 0

vm.swappiness = 10

#sysctl -a # view kernel parameters

#sysctl -p

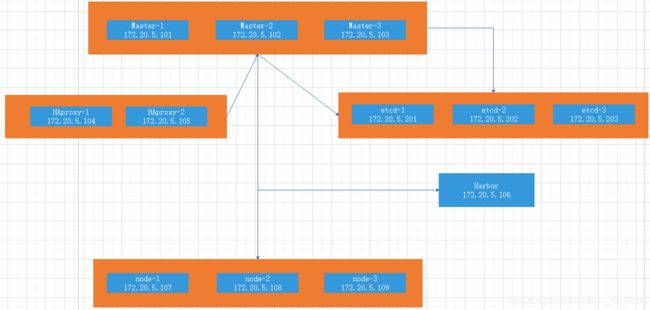

| Role | Hostname | IP address | Memory |
|---|---|---|---|
| K8S-MASTER-1 | Master-1 | 172.20.5.101 | 3G |
| K8S-MASTER-2 | Master-2 | 172.20.5.102 | 2G |
| K8S-MASTER-3 | Master-3 | 172.20.5.103 | 2G |
| K8S-HAPROXY-1 | HA-1 | 172.20.5.104 | 1G |
| K8S-HAPROXY-2 | HA-2 | 172.20.5.105 | 1G |
| K8S-HARBOR | Harbor | 172.20.5.106 | 3G |
| K8S-NODE-1 | node-1 | 172.20.5.107 | 2G |
| K8S-NODE-2 | node-2 | 172.20.5.108 | 2G |
| K8S-NODE-3 | node-3 | 172.20.5.109 | 2G |
| K8S-ETCD-1 | etcd-1 | 172.20.5.201 | 3G |
| K8S-ETCD-2 | etcd-2 | 172.20.5.202 | 3G |
| K8S-ETCD-3 | etcd-3 | 172.20.5.203 | 3G |

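1.1.3 Basic architecture

2.1.1 HA: keepalived deployment

keepalived provides high availability for HAProxy, so both packages must be installed on each of the two HA machines.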

[root@HA-1 ~]#apt install keepalived haproxy -y

[root@HA-2 ~]#apt install keepalived haproxy -y

[root@HA-1 ~]#find / -name "keepalived.conf*" # locate the sample configuration file

[root@HA-1 ~]#cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

[root@HA-1 ~]#vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from [email protected]

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state MASTER

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 56

priority 100 # priority is 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.20.5.248 dev eth0 label eth0:1

172.20.5.249 dev eth0 label eth0:2

}

}

#systemctl restart keepalived

#systemctl enable keepalived

Configuration file on HA-2:

[root@HA-2 ~]#vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from [email protected]

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state BACKUP # backup HA node

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 56

priority 80 # priority is 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.20.5.248 dev eth0 label eth0:1

172.20.5.249 dev eth0 label eth0:2

}

}

#systemctl restart keepalived

#systemctl enable keepalived

2.1.2 Verifying failover

#ifconfig

Stop keepalived on HA-1 (systemctl stop keepalived),

then check that the VIPs move to HA-2.
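The failover check can be made less error-prone than reading ifconfig output by eye. The following is a minimal sketch, assuming the `ip` command from iproute2 is available on the HA hosts. `holds_vip` is a hypothetical helper name; it reads `ip -o -4 addr show` output on standard input and succeeds only if the given VIP is configured.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the `ip -o -4 addr show` output on
# stdin contains the address given as $1.
holds_vip() {
    local vip="$1"
    # -F: fixed string, -q: quiet. The leading space and trailing "/"
    # keep 172.20.5.24 from matching 172.20.5.248 and vice versa.
    grep -qF " ${vip}/"
}
```

On HA-2, after stopping keepalived on HA-1, a call such as `ip -o -4 addr show | holds_vip 172.20.5.248 && echo "VIP is on this host"` would confirm that the address has moved.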

2.1.3 HAProxy deployment

Apply the same configuration on every HA machine.

#vim /etc/haproxy/haproxy.cfg # append at the end of the file

listen stats # status page

mode http

bind 0.0.0.0:9999

stats enable

log global

stats uri /haproxy-status

stats auth haadmin:123456

listen k8s_api_nodes_6443

bind 172.20.5.248:6443

mode tcp

#balance leastconn

server 172.20.5.101 172.20.5.101:6443 check inter 2000 fall 3 rise 5

# server 172.20.5.102 172.20.5.102:6443 check inter 2000 fall 3 rise 5

# server 172.20.5.103 172.20.5.103:6443 check inter 2000 fall 3 rise 5

Check that HAProxy is listening on 172.20.5.248:6443:

#ss -ntl

LISTEN 0 2000 172.20.5.248:6443 0.0.0.0:*
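The listener check can be turned into a scripted test. This is only a sketch: `is_listening` is a hypothetical helper, and it assumes the column layout of `ss -ntl` shown above, where the fourth column is the local address and port.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the `ss -ntl` output on stdin has a
# LISTEN socket whose local address:port (4th column) equals $1.
is_listening() {
    awk -v want="$1" '$1 == "LISTEN" && $4 == want { found = 1 } END { exit !found }'
}
```

For example, `ss -ntl | is_listening 172.20.5.248:6443 || echo "haproxy is not bound to the VIP"`.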

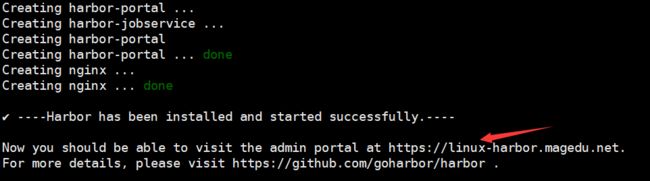

3.1.1 Harbor deployment

https://github.com/goharbor/harbor/releases/tag/v1.7.6 # download page for harbor-offline-installer-v1.7.6.tgz

[root@Harbor ~]#cd /usr/local/src/

[root@Harbor src]#apt install docker-compose

[root@Harbor src]#cat docker-install.sh

#!/bin/bash

# Step 1: install the required system tools

sudo apt-get update

sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common

# Step 2: install the GPG key

curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

# Step 3: add the package repository

sudo add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

# Step 4: update the package index and install Docker CE

sudo apt-get -y update

sudo apt-get -y install docker-ce docker-ce-cli

[root@Harbor src]#bash docker-install.sh

[root@Harbor src]#tar xvf harbor-offline-installer-v1.7.6.tgz

[root@Harbor src]#cd harbor/

[root@Harbor harbor]#pwd

/usr/local/src/harbor

#修改harbor的配置文件

[root@Harbor harbor]#vim harbor.cfg

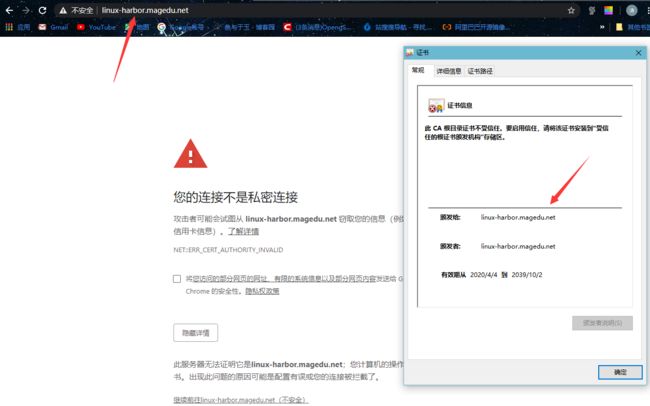

8 hostname = linux-harbor.magedu.net #harbor域名

12 ui_url_protocol = https #传输为https

20 ssl_cert = /usr/local/src/harbor/certs/harbor-ca.crt #证书路径

21 ssl_cert_key = /usr/local/src/harbor/certs/harbor-ca.key #钥匙路径

69 harbor_admin_password = 123456 #登入密码

3.2.1 Generating the certificate

[root@Harbor harbor]#vim harbor.cfg

[root@Harbor harbor]#mkdir certs/

[root@Harbor harbor]#cd certs/

[root@Harbor certs]#openssl genrsa -out /usr/local/src/harbor/certs/harbor-ca.key # generate the private key

Generating RSA private key, 2048 bit long modulus (2 primes)

..............................+++++

....................+++++

e is 65537 (0x010001)

[root@Harbor certs]#openssl req -x509 -new -nodes -key /usr/local/src/harbor/certs/harbor-ca.key -subj "/CN=linux-harbor.magedu.net" -days 7120 -out /usr/local/src/harbor/certs/harbor-ca.crt

Can't load /root/.rnd into RNG

140179367604672:error:2406F079:random number generator:RAND_load_file:Cannot open file:../crypto/rand/randfile.c:88:Filename=/root/.rnd

[root@Harbor certs]#touch /root/.rnd # on Ubuntu this file has to be created first

[root@Harbor certs]#openssl req -x509 -new -nodes -key /usr/local/src/harbor/certs/harbor-ca.key -subj "/CN=linux-harbor.magedu.net" -days 7120 -out /usr/local/src/harbor/certs/harbor-ca.crt

# the /CN= value must match the Harbor hostname

[root@Harbor harbor]#ll /usr/local/src/harbor/certs/harbor-ca.* # confirm that both files exist at the configured paths

-rw-r--r-- 1 root root 1151 Apr 4 11:37 /usr/local/src/harbor/certs/harbor-ca.crt

-rw------- 1 root root 1675 Apr 4 11:34 /usr/local/src/harbor/certs/harbor-ca.key

Install Harbor:

[root@Harbor harbor]#./install.sh

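3.3.1 Adding hosts entries

On the Windows machine, append the following entry to the end of the hosts file:

C:\Windows\System32\drivers\etc\hosts

172.20.5.106 linux-harbor.magedu.net

Open the Harbor page in a browser to confirm that it is reachable and that the domain name resolves.

Log in and check:

3.3.2 Configuring the hosts file on Master-1: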

[root@Master-1 ~]#vim /etc/hosts

172.20.5.101 Master-1

172.20.5.102 Master-2

172.20.5.103 Master-3

172.20.5.107 node-1

172.20.5.108 node-2

172.20.5.109 node-3

172.20.5.201 etcd-1

172.20.5.202 etcd-2

172.20.5.203 etcd-3

172.20.5.106 linux-harbor.magedu.net

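Docker is needed next to test the registry, but for the Ansible deployment it is best not to install Docker on the cluster hosts beforehand, so the test is run on another machine.

HA-2 is used for the experiment: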

[root@HA-2 ~]#cat docker-install.sh

#!/bin/bash

# Step 1: install the required system tools

sudo apt-get update

sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common

# Step 2: install the GPG key

curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

# Step 3: add the package repository

sudo add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

# Step 4: update the package index and install Docker CE

sudo apt-get -y update

sudo apt-get -y install docker-ce docker-ce-cli

[root@HA-2 ~]#bash docker-install.sh

[root@HA-2 ~]#vim /etc/hosts

172.20.5.106 linux-harbor.magedu.net

[root@HA-2 ~]#docker login linux-harbor.magedu.net # log in to Harbor with docker

Username: admin

Password:

Error response from daemon: Get https://linux-harbor.magedu.net/v2/: x509: certificate signed by unknown authority

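# the login fails because the client does not yet trust the self-signed certificate

# copy the .crt certificate to the client:

[root@HA-2 ~]#mkdir /etc/docker/certs.d/linux-harbor.magedu.net -p # the directory must be named after the registry domain

# copy the certificate from the Harbor host to HA-2

[root@Harbor certs]#scp harbor-ca.crt 172.20.5.105:/etc/docker/certs.d/linux-harbor.magedu.net

[root@HA-2 ~]#systemctl restart docker # restart docker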

[root@HA-2 ~]#docker login linux-harbor.magedu.net

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

The login succeeded.

4.1 Ansible binary deployment

https://github.com/easzlab/kubeasz/blob/master/docs/setup/00-planning_and_overall_intro.md

# deployment steps

Install Python 2.7 on all master, node, and etcd hosts:

#apt-get install python2.7 -y

#ln -s /usr/bin/python2.7 /usr/bin/python

4.1.1 Installing and preparing Ansible on the control node

[root@Master-1 ~]#apt install python-pip -y # install pip

[root@Master-1 ~]#apt install ansible -y

4.1.2 Configuring passwordless SSH login from the control node

[root@Master-1 ~]#ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:JEH6osh/7otnZuNPTnmHQqrOaig4fQxg+zlvAGaV/g0 root@Master-1

The key's randomart image is:

+---[RSA 2048]----+

| ..o |

| o . . |

| o . . . |

|.= . E o |

|+.o o +.S |

|.o.o oo.. . |

|+oooo. = o . |

|=.++=O+ o . |

|oooB&*+o |

+----[SHA256]-----+

[root@Master-1 ~]#apt install sshpass -y # install sshpass for non-interactive password entry

[root@Master-1 ~]#vim ssh-copy.sh

[root@Master-1 ~]#cat ssh-copy.sh

#!/bin/bash

# target host list

IP="

172.20.5.101

172.20.5.102

172.20.5.103

172.20.5.107

172.20.5.108

172.20.5.109

172.20.5.201

172.20.5.202

172.20.5.203

"

for node in ${IP};do

sshpass -p 123456 ssh-copy-id ${node} -o StrictHostKeyChecking=no

if [ $? -eq 0 ];then

echo "${node}: key copied"

else

echo "${node}: key copy failed"

fi

done

[root@Master-1 ~]#bash ssh-copy.sh

[root@Master-1 ~]#echo $?

0

Then SSH to the other machines directly to confirm that login works without a password.
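Checking nine hosts by hand is tedious, so the test can be looped. The sketch below is an illustration rather than part of kubeasz: `ssh_probe` and `failed_hosts` are hypothetical names. `BatchMode=yes` makes ssh fail instead of prompting for a password, which is exactly the condition to detect.

```shell
#!/bin/bash
# Hypothetical helper: succeed only if key-based login to host $1 works
# without any interactive prompt.
ssh_probe() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}

# Hypothetical helper: run a probe command ($1) against every remaining
# argument and print the hosts for which the probe fails.
failed_hosts() {
    local probe="$1"
    shift
    local host
    for host in "$@"; do
        "$probe" "$host" >/dev/null 2>&1 || echo "$host"
    done
}
```

A call such as `failed_hosts ssh_probe 172.20.5.101 172.20.5.102 172.20.5.201` prints nothing when every host accepts the key and lists the hosts that still need `ssh-copy-id` otherwise.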

4.1.2 Orchestrating the k8s installation from the control node

https://github.com/easzlab/kubeasz/releases/ # release notes

[root@Master-1 ~]#export release=2.2.0

[root@Master-1 ~]#curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/${release}/easzup

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 597 100 597 0 0 72 0 0:00:08 0:00:08 --:--:-- 149

100 12965 100 12965 0 0 842 0 0:00:15 0:00:15 --:--:-- 2999

[root@Master-1 ~]#vim easzup

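export DOCKER_VER=19.03.8 # line 17 of easzup: change the Docker version

[root@Master-1 ~]#chmod a+x easzup # make the script executable

[root@Master-1 ~]#./easzup -D # run the download and installation

# this takes a little over ten minutes

When the download finishes, inspect the directory: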

[root@Master-1 ~]#cd /etc/ansible/

[root@Master-1 ansible]#ll

total 132

drwxrwxr-x 11 root root 4096 Feb 1 10:55 ./

drwxr-xr-x 95 root root 4096 Apr 4 14:07 ../

-rw-rw-r-- 1 root root 395 Feb 1 10:35 01.prepare.yml

-rw-rw-r-- 1 root root 58 Feb 1 10:35 02.etcd.yml

-rw-rw-r-- 1 root root 149 Feb 1 10:35 03.containerd.yml

-rw-rw-r-- 1 root root 137 Feb 1 10:35 03.docker.yml

-rw-rw-r-- 1 root root 470 Feb 1 10:35 04.kube-master.yml

-rw-rw-r-- 1 root root 140 Feb 1 10:35 05.kube-node.yml

-rw-rw-r-- 1 root root 408 Feb 1 10:35 06.network.yml

-rw-rw-r-- 1 root root 77 Feb 1 10:35 07.cluster-addon.yml

-rw-rw-r-- 1 root root 3686 Feb 1 10:35 11.harbor.yml

-rw-rw-r-- 1 root root 431 Feb 1 10:35 22.upgrade.yml

-rw-rw-r-- 1 root root 1975 Feb 1 10:35 23.backup.yml

-rw-rw-r-- 1 root root 113 Feb 1 10:35 24.restore.yml

-rw-rw-r-- 1 root root 1752 Feb 1 10:35 90.setup.yml

-rw-rw-r-- 1 root root 1127 Feb 1 10:35 91.start.yml

-rw-rw-r-- 1 root root 1120 Feb 1 10:35 92.stop.yml

-rw-rw-r-- 1 root root 337 Feb 1 10:35 99.clean.yml

-rw-rw-r-- 1 root root 10283 Feb 1 10:35 ansible.cfg

drwxrwxr-x 3 root root 4096 Apr 4 14:10 bin/

drwxrwxr-x 2 root root 4096 Feb 1 10:55 dockerfiles/

drwxrwxr-x 8 root root 4096 Feb 1 10:55 docs/

drwxrwxr-x 3 root root 4096 Apr 4 14:18 down/

drwxrwxr-x 2 root root 4096 Feb 1 10:55 example/

-rw-rw-r-- 1 root root 414 Feb 1 10:35 .gitignore

drwxrwxr-x 14 root root 4096 Feb 1 10:55 manifests/

drwxrwxr-x 2 root root 4096 Feb 1 10:55 pics/

-rw-rw-r-- 1 root root 5607 Feb 1 10:35 README.md

drwxrwxr-x 23 root root 4096 Feb 1 10:55 roles/

drwxrwxr-x 2 root root 4096 Feb 1 10:55 tools/

Copy the template inventory file:

[root@Master-1 ansible]#cp example/hosts.multi-node ./hosts

[root@Master-1 ansible]#vim hosts

[root@Master-1 ansible]#cat hosts

# 'etcd' cluster should have odd member(s) (1,3,5,...)

# variable 'NODE_NAME' is the distinct name of a member in 'etcd' cluster

[etcd]

172.20.5.201 NODE_NAME=etcd1

172.20.5.202 NODE_NAME=etcd2

172.20.5.203 NODE_NAME=etcd3

# master node(s) # only two masters for now; adding a master is demonstrated later

[kube-master]

172.20.5.101

172.20.5.102

# work node(s) # only two nodes for now; adding a node is demonstrated later

[kube-node]

172.20.5.107

172.20.5.108

# [optional] harbor server, a private docker registry

# 'NEW_INSTALL': 'yes' to install a harbor server; 'no' to integrate with existed one

# 'SELF_SIGNED_CERT': 'no' you need put files of certificates named harbor.pem and harbor-key.pem in directory 'down'

[harbor]

#172.20.5.8 HARBOR_DOMAIN="harbor.yourdomain.com" NEW_INSTALL=no SELF_SIGNED_CERT=yes

# [optional] loadbalance for accessing k8s from outside

[ex-lb]

172.20.5.104 LB_ROLE=master EX_APISERVER_VIP=172.20.5.248 EX_APISERVER_PORT=6443 # HA host address, VIP, and VIP port

172.20.5.105 LB_ROLE=backup EX_APISERVER_VIP=172.20.5.249 EX_APISERVER_PORT=6443

# [optional] ntp server for the cluster

[chrony]

#172.20.5.1

[all:vars]

# --------- Main Variables ---------------

# Cluster container-runtime supported: docker, containerd

CONTAINER_RUNTIME="docker"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="flannel"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="172.32.0.0/16" # Service network range

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="10.20.0.0/16" # Pod network range

# NodePort Range

NODE_PORT_RANGE="30000-60000" # NodePort range

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="magedu.local."

# -------- Additional Variables (don't change the default value right now) ---

# Binaries Directory

bin_dir="/usr/bin" # directory where the binaries are installed

# CA and other components cert/key Directory

ca_dir="/etc/kubernetes/ssl"

# Deploy Directory (kubeasz workspace)

base_dir="/etc/ansible"

4.1.3 Testing connectivity

[root@Master-1 ansible]#ansible all -m ping

172.20.5.104 | UNREACHABLE! => { # HA-1: this error is expected, no SSH key was copied to the HA hosts

"changed": false,

"msg": "SSH Error: data could not be sent to remote host \"172.20.5.104\". Make sure this host can be reached over ssh",

"unreachable": true

}

172.20.5.105 | UNREACHABLE! => { # HA-2: this error is expected

"changed": false,

"msg": "SSH Error: data could not be sent to remote host \"172.20.5.105\". Make sure this host can be reached over ssh",

"unreachable": true

}

172.20.5.107 | SUCCESS => {

"changed": false,

"ping": "pong"

}

172.20.5.102 | SUCCESS => {

"changed": false,

"ping": "pong"

}

172.20.5.108 | SUCCESS => {

"changed": false,

"ping": "pong"

}

172.20.5.201 | SUCCESS => {

"changed": false,

"ping": "pong"

}

172.20.5.101 | SUCCESS => {

"changed": false,

"ping": "pong"

}

172.20.5.202 | SUCCESS => {

"changed": false,

"ping": "pong"

}

172.20.5.203 | SUCCESS => {

"changed": false,

"ping": "pong"

}

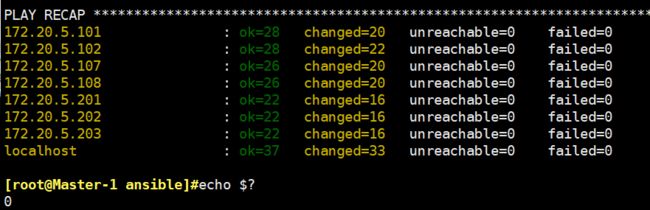

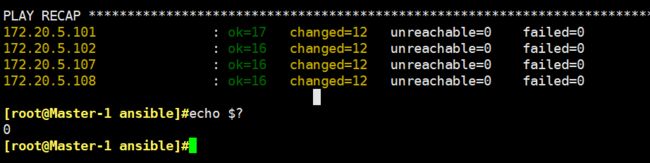

4.2.1 Running 01.prepare.yml

[root@Master-1 ansible]#vim 01.prepare.yml

# [optional] to synchronize system time of nodes with 'chrony'

- hosts:

- kube-master

- kube-node

- etcd

# - ex-lb # commented out

# - chrony # time sync commented out

roles:

- { role: chrony, when: "groups['chrony']|length > 0" }

# to create CA, kubeconfig, kube-proxy.kubeconfig etc.

- hosts: localhost

roles:

- deploy

# prepare tasks for all nodes

- hosts:

- kube-master

- kube-node

- etcd

roles:

- prepare

[root@Master-1 ansible]#ansible-playbook 01.prepare.yml

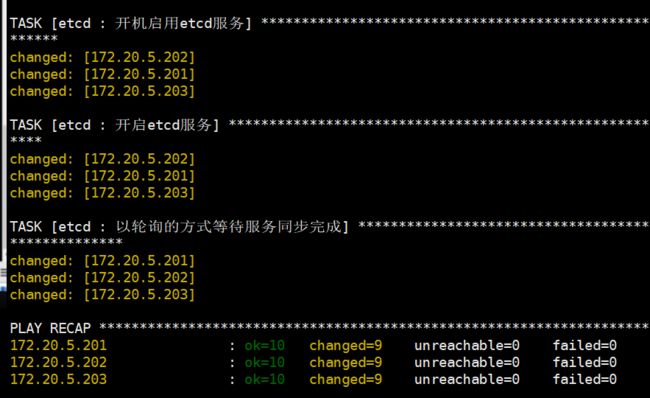

4.2.2 Running 02.etcd.yml

[root@Master-1 ansible]#ansible-playbook 02.etcd.yml

4.2.2.1 Checking the etcd nodes

On each etcd node, check that the service is running. etcd-1 is used as the example here:

[root@etcd-1 ~]#ps -ef|grep etcd

root 4740 1 0 14:57 ? 00:00:00 /usr/bin/etcd --name=etcd1 --cert-file=/etc/etcd/ssl/etcd.pem --key-file=/etc/etcd/ssl/etcd-key.pem --peer-cert-file=/etc/etcd/ssl/etcd.pem --peer-key-file=/etc/etcd/ssl/etcd-key.pem --trusted-ca-file=/etc/kubernetes/ssl/ca.pem --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem --initial-advertise-peer-urls=https://172.20.5.201:2380 --listen-peer-urls=https://172.20.5.201:2380 --listen-client-urls=https://172.20.5.201:2379,http://127.0.0.1:2379 --advertise-client-urls=https://172.20.5.201:2379 --initial-cluster-token=etcd-cluster-0 --initial-cluster=etcd1=https://172.20.5.201:2380,etcd2=https://172.20.5.202:2380,etcd3=https://172.20.5.203:2380 --initial-cluster-state=new --data-dir=/var/lib/etcd

root 4769 1079 0 14:59 pts/0 00:00:00 grep --color=auto etcd

Check etcd health:

[root@etcd-1 ~]#export NODE_IPS="172.20.5.201 172.20.5.202 172.20.5.203"

[root@etcd-1 ~]#echo $NODE_IPS

172.20.5.201 172.20.5.202 172.20.5.203

[root@etcd-1 ~]#for ip in ${NODE_IPS}; do ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem endpoint health; done

https://172.20.5.201:2379 is healthy: successfully committed proposal: took = 2.198515ms

https://172.20.5.202:2379 is healthy: successfully committed proposal: took = 2.457971ms

https://172.20.5.203:2379 is healthy: successfully committed proposal: took = 1.859514ms
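For use in a script, the health output can be reduced to a single pass/fail result. This is a small sketch under the assumption that every healthy endpoint produces one line containing " is healthy", as in the output above; `all_healthy` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: read `etcdctl endpoint health` output on stdin and
# succeed only if the number of healthy endpoints equals $1.
all_healthy() {
    local expected="$1"
    local healthy
    # grep -c prints 0 and returns non-zero when nothing matches,
    # so "|| true" keeps the count without aborting under `set -e`.
    healthy=$(grep -c ' is healthy' || true)
    [ "$healthy" -eq "$expected" ]
}
```

The health loop above could be piped into it, for example `for ip in ${NODE_IPS}; do ...; done 2>&1 | all_healthy 3`. The `2>&1` is there because some etcdctl versions may write the health message to standard error.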

4.2.3 Running 03.docker.yml

[root@Master-1 ansible]#ansible-playbook 03.docker.yml

[root@node-1 ~]#docker version

Client: Docker Engine - Community

Version: 19.03.5

API version: 1.40

Go version: go1.12.12

Git commit: 633a0ea838

Built: Wed Nov 13 07:22:05 2019

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 19.03.5

API version: 1.40 (minimum version 1.12)

Go version: go1.12.12

Git commit: 633a0ea838

Built: Wed Nov 13 07:28:45 2019

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: v1.2.10

GitCommit: b34a5c8af56e510852c35414db4c1f4fa6172339

runc:

Version: 1.0.0-rc8+dev

GitCommit: 3e425f80a8c931f88e6d94a8c831b9d5aa481657

docker-init:

Version: 0.18.0

GitCommit: fec3683

The installed version is 19.03.5, which is neither the latest release nor the version chosen in easzup.

To fix this, copy the desired Docker binaries into /etc/ansible/bin/:

[root@Master-1 ansible]#cd down/

[root@Master-1 down]#ll

total 792828

drwxrwxr-x 3 root root 4096 Apr 4 14:18 ./

drwxrwxr-x 12 root root 4096 Apr 4 14:47 ../

-rw------- 1 root root 203646464 Apr 4 14:14 calico_v3.4.4.tar

-rw------- 1 root root 40974848 Apr 4 14:15 coredns_1.6.6.tar

-rw------- 1 root root 126891520 Apr 4 14:15 dashboard_v2.0.0-rc3.tar

-rw-r--r-- 1 root root 63673514 Apr 4 14:06 docker-19.03.8.tgz # the downloaded Docker archive

-rw-rw-r-- 1 root root 1737 Feb 1 10:35 download.sh

-rw------- 1 root root 55390720 Apr 4 14:16 flannel_v0.11.0-amd64.tar

-rw------- 1 root root 152580096 Apr 4 14:18 kubeasz_2.2.0.tar

-rw------- 1 root root 40129536 Apr 4 14:16 metrics-scraper_v1.0.3.tar

-rw------- 1 root root 41199616 Apr 4 14:17 metrics-server_v0.3.6.tar

drwxr-xr-x 2 root root 4096 Oct 19 22:56 packages/

-rw------- 1 root root 754176 Apr 4 14:17 pause_3.1.tar

-rw------- 1 root root 86573568 Apr 4 14:17 traefik_v1.7.20.tar

[root@Master-1 down]#tar xvf docker-19.03.8.tgz

docker/

docker/containerd

docker/docker

docker/ctr

docker/dockerd

docker/runc

docker/docker-proxy

docker/docker-init

docker/containerd-shim

[root@Master-1 down]#cp ./docker/* /etc/ansible/bin/ # copy all extracted files from docker/ into /etc/ansible/bin/

[root@Master-1 down]#cd /etc/ansible

[root@Master-1 ansible]#./bin/docker version

Client: Docker Engine - Community

Version: 19.03.8

API version: 1.40

Go version: go1.12.17

Git commit: afacb8b7f0

Built: Wed Mar 11 01:22:56 2020

OS/Arch: linux/amd64

Experimental: false

[root@Master-1 ansible]#ansible-playbook 03.docker.yml # rerun the playbook

[root@node-1 ~]#docker version

Client: Docker Engine - Community

Version: 19.03.8

API version: 1.40

Go version: go1.12.17

Git commit: afacb8b7f0

Built: Wed Mar 11 01:22:56 2020

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 19.03.8

API version: 1.40 (minimum version 1.12)

Go version: go1.12.17

Git commit: afacb8b7f0

Built: Wed Mar 11 01:30:32 2020

OS/Arch: linux/amd64

Experimental: false

4.3.3.1 Installing the certificate

[root@Master-1 ansible]#mkdir /etc/docker/certs.d/linux-harbor.magedu.net -p # the directory must be named after the Harbor domain

[root@Harbor certs]#scp harbor-ca.crt 172.20.5.101:/etc/docker/certs.d/linux-harbor.magedu.net

[root@Master-1 ansible]#docker login linux-harbor.magedu.net

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

4.3.3.2 Distributing the certificate:

[root@Master-1 ansible]#cp /etc/docker/certs.d/linux-harbor.magedu.net/harbor-ca.crt /opt/ # keep a copy of the certificate for the script below

[root@Master-1 ~]#vim ssh-harbor.sh

[root@Master-1 ~]#cat ssh-harbor.sh

#!/bin/bash

# target host list

IP="

172.20.5.101

172.20.5.102

172.20.5.103

172.20.5.107

172.20.5.108

172.20.5.109

172.20.5.201

172.20.5.202

172.20.5.203

"

for node in ${IP};do

# sshpass -p 123456 ssh-copy-id ${node} -o StrictHostKeyChecking=no

# if [ $? -eq 0 ];then

# echo "${node}: key copied"

# else

# echo "${node}: key copy failed"

# fi

ssh ${node} "mkdir /etc/docker/certs.d/linux-harbor.magedu.net -p"

scp /opt/harbor-ca.crt ${node}:/etc/docker/certs.d/linux-harbor.magedu.net/harbor-ca.crt

done

[root@Master-1 ~]#vim ssh-harbor.sh

[root@Master-1 ~]#bash ssh-harbor.sh

harbor-ca.crt 100% 1151 2.4MB/s 00:00

harbor-ca.crt 100% 1151 2.1MB/s 00:00

harbor-ca.crt 100% 1151 1.3MB/s 00:00

harbor-ca.crt 100% 1151 2.1MB/s 00:00

harbor-ca.crt 100% 1151 1.5MB/s 00:00

harbor-ca.crt 100% 1151 2.0MB/s 00:00

harbor-ca.crt 100% 1151 2.1MB/s 00:00

harbor-ca.crt 100% 1151 2.0MB/s 00:00

harbor-ca.crt 100% 1151 2.2MB/s 00:00

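4.3.3.3 Copying the hosts file

The script distributes /opt/hosts, so Master-1's /etc/hosts must already have been copied to /opt/hosts.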

[root@Master-1 ~]#cat ssh-hosts.sh

#!/bin/bash

# target host list

IP="

172.20.5.101

172.20.5.102

172.20.5.103

172.20.5.107

172.20.5.108

172.20.5.109

172.20.5.201

172.20.5.202

172.20.5.203

"

for node in ${IP};do

# sshpass -p 123456 ssh-copy-id ${node} -o StrictHostKeyChecking=no

# if [ $? -eq 0 ];then

# echo "${node}: key copied"

# else

# echo "${node}: key copy failed"

# fi

# ssh ${node} "mkdir /etc/docker/certs.d/linux-harbor.magedu.net -p"

# scp /opt/harbor-ca.crt ${node}:/etc/docker/certs.d/linux-harbor.magedu.net/harbor-ca.crt

scp /opt/hosts ${node}:/etc/hosts

echo "${node} hosts file copy finish"

done

[root@Master-1 ~]#vim ssh-hosts.sh

[root@Master-1 ~]#bash ssh-hosts.sh

hosts 100% 434 1.1MB/s 00:00

172.20.5.101 hosts file copy finish

hosts 100% 434 366.0KB/s 00:00

172.20.5.102 hosts file copy finish

hosts 100% 434 681.6KB/s 00:00

172.20.5.103 hosts file copy finish

hosts 100% 434 502.9KB/s 00:00

172.20.5.107 hosts file copy finish

hosts 100% 434 803.5KB/s 00:00

172.20.5.108 hosts file copy finish

hosts 100% 434 808.9KB/s 00:00

172.20.5.109 hosts file copy finish

hosts 100% 434 563.1KB/s 00:00

172.20.5.201 hosts file copy finish

hosts 100% 434 805.5KB/s 00:00

172.20.5.202 hosts file copy finish

hosts 100% 434 667.1KB/s 00:00

Check on the other hosts that the hosts file was synchronized. node-1 is used as the example:

[root@node-1 ~]#cat /etc/hosts

127.0.0.1 localhost

127.0.1.1 ubuntu.taotaobao.com ubuntu

# The following lines are desirable for IPv6 capable hosts

::1 localhost ip6-localhost ip6-loopback

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.20.5.101 Master-1

172.20.5.102 Master-2

172.20.5.103 Master-3

172.20.5.107 node-1

172.20.5.108 node-2

172.20.5.109 node-3

172.20.5.201 etcd-1

172.20.5.202 etcd-2

172.20.5.203 etcd-3

172.20.5.106 linux-harbor.magedu.net

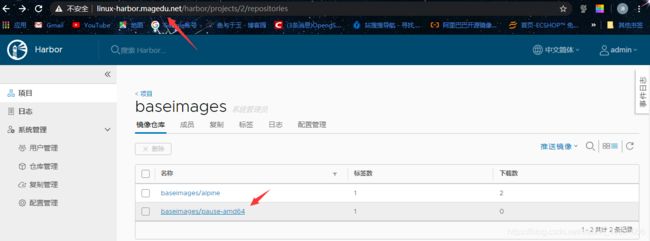

4.3.3.4 Testing that nodes can pull images from Harbor

[root@Master-1 ~]#docker pull alpine

[root@Master-1 ~]#docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

alpine latest a187dde48cd2 11 days ago 5.6MB

[root@Master-1 ~]#docker tag alpine linux-harbor.magedu.net/baseimages/alpine:latest

[root@Master-1 ~]#docker push linux-harbor.magedu.net/baseimages/alpine

The push refers to repository [linux-harbor.magedu.net/baseimages/alpine]

beee9f30bc1f: Pushed

latest: digest: sha256:cb8a924afdf0229ef7515d9e5b3024e23b3eb03ddbba287f4a19c6ac90b8d221 size: 528

On a node, try pulling the image from Harbor:

[root@node-1 ~]#docker pull linux-harbor.magedu.net/baseimages/alpine

Using default tag: latest

latest: Pulling from baseimages/alpine

Digest: sha256:cb8a924afdf0229ef7515d9e5b3024e23b3eb03ddbba287f4a19c6ac90b8d221

Status: Image is up to date for linux-harbor.magedu.net/baseimages/alpine:latest

linux-harbor.magedu.net/baseimages/alpine:latest

The pull succeeded.

4.2.4 Running 04.kube-master.yml

[root@Master-1 ~]#cd /etc/ansible/

[root@Master-1 ansible]#ansible-playbook 04.kube-master.yml

[root@Master-1 ansible]#kubectl get node

NAME STATUS ROLES AGE VERSION

172.20.5.101 Ready,SchedulingDisabled master 107s v1.17.2 # scheduling is disabled on masters

172.20.5.102 Ready,SchedulingDisabled master 107s v1.17.2

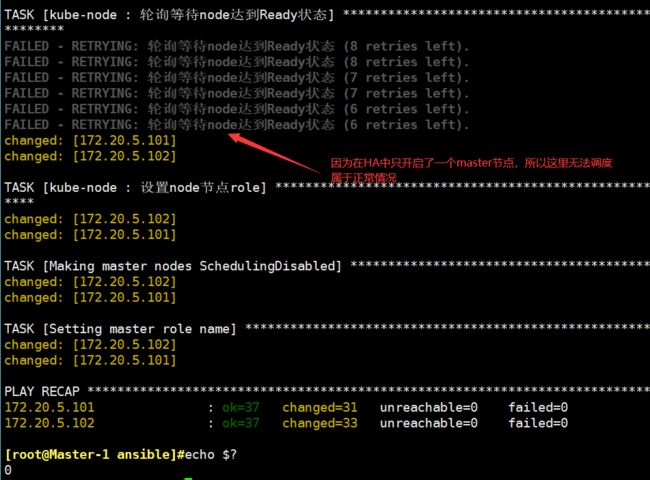

4.2.5 Running 05.kube-node.yml

Check whether the image referenced in the role defaults can actually be pulled:

[root@Master-1 ansible]#vim roles/kube-node/defaults/main.yml

SANDBOX_IMAGE: "mirrorgooglecontainers/pause-amd64:3.1"

# pull the image

[root@Master-1 ansible]#docker pull mirrorgooglecontainers/pause-amd64:3.1

3.1: Pulling from mirrorgooglecontainers/pause-amd64

Digest: sha256:59eec8837a4d942cc19a52b8c09ea75121acc38114a2c68b98983ce9356b8610

Status: Image is up to date for mirrorgooglecontainers/pause-amd64:3.1

docker.io/mirrorgooglecontainers/pause-amd64:3.1

[root@Master-1 ansible]#docker tag mirrorgooglecontainers/pause-amd64:3.1 linux-harbor.magedu.net/baseimages/pause-amd64:3.1

[root@Master-1 ansible]#docker push linux-harbor.magedu.net/baseimages/pause-amd64:3.1

The push refers to repository [linux-harbor.magedu.net/baseimages/pause-amd64]

e17133b79956: Pushed

3.1: digest: sha256:fcaff905397ba63fd376d0c3019f1f1cb6e7506131389edbcb3d22719f1ae54d size: 527

# the image is now in Harbor

[root@Master-1 ansible]#vim roles/kube-node/defaults/main.yml

#SANDBOX_IMAGE: "mirrorgooglecontainers/pause-amd64:3.1" # commented out

SANDBOX_IMAGE: "linux-harbor.magedu.net/baseimages/pause-amd64:3.1"

# run the playbook

[root@Master-1 ansible]#ansible-playbook 05.kube-node.yml

[root@Master-1 ansible]#kubectl get node

NAME STATUS ROLES AGE VERSION

172.20.5.101 Ready,SchedulingDisabled master 17m v1.17.2

172.20.5.102 Ready,SchedulingDisabled master 17m v1.17.2

172.20.5.107 Ready node 58s v1.17.2

172.20.5.108 Ready node 61s v1.17.2
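After a playbook run it is convenient to fail fast when a node did not come up. The sketch below is one way to do that, assuming the column layout of `kubectl get node --no-headers` (name first, status second); `not_ready_nodes` is a hypothetical helper name. A status such as `Ready,SchedulingDisabled` still counts as ready.

```shell
#!/bin/bash
# Hypothetical helper: read `kubectl get node --no-headers` output on
# stdin and print the names of nodes whose status does not start with "Ready".
not_ready_nodes() {
    awk '$2 !~ /^Ready/ { print $1 }'
}
```

For example, `kubectl get node --no-headers | not_ready_nodes` prints nothing for the cluster state shown above.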

4.2.5.1 How node-haproxy works

While the role runs, haproxy is installed on every node.

[root@node-1 ~]#vim /etc/haproxy/haproxy.cfg # view the haproxy configuration on the node

global

log /dev/log local1 warning

chroot /var/lib/haproxy

user haproxy

group haproxy

daemon

nbproc 1

defaults

log global

timeout connect 5s

timeout client 10m

timeout server 10m

listen kube-master

bind 127.0.0.1:6443 # local listening address and port

mode tcp

option tcplog

option dontlognull

option dontlog-normal

balance roundrobin

server 172.20.5.101 172.20.5.101:6443 check inter 10s fall 2 rise 2 weight 1

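# backend master hosts that traffic is forwarded to

server 172.20.5.102 172.20.5.102:6443 check inter 10s fall 2 rise 2 weight 1

Services on the node, such as kubelet, connect to 127.0.0.1:6443, and the node-local haproxy then forwards the traffic to the backend master hosts.

[root@node-1 ~]#vim /etc/kubernetes/kubelet.kubeconfig

server: https://127.0.0.1:6443 # line 5: the local haproxy listening address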

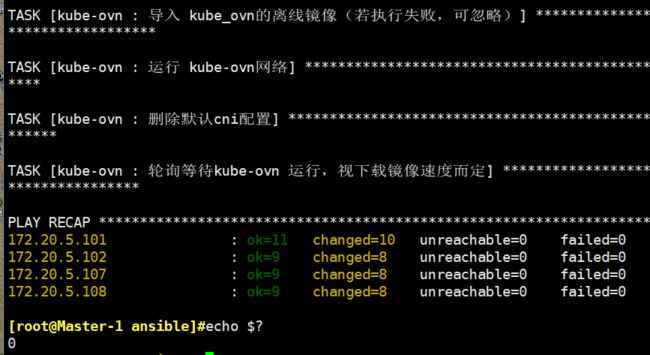

4.2.6 Running 06.network.yml

[root@Master-1 ansible]#ansible-playbook 06.network.yml

4.2.6.1 Testing the network plugin

Create a few test pods to check connectivity:

[root@Master-1 ansible]# kubectl run net-test --image=alpine --replicas=4 sleep 360000

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future

version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/net-test created

[root@Master-1 ansible]#kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

net-test-6c94768685-hpgvp 1/1 Running 0 43s 10.20.3.4 172.20.5.107 <none> <none>

net-test-6c94768685-hslmg 1/1 Running 0 43s 10.20.2.4 172.20.5.108 <none> <none>

net-test-6c94768685-mn68g 1/1 Running 0 43s 10.20.3.5 172.20.5.107 <none> <none>

net-test-6c94768685-qrrqj 1/1 Running 0 43s 10.20.2.5 172.20.5.108 <none> <none>

[root@Master-1 ansible]#kubectl exec -it net-test-6c94768685-hslmg sh # open a shell in the pod

/ # ifconfig

eth0 Link encap:Ethernet HWaddr FE:09:87:A9:7C:33

inet addr:10.20.2.4 Bcast:0.0.0.0 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1450 Metric:1

RX packets:13 errors:0 dropped:0 overruns:0 frame:0

TX packets:1 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:950 (950.0 B) TX bytes:42 (42.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ # ping 10.20.3.5 # test connectivity to a pod on the other node

PING 10.20.3.5 (10.20.3.5): 56 data bytes

64 bytes from 10.20.3.5: seq=0 ttl=62 time=0.640 ms

64 bytes from 10.20.3.5: seq=1 ttl=62 time=0.406 ms

^C

--- 10.20.3.5 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.406/0.523/0.640 ms

/ # ping 223.6.6.6 # test connectivity from the pod to an external address

PING 223.6.6.6 (223.6.6.6): 56 data bytes

64 bytes from 223.6.6.6: seq=0 ttl=127 time=13.160 ms

64 bytes from 223.6.6.6: seq=1 ttl=127 time=15.784 ms

^C

--- 223.6.6.6 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 13.160/14.472/15.784 ms

/ #

/ # ping www.baidu.com # fails because cluster DNS has not been deployed yet

ping: bad address 'www.baidu.com'