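Text processing: building an LSTM neural network with Keras for sentiment analysis

This walkthrough uses Keras components to build an LSTM neural network and train it on a Chinese sentiment-analysis task.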

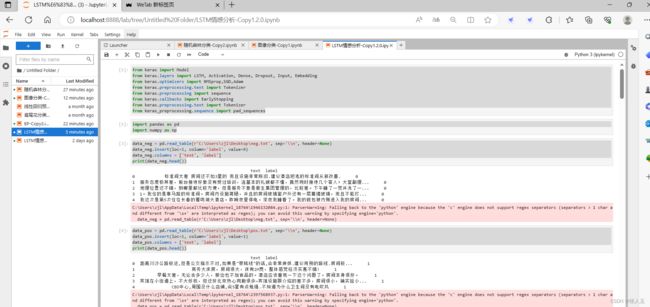

from keras import Model

from keras.layers import LSTM, Activation, Dense, Dropout, Input, Embedding

from keras.optimizers import RMSprop,SGD,Adam

from keras.preprocessing.text import Tokenizer

from keras.preprocessing import sequence

from keras.callbacks import EarlyStopping

from keras_preprocessing.sequence import pad_sequences

import pandas as pd

import numpy as np

data_neg = pd.read_table(r'C:\Users\zjl\Desktop\neg.txt', sep='\\n', header=None)

data_neg.insert(loc=1, column='label', value=0)

data_neg.columns = ['text', 'label']

print(data_neg.head())

data_pos = pd.read_table(r'C:\Users\zjl\Desktop\pos.txt', sep='\\n', header=None)

data_pos.insert(loc=1, column='label', value=1)

data_pos.columns = ['text', 'label']

print(data_pos.head())

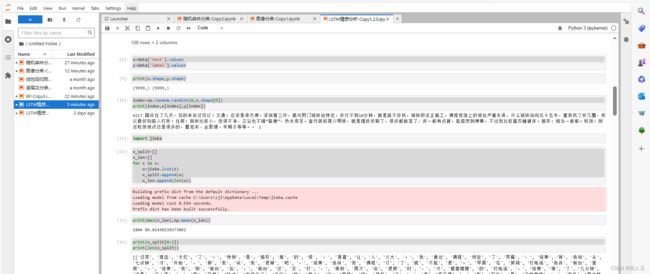

data=pd.concat([data_neg,data_pos])

np.random.seed(2023)

data=data.sample(frac=1.0)

data.head(100)

x=data['text'].values

y=data['label'].values

print(x.shape,y.shape)

index=np.random.randint(0,x.shape[0])

print(index,x[index],y[index])

import jieba

x_split=[]

x_len=[]

# Segment each review into words with jieba and record its token count
for s in x:
    w=jieba.lcut(s)
    x_split.append(w)
    x_len.append(len(w))

print(max(x_len),np.mean(x_len))

print(x_split[0:2])

print(len(x_split))

from keras.utils import to_categorical

y_one=to_categorical(y,2)
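As a rough illustration of what `to_categorical(y, 2)` produces, the sketch below one-hot encodes integer labels with plain NumPy. The helper name `one_hot` is hypothetical and not part of Keras; it only mirrors the idea for the two classes used here (0 = negative, 1 = positive).

```python
import numpy as np

def one_hot(labels, num_classes):
    """Return an array of shape (len(labels), num_classes) with a single 1 per row."""
    labels = np.asarray(labels, dtype=int)
    # Row i of the identity matrix is the one-hot vector for class i.
    return np.eye(num_classes, dtype="float32")[labels]

# Label convention from the data-loading step: 0 = negative, 1 = positive.
encoded = one_hot([0, 1, 1], 2)
print(encoded)
```

Each row has exactly one non-zero entry, which is the shape `categorical_crossentropy` expects for its targets.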

from sklearn.model_selection import train_test_split

sentences_train, sentences_test, y_train, y_test = train_test_split(x_split, y_one, test_size=0.2, random_state=2023)

# The inputs are already lists of tokens, so the split argument is not used when fitting
tokenizer = Tokenizer(num_words=10000,split=',')

tokenizer.fit_on_texts(sentences_train)

X_train = tokenizer.texts_to_sequences(sentences_train)

X_test = tokenizer.texts_to_sequences(sentences_test)

vocab_size = len(tokenizer.word_index) + 1

print(sentences_train[2])

print(X_train[2])

print(tokenizer.sequences_to_texts([X_train[2]]))

print(y_train[2])
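To make the indexing step less opaque, here is a simplified pure-Python sketch of frequency-based word indexing, assuming the inputs are already tokenized. The functions `build_word_index` and `to_sequences` are hypothetical stand-ins, not the Keras implementation; they leave out filtering, lowercasing, and out-of-vocabulary tokens.

```python
from collections import Counter

def build_word_index(tokenized_texts):
    """Rank tokens by frequency; the most frequent token gets index 1."""
    counts = Counter(tok for text in tokenized_texts for tok in text)
    # most_common() sorts by count and keeps first-seen order for ties.
    return {tok: i for i, (tok, _) in enumerate(counts.most_common(), start=1)}

def to_sequences(tokenized_texts, word_index, num_words=None):
    """Map tokens to indices, dropping unknown tokens and indices >= num_words."""
    result = []
    for text in tokenized_texts:
        ids = [word_index[t] for t in text if t in word_index]
        if num_words is not None:
            ids = [i for i in ids if i < num_words]
        result.append(ids)
    return result

train = [["这", "部", "电影", "很", "好"], ["电影", "很", "差"]]
word_index = build_word_index(train)
print(word_index)
print(to_sequences([["电影", "很", "精彩"]], word_index))
```

Index 0 is never assigned to a word, which is why the vocabulary size above is computed as `len(tokenizer.word_index) + 1`: the extra slot is reserved for padding.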

maxlen=150

X_train = pad_sequences(X_train, padding='post', maxlen=maxlen)

X_test = pad_sequences(X_test, padding='post', maxlen=maxlen)

len(X_train[0])

X_train[2]
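One detail worth spelling out: `padding='post'` only controls where zeros are added. Because `truncating` is left at its default of `'pre'`, reviews longer than `maxlen` lose tokens from the beginning. The sketch below imitates that behavior in plain Python; `pad_post` is a hypothetical helper, not a Keras function.

```python
def pad_post(sequences, maxlen, value=0):
    """Pad at the end and truncate from the front, like padding='post', truncating='pre'."""
    padded = []
    for seq in sequences:
        kept = list(seq)[-maxlen:]  # keep only the last maxlen tokens
        padded.append(kept + [value] * (maxlen - len(kept)))
    return padded

# The short sequence is padded with zeros; the long one keeps its tail.
print(pad_post([[1, 2], [1, 2, 3, 4, 5]], maxlen=3))
```

If the start of a review matters more than its end, passing `truncating='post'` to `pad_sequences` would keep the first `maxlen` tokens instead.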

## Define the LSTM model

inputs = Input(name='inputs', shape=[maxlen])

x = Embedding(output_dim=128, input_dim=vocab_size, input_length=maxlen)(inputs)

x = LSTM(128)(x)

x = Dropout(0.2)(x)

x = Dense(32)(x)

x = Activation('relu')(x)

x = Dropout(0.2)(x)

x = Dense(2)(x)

predictions = Activation('softmax')(x)

model = Model(inputs=inputs, outputs=predictions)

model.summary()

# Adam optimizer; recent Keras versions expect learning_rate rather than lr
optimizer = Adam(learning_rate=0.01)

model.compile(loss="categorical_crossentropy",optimizer=optimizer,metrics=["accuracy"])
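The parameter counts that `model.summary()` prints can be checked by hand. The sketch below assumes the standard formulas for embedding, LSTM (with bias), and dense layers, and uses a hypothetical `vocab_size` of 20000, since the real value depends on the corpus.

```python
def embedding_params(vocab_size, dim):
    # One dim-sized vector per vocabulary entry.
    return vocab_size * dim

def lstm_params(input_dim, units):
    # Four gates, each with input weights, recurrent weights, and a bias.
    return 4 * (units * (input_dim + units) + units)

def dense_params(input_dim, units):
    # Weight matrix plus one bias per output unit.
    return input_dim * units + units

vocab_size = 20000  # hypothetical; the real value is len(tokenizer.word_index) + 1

layers = {
    "embedding": embedding_params(vocab_size, 128),
    "lstm": lstm_params(128, 128),
    "dense_32": dense_params(128, 32),
    "dense_2": dense_params(32, 2),
}
total = sum(layers.values())
print(layers, total)
```

With a vocabulary of this size the embedding table holds most of the parameters, so the vocabulary size largely determines how big the model is.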

## Train the model

model_fit = model.fit(
    X_train, y_train,
    batch_size=128,
    epochs=20,
    validation_split=0.2,
    # Stop training once val_loss stops improving
    callbacks=[EarlyStopping(monitor='val_loss')],
)
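The comment in the training call refers to stopping once the validation loss stops improving. As a simplified illustration of that idea (not the exact Keras `EarlyStopping` implementation), the hypothetical function below scans a list of per-epoch validation losses and reports the epoch at which patience-based stopping would trigger.

```python
def early_stop_epoch(val_losses, patience=0, min_delta=0.0):
    """Return the 0-based epoch where training would stop, or None if it never stops."""
    best = float("inf")
    wait = 0  # consecutive epochs without improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None

# Made-up loss values, for illustration only.
losses = [0.9, 0.7, 0.6, 0.65, 0.64, 0.5]
print(early_stop_epoch(losses, patience=0))
print(early_stop_epoch(losses, patience=2))
```

With these made-up losses, a patience of 2 stops just before the loss would have dropped again at the last epoch. That is the usual trade-off when tuning `patience`.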

model_fit.history

model.evaluate(X_test,y_test)

sentences=input("Enter the Chinese text to analyze: ")

sentences_input=jieba.lcut(sentences)

inputs = tokenizer.texts_to_sequences([sentences_input])

inputs_pad=pad_sequences(inputs, padding='post', maxlen=maxlen)

y_pred=model.predict(inputs_pad)

p=np.argmax(y_pred,axis=-1)

print(p)
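The printed value is a class index, which is not very readable on its own. A minimal sketch of turning softmax outputs into labels follows, assuming the convention from the data-loading step (`neg.txt` labeled 0, `pos.txt` labeled 1). The names `LABELS` and `decode` are hypothetical.

```python
import numpy as np

LABELS = {0: "negative", 1: "positive"}  # neg.txt -> 0, pos.txt -> 1

def decode(probabilities):
    """Turn rows of class probabilities into (label, confidence) pairs."""
    probs = np.asarray(probabilities)
    classes = probs.argmax(axis=-1)
    return [(LABELS[int(c)], float(p[c])) for c, p in zip(classes, probs)]

# Made-up probabilities standing in for model.predict(...) output.
print(decode([[0.2, 0.8], [0.9, 0.1]]))
```

In the script above, `decode(y_pred)` would take the place of the bare `np.argmax` call.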