Linear Regression in Python: A Script

Regression problems

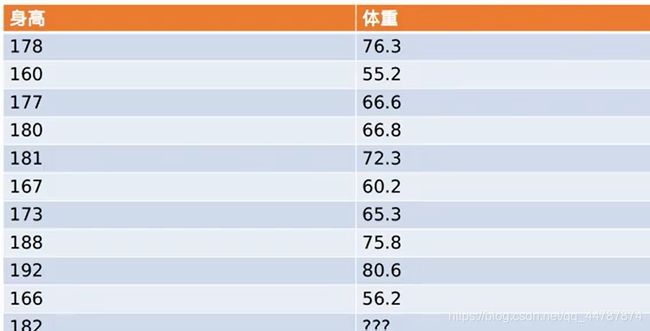

We will use the following example:

Function: f(feature) -> target value

f(height) -> weight

Fitting

Prediction

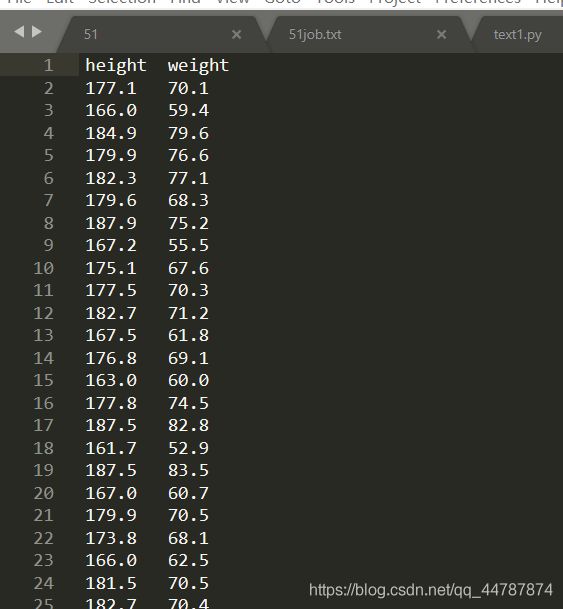

First, put the data file to be analyzed in the same folder as the Python script.

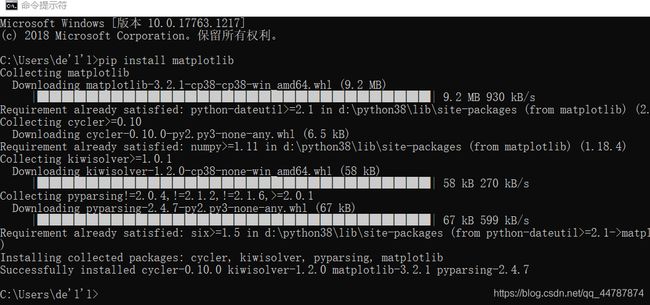

Next, install a third-party library to visualize the data:

pip install matplotlib

Python code

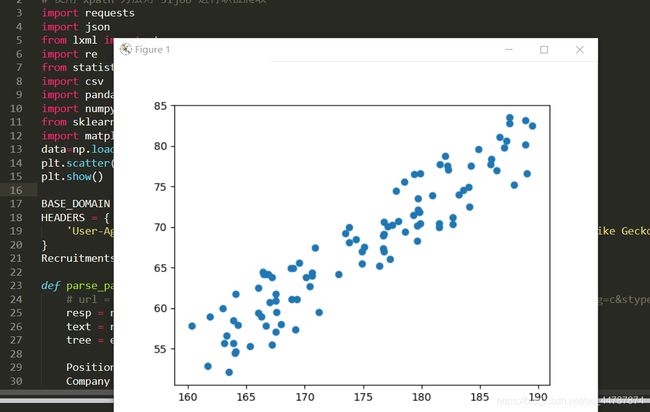

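    import numpy as np
    import matplotlib.pyplot as plt

    # Load the tab-separated data, skipping the first row of the file
    data = np.loadtxt(r'hw.txt', delimiter='\t', skiprows=1)

    # Scatter plot: column 0 on the x-axis, column 1 on the y-axis
    plt.scatter(data[:, 0], data[:, 1])
    plt.show()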

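Result

Analysis

The scatter plot shows that weight increases roughly linearly with height.

Linear regression

y=wx+b

We only need to find w and b.

How do we find them?

For each sample, the value predicted by the function differs from the actual value by an error: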

76.3*w+b-178

55.2*w+b-160

…

Square all of these errors and add them up; the result is the loss function loss(w,b). We therefore need the w and b that minimize the loss.
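Written out, the loss might look as follows. This is a sketch under assumptions: N is the number of samples, (x_i, y_i) is the i-th sample, and the sum is divided by N to give a mean (as the script's computer_error function does), which does not change the minimizing w and b.

$$
\mathrm{loss}(w, b) = \frac{1}{N} \sum_{i=1}^{N} \bigl(y_i - (w x_i + b)\bigr)^2
$$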

We find them with gradient descent.
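For reference, the partial derivatives that gradient descent needs can be written out as below. This assumes the loss is the mean squared error $\frac{1}{N}\sum_i (y_i - (w x_i + b))^2$, and it uses $\eta$ for the learning rate (a symbol introduced here; the script calls it learning_rate and names the slope m rather than w).

$$
\frac{\partial\,\mathrm{loss}}{\partial b} = -\frac{2}{N} \sum_{i=1}^{N} \bigl(y_i - (w x_i + b)\bigr),
\qquad
\frac{\partial\,\mathrm{loss}}{\partial w} = -\frac{2}{N} \sum_{i=1}^{N} x_i \bigl(y_i - (w x_i + b)\bigr)
$$

$$
b \leftarrow b - \eta \, \frac{\partial\,\mathrm{loss}}{\partial b},
\qquad
w \leftarrow w - \eta \, \frac{\partial\,\mathrm{loss}}{\partial w}
$$

Each iteration moves b and w a small step against the gradient, so the loss decreases as long as the step size is small enough.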

Initialization:

    def init_data():
        # Load the tab-separated samples: column 0 is x (height), column 1 is y (weight)
        data = np.loadtxt('hw.txt', delimiter='\t')
        return data

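    def linear_regression():
        learning_rate = 0.01  # step size; lower it or rescale x if the loss overflows
        # m is the slope (w in y = wx + b), b is the intercept
        initial_b = 0
        initial_m = 0
        num_iter = 1000  # number of iterations
        data = init_data()
        [b, m] = optimizer(data, initial_b, initial_m, learning_rate, num_iter)
        plot_data(data, b, m)
        print(b, m)
        return b, m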

def optimizer(data, initial_b, initial_m, learning_rate, num_iter):

b = initial_b

m = initial_m

for i in range(num_iter):

b, m = compute_gradient(b, m, data, learning_rate)

# after = computer_error(b, m, data)

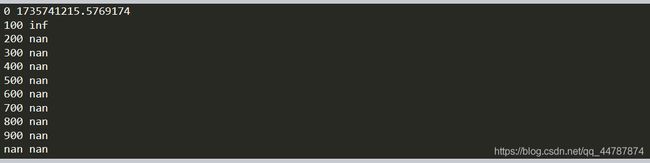

if i % 100 == 0:

print(i, computer_error(b, m, data)) # 损失函数,即误差

return [b, m]

    def compute_gradient(b_cur, m_cur, data, learning_rate):
        b_gradient = 0
        m_gradient = 0
        N = float(len(data))
        # Accumulate the partial derivatives (the gradient) over all samples
        for i in range(0, len(data)):
            x = data[i, 0]
            y = data[i, 1]
            # Partial derivative of the loss with respect to b
            b_gradient += -(2 / N) * (y - ((m_cur * x) + b_cur))
            # Partial derivative of the loss with respect to m
            m_gradient += -(2 / N) * x * (y - ((m_cur * x) + b_cur))
        # Step against the gradient
        new_b = b_cur - (learning_rate * b_gradient)
        new_m = m_cur - (learning_rate * m_gradient)
        return [new_b, new_m]

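    def computer_error(b, m, data):
        x = data[:, 0]
        y = data[:, 1]
        # Mean of the squared residuals over all samples
        totalError = np.sum((y - m * x - b) ** 2, axis=0)
        return totalError / len(data)

    def plot_data(data, b, m):
        # plot_data is called by linear_regression but is not defined in the
        # original listing; this is a minimal assumed version that draws the
        # samples and the fitted line y = m * x + b.
        x = data[:, 0]
        y = data[:, 1]
        plt.scatter(x, y)
        plt.plot(x, m * x + b, 'r')
        plt.show()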

    if __name__ == '__main__':
        linear_regression()
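One way to sanity-check a gradient-descent fit is to compare it with a closed-form least-squares fit. The sketch below is not part of the original script: it uses made-up synthetic data instead of hw.txt, a hypothetical helper named gradient_step with the same gradient formulas in vectorized form, and np.polyfit as the reference.

```python
import numpy as np

def gradient_step(b, m, x, y, learning_rate):
    """One gradient-descent step for y = m * x + b under mean squared error."""
    n = len(x)
    residual = y - (m * x + b)
    b_gradient = -(2 / n) * np.sum(residual)
    m_gradient = -(2 / n) * np.sum(x * residual)
    return b - learning_rate * b_gradient, m - learning_rate * m_gradient

# Synthetic data (made up for illustration): y = 3x + 2 plus small noise
rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, 200)
y = 3.0 * x + 2.0 + rng.normal(0.0, 0.1, 200)

b, m = 0.0, 0.0
for _ in range(1000):
    b, m = gradient_step(b, m, x, y, learning_rate=0.1)

# Closed-form least-squares fit for comparison; polyfit returns [slope, intercept]
slope, intercept = np.polyfit(x, y, 1)
print(m, slope)
print(b, intercept)
```

If the two pairs of numbers agree closely, the gradient code and the chosen step size are probably fine for that data; a large gap suggests too few iterations or an unsuitable learning rate.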

Problems

1. ValueError: could not convert string to float: '177.1\t70.1'

Fix: the values in hw.txt are separated by tabs,

so change the code to:

data = np.loadtxt('hw.txt', delimiter='\t')
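The effect of the delimiter can be reproduced without the file. The sketch below is an illustration, not the author's code: it feeds two sample rows (laid out like hw.txt, values chosen for illustration) through io.StringIO, first with a deliberately wrong delimiter and then with the tab delimiter.

```python
import io
import numpy as np

# Two illustrative rows in the tab-separated layout of hw.txt
text = "177.1\t70.1\n160.0\t55.2\n"

# A delimiter that does not match the file leaves each row as one
# field that cannot be converted to a float
try:
    np.loadtxt(io.StringIO(text), delimiter=',')
    wrong_delimiter_failed = False
except ValueError as err:
    wrong_delimiter_failed = True
    print(err)

# With the tab delimiter each row splits into two floats
data = np.loadtxt(io.StringIO(text), delimiter='\t')
print(data.shape)  # (2, 2)
```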

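2. RuntimeWarning: overflow encountered in square

An overflow occurred: the values grew too large to be represented as floating-point numbers. With gradient descent this usually means the iteration is diverging, typically because the learning rate is too large for the scale of the data; lowering learning_rate or rescaling the x values should help.

Note: nan stands for Not a Number. It is not equal to 0, and because it is not a number, any calculation involving it cannot produce a number. inf denotes positive infinity.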
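A possible way to avoid the overflow is to standardize x before running gradient descent. The sketch below rests on assumptions: the data is made up (heights in cm, weights in kg) rather than read from hw.txt, and fit_line and mse are hypothetical helpers using the same gradient formulas as the script. It runs the same learning rate and iteration count on raw and on standardized heights.

```python
import numpy as np

def fit_line(x, y, learning_rate, num_iter):
    """Gradient descent on mean squared error for y = m * x + b."""
    b, m = 0.0, 0.0
    n = len(x)
    for _ in range(num_iter):
        residual = y - (m * x + b)
        b_gradient = -(2 / n) * np.sum(residual)
        m_gradient = -(2 / n) * np.sum(x * residual)
        b, m = b - learning_rate * b_gradient, m - learning_rate * m_gradient
    return b, m

def mse(b, m, x, y):
    """Mean squared error of the line y = m * x + b."""
    return np.mean((y - m * x - b) ** 2)

# Made-up data: heights in cm and weights in kg
rng = np.random.default_rng(0)
height = rng.uniform(150.0, 190.0, 100)
weight = 0.9 * height - 90.0 + rng.normal(0.0, 3.0, 100)

# Raw heights: the iterates blow up to inf/nan.
# Warnings are silenced here only so the demonstration prints cleanly.
with np.errstate(over='ignore', invalid='ignore'):
    b_raw, m_raw = fit_line(height, weight, learning_rate=0.01, num_iter=1000)
    loss_raw = mse(b_raw, m_raw, height, weight)

# Standardized heights: same settings, but now the loss converges
mean, std = height.mean(), height.std()
scaled = (height - mean) / std
b_s, m_s = fit_line(scaled, weight, learning_rate=0.01, num_iter=1000)
loss_scaled = mse(b_s, m_s, scaled, weight)

# Convert back to the original units: weight = slope * height + intercept
slope = m_s / std
intercept = b_s - m_s * mean / std
print(loss_raw, loss_scaled)
print(slope, intercept)
```

Standardizing keeps the gradient components on a similar scale, so a step size such as 0.01 stays stable; the last two lines map the fitted parameters back to centimeters and kilograms.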

Addendum: to suppress these warnings (this only hides the messages; the results can still be inf or nan):

import warnings

warnings.filterwarnings('ignore')
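An alternative to hiding the warning, offered here as a suggestion rather than something the original script does, is to turn NumPy overflow into an exception inside a limited scope with np.errstate, so that a diverging computation stops where it first goes wrong. The array below is made up purely to trigger an overflow.

```python
import numpy as np

# 1e200 squared does not fit in a float64, so squaring it overflows
values = np.array([1e200, 2.0])

try:
    # Inside this block, overflow raises FloatingPointError instead of warning
    with np.errstate(over='raise'):
        np.square(values)
    overflowed = False
except FloatingPointError as err:
    overflowed = True
    print("overflow detected:", err)
```

Outside the with block NumPy returns to its previous error handling, so the rest of the program is unaffected.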