Keras Beginner Notes 2: Let's Play with a Convolution Layer ~ Classifying MNIST with a Single Conv Layer!

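Hello hello~ Following on from the earlier posts:

Keras deep learning beginner notes, appendix 1: let's see how many ways there are to get a finished model running (fit and train on batch)

https://blog.csdn.net/timcanby/article/details/103644089

Getting started with Keras from code examples 1: classifying handwritten MNIST digits with LeNet

https://blog.csdn.net/timcanby/article/details/103620371

I'm now putting together the second beginner note in this start-from-zero series: let's classify MNIST with a single convolution layer.

(If you take the code, please leave a little star on GitHub!)

As you probably know, the fully connected layer is what attaches class information to the flattened data. What does that tell us? Given enough epochs, a single FC layer on its own can already handle MNIST classification. For the data-loading code, see (Getting started with Keras from code examples 1: classifying handwritten MNIST digits with LeNet). The model part of the code is: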

model = Sequential()

model.add(Flatten(name='flatten',input_shape=(28, 28, 1)))

model.add(Dense(10,activation='softmax',name='fc1'))

model.compile(loss=keras.losses.categorical_crossentropy,

optimizer=keras.optimizers.Adam(),

metrics=['accuracy'])

history = model.fit(x=X_train, y=Y_train, batch_size=batch_size, epochs=n_epochs)

Full code: https://github.com/timcanby/Kares__StudyNotes/blob/master/oneFCTest.py

The network structure is:

Layer (type) Output Shape Param #

=================================================================

flatten (Flatten) (None, 784) 0

_________________________________________________________________

fc1 (Dense) (None, 10) 7850

=================================================================

Total params: 7,850

Trainable params: 7,850

Non-trainable params: 0

_________________________________________________________________
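The 7,850 in the summary can be checked by hand: a fully connected layer holds one weight for every input-output pair plus one bias per output. Here is a minimal sketch of that arithmetic; the helper name `dense_params` is my own and not part of Keras.

```python
def dense_params(n_inputs, n_outputs):
    """Trainable parameters of a fully connected layer: weights plus biases."""
    return n_inputs * n_outputs + n_outputs

# Flatten has no parameters of its own; it only reshapes 28x28x1 into a vector.
flat_size = 28 * 28 * 1
fc1_params = dense_params(flat_size, 10)

print(flat_size, fc1_params)  # 784 7850
```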

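Simple and brutal. In other words, the data is read in, stretched into one dimension, and fed to a fully connected layer whose softmax outputs ten probabilities. Training for only one pass is definitely not enough. For example, epoch 1: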

Epoch 1/100

100/60000 [..............................] - ETA: 1:06 - loss: 14.8443 - acc: 0.0700

4200/60000 [=>............................] - ETA: 2s - loss: 13.9142 - acc: 0.1276

9200/60000 [===>..........................] - ETA: 1s - loss: 11.9051 - acc: 0.2503

14500/60000 [======>.......................] - ETA: 0s - loss: 10.2923 - acc: 0.3507

20700/60000 [=========>....................] - ETA: 0s - loss: 8.9224 - acc: 0.4362

26400/60000 [============>.................] - ETA: 0s - loss: 8.0683 - acc: 0.4899

31100/60000 [==============>...............] - ETA: 0s - loss: 7.5861 - acc: 0.5201

37200/60000 [=================>............] - ETA: 0s - loss: 6.9932 - acc: 0.5568

43300/60000 [====================>.........] - ETA: 0s - loss: 6.5184 - acc: 0.5865

48900/60000 [=======================>......] - ETA: 0s - loss: 6.1924 - acc: 0.6069

54000/60000 [==========================>...] - ETA: 0s - loss: 5.9157 - acc: 0.6243

59900/60000 [============================>.] - ETA: 0s - loss: 5.6578 - acc: 0.6403

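60000/60000 [==============================] - 1s 11us/step - loss: 5.6542 - acc: 0.6406

Why isn't that enough? Think about it: if your mind isn't on studying, can you remember something after being shown it just once?? Hahaha. But even without looking closely, we can still memorize something if we see it often enough. So after 100 epochs we see: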

100/60000 [..............................] - ETA: 1s - loss: 0.9671 - acc: 0.9400

3500/60000 [>.............................] - ETA: 0s - loss: 1.1487 - acc: 0.9280

7000/60000 [==>...........................] - ETA: 0s - loss: 1.1750 - acc: 0.9256

10900/60000 [====>.........................] - ETA: 0s - loss: 1.1918 - acc: 0.9249

16100/60000 [=======>......................] - ETA: 0s - loss: 1.2007 - acc: 0.9242

20600/60000 [=========>....................] - ETA: 0s - loss: 1.1664 - acc: 0.9266

24300/60000 [===========>..................] - ETA: 0s - loss: 1.1546 - acc: 0.9272

27700/60000 [============>.................] - ETA: 0s - loss: 1.1421 - acc: 0.9280

31400/60000 [==============>...............] - ETA: 0s - loss: 1.1560 - acc: 0.9270

34700/60000 [================>.............] - ETA: 0s - loss: 1.1615 - acc: 0.9267

38000/60000 [==================>...........] - ETA: 0s - loss: 1.1517 - acc: 0.9273

41300/60000 [===================>..........] - ETA: 0s - loss: 1.1367 - acc: 0.9283

44200/60000 [=====================>........] - ETA: 0s - loss: 1.1420 - acc: 0.9279

47500/60000 [======================>.......] - ETA: 0s - loss: 1.1386 - acc: 0.9282

51200/60000 [========================>.....] - ETA: 0s - loss: 1.1340 - acc: 0.9285

56100/60000 [===========================>..] - ETA: 0s - loss: 1.1314 - acc: 0.9287

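60000/60000 [==============================] - 1s 13us/step - loss: 1.1286 - acc: 0.9289

After seeing the data 100 times, the machine reports that it has memorized 92.89%, haha. Of course that is not today's topic. Today we look at how to learn once you have at least a little desire to learn.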

Why put it that way? You may have read that a convolution layer is a filter that extracts local detail, mapping the image into a highly abstract space before it is classified:

Image from: https://towardsdatascience.com/a-comprehensive-guide-to-convolutional-neural-networks-the-eli5-way-3bd2b1164a53

As that article puts it: The objective of the Convolution Operation is to extract the high-level features such as edges, from the input image.
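To make "extracting edges" concrete, here is a small pure-Python sketch of what one 3x3 filter does as it slides over an image. The toy image and the kernel values are hand-picked assumptions for illustration; in Keras the kernel values are learned during training rather than written by hand.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Toy 4x6 image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hand-picked kernel that responds to a dark-to-bright step from left to right.
edge_kernel = [[-1, 0, 1],
               [-1, 0, 1],
               [-1, 0, 1]]

response = conv2d_valid(image, edge_kernel)
for row in response:
    print(row)  # each row is [0, 3, 3, 0]
```

The output is zero over the flat regions and large only where the window straddles the boundary, which is the sense in which a filter picks out a local feature.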

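In other words, the machine is trying to abstract the image's contours and color information into a higher-dimensional space before classifying it, that is, trying to "understand the picture with its brain". So today we classify MNIST with a single convolution layer and see what is so magical about convolution. Today's model architecture is as follows: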

Building on the code above, add one import:

from keras.layers import Conv2D

Then the model part is:

model = Sequential()

model.add(Conv2D(16, kernel_size=(3,3), strides=(1, 1), padding='same', activation='tanh',name='conv',input_shape=(28, 28, 1)))

model.add(Flatten(name='flatten'))

model.add(Dense(10,activation='softmax',name='fc1'))

model.compile(loss=keras.losses.categorical_crossentropy,

optimizer=keras.optimizers.Adam(),

metrics=['accuracy'])

Then run it. With batchsize=100, you can see that a single epoch is all it takes:

100/60000 [..............................] - ETA: 1:35 - loss: 2.5583 - acc: 0.0700

800/60000 [..............................] - ETA: 15s - loss: 1.2045 - acc: 0.6087

1500/60000 [..............................] - ETA: 10s - loss: 0.8945 - acc: 0.7080

2200/60000 [>.............................] - ETA: 8s - loss: 0.7685 - acc: 0.7582

2800/60000 [>.............................] - ETA: 7s - loss: 0.7062 - acc: 0.7779

3500/60000 [>.............................] - ETA: 6s - loss: 0.6357 - acc: 0.8009

4200/60000 [=>............................] - ETA: 6s - loss: 0.5920 - acc: 0.8174

4900/60000 [=>............................] - ETA: 6s - loss: 0.5481 - acc: 0.8312

5600/60000 [=>............................] - ETA: 5s - loss: 0.5217 - acc: 0.8389

6300/60000 [==>...........................] - ETA: 5s - loss: 0.4976 - acc: 0.8460

7000/60000 [==>...........................] - ETA: 5s - loss: 0.4744 - acc: 0.8530

7700/60000 [==>...........................] - ETA: 5s - loss: 0.4502 - acc: 0.8614

8400/60000 [===>..........................] - ETA: 4s - loss: 0.4379 - acc: 0.8670

9000/60000 [===>..........................] - ETA: 4s - loss: 0.4287 - acc: 0.8698

9600/60000 [===>..........................] - ETA: 4s - loss: 0.4154 - acc: 0.8735

10200/60000 [====>.........................] - ETA: 4s - loss: 0.4035 - acc: 0.8769

10900/60000 [====>.........................] - ETA: 4s - loss: 0.3906 - acc: 0.8808

11600/60000 [====>.........................] - ETA: 4s - loss: 0.3851 - acc: 0.8830

12300/60000 [=====>........................] - ETA: 4s - loss: 0.3759 - acc: 0.8863

13000/60000 [=====>........................] - ETA: 4s - loss: 0.3666 - acc: 0.8892

13700/60000 [=====>........................] - ETA: 4s - loss: 0.3585 - acc: 0.8915

14400/60000 [======>.......................] - ETA: 4s - loss: 0.3498 - acc: 0.8942

15000/60000 [======>.......................] - ETA: 4s - loss: 0.3448 - acc: 0.8957

15700/60000 [======>.......................] - ETA: 3s - loss: 0.3383 - acc: 0.8978

16400/60000 [=======>......................] - ETA: 3s - loss: 0.3334 - acc: 0.8995

17100/60000 [=======>......................] - ETA: 3s - loss: 0.3268 - acc: 0.9016

17800/60000 [=======>......................] - ETA: 3s - loss: 0.3210 - acc: 0.9035

18500/60000 [========>.....................] - ETA: 3s - loss: 0.3159 - acc: 0.9049

19200/60000 [========>.....................] - ETA: 3s - loss: 0.3125 - acc: 0.9062

19900/60000 [========>.....................] - ETA: 3s - loss: 0.3088 - acc: 0.9074

20600/60000 [=========>....................] - ETA: 3s - loss: 0.3038 - acc: 0.9091

21300/60000 [=========>....................] - ETA: 3s - loss: 0.3005 - acc: 0.9103

22000/60000 [==========>...................] - ETA: 3s - loss: 0.2969 - acc: 0.9113

22700/60000 [==========>...................] - ETA: 3s - loss: 0.2929 - acc: 0.9126

23400/60000 [==========>...................] - ETA: 3s - loss: 0.2887 - acc: 0.9139

24100/60000 [===========>..................] - ETA: 3s - loss: 0.2858 - acc: 0.9146

24800/60000 [===========>..................] - ETA: 2s - loss: 0.2823 - acc: 0.9156

25500/60000 [===========>..................] - ETA: 2s - loss: 0.2779 - acc: 0.9168

26200/60000 [============>.................] - ETA: 2s - loss: 0.2750 - acc: 0.9176

26900/60000 [============>.................] - ETA: 2s - loss: 0.2724 - acc: 0.9186

27600/60000 [============>.................] - ETA: 2s - loss: 0.2696 - acc: 0.9195

28300/60000 [=============>................] - ETA: 2s - loss: 0.2674 - acc: 0.9203

29000/60000 [=============>................] - ETA: 2s - loss: 0.2655 - acc: 0.9210

29700/60000 [=============>................] - ETA: 2s - loss: 0.2638 - acc: 0.9214

30500/60000 [==============>...............] - ETA: 2s - loss: 0.2614 - acc: 0.9222

31200/60000 [==============>...............] - ETA: 2s - loss: 0.2590 - acc: 0.9229

31800/60000 [==============>...............] - ETA: 2s - loss: 0.2574 - acc: 0.9235

32500/60000 [===============>..............] - ETA: 2s - loss: 0.2553 - acc: 0.9240

33200/60000 [===============>..............] - ETA: 2s - loss: 0.2523 - acc: 0.9249

33900/60000 [===============>..............] - ETA: 2s - loss: 0.2503 - acc: 0.9255

34500/60000 [================>.............] - ETA: 2s - loss: 0.2479 - acc: 0.9263

35200/60000 [================>.............] - ETA: 2s - loss: 0.2463 - acc: 0.9267

35900/60000 [================>.............] - ETA: 1s - loss: 0.2454 - acc: 0.9270

36600/60000 [=================>............] - ETA: 1s - loss: 0.2440 - acc: 0.9275

37300/60000 [=================>............] - ETA: 1s - loss: 0.2432 - acc: 0.9276

38000/60000 [==================>...........] - ETA: 1s - loss: 0.2430 - acc: 0.9280

38700/60000 [==================>...........] - ETA: 1s - loss: 0.2413 - acc: 0.9285

39400/60000 [==================>...........] - ETA: 1s - loss: 0.2393 - acc: 0.9290

40100/60000 [===================>..........] - ETA: 1s - loss: 0.2375 - acc: 0.9296

40800/60000 [===================>..........] - ETA: 1s - loss: 0.2355 - acc: 0.9302

41500/60000 [===================>..........] - ETA: 1s - loss: 0.2336 - acc: 0.9307

42200/60000 [====================>.........] - ETA: 1s - loss: 0.2319 - acc: 0.9311

42900/60000 [====================>.........] - ETA: 1s - loss: 0.2302 - acc: 0.9317

43600/60000 [====================>.........] - ETA: 1s - loss: 0.2284 - acc: 0.9323

44300/60000 [=====================>........] - ETA: 1s - loss: 0.2274 - acc: 0.9324

45000/60000 [=====================>........] - ETA: 1s - loss: 0.2257 - acc: 0.9328

45600/60000 [=====================>........] - ETA: 1s - loss: 0.2244 - acc: 0.9331

46300/60000 [======================>.......] - ETA: 1s - loss: 0.2234 - acc: 0.9334

47000/60000 [======================>.......] - ETA: 1s - loss: 0.2226 - acc: 0.9335

47700/60000 [======================>.......] - ETA: 1s - loss: 0.2212 - acc: 0.9338

48300/60000 [=======================>......] - ETA: 0s - loss: 0.2201 - acc: 0.9341

49000/60000 [=======================>......] - ETA: 0s - loss: 0.2187 - acc: 0.9345

49700/60000 [=======================>......] - ETA: 0s - loss: 0.2176 - acc: 0.9348

50400/60000 [========================>.....] - ETA: 0s - loss: 0.2163 - acc: 0.9351

51100/60000 [========================>.....] - ETA: 0s - loss: 0.2149 - acc: 0.9356

51800/60000 [========================>.....] - ETA: 0s - loss: 0.2135 - acc: 0.9359

52500/60000 [=========================>....] - ETA: 0s - loss: 0.2125 - acc: 0.9362

53200/60000 [=========================>....] - ETA: 0s - loss: 0.2117 - acc: 0.9366

53800/60000 [=========================>....] - ETA: 0s - loss: 0.2107 - acc: 0.9369

54500/60000 [==========================>...] - ETA: 0s - loss: 0.2100 - acc: 0.9372

55200/60000 [==========================>...] - ETA: 0s - loss: 0.2094 - acc: 0.9375

55900/60000 [==========================>...] - ETA: 0s - loss: 0.2088 - acc: 0.9378

56600/60000 [===========================>..] - ETA: 0s - loss: 0.2082 - acc: 0.9378

57300/60000 [===========================>..] - ETA: 0s - loss: 0.2072 - acc: 0.9382

58000/60000 [============================>.] - ETA: 0s - loss: 0.2063 - acc: 0.9384

58700/60000 [============================>.] - ETA: 0s - loss: 0.2053 - acc: 0.9387

59300/60000 [============================>.] - ETA: 0s - loss: 0.2043 - acc: 0.9389

59900/60000 [============================>.] - ETA: 0s - loss: 0.2033 - acc: 0.9393

60000/60000 [==============================] - 5s 82us/step - loss: 0.2030 - acc: 0.9394

So what exactly is the secret hidden in the convolution layer's parameters?

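Conv2D(16, kernel_size=(3,3), strides=(1, 1), padding='same', activation='tanh',name='conv',input_shape=(28, 28, 1))

I think the biggest questions here are the first and second arguments: the number of filters and the size of the convolution kernel. Plenty of experts have already dissected the theory behind these two parameters, for example: https://blog.csdn.net/jyy555555/article/details/80515562

I think that author analyzes it in great detail, so this tutorial, which hasn't even found where the door is yet, let alone walked through it, will simply look at how these two parameters relate to each other with a single convolution layer:

Code: https://github.com/timcanby/Kares__StudyNotes/blob/master/20200101OneConvtest.py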
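Before looking at the results, it helps to see how the two arguments change the size of the model. The sketch below is plain arithmetic, not taken from the linked script, and it assumes the same setup as the model above: padding='same' with stride 1 (so the 28x28 size is kept), one input channel, and a 10-way Dense layer after Flatten. The function name is my own.

```python
def one_conv_model_params(filters, kernel, height=28, width=28, channels=1, classes=10):
    """Parameter counts for Conv2D(padding='same', strides=1) -> Flatten -> Dense."""
    conv = kernel * kernel * channels * filters + filters  # weights + one bias per filter
    flat = height * width * filters                        # 'same' keeps height and width
    dense = flat * classes + classes                       # weights + one bias per class
    return conv, flat, dense

for kernel in (2, 3, 4, 5):
    conv, flat, dense = one_conv_model_params(filters=16, kernel=kernel)
    print(kernel, conv, flat, dense, conv + dense)
```

For 16 filters of size 3*3 this gives 160 convolution parameters and 125,450 Dense parameters. Under these assumptions the kernel size only changes the small convolution term, while the filter count scales the Flatten output and therefore the much larger Dense layer.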

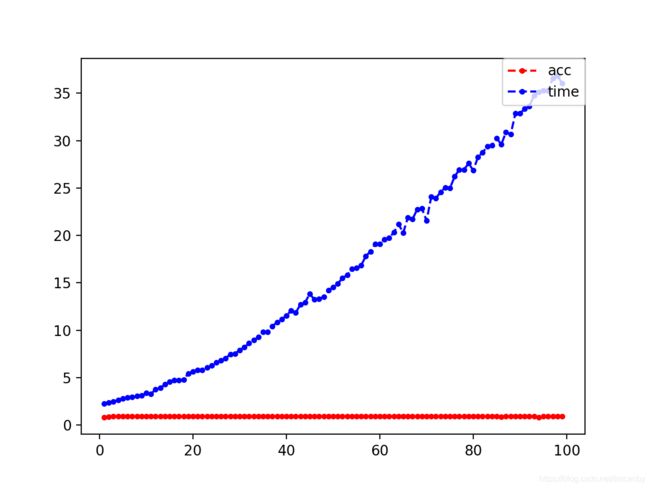

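The code adds a measurement of computation time (the commented-out part at the end lets you look at each layer's output; try it yourself if you're interested). Below are the computation time and accuracy for 2*2, 3*3, 4*4 and 5*5 kernels with 1 to 100 filters, along with the filter count at which accuracy peaks: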

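| Kernel size | 2*2 | 3*3 | 4*4 | 5*5 |
| --- | --- | --- | --- | --- |
| maxfilter | 21 | 16 | 14 | 9 |
| accMax | 0.9319500026355187 | 0.9414833366622527 | 0.9419666689758499 | 0.9414000028371811 |

So with a single layer, a smaller kernel needs somewhat more filters to reach its best result, though I think it depends on your own data and each setting has its own character. With kernel=5 the amount of computation goes up, but good accuracy is already reached with a small number of output filters.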
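For anyone who wants to rerun this kind of comparison, here is one way the sweep could be organized. It is only a sketch and not the linked script: `train_and_score` is a hypothetical callback that would build the one-conv model for a given kernel size and filter count, train it, and return its accuracy. The stand-in scorer below uses made-up numbers so the example runs without Keras.

```python
import time

def sweep(train_and_score, kernels=(2, 3, 4, 5), filter_counts=range(1, 101)):
    """For each kernel size, try every filter count and keep the most accurate one."""
    best = {}
    for kernel in kernels:
        for filters in filter_counts:
            start = time.perf_counter()
            acc = train_and_score(kernel, filters)  # hypothetical: build, fit, return accuracy
            elapsed = time.perf_counter() - start
            if kernel not in best or acc > best[kernel]["accMax"]:
                best[kernel] = {"maxfilter": filters, "accMax": acc, "seconds": elapsed}
    return best

# Stand-in scorer with invented values; it is NOT the real experiment.
def fake_score(kernel, filters):
    return 1.0 - abs(filters - (24 - 3 * kernel)) / 100.0

result = sweep(fake_score)
for kernel, info in result.items():
    print(kernel, info["maxfilter"], round(info["accMax"], 4))
```

The keys `maxfilter` and `accMax` reuse the names from the results above; swapping `fake_score` for a real training function is all that is needed to run an actual sweep.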

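OK, any further analysis is left for you to play with; that's it for today. (Please credit the source when reposting. Those who leave a little star on GitHub are fine young people.)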