Implementing the ASIFT algorithm: a guide to avoiding configuration pitfalls

Conventional SIFT only matches images well when the angle between the two cameras that took them is small (at most about 15°). When the angle between the cameras is large, SIFT matching breaks down. To solve this, I switched to an improved matching algorithm, ASIFT. For its advantages, see my write-up 基于ASIFT算法特征匹配的研究 (A study of feature matching based on the ASIFT algorithm) on CSDN Download: https://download.csdn.net/download/m0_47489229/87108632

Errors during configuration:

At first I followed these two blog posts to set things up:

ASIFT算法在WIN7 64位系统下利用VS2012生成 (Building ASIFT with VS2012 on 64-bit Windows 7), zz420521's CSDN blog: https://blog.csdn.net/zz420521/article/details/63686163

搭建可随意更改路径的VS工程-以ASIFT算法为例 (Building a VS project whose paths can be changed freely, using ASIFT as an example), zz420521's CSDN blog: https://blog.csdn.net/zz420521/article/details/65437441

I used VS2017, on which OpenCV 3.6.4 + contrib 3.6.4 was already set up. After following the steps above, errors appeared. What I needed was to call the algorithm from my own code, and building the solution produced the following error:

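The problem seems to be that the Eigen and Boost libraries have to be configured first. I also found that this approach just drives a prebuilt .exe, which is not what I needed. Searching on Baidu turned up nothing that solved it.

Later, while reading up on the topic, I found that most source-code implementations are in Python. I did, however, come across a C++ implementation of this functionality. As its help text says, it is a C++ version of OpenCV's samples/python/asift.py: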

```cpp
#include <opencv2/core.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/features2d.hpp>
#include <opencv2/calib3d.hpp>
#include <iostream>
#include <iomanip>

using namespace std;
using namespace cv;

static void help(char** argv)
{
    cout
        << "This is a sample usage of AffineFeature detector/extractor.\n"
        << "And this is a C++ version of samples/python/asift.py\n"
        << "Usage: " << argv[0] << "\n"
        << "     [ --feature=<sift|orb|brisk> ]          # Feature to use.\n"
        << "     [ --flann ]                             # use Flann-based matcher instead of bruteforce.\n"
        << "     [ --maxlines=<number (default 50)> ]    # The maximum number of lines in visualizing the matching result.\n"
        << "     [ --image1=<path (default aero1.jpg)> ]\n"
        << "     [ --image2=<path (default aero3.jpg)> ] # Path to images to compare."
        << endl;
}

static double timer()
{
    return getTickCount() / getTickFrequency();
}

int main(int argc, char** argv)
{
    vector<String> fileName;
    cv::CommandLineParser parser(argc, argv,
        "{help h ||}"
        "{feature|brisk|}"
        "{flann||}"
        "{maxlines|50|}"
        "{image1|aero1.jpg|}{image2|aero3.jpg|}");
    if (parser.has("help"))
    {
        help(argv);
        return 0;
    }
    string feature = parser.get<string>("feature");
    bool useFlann = parser.has("flann");
    int maxlines = parser.get<int>("maxlines");
    // samples::findFile throws if the file is found neither locally nor in OpenCV's sample data paths
    fileName.push_back(samples::findFile(parser.get<string>("image1")));
    fileName.push_back(samples::findFile(parser.get<string>("image2")));
    if (!parser.check())
    {
        parser.printErrors();
        cout << "See --help (or missing '=' between argument name and value?)" << endl;
        return 1;
    }

    Mat img1 = imread(fileName[0], IMREAD_GRAYSCALE);
    Mat img2 = imread(fileName[1], IMREAD_GRAYSCALE);
    if (img1.empty())
    {
        cerr << "Image " << fileName[0] << " is empty or cannot be found" << endl;
        return 1;
    }
    if (img2.empty())
    {
        cerr << "Image " << fileName[1] << " is empty or cannot be found" << endl;
        return 1;
    }

    // Base detector/descriptor wrapped by AffineFeature, plus a matcher suited to its descriptors
    Ptr<Feature2D> backend;
    Ptr<DescriptorMatcher> matcher;

    if (feature == "sift")
    {
        backend = SIFT::create();
        if (useFlann)
            matcher = DescriptorMatcher::create("FlannBased");
        else
            matcher = DescriptorMatcher::create("BruteForce");
    }
    else if (feature == "orb")
    {
        backend = ORB::create();
        // Binary descriptors: LSH index for FLANN, Hamming distance for brute force
        if (useFlann)
            matcher = makePtr<FlannBasedMatcher>(makePtr<flann::LshIndexParams>(6, 12, 1));
        else
            matcher = DescriptorMatcher::create("BruteForce-Hamming");
    }
    else if (feature == "brisk")
    {
        backend = BRISK::create();
        if (useFlann)
            matcher = makePtr<FlannBasedMatcher>(makePtr<flann::LshIndexParams>(6, 12, 1));
        else
            matcher = DescriptorMatcher::create("BruteForce-Hamming");
    }
    else
    {
        cerr << feature << " is not supported. See --help" << endl;
        return 1;
    }

    cout << "extracting with " << feature << "..." << endl;
    // AffineFeature simulates affine views of each image and runs the backend on every view
    Ptr<AffineFeature> ext = AffineFeature::create(backend);
    vector<KeyPoint> kp1, kp2;
    Mat desc1, desc2;

    ext->detectAndCompute(img1, Mat(), kp1, desc1);
    ext->detectAndCompute(img2, Mat(), kp2, desc2);
    cout << "img1 - " << kp1.size() << " features, "
         << "img2 - " << kp2.size() << " features"
         << endl;

    cout << "matching with " << (useFlann ? "flann" : "bruteforce") << "..." << endl;
    double start = timer();
    // match and draw
    vector< vector<DMatch> > rawMatches;
    vector<Point2f> p1, p2;
    vector<float> distances;
    matcher->knnMatch(desc1, desc2, rawMatches, 2);
    // filter_matches: Lowe's ratio test on the two nearest neighbours
    for (size_t i = 0; i < rawMatches.size(); i++)
    {
        const vector<DMatch>& m = rawMatches[i];
        if (m.size() == 2 && m[0].distance < m[1].distance * 0.75)
        {
            p1.push_back(kp1[m[0].queryIdx].pt);
            p2.push_back(kp2[m[0].trainIdx].pt);
            distances.push_back(m[0].distance);
        }
    }
    // A homography needs at least 4 point pairs
    if (p1.size() < 4)
    {
        cerr << "Only " << p1.size() << " good matches, not enough for findHomography" << endl;
        return 1;
    }
    vector<uchar> status;
    vector< pair<Point2f, Point2f> > pointPairs;
    Mat H = findHomography(p1, p2, status, RANSAC);
    if (H.empty())
    {
        cerr << "findHomography could not estimate a homography" << endl;
        return 1;
    }
    int inliers = 0;
    for (size_t i = 0; i < status.size(); i++)
    {
        if (status[i])
        {
            pointPairs.push_back(make_pair(p1[i], p2[i]));
            distances[inliers] = distances[i]; // compact inlier distances to the front
            inliers++;
        }
    }
    distances.resize(inliers);

    cout << "execution time: " << fixed << setprecision(2) << (timer() - start) * 1000 << " ms" << endl;
    cout << inliers << " / " << status.size() << " inliers/matched" << endl;

    cout << "visualizing..." << endl;
    vector<int> indices(inliers);
    cv::sortIdx(distances, indices, SORT_EVERY_ROW + SORT_ASCENDING);

    // explore_match: place both images side by side
    int h1 = img1.size().height;
    int w1 = img1.size().width;
    int h2 = img2.size().height;
    int w2 = img2.size().width;
    Mat vis = Mat::zeros(max(h1, h2), w1 + w2, CV_8U);
    img1.copyTo(Mat(vis, Rect(0, 0, w1, h1)));
    img2.copyTo(Mat(vis, Rect(w1, 0, w2, h2)));
    cvtColor(vis, vis, COLOR_GRAY2BGR);

    // Outline of image 1 projected into image 2 by H
    vector<Point2f> corners(4);
    corners[0] = Point2f(0, 0);
    corners[1] = Point2f((float)w1, 0);
    corners[2] = Point2f((float)w1, (float)h1);
    corners[3] = Point2f(0, (float)h1);
    vector<Point2i> icorners;
    perspectiveTransform(corners, corners, H);
    transform(corners, corners, Matx23f(1, 0, (float)w1, 0, 1, 0));
    Mat(corners).convertTo(icorners, CV_32S);
    polylines(vis, icorners, true, Scalar(255, 255, 255));

    // Draw the best (lowest-distance) inlier matches
    for (int i = 0; i < min(inliers, maxlines); i++)
    {
        int idx = indices[i];
        const Point2f& pi1 = pointPairs[idx].first;
        const Point2f& pi2 = pointPairs[idx].second;
        circle(vis, pi1, 2, Scalar(0, 255, 0), -1);
        circle(vis, pi2 + Point2f((float)w1, 0), 2, Scalar(0, 255, 0), -1);
        line(vis, pi1, pi2 + Point2f((float)w1, 0), Scalar(0, 255, 0));
    }
    if (inliers > maxlines)
        cout << "only " << maxlines << " inliers are visualized" << endl;
    imshow("affine find_obj", vis);
    waitKey();
    cout << "done" << endl;
    return 0;
}
```

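Same story as before. This time the errors came from my library being OpenCV 3.6.4, while this code needs OpenCV 4.5.0 or later together with contrib, so everything had to be set up again. (As far as I can tell, AffineFeature and SIFT both live in the main features2d module in these versions, so contrib may not be strictly needed for this particular sample.)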
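A small guard can catch this kind of version mismatch early. The sketch below parses a "major.minor[.patch]" string and compares it against a minimum. The helper name versionAtLeast is hypothetical. In a real OpenCV project you would pass it the CV_VERSION string macro from opencv2/core/version.hpp, or the result of cv::getVersionString(), with the 4.5 minimum stated above.

```cpp
#include <sstream>
#include <string>

// Hypothetical helper: true if "major.minor[...]" is at least requiredMajor.requiredMinor.
// Anything after the minor number (patch level, "-dev", ...) is ignored.
bool versionAtLeast(const std::string& version, int requiredMajor, int requiredMinor)
{
    std::istringstream in(version);
    int major = 0, minor = 0;
    char dot = 0;
    if (!(in >> major >> dot >> minor) || dot != '.')
        return false; // not a version string we understand
    return major > requiredMajor || (major == requiredMajor && minor >= requiredMinor);
}
```

For example, versionAtLeast(CV_VERSION, 4, 5) is false for a 3.x build. For a compile-time check, OpenCV 4.x also defines CV_VERSION_MAJOR and CV_VERSION_MINOR, which can be tested with #if and #error.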

When I configured VS2015 + CMake 3.24.0 + OpenCV 4.5.3 + contrib 4.5.3, I ran into the same old problem: the solution failed to build, as shown below:

Even with access to the international internet, it still failed. CMake has to download a great many extra files, and they had to be fetched one by one, which was quite a pain. So I kept looking and found this post, which also deals with download warnings such as "CMake Warning at cmake/OpenCVDownload.cmake:202": 史上最全最详细讲解 VS2015+OpenCV4.5.1+OpenCV-4.5.1_Contribute+CUDA (The most complete and detailed guide to VS2015 + OpenCV 4.5.1 + OpenCV 4.5.1 contrib + CUDA), 元宇宙MetaAI's CSDN blog: https://blog.csdn.net/CSS360/article/details/117871722

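My computer already had VS2017 set up for other environments, so I had to use VS2015 for the new one. Following the steps in that post, I downloaded the .cache files from the author's Baidu Netdisk and added them, which was supposed to be all it took.

Worked? Not a chance. Still broken.

All of the configuration attempts above had problems; they are the pitfalls I fell into.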

First configuration

It turned out that I also had to install something called CUDA first. I didn't know exactly what it was at the time and had to look it up online, so on to installing that.

win10 vs2015 cmake3.18.0 opencv4.5.1 opencv_contrib4.5.1 编译攻略 (Build guide), dragon_perfect's CSDN blog: https://blog.csdn.net/zhulong1984/article/details/112728038. It lists four things to download (OpenCV, OpenCV_contrib, CMake and Visual Studio) and stresses that OpenCV and OpenCV_contrib must be the same version and be extracted into the same folder.

https://www.baidu.com/link?url=STTSCR6UH-Zr7kAZXC8sNy3h9MSenc5VtvTHiSWMeQ30_MXa0-zrnioqai1bvwuNYjH2s4jpYwHufK5vrhn-LF0xMpJ8zL9pltVB_gINiJe&wd=&eqid=a6ef9f51000221a300000006637e2a66

After reading these two posts, I noticed that neither covers any CUDA setup, which meant I still had no CUDA installed. So it was back to CSDN, since GitHub was over my head. The following post describes how to set up CUDA.

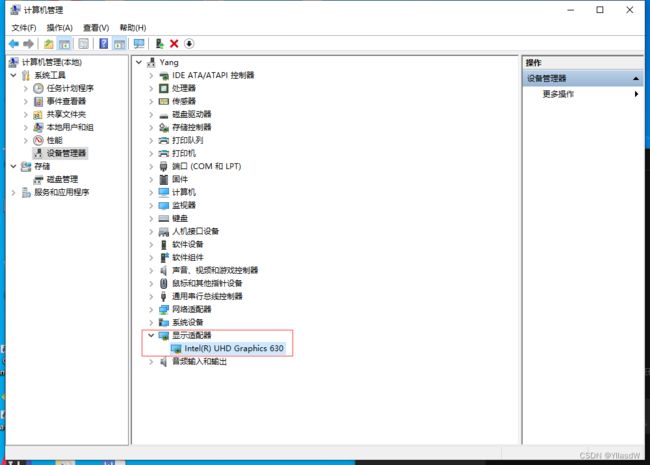

win10+cuda11.0+vs2019安装教程 (Windows 10 + CUDA 11.0 + VS2019 installation tutorial), 离墨猫's CSDN blog: https://blog.csdn.net/syz201558503103/article/details/114867877. Its first step is to check which CUDA version the graphics card supports, for example by running nvidia-smi in cmd.

However, when I checked the lab computers, they only have integrated graphics and no discrete GPU. Without an NVIDIA card, CUDA is not an option.

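At this point I was stuck again. Could I just do without CUDA acceleration? I started configuring VS2015 all over again, but building the solution still produced lots of errors.

Out of options, I tried VS2017 instead, and suddenly it worked: both building the solution and the INSTALL step finished without errors. Pure black magic. I then ran the code with a few changes to the original: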

```cpp
#include <opencv2/core.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/features2d.hpp>
#include <opencv2/calib3d.hpp>
#include <iostream>
#include <iomanip>

using namespace std;
using namespace cv;

static void help(char** argv)
{
    cout
        << "This is a sample usage of AffineFeature detector/extractor.\n"
        << "And this is a C++ version of samples/python/asift.py\n"
        << "Usage: " << argv[0] << "\n"
        << "     [ --feature=<sift|orb|brisk> ]       # Feature to use.\n"
        << "     [ --flann ]                          # use Flann-based matcher instead of bruteforce.\n"
        << "     [ --maxlines=<number (default 50)> ] # The maximum number of lines in visualizing the matching result."
        << endl;
}

static double timer()
{
    return getTickCount() / getTickFrequency();
}

int main(int argc, char** argv)
{
    cv::CommandLineParser parser(argc, argv,
        "{help h ||}"
        "{feature|brisk|}"
        "{flann||}"
        "{maxlines|50|}");
    if (parser.has("help"))
    {
        help(argv);
        return 0;
    }
    string feature = parser.get<string>("feature");
    bool useFlann = parser.has("flann");
    int maxlines = parser.get<int>("maxlines");
    if (!parser.check())
    {
        parser.printErrors();
        cout << "See --help (or missing '=' between argument name and value?)" << endl;
        return 1;
    }

    // The two images are hard-coded and read from the working directory.
    // samples::findFile() is not used here: it throws when the default aero1.jpg/aero3.jpg are missing.
    Mat img1 = imread("茉莉清茶1.jpg", IMREAD_GRAYSCALE);
    Mat img2 = imread("茉莉清茶2.jpg", IMREAD_GRAYSCALE);
    if (img1.empty())
    {
        cerr << "Image 1 is empty or cannot be found" << endl;
        return 1;
    }
    if (img2.empty())
    {
        cerr << "Image 2 is empty or cannot be found" << endl;
        return 1;
    }

    // Base detector/descriptor wrapped by AffineFeature, plus a matcher suited to its descriptors
    Ptr<Feature2D> backend;
    Ptr<DescriptorMatcher> matcher;

    if (feature == "sift")
    {
        backend = SIFT::create();
        if (useFlann)
            matcher = DescriptorMatcher::create("FlannBased");
        else
            matcher = DescriptorMatcher::create("BruteForce");
    }
    else if (feature == "orb")
    {
        backend = ORB::create();
        // Binary descriptors: LSH index for FLANN, Hamming distance for brute force
        if (useFlann)
            matcher = makePtr<FlannBasedMatcher>(makePtr<flann::LshIndexParams>(6, 12, 1));
        else
            matcher = DescriptorMatcher::create("BruteForce-Hamming");
    }
    else if (feature == "brisk")
    {
        backend = BRISK::create();
        if (useFlann)
            matcher = makePtr<FlannBasedMatcher>(makePtr<flann::LshIndexParams>(6, 12, 1));
        else
            matcher = DescriptorMatcher::create("BruteForce-Hamming");
    }
    else
    {
        cerr << feature << " is not supported. See --help" << endl;
        return 1;
    }

    cout << "extracting with " << feature << "..." << endl;
    // AffineFeature simulates affine views of each image and runs the backend on every view
    Ptr<AffineFeature> ext = AffineFeature::create(backend);
    vector<KeyPoint> kp1, kp2;
    Mat desc1, desc2;

    ext->detectAndCompute(img1, Mat(), kp1, desc1);
    ext->detectAndCompute(img2, Mat(), kp2, desc2);
    cout << "img1 - " << kp1.size() << " features, "
         << "img2 - " << kp2.size() << " features"
         << endl;

    cout << "matching with " << (useFlann ? "flann" : "bruteforce") << "..." << endl;
    double start = timer();
    // match and draw
    vector< vector<DMatch> > rawMatches;
    vector<Point2f> p1, p2;
    vector<float> distances;
    matcher->knnMatch(desc1, desc2, rawMatches, 2);
    // filter_matches: Lowe's ratio test on the two nearest neighbours
    for (size_t i = 0; i < rawMatches.size(); i++)
    {
        const vector<DMatch>& m = rawMatches[i];
        if (m.size() == 2 && m[0].distance < m[1].distance * 0.75)
        {
            p1.push_back(kp1[m[0].queryIdx].pt);
            p2.push_back(kp2[m[0].trainIdx].pt);
            distances.push_back(m[0].distance);
        }
    }
    // A homography needs at least 4 point pairs
    if (p1.size() < 4)
    {
        cerr << "Only " << p1.size() << " good matches, not enough for findHomography" << endl;
        return 1;
    }
    vector<uchar> status;
    vector< pair<Point2f, Point2f> > pointPairs;
    Mat H = findHomography(p1, p2, status, RANSAC);
    if (H.empty())
    {
        cerr << "findHomography could not estimate a homography" << endl;
        return 1;
    }
    int inliers = 0;
    for (size_t i = 0; i < status.size(); i++)
    {
        if (status[i])
        {
            pointPairs.push_back(make_pair(p1[i], p2[i]));
            distances[inliers] = distances[i]; // compact inlier distances to the front
            inliers++;
        }
    }
    distances.resize(inliers);

    cout << "execution time: " << fixed << setprecision(2) << (timer() - start) * 1000 << " ms" << endl;
    cout << inliers << " / " << status.size() << " inliers/matched" << endl;

    cout << "visualizing..." << endl;
    vector<int> indices(inliers);
    cv::sortIdx(distances, indices, SORT_EVERY_ROW + SORT_ASCENDING);

    // explore_match: place both images side by side
    int h1 = img1.size().height;
    int w1 = img1.size().width;
    int h2 = img2.size().height;
    int w2 = img2.size().width;
    Mat vis = Mat::zeros(max(h1, h2), w1 + w2, CV_8U);
    img1.copyTo(Mat(vis, Rect(0, 0, w1, h1)));
    img2.copyTo(Mat(vis, Rect(w1, 0, w2, h2)));
    cvtColor(vis, vis, COLOR_GRAY2BGR);

    // Outline of image 1 projected into image 2 by H
    vector<Point2f> corners(4);
    corners[0] = Point2f(0, 0);
    corners[1] = Point2f((float)w1, 0);
    corners[2] = Point2f((float)w1, (float)h1);
    corners[3] = Point2f(0, (float)h1);
    vector<Point2i> icorners;
    perspectiveTransform(corners, corners, H);
    transform(corners, corners, Matx23f(1, 0, (float)w1, 0, 1, 0));
    Mat(corners).convertTo(icorners, CV_32S);
    polylines(vis, icorners, true, Scalar(255, 255, 255));

    // Draw the best (lowest-distance) inlier matches
    for (int i = 0; i < min(inliers, maxlines); i++)
    {
        int idx = indices[i];
        const Point2f& pi1 = pointPairs[idx].first;
        const Point2f& pi2 = pointPairs[idx].second;
        circle(vis, pi1, 2, Scalar(0, 255, 0), -1);
        circle(vis, pi2 + Point2f((float)w1, 0), 2, Scalar(0, 255, 0), -1);
        line(vis, pi1, pi2 + Point2f((float)w1, 0), Scalar(0, 255, 0));
    }
    if (inliers > maxlines)
        cout << "only " << maxlines << " inliers are visualized" << endl;
    imshow("affine find_obj", vis);
    waitKey();
    cout << "done" << endl;
    return 0;
}
```
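The filter_matches loop and the sortIdx/maxlines step in the code above come down to two small operations. First, Lowe's ratio test is applied to the two nearest neighbours. Second, the lowest-distance inliers are picked for drawing. The sketch below restates both without OpenCV so the logic can be checked in isolation. Match is a hypothetical stand-in for cv::DMatch with only queryIdx, trainIdx and distance, and 0.75 is the ratio the sample uses.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::DMatch with only the fields used here
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Lowe's ratio test: keep the nearest neighbour only if it is clearly closer than the second one
std::vector<Match> ratioTest(const std::vector<std::vector<Match>>& knn, float ratio = 0.75f)
{
    std::vector<Match> good;
    for (const std::vector<Match>& m : knn)
        if (m.size() == 2 && m[0].distance < m[1].distance * ratio)
            good.push_back(m[0]);
    return good;
}

// Positions (in `good`) of at most maxLines inliers with the smallest distance, best first.
// status[i] != 0 marks good[i] as a RANSAC inlier, like the mask filled by findHomography.
std::vector<std::size_t> bestInliers(const std::vector<Match>& good,
                                     const std::vector<unsigned char>& status,
                                     std::size_t maxLines)
{
    std::vector<std::size_t> idx;
    for (std::size_t i = 0; i < good.size() && i < status.size(); ++i)
        if (status[i])
            idx.push_back(i);
    std::stable_sort(idx.begin(), idx.end(), [&good](std::size_t a, std::size_t b) {
        return good[a].distance < good[b].distance;
    });
    if (idx.size() > maxLines)
        idx.resize(maxLines);
    return idx;
}
```

Lowering the ratio makes the test stricter. Fewer matches survive, but a larger share of them tend to be correct.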

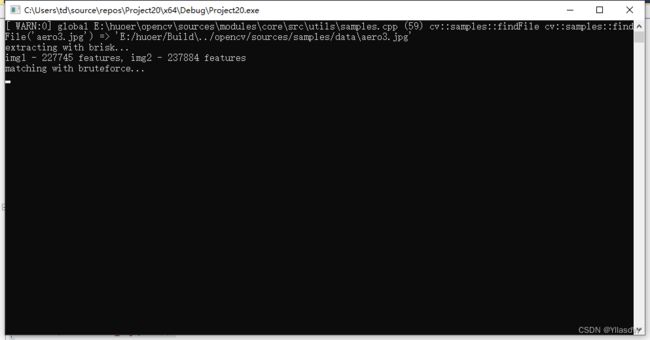

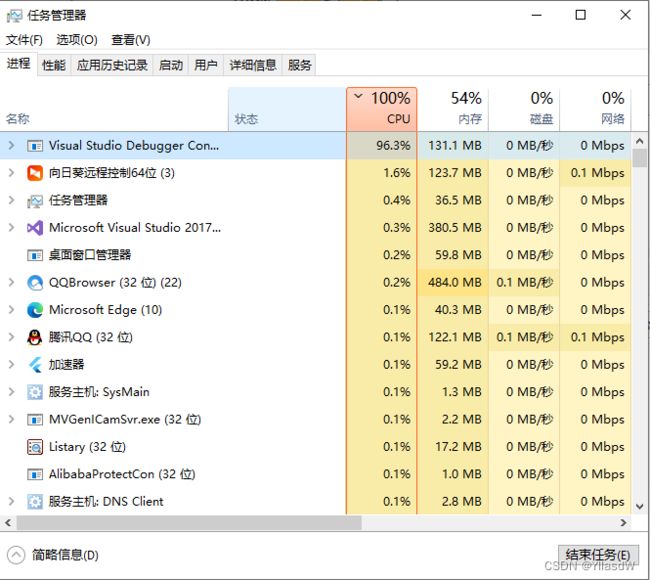

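I suspect that because no CUDA acceleration was configured, the program seemed to hang.

ASIFT is slow to begin with, so all I could do was wait. My CPU was running flat out anyway.

It takes a very long time before any result appears. Sigh, keep waiting. Eventually I gave up: after half an hour there was still no result, so I had to reduce the image resolution. The output is shown below: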

The setup used here (VS2017 + CMake + OpenCV 4.5.1 + contrib 4.5.1, without CUDA) is shared at the Baidu Netdisk link below.

Link: https://pan.baidu.com/s/1hPGGiupQW-1khpiCe4QHGw?pwd=1111

Extraction code: 1111

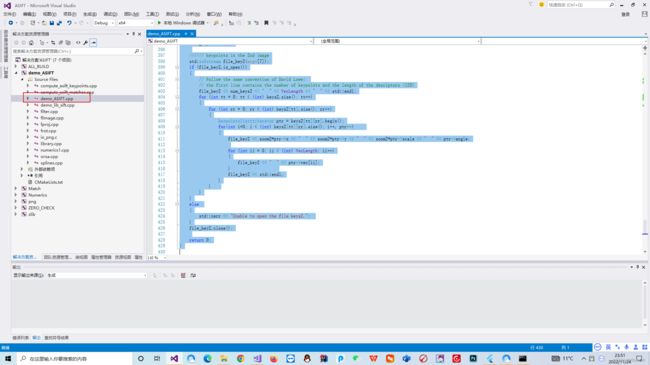
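Reducing the resolution is what made the run finish in reasonable time. demo_ASIFT, used in the second configuration, does the same internally: it scales each image by zoom = sqrt(w*h / (IM_X*IM_Y)), so the area becomes about 800 x 600 while the aspect ratio is kept. It later multiplies match coordinates by zoom to map them back. The sketch below computes that target size. fitToArea and ScaledSize are hypothetical names. Unlike the demo, which also enlarges images smaller than the budget, this sketch never upscales.

```cpp
#include <cmath>

// Hypothetical result type: target size plus the zoom factor that maps coordinates back
struct ScaledSize {
    int width;
    int height;
    double zoom; // original coordinate = scaled coordinate * zoom
};

// Scale (width x height) so its area is about maxArea pixels, keeping the aspect ratio.
// Same rule as demo_ASIFT: zoom = sqrt(w*h / (IM_X*IM_Y)); images already within the
// budget are returned unchanged (zoom = 1) instead of being enlarged.
ScaledSize fitToArea(int width, int height, double maxArea)
{
    double zoom = std::sqrt(static_cast<double>(width) * height / maxArea);
    if (zoom <= 1.0)
        return {width, height, 1.0};
    return {static_cast<int>(width / zoom), static_cast<int>(height / zoom), zoom};
}
```

With OpenCV, the result could be applied as cv::resize(img, small, cv::Size(s.width, s.height), 0, 0, cv::INTER_AREA). Keypoint coordinates found on small are then multiplied by s.zoom to get back to the original image.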

Second configuration

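This is the configuration from the VS2012 blog post mentioned earlier. For some reason this approach kept failing with my VS2017, so I used VS2015 for it.

I modified the demo's code. Why? When the program is run from cmd, it reads its inputs from command-line arguments, and the first entry of argv is the .exe itself. So I left that one alone and supplied the remaining values myself. The input images also have to be resized to a fixed size because of the resolution limit (by default the demo resizes them to IM_X x IM_Y, i.e. 800 x 600), which is easy to do. I changed the code as follows, so it can be used directly: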

```cpp
// Copyright (c) 2008-2011, Guoshen Yu
// Copyright (c) 2008-2011, Jean-Michel Morel
//
// WARNING:
// This file implements an algorithm possibly linked to the patent
//
// Jean-Michel Morel and Guoshen Yu, Method and device for the invariant
// affine recognition recognition of shapes (WO/2009/150361), patent pending.
//
// This file is made available for the exclusive aim of serving as
// scientific tool to verify of the soundness and
// completeness of the algorithm description. Compilation,
// execution and redistribution of this file may violate exclusive
// patents rights in certain countries.
// The situation being different for every country and changing
// over time, it is your responsibility to determine which patent
// rights restrictions apply to you before you compile, use,
// modify, or redistribute this file. A patent lawyer is qualified
// to make this determination.
// If and only if they don't conflict with any patent terms, you
// can benefit from the following license terms attached to this
// file.
//
// This program is provided for scientific and educational only:
// you can use and/or modify it for these purposes, but you are
// not allowed to redistribute this work or derivative works in
// source or executable form. A license must be obtained from the
// patent right holders for any other use.
//
//
//*----------------------------- demo_ASIFT --------------------------------*/
// Detect corresponding points in two images with the ASIFT method.
// Please report bugs and/or send comments to Guoshen Yu.
//
// Reference: J.M. Morel and G.Yu, ASIFT: A New Framework for Fully Affine Invariant Image
//            Comparison, SIAM Journal on Imaging Sciences, vol. 2, issue 2, pp. 438-469, 2009.
// Reference: ASIFT online demo (You can try ASIFT with your own images online.)
//            http://www.ipol.im/pub/algo/my_affine_sift/
/*---------------------------------------------------------------------------*/

#include <iostream>
#include <fstream>
#include <vector>
#include <cmath>
#include <cstdlib>
#include <ctime>
using namespace std;

#ifdef _OPENMP
#include <omp.h>
#endif

#include "demo_lib_sift.h"
#include "io_png/io_png.h"

#include "library.h"
#include "frot.h"
#include "fproj.h"
#include "compute_asift_keypoints.h"
#include "compute_asift_matches.h"

#define IM_X 800
#define IM_Y 600

int main()
{
    // The command-line arguments are hard-coded in a local array instead of being written
    // into the real argv: without arguments argv has a single entry, so argv[1..7] would be
    // out of bounds. Order: imgIn1.png imgIn2.png imgOutVert.png imgOutHori.png
    //                       matchings.txt keys1.txt keys2.txt
    const char* argv[] = {
        "demo_ASIFT",                                                        // argv[0]: program name (unused)
        "C:\\Users\\td\\Desktop\\xianmu\\new_stereo_match\\book3.png",       // input image 1 (PNG)
        "C:\\Users\\td\\Desktop\\xianmu\\new_stereo_match\\book4.png",       // input image 2 (PNG)
        "C:\\Users\\td\\Desktop\\xianmu\\new_stereo_match\\bookshuchu1.png", // output: images stacked vertically
        "C:\\Users\\td\\Desktop\\xianmu\\new_stereo_match\\bookshuchu2.png", // output: images side by side
        "matchings.txt", // argv[5..7] were left unset before; these file names are assumptions
        "keys1.txt",
        "keys2.txt"
    };
    // Another test pair: C:\\Users\\td\\Desktop\\xianmu\\new_stereo_match\\adam1.png and adam2.png
    const int argc = 8; // no 9th argument, so the images are resized to IM_X x IM_Y

    /* Original argument check, disabled because the arguments are hard-coded above:
    if ((argc != 8) && (argc != 9)) {
        std::cerr << " ******************************************************************************* " << std::endl
                  << " ***************************  ASIFT image matching  **************************** " << std::endl
                  << " ******************************************************************************* " << std::endl
                  << "Usage: " << argv[0] << " imgIn1.png imgIn2.png imgOutVert.png imgOutHori.png " << std::endl
                  << "           matchings.txt keys1.txt keys2.txt [Resize option: 0/1] " << std::endl
                  << "- imgIn1.png, imgIn2.png: input images (in PNG format). " << std::endl
                  << "- imgOutVert.png, imgOutHori.png: output images (vertical/horizontal concatenated, " << std::endl
                  << "  in PNG format.) The detected matchings are connected by white lines." << std::endl
                  << "- matchings.txt: coordinates of matched points (col1, row1, col2, row2). " << std::endl
                  << "- keys1.txt keys2.txt: ASIFT keypoints of the two images." << std::endl
                  << "- [optional 0/1]. 1: input images resize to 800x600 (default). 0: no resize. " << std::endl
                  << " ******************************************************************************* " << std::endl
                  << " *********************  Jean-Michel Morel, Guoshen Yu, 2010 ******************** " << std::endl
                  << " ******************************************************************************* " << std::endl;
        return 1;
    }
    */

    // ---- Input
    // Read image1
    size_t w1, h1;
    size_t w2, h2;
    float* iarr1;
    if (NULL == (iarr1 = read_png_f32_gray(argv[1], &w1, &h1))) {
        std::cerr << "Unable to load image file " << argv[1] << std::endl;
        return 1;
    }
    std::vector<float> ipixels1(iarr1, iarr1 + w1 * h1);
    free(iarr1); /*memcheck*/

    // Read image2
    float* iarr2;
    if (NULL == (iarr2 = read_png_f32_gray(argv[2], &w2, &h2))) {
        std::cerr << "Unable to load image file " << argv[2] << std::endl;
        return 1;
    }
    std::vector<float> ipixels2(iarr2, iarr2 + w2 * h2);
    free(iarr2); /*memcheck*/

    // ---- Resize the images to area wS*hS while keeping the aspect ratio
    // Resize if the resize flag is not set or if the flag is set unequal to 0
    float wS = IM_X;
    float hS = IM_Y;

    float zoom1 = 0, zoom2 = 0;
    int wS1 = 0, hS1 = 0, wS2 = 0, hS2 = 0;
    vector<float> ipixels1_zoom, ipixels2_zoom;

    int flag_resize = 1;
    if (argc == 9)
    {
        flag_resize = atoi(argv[8]);
    }

    if ((argc == 8) || (flag_resize != 0))
    {
        cout << "WARNING: The input images are resized to " << wS << "x" << hS << " for ASIFT. " << endl
             << "         But the results will be normalized to the original image size." << endl << endl;

        float InitSigma_aa = 1.6f;

        float fproj_p, fproj_bg;
        char fproj_i;
        float *fproj_x4, *fproj_y4;
        int fproj_o;

        fproj_o = 3;
        fproj_p = 0;
        fproj_i = 0;
        fproj_bg = 0;
        fproj_x4 = 0;
        fproj_y4 = 0;

        float areaS = wS * hS;

        // Resize image 1
        float area1 = w1 * h1;
        zoom1 = sqrt(area1 / areaS);

        wS1 = (int) (w1 / zoom1);
        hS1 = (int) (h1 / zoom1);

        int fproj_sx = wS1;
        int fproj_sy = hS1;

        float fproj_x1 = 0;
        float fproj_y1 = 0;
        float fproj_x2 = wS1;
        float fproj_y2 = 0;
        float fproj_x3 = 0;
        float fproj_y3 = hS1;

        /* Anti-aliasing filtering before downsampling */
        if (zoom1 > 1)
        {
            float sigma_aa = InitSigma_aa * zoom1 / 2;
            GaussianBlur1D(ipixels1, w1, h1, sigma_aa, 1);
            GaussianBlur1D(ipixels1, w1, h1, sigma_aa, 0);
        }

        // resample image 1 to wS1 x hS1
        ipixels1_zoom.resize(wS1 * hS1);
        fproj(ipixels1, ipixels1_zoom, w1, h1, &fproj_sx, &fproj_sy, &fproj_bg, &fproj_o, &fproj_p,
              &fproj_i, fproj_x1, fproj_y1, fproj_x2, fproj_y2, fproj_x3, fproj_y3, fproj_x4, fproj_y4);

        // Resize image 2
        float area2 = w2 * h2;
        zoom2 = sqrt(area2 / areaS);

        wS2 = (int) (w2 / zoom2);
        hS2 = (int) (h2 / zoom2);

        fproj_sx = wS2;
        fproj_sy = hS2;

        fproj_x2 = wS2;
        fproj_y3 = hS2;

        /* Anti-aliasing filtering before downsampling */
        if (zoom2 > 1) // was "zoom1 > 1": image 2's filtering must depend on its own zoom factor
        {
            float sigma_aa = InitSigma_aa * zoom2 / 2;
            GaussianBlur1D(ipixels2, w2, h2, sigma_aa, 1);
            GaussianBlur1D(ipixels2, w2, h2, sigma_aa, 0);
        }

        // resample image 2 to wS2 x hS2
        ipixels2_zoom.resize(wS2 * hS2);
        fproj(ipixels2, ipixels2_zoom, w2, h2, &fproj_sx, &fproj_sy, &fproj_bg, &fproj_o, &fproj_p,
              &fproj_i, fproj_x1, fproj_y1, fproj_x2, fproj_y2, fproj_x3, fproj_y3, fproj_x4, fproj_y4);
    }
    else
    {
        ipixels1_zoom = ipixels1;
        wS1 = w1;
        hS1 = h1;
        zoom1 = 1;

        ipixels2_zoom = ipixels2;
        wS2 = w2;
        hS2 = h2;
        zoom2 = 1;
    }

    // ---- Compute ASIFT keypoints
    // number N of tilts to simulate t = 1, \sqrt{2}, (\sqrt{2})^2, ..., {\sqrt{2}}^(N-1)
    int num_of_tilts1 = 7;
    int num_of_tilts2 = 7;
    // int num_of_tilts1 = 1;
    // int num_of_tilts2 = 1;

    int verb = 0;
    // Define the SIFT parameters
    siftPar siftparameters;
    default_sift_parameters(siftparameters);

    vector< vector< keypointslist > > keys1;
    vector< vector< keypointslist > > keys2;

    int num_keys1 = 0, num_keys2 = 0;

    cout << "Computing keypoints on the two images..." << endl;
    time_t tstart, tend;
    tstart = time(0);

    num_keys1 = compute_asift_keypoints(ipixels1_zoom, wS1, hS1, num_of_tilts1, verb, keys1, siftparameters);
    num_keys2 = compute_asift_keypoints(ipixels2_zoom, wS2, hS2, num_of_tilts2, verb, keys2, siftparameters);

    tend = time(0);
    cout << "Keypoints computation accomplished in " << difftime(tend, tstart) << " seconds." << endl;

    // ---- Match ASIFT keypoints
    int num_matchings;
    matchingslist matchings;
    cout << "Matching the keypoints..." << endl;
    tstart = time(0);
    num_matchings = compute_asift_matches(num_of_tilts1, num_of_tilts2, wS1, hS1, wS2,
                                          hS2, verb, keys1, keys2, matchings, siftparameters);
    tend = time(0);
    cout << "Keypoints matching accomplished in " << difftime(tend, tstart) << " seconds." << endl;

    // ---- Output image containing line matches (the two images are concatenated one above the other)
    int band_w = 20; // insert a white band of width band_w between the two images for better visibility

    int wo = MAX(w1, w2);
    int ho = h1 + h2 + band_w;

    float* opixelsASIFT = new float[wo * ho];

    for (int j = 0; j < (int) ho; j++)
        for (int i = 0; i < (int) wo; i++) opixelsASIFT[j * wo + i] = 255;

    // Copy both images to output
    for (int j = 0; j < (int) h1; j++)
        for (int i = 0; i < (int) w1; i++) opixelsASIFT[j * wo + i] = ipixels1[j * w1 + i];

    for (int j = 0; j < (int) h2; j++)
        for (int i = 0; i < (int) w2; i++) opixelsASIFT[(h1 + band_w + j) * wo + i] = ipixels2[j * w2 + i];

    // Draw matches (keypoint coordinates are scaled back to the original image size)
    matchingslist::iterator ptr = matchings.begin();
    for (int i = 0; i < (int) matchings.size(); i++, ptr++)
    {
        draw_line(opixelsASIFT, (int) (zoom1 * ptr->first.x), (int) (zoom1 * ptr->first.y),
                  (int) (zoom2 * ptr->second.x), (int) (zoom2 * ptr->second.y) + h1 + band_w, 255.0f, wo, ho);
    }

    // Save imgOut
    write_png_f32(argv[3], opixelsASIFT, wo, ho, 1);

    delete[] opixelsASIFT; /*memcheck*/

    // ---- Output image containing line matches (the two images are concatenated one aside the other)
    int woH = w1 + w2 + band_w;
    int hoH = MAX(h1, h2);

    float* opixelsASIFT_H = new float[woH * hoH];

    for (int j = 0; j < (int) hoH; j++)
        for (int i = 0; i < (int) woH; i++) opixelsASIFT_H[j * woH + i] = 255;

    // Copy both images to output
    for (int j = 0; j < (int) h1; j++)
        for (int i = 0; i < (int) w1; i++) opixelsASIFT_H[j * woH + i] = ipixels1[j * w1 + i];

    for (int j = 0; j < (int) h2; j++)
        for (int i = 0; i < (int) w2; i++) opixelsASIFT_H[j * woH + w1 + band_w + i] = ipixels2[j * w2 + i];

    // Draw matches
    matchingslist::iterator ptrH = matchings.begin();
    for (int i = 0; i < (int) matchings.size(); i++, ptrH++)
    {
        draw_line(opixelsASIFT_H, (int) (zoom1 * ptrH->first.x), (int) (zoom1 * ptrH->first.y),
                  (int) (zoom2 * ptrH->second.x) + w1 + band_w, (int) (zoom2 * ptrH->second.y), 255.0f, woH, hoH);
    }

    // Save imgOut
    write_png_f32(argv[4], opixelsASIFT_H, woH, hoH, 1);

    delete[] opixelsASIFT_H; /*memcheck*/

    // Write the coordinates of the matched points (col1, row1, col2, row2) to the file argv[5]
    std::ofstream file(argv[5]);
    if (file.is_open())
    {
        // Write the number of matchings in the first line
        file << num_matchings << std::endl;

        matchingslist::iterator ptr = matchings.begin();
        for (int i = 0; i < (int) matchings.size(); i++, ptr++)
        {
            file << zoom1 * ptr->first.x << " " << zoom1 * ptr->first.y << " " << zoom2 * ptr->second.x <<
                " " << zoom2 * ptr->second.y << std::endl;
        }
    }
    else
    {
        std::cerr << "Unable to open the file matchings.";
    }
    file.close();

    // Write all the keypoints (col, row, scale, orientation, descriptor (128 integers)) to
    // the file argv[6] (so that the users can match the keypoints with their own matching algorithm if they wish to)
    // keypoints in the 1st image
    std::ofstream file_key1(argv[6]);
    if (file_key1.is_open())
    {
        // Follow the same convention of David Lowe:
        // the first line contains the number of keypoints and the length of the descriptors (128)
        file_key1 << num_keys1 << " " << VecLength << " " << std::endl;
        for (int tt = 0; tt < (int) keys1.size(); tt++)
        {
            for (int rr = 0; rr < (int) keys1[tt].size(); rr++)
            {
                keypointslist::iterator ptr = keys1[tt][rr].begin();
                for (int i = 0; i < (int) keys1[tt][rr].size(); i++, ptr++)
                {
                    file_key1 << zoom1 * ptr->x << " " << zoom1 * ptr->y << " " << zoom1 * ptr->scale << " " << ptr->angle;
                    for (int ii = 0; ii < (int) VecLength; ii++)
                    {
                        file_key1 << " " << ptr->vec[ii];
                    }
                    file_key1 << std::endl;
                }
            }
        }
    }
    else
    {
        std::cerr << "Unable to open the file keys1.";
    }
    file_key1.close();

    // keypoints in the 2nd image (written to argv[7])
    std::ofstream file_key2(argv[7]);
    if (file_key2.is_open())
    {
        // Follow the same convention of David Lowe:
        // the first line contains the number of keypoints and the length of the descriptors (128)
        file_key2 << num_keys2 << " " << VecLength << " " << std::endl;
        for (int tt = 0; tt < (int) keys2.size(); tt++)
        {
            for (int rr = 0; rr < (int) keys2[tt].size(); rr++)
            {
                keypointslist::iterator ptr = keys2[tt][rr].begin();
                for (int i = 0; i < (int) keys2[tt][rr].size(); i++, ptr++)
                {
                    file_key2 << zoom2 * ptr->x << " " << zoom2 * ptr->y << " " << zoom2 * ptr->scale << " " << ptr->angle;
                    for (int ii = 0; ii < (int) VecLength; ii++)
                    {
                        file_key2 << " " << ptr->vec[ii];
                    }
                    file_key2 << std::endl;
                }
            }
        }
    }
    else
    {
        std::cerr << "Unable to open the file keys2.";
    }
    file_key2.close();

    return 0;
}
```
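Hard-coding paths by assigning to argv[1], argv[2], and so on is fragile. When the program is started without arguments, argv has only one entry, so everything from argv[1] onwards is out of bounds. A safer pattern is to use the real arguments when they are given and fall back to hard-coded defaults otherwise. resolveArgs below is a hypothetical helper sketching that idea. It skips argv[0] (the .exe path) and overrides the defaults position by position.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper: argv[i] (i >= 1) replaces defaults[i - 1]; missing arguments keep their default.
// argv[0] is the executable itself and is ignored; extra arguments beyond the defaults are ignored too.
std::vector<std::string> resolveArgs(int argc, char** argv, const std::vector<std::string>& defaults)
{
    std::vector<std::string> args = defaults;
    for (int i = 1; i < argc && static_cast<std::size_t>(i - 1) < args.size(); ++i)
        args[i - 1] = argv[i];
    return args;
}
```

In demo_ASIFT, args[k].c_str() would then be used wherever the original reads argv[k + 1]. Running from cmd with real paths and double-clicking in Visual Studio both keep working.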

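The original images were really too large, so I reduced their resolution a little. The output is shown below:

Finally, it works. That was hard; it took me several days.

I ran both programs above at the same time. Overall, the first approach is faster, but it requires reducing the image resolution.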
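Part of why ASIFT is slow: it runs SIFT on many simulated views of each image. In the Morel-Yu paper, the tilts are t = 1, √2, 2, ..., (√2)^(N-1), which matches the num_of_tilts comment in demo_ASIFT. For each tilt t > 1, the rotation is sampled at φ = 0, b/t, 2b/t, ... below 180° with b ≈ 72°. The sketch below counts those views for a given number of tilts. countAsiftViews is a hypothetical name, and the demo's compute_asift_keypoints may round the number of rotations slightly differently.

```cpp
#include <cmath>

// Hypothetical helper: number of simulated views per image under the paper's sampling rule.
int countAsiftViews(int numTilts, double bDegrees = 72.0)
{
    int views = 0;
    for (int k = 0; k < numTilts; ++k) {
        if (k == 0) {           // t = 1: the original image, no rotation needed
            views += 1;
            continue;
        }
        double t = std::pow(std::sqrt(2.0), k);   // tilt t = sqrt(2)^k
        double limit = 180.0 * t / bDegrees;      // phi = j*b/t < 180  <=>  j < limit
        // count integers j >= 0 with j < limit; the epsilon absorbs rounding when
        // limit is mathematically an integer (e.g. t = 2 gives limit = 5)
        views += static_cast<int>(std::ceil(limit - 1e-9));
    }
    return views;
}
```

With num_of_tilts = 7, as in the code above, this rule gives 63 views per image. With num_of_tilts = 1 (the commented-out value), there is only one view, which amounts to plain SIFT on the resized image. Lowering num_of_tilts is therefore another way to trade robustness to viewpoint changes for speed.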