Fast-Rcnn介绍

本文转载自:https://saicoco.github.io/

Fast-RCNN

Fast-RCNN之所以称为Fast,较RCNN快在proposals获取:RCNN将一张图上的所有proposals都输入到CNN中,而Fast-RCNN利用卷积的平移不变形,一次性将所有的proposals投影到卷积后的feature maps上,

极大的减少了运算量;其次则是端到端的训练,不像以前的模型:Selective search+Hog+SVM, SS+CNN+SVM这种分阶段训练手法,使得模型学习到的特征更为有效。

Fast-RCNN 原理

上图

就上图开讲吧,Fast-RCNN可以分为这么几个模块:

- roi_data_layer

- Deep ConvNet

- roi_pooling_layer

- softmax & box regressor

roi_data_layer主要负责分别向Deep ConvNet和roi_pooling_layer提供整张图片和图片中proposals, labels等,

而Deep ConvNet则从一张图片中提取feature maps, 利用得到的feature maps, roi_data_layer将图片中

proposals投影到其对应feature maps上,然后利用roi_pooling_layer将ROI采样得到固定长度特征向量(ROI feature vector),

然后送入最后的softmax和bbox regressor。这里主要详细讲讲roi_data_layer和roi_pooling_layer

roi_pooling_layer

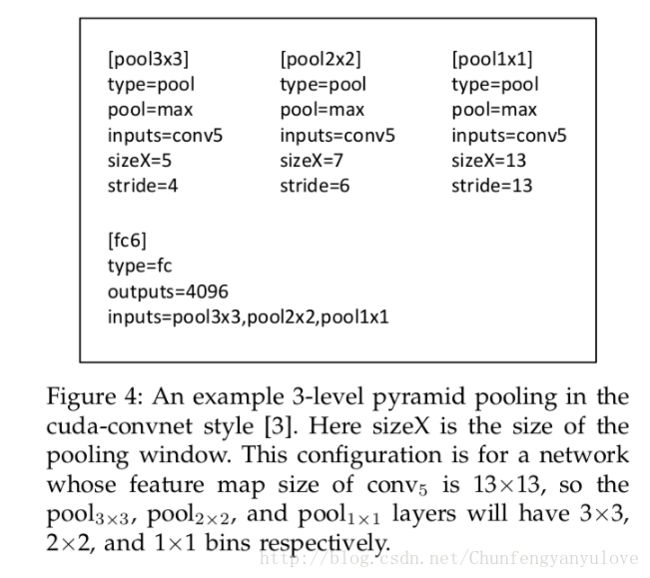

roi_pooling的作用是,将形状不一的propoasals通过maxpooling得到固定大小的maps,其思想来源于SPP-Net[^2],不同之处在于,SPP-Net是多层

池化,roi_pooling是单层。如下图所示:

为了将卷积层最后一层输出与全连接层匹配,利用SPP生成多个指定池化maps,使得对于不定尺寸的图片不需要经过放缩等操作即可输入到网络中。计算方法如下:

如上图,对于MxM的feature maps,预得到poolNxN,则需要计算滑动窗口大小和步长,通过步长预先计算,实现对任意pool结果的实现,此处滑动窗口win_size = ceil(M/N), stride = floor(M/N)

有了合适的win_size和stride,便可实现图片不定大小的输入。

对于roi_pooling,也是如此,输入为不定大小的proposals,整个训练过程为end-to-end,因此需要SPP一样的层来实现这样的功能:对于不定大小的proposals,可以映射到固定大小的maps。下面就结合代码

分析一下roi_pooling到底干了些什么:

#include ::LayerSetUp(const vector::Reshape(const vector::Forward_cpu(const vector(roi_height)

/ static_cast(pooled_height_);

const Dtype bin_size_w = static_cast(roi_width)

/ static_cast(pooled_width_);

const Dtype* batch_data = bottom_data + bottom[0]->offset(roi_batch_ind);

for (int c = 0; c < channels_; ++c) {

for (int ph = 0; ph < pooled_height_; ++ph) {

for (int pw = 0; pw < pooled_width_; ++pw) {

// Compute pooling region for this output unit:

// start (included) = floor(ph * roi_height / pooled_height_)

// end (excluded) = ceil((ph + 1) * roi_height / pooled_height_)

// 利用比例关系,得到maxpool中每个位置对应feature maps的位置区域,而这是相对于0开始的,因此

// 需要加上roi的起始坐标

int hstart = static_cast<int>(floor(static_cast(ph)

* bin_size_h));

int wstart = static_cast<int>(floor(static_cast(pw)

* bin_size_w));

int hend = static_cast<int>(ceil(static_cast(ph + 1)

* bin_size_h));

int wend = static_cast<int>(ceil(static_cast(pw + 1)

* bin_size_w));

hstart = min(max(hstart + roi_start_h, 0), height_);

hend = min(max(hend + roi_start_h, 0), height_);

wstart = min(max(wstart + roi_start_w, 0), width_);

wend = min(max(wend + roi_start_w, 0), width_);

bool is_empty = (hend <= hstart) || (wend <= wstart);

const int pool_index = ph * pooled_width_ + pw;

if (is_empty) {

top_data[pool_index] = 0;

argmax_data[pool_index] = -1;

}

// 对每个区域进行maxpooling

for (int h = hstart; h < hend; ++h) {

for (int w = wstart; w < wend; ++w) {

const int index = h * width_ + w;

if (batch_data[index] > top_data[pool_index]) {

top_data[pool_index] = batch_data[index];

argmax_data[pool_index] = index;

}

}

}

}

}

// Increment all data pointers by one channel

batch_data += bottom[0]->offset(0, 1);

top_data += top[0]->offset(0, 1);

argmax_data += max_idx_.offset(0, 1);

}

// Increment ROI data pointer

bottom_rois += bottom[1]->offset(1);

}

}

template <typename Dtype>

void ROIPoolingLayer::Backward_cpu(const vector 以上便是roi_pooling_layer的源码解析,够看。

roi_data_layer

该层主要还是利用SS方法获取proposals并且送入到网络,厉害之处是将一个分阶段的工作与网络成为一体,后续将详细讲解caffe中python_layer的使用,这里先挖个坑,这是一类问题。这里主要讲解

该层的主要功能。Talk cheat, show you code.

“`

def setup(self, bottom, top):

layer_params = yaml.load(self.param_str_)

self._num_classes = layer_params['num_classes']

self._name_to_top_map = {

'data': 0,

'rois': 1,

'labels': 2}

# data blob: holds a batch of N images, each with 3 channels

# The height and width (100 x 100) are dummy values

top[0].reshape(1, 3, 100, 100)

# rois blob: holds R regions of interest, each is a 5-tuple

# (n, x1, y1, x2, y2) specifying an image batch index n and a

# rectangle (x1, y1, x2, y2)

top[1].reshape(1, 5)

# labels blob: R categorical labels in [0, ..., K] for K foreground

# classes plus background

top[2].reshape(1)

if cfg.TRAIN.BBOX_REG:

self._name_to_top_map['bbox_targets'] = 3

self._name_to_top_map['bbox_loss_weights'] = 4

# bbox_targets blob: R bounding-box regression targets with 4

# targets per class

top[3].reshape(1, self._num_classes * 4)

# bbox_loss_weights blob: At most 4 targets per roi are active;

# thisbinary vector sepcifies the subset of active targets

top[4].reshape(1, self._num_classes * 4)

“`

作为网络的第一层,roi_data_layer向网络中提供了data, rois, labels三种数据,data为整张图片,rois为通过selective search方法得到的rois,然后根据overlap判断目标还是背景,得到标签,并且获得一定数量的proposals, labels为rois对应为背景还是目标,可以看出,在输入网络前,这些数据是

处理好的,这是与Faster RCNN不同之处,同时也是fast-RCNN的瓶颈。细节可以查看源码。