Aggregating batch data and implementing group by

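Most readers will be very familiar with SQL. Relational databases go without saying, and more and more big-data systems now support SQL or a SQL-like language as well, for example Hive, ODPS, Presto, Phoenix (on HBase), Galaxy, and CEP engines such as Esper. SQL is close to natural language, which makes it easy to understand and quick to pick up, and it effectively lowers the barrier to entry for a system. Many big-data systems use ANTLR to implement SQL: ANTLR takes care of parsing the SQL grammar and building the abstract syntax tree, so these otherwise complicated steps become much simpler.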

SQL gives us simple aggregates such as sum, avg, max, min and count, and an expression parser such as parsii (https://github.com/scireum/parsii) can be used to implement more complex filter expressions.

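SQL looks like an operation on a static data set. For example, select sum(field1) from tablex sums one field of the table tablex, and a database table is, relatively speaking, a static data set. But SQL can also be applied to streaming data. Computing over a static data set and computing over a stream are essentially the same: each record, event, or tuple is processed on its own, and the per-record results are then aggregated.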
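To make the "same computation for a table and for a stream" point concrete, here is a minimal sketch. The class name RunningSum and its methods are hypothetical and are not part of the code in this post. It folds records into a running state one at a time, and the only difference between the two cases is where the records come from.

```java
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

// Hypothetical illustration: one accumulator, two ways of feeding it.
public class RunningSum {
    private double sum;
    private long count;

    // Fold a single record into the running state.
    public void accept(double value) {
        sum += value;
        count++;
    }

    public double sum() { return sum; }

    public long count() { return count; }

    // "Static" data set: iterate over a finite list of rows.
    public static double sumOfBatch(List<Double> rows) {
        RunningSum agg = new RunningSum();
        for (double v : rows) {
            agg.accept(v);
        }
        return agg.sum();
    }

    // "Stream": consume events one by one as they become available.
    public static double sumOfStream(Iterator<Double> events) {
        RunningSum agg = new RunningSum();
        while (events.hasNext()) {
            agg.accept(events.next());
        }
        return agg.sum();
    }

    public static void main(String[] args) {
        List<Double> data = Arrays.asList(1.0, 2.5, 4.0);
        System.out.println(sumOfBatch(data));             // 7.5
        System.out.println(sumOfStream(data.iterator())); // 7.5
    }
}
```

For an unbounded stream the result would be read per window rather than at the end, which is why the rest of this post works on a finite window of data.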

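The execution plan that results from parsing and compiling the SQL with ANTLR differs completely from system to system: in Hive it is an MR job, in Galaxy it is a Storm topology, and so on. So suppose we have a window of data, in other words a finite data set. How do we group it by field and aggregate each group?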

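Sometimes we do aggregation inside Storm (without Trident), and then you have to implement the group-by logic yourself. We could of course use an open-source CEP engine such as Esper or Siddhi, where a bit of SQL is enough to express the logic. But CEP engines generally consume a fair amount of memory and CPU, and when all we need is basic aggregation they are not worth the cost.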

So let's implement a simple group-by aggregation engine ourselves:

1. First define a JavaBean that describes one type of event (or record). It contains the event's schema and some tag data.

import java.io.Serializable;

import java.util.Map;

public class EventBase implements Serializable{

private long timestamp;

private Map<String, String> tags;

public EventBase(){

}

public long getTimestamp() {

return timestamp;

}

public void setTimestamp(long timestamp) {

this.timestamp = timestamp;

}

public Map<String, String> getTags() {

return tags;

}

public void setTags(Map<String, String> tags) {

this.tags = tags;

}

@Override
public String toString(){
    // StringBuilder is enough here; no synchronization is needed.
    StringBuilder sb = new StringBuilder();
    sb.append("timestamp:");
    sb.append(timestamp);
    sb.append(", tags: ");
    if(tags != null){
        for(Map.Entry<String, String> entry : tags.entrySet()){
            sb.append(entry.toString());
            sb.append(",");
        }
    }
    return sb.toString();
}

}

Users can extend this class to define their own events, for example:

public class TestEvent extends EventBase {

private int numHosts;

private Long numClusters;

public int getNumHosts() {

return numHosts;

}

public void setNumHosts(int numHosts) {

this.numHosts = numHosts;

}

public Long getNumClusters() {

return numClusters;

}

public void setNumClusters(Long numClusters) {

this.numClusters = numClusters;

}

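@Override
public String toString(){
    StringBuilder sb = new StringBuilder();
    sb.append(super.toString());
    // Include the fields this subclass adds.
    sb.append(", numHosts:").append(numHosts);
    sb.append(", numClusters:").append(numClusters);
    return sb.toString();
}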

}

2. Define an aggregator interface; it is implemented in the later steps

public interface Aggregator {

public void process(EventBase event) throws Exception;

}

3. Define the aggregate types. For now we support five: sum, avg, max, min and count.

import java.util.regex.Matcher;

import java.util.regex.Pattern;

public enum AggregateType {

count("^(count)$"),

sum("^sum\\((.*)\\)$"),

avg("^avg\\((.*)\\)$"),

max("^max\\((.*)\\)$"),

min("^min\\((.*)\\)$");

private Pattern pattern;

private AggregateType(String patternString){

this.pattern = Pattern.compile(patternString);

}

public AggregateTypeMatcher matcher(String function){

Matcher m = pattern.matcher(function);

if(m.find()){

return new AggregateTypeMatcher(this, true, m.group(1));

}else{

return new AggregateTypeMatcher(this, false, null);

}

}

public static AggregateTypeMatcher matchAll(String function){

for(AggregateType type : values()){

Matcher m = type.pattern.matcher(function);

if(m.find()){

return new AggregateTypeMatcher(type, true, m.group(1));

}

}

return new AggregateTypeMatcher(null, false, null);

}

}

public class AggregateTypeMatcher {

private final AggregateType type;

private final boolean matched;

private final String field;

public AggregateTypeMatcher(AggregateType type, boolean matched, String field){

this.type = type;

this.matched = matched;

this.field = field;

}

public boolean find(){

return this.matched;

}

public String field(){

return this.field;

}

public AggregateType type(){

return this.type;

}

}
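As a rough illustration of what the patterns in AggregateType do, the following self-contained sketch parses a function expression such as sum(numHosts) into a function name and a field name. The class FunctionParseSketch and its parse method are hypothetical and use a single combined pattern instead of the enum above. The handling of count mirrors the original pattern ^(count)$, whose first capture group is the word count itself.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical stand-alone version of the matching done by AggregateType.matchAll.
public class FunctionParseSketch {
    // Group 1: function name, group 2: everything between the outer parentheses.
    private static final Pattern WITH_FIELD =
            Pattern.compile("^(sum|avg|max|min)\\((.*)\\)$");

    // Returns {function, field}, or null when the expression is not recognized.
    public static String[] parse(String expr) {
        if ("count".equals(expr)) {
            // count takes no field; like the original pattern, report "count" as the field.
            return new String[] {"count", "count"};
        }
        Matcher m = WITH_FIELD.matcher(expr);
        if (m.find()) {
            return new String[] {m.group(1), m.group(2)};
        }
        return null;
    }

    public static void main(String[] args) {
        String[] parsed = parse("sum(numHosts)");
        System.out.println(parsed[0] + " over " + parsed[1]); // sum over numHosts
        System.out.println(parse("median(x)") == null);       // true
    }
}
```

Because the field part is matched with .*, the pattern does not validate the field name; an unknown field only surfaces later, when the getter lookup fails.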

4. Implement the aggregator interface

import org.apache.commons.beanutils.PropertyUtils;

import java.beans.PropertyDescriptor;

import java.lang.reflect.InvocationTargetException;

import java.lang.reflect.Method;

import java.util.ArrayList;

import java.util.List;

public abstract class AbstractAggregator implements Aggregator {

private static final String UNASSIGNED = "unassigned";

protected List<String> groupbyFields;

protected List<AggregateType> aggregateTypes;

protected List<String> aggregatedFields;

private Boolean[] _groupbyFieldPlacementCache;

private Method[] _aggregateFieldReflectedMethodCache;

public AbstractAggregator(List<String> groupbyFields, List<AggregateType> aggregateFuntionTypes, List<String> aggregatedFields){

this.groupbyFields = groupbyFields;

this.aggregateTypes = aggregateFuntionTypes;

this.aggregatedFields = aggregatedFields;

_aggregateFieldReflectedMethodCache = new Method[this.aggregatedFields.size()];

_groupbyFieldPlacementCache = new Boolean[this.groupbyFields.size()];

}

public abstract Object result();

protected String createGroupFromTags(EventBase entity, String groupbyField, int i){

String groupbyFieldValue = entity.getTags().get(groupbyField);

if(groupbyFieldValue != null){

_groupbyFieldPlacementCache[i] = true;

return groupbyFieldValue;

}

return null;

}

protected String createGroupFromQualifiers(EventBase entity, String groupbyField, int i){
    try{
        PropertyDescriptor pd = PropertyUtils.getPropertyDescriptor(entity, groupbyField);
        if(pd == null || pd.getReadMethod() == null)
            return null;
        _groupbyFieldPlacementCache[i] = false;
        // The bean property may be numeric (e.g. numHosts), so convert instead of casting to String.
        Object value = pd.getReadMethod().invoke(entity);
        return value == null ? null : String.valueOf(value);
    }catch(NoSuchMethodException | InvocationTargetException | IllegalAccessException ex){
        // An unreadable property counts as "no value"; the caller falls back to UNASSIGNED.
        return null;
    }
}

protected String determineGroupbyFieldValue(EventBase entity, String groupbyField, int i){

Boolean placement = _groupbyFieldPlacementCache[i];

String groupbyFieldValue = null;

if(placement != null){

groupbyFieldValue = placement.booleanValue() ? createGroupFromTags(entity, groupbyField, i) : createGroupFromQualifiers(entity, groupbyField, i);

}else{

groupbyFieldValue = createGroupFromTags(entity, groupbyField, i);

if(groupbyFieldValue == null){

groupbyFieldValue = createGroupFromQualifiers(entity, groupbyField, i);

}

}

groupbyFieldValue = (groupbyFieldValue == null ? UNASSIGNED : groupbyFieldValue);

return groupbyFieldValue;

}

protected List<Double> createPreAggregatedValues(EventBase entity) throws Exception{
    List<Double> values = new ArrayList<Double>();
    int functionIndex = 0;
    for(AggregateType type : aggregateTypes){
        if(type == AggregateType.count){
            // count: every record contributes 1
            values.add(1.0);
        }else{
            String aggregatedField = aggregatedFields.get(functionIndex);
            // Look up the getter once per aggregated field and cache it.
            Method m = _aggregateFieldReflectedMethodCache[functionIndex];
            if (m == null) {
                String tmp = aggregatedField.substring(0, 1).toUpperCase() + aggregatedField.substring(1);
                m = entity.getClass().getMethod("get" + tmp);
                _aggregateFieldReflectedMethodCache[functionIndex] = m;
            }
            // sum, avg, max, min: the pre-aggregated value is the field value itself
            values.add(numberToDouble(m.invoke(entity)));
        }
        functionIndex++;
    }
    return values;
}

protected Double numberToDouble(Object obj) throws Exception {
    if(obj == null){
        // a missing value contributes 0
        return 0.0;
    }
    if(obj instanceof Number){
        // covers Double, Integer, Long and the other boxed numeric types
        return ((Number)obj).doubleValue();
    }
    if(obj instanceof String){
        try{
            return Double.valueOf((String)obj);
        }catch(NumberFormatException ex){
            System.out.println("Datapoint treated as 0 because it cannot be converted to a number: " + obj + " " + ex);
            return 0.0;
        }
    }
    throw new Exception(obj.getClass().toString() + " type is not supported. The aggregated field must be a numeric type such as int, long or double");
}

}

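The aggregator needs three inputs: the aggregated fields, the aggregate types, and the group-by fields. The aggregated-field list and the aggregate-type list correspond element by element. Each aggregate type gets its own aggregation function and factory class. The aggregation works as follows: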

Each record in the data set is first pre-processed:

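For count, the pre-aggregated value is simply 1. For sum, avg, max and min, the pre-aggregated value of a single record is just the value of the corresponding field.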

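At this point we have only pre-processed individual records. Implementing group by means partitioning the records by the group-by fields and then aggregating the records within each group.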
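The two phases described above (pre-aggregate each record, then accumulate per group) can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the implementation used in this post: the class GroupBySketch, the Row type with a single key and a single value, and the method sumPreAggregated are all hypothetical, and only count and sum are covered.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical two-phase group by: per-record pre-aggregation, then per-group accumulation.
public class GroupBySketch {
    // Simplified record: one group-by key and one numeric field.
    public static final class Row {
        public final String key;
        public final double value;

        public Row(String key, double value) {
            this.key = key;
            this.value = value;
        }
    }

    // Phase 1: count contributes 1 per record, every other function the field value.
    public static double preAggregate(String function, Row row) {
        return "count".equals(function) ? 1.0 : row.value;
    }

    // Phase 2: add up the pre-aggregated values inside each group.
    // With function "count" this yields the group size, with "sum" the group total.
    public static Map<String, Double> sumPreAggregated(String function, List<Row> rows) {
        Map<String, Double> perGroup = new TreeMap<String, Double>();
        for (Row row : rows) {
            perGroup.merge(row.key, preAggregate(function, row), Double::sum);
        }
        return perGroup;
    }

    public static void main(String[] args) {
        List<Row> rows = Arrays.asList(new Row("a", 2), new Row("a", 3), new Row("b", 5));
        System.out.println(sumPreAggregated("count", rows)); // {a=2.0, b=1.0}
        System.out.println(sumPreAggregated("sum", rows));   // {a=5.0, b=5.0}
    }
}
```

This also shows why count can reuse the sum accumulator: once every record has been mapped to 1, counting is just summing.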

5. Implement the bucket

import java.util.ArrayList;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

public class GroupbyBucket {

public static Map<String, FunctionFactory> functionFactories =

new HashMap<String, FunctionFactory>();

static{

functionFactories.put(AggregateType.count.name(), new CountFactory());

functionFactories.put(AggregateType.sum.name(), new SumFactory());

functionFactories.put(AggregateType.min.name(), new MinFactory());

functionFactories.put(AggregateType.max.name(), new MaxFactory());

functionFactories.put(AggregateType.avg.name(), new AvgFactory());

}

private List<AggregateType> types;

private Map<List<String>, List<Function>> group2FunctionMap = new HashMap<List<String>, List<Function>>();

public GroupbyBucket(List<AggregateType> types){

this.types = types;

}

public void addDatapoint(List<String> groupbyFieldValues, List<Double> values){

List<Function> functions = group2FunctionMap.get(groupbyFieldValues);

if(functions == null){

functions = new ArrayList<Function>();

for(AggregateType type : types){

functions.add(functionFactories.get(type.name()).createFunction());

}

group2FunctionMap.put(groupbyFieldValues, functions);

}

int functionIndex = 0;

for(Double v : values){

functions.get(functionIndex).run(v);

functionIndex++;

}

}

public Map<List<String>, List<Double>> result(){

Map<List<String>, List<Double>> result = new HashMap<List<String>, List<Double>>();

for(Map.Entry<List<String>, List<Function>> entry : this.group2FunctionMap.entrySet()){

List<Double> values = new ArrayList<Double>();

for(Function f : entry.getValue()){

values.add(f.result());

}

result.put(entry.getKey(), values);

}

return result;

}

public static interface FunctionFactory{

public Function createFunction();

}

public static abstract class Function{

protected int count;

public abstract void run(double v);

public abstract double result();

public int count(){

return count;

}

public void incrCount(){

count ++;

}

}

private static class CountFactory implements FunctionFactory{

@Override

public Function createFunction(){

return new Count();

}

}

private static class Count extends Sum{

public Count(){

super();

}

}

private static class SumFactory implements FunctionFactory{

@Override

public Function createFunction(){

return new Sum();

}

}

private static class Sum extends Function{

private double summary;

public Sum(){

this.summary = 0.0;

}

@Override

public void run(double v){

this.incrCount();

this.summary += v;

}

@Override

public double result(){

return this.summary;

}

}

private static class MinFactory implements FunctionFactory{

@Override

public Function createFunction(){

return new Min();

}

}

public static class Min extends Function{

private double minimum;

public Min(){

this.minimum = Double.MAX_VALUE;

}

@Override

public void run(double v){

if(v < minimum){

minimum = v;

}

this.incrCount();

}

@Override

public double result(){

return minimum;

}

}

private static class MaxFactory implements FunctionFactory{

@Override

public Function createFunction(){

return new Max();

}

}

public static class Max extends Function{

private double maximum;

public Max(){

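// Start below every possible value; starting at 0.0 would report 0 for an all-negative group.
this.maximum = Double.NEGATIVE_INFINITY;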

}

@Override

public void run(double v){

if(v > maximum){

maximum = v;

}

this.incrCount();

}

@Override

public double result(){

return maximum;

}

}

private static class AvgFactory implements FunctionFactory{

@Override

public Function createFunction(){

return new Avg();

}

}

public static class Avg extends Function{

private double total;

public Avg(){

this.total = 0.0;

}

@Override

public void run(double v){

total += v;

this.incrCount();

}

@Override

public double result(){

return this.total/this.count;

}

}

}

6. Implement the grouped aggregation

import java.util.ArrayList;

import java.util.List;

import java.util.Map;

public class AggregatorImple extends AbstractAggregator{

protected GroupbyBucket bucket;

public AggregatorImple(List<String> groupbyFields, List<AggregateType> aggregateFuntionTypes, List<String> aggregatedFields){

super(groupbyFields, aggregateFuntionTypes, aggregatedFields);

bucket = new GroupbyBucket(this.aggregateTypes);

}

public void process(EventBase entity) throws Exception{

List<String> groupbyFieldValues = createGroup(entity);

List<Double> preAggregatedValues = createPreAggregatedValues(entity);

bucket.addDatapoint(groupbyFieldValues, preAggregatedValues);

}

public Map<List<String>, List<Double>> result(){

return bucket.result();

}

protected List<String> createGroup(EventBase entity){

List<String> groupbyFieldValues = new ArrayList<String>();

int i = 0;

for(String groupbyField : groupbyFields){

String groupbyFieldValue = determineGroupbyFieldValue(entity, groupbyField, i++);

groupbyFieldValues.add(groupbyFieldValue);

}

return groupbyFieldValues;

}

}
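The bucket keys its map by List<String>, the list of group-by values for a record. This works because the List contract defines equals and hashCode by content, so two separately created lists with the same elements land in the same group. The sketch below demonstrates that property in isolation; the class ListKeySketch and its countByKey method are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical demonstration of lists used as composite group keys.
public class ListKeySketch {
    // Counts how many times each composite key occurs.
    public static Map<List<String>, Integer> countByKey(List<List<String>> keys) {
        Map<List<String>, Integer> counts = new HashMap<List<String>, Integer>();
        for (List<String> key : keys) {
            counts.merge(key, 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<List<String>> keys = new ArrayList<List<String>>();
        keys.add(Arrays.asList("cluster1", "dc1"));
        // A different list instance and implementation, but equal by content.
        keys.add(new ArrayList<String>(Arrays.asList("cluster1", "dc1")));
        keys.add(Arrays.asList("cluster2", "dc1"));

        Map<List<String>, Integer> counts = countByKey(keys);
        System.out.println(counts.size());                                // 2
        System.out.println(counts.get(Arrays.asList("cluster1", "dc1"))); // 2
    }
}
```

Two consequences follow. An empty list is a valid key, which is how an aggregation with no group-by fields ends up with a single global group. And a key list should not be modified after it has been put into the map, since its hash code would change.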

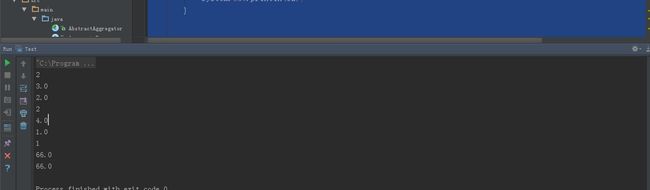

7. Test and verify

import java.util.*;

/**

* Created by dongbin.db on 2015/12/22.

*/

public class Test {

private TestEvent createEntity(final String cluster, final String datacenter,

final String rack, int numHosts, long numClusters){

TestEvent entity = new TestEvent();

Map<String, String> tags = new HashMap<String, String>(){{

put("cluster", cluster);

put("datacenter", datacenter);

put("rack", rack);

}};

entity.setTags(tags);

entity.setNumHosts(numHosts);

entity.setNumClusters(numClusters);

return entity;

}

public void testSingleGroupbyFieldSingleFunctionForCount(){

TestEvent[] entities = new TestEvent[5];

entities[0] = createEntity("cluster1", "dc1", "rack123", 12, 2);

entities[1] = createEntity("cluster1", "dc1", "rack123", 20, 1);

entities[2] = createEntity("cluster1", "dc1", "rack128", 10, 0);

entities[3] = createEntity("cluster2", "dc1", "rack125", 9, 2);

entities[4] = createEntity("cluster2", "dc2", "rack126", 15, 2);

AggregatorImple agg = new AggregatorImple(Arrays.asList("cluster"), Arrays.asList(AggregateType.count),

Arrays.asList("*"));

try{

for(TestEvent e : entities){

agg.process(e);

}

Map<List<String>, List<Double>> result = agg.result();

System.out.println(result.size());

System.out.println(result.get(Arrays.asList("cluster1")).get(0));

System.out.println(result.get(Arrays.asList("cluster2")).get(0));

}catch(Exception ex){

System.out.println(ex);

}

agg = new AggregatorImple(Arrays.asList("datacenter"), Arrays.asList(AggregateType.count), Arrays.asList("*"));

try{

for(TestEvent e : entities){

agg.process(e);

}

Map<List<String>, List<Double>> result = agg.result();

System.out.println(result.size());

System.out.println(result.get(Arrays.asList("dc1")).get(0));

System.out.println(result.get(Arrays.asList("dc2")).get(0));

}catch(Exception ex){

System.out.println(ex);

}

agg = new AggregatorImple(new ArrayList<String>(),

Arrays.asList(AggregateType.sum), Arrays.asList("numHosts"));

try{

for(TestEvent e : entities){

agg.process(e);

}

Map<List<String>, List<Double>> result = agg.result();

System.out.println(result.size());

System.out.println(result.get(new ArrayList<String>()).get(0));

System.out.println((double)(entities[0].getNumHosts()+entities[1].getNumHosts()+

entities[2].getNumHosts()+entities[3].getNumHosts()+entities[4].getNumHosts()));

}catch(Exception ex){

System.out.println(ex);

}

}

public static void main(String[] args) {

Test test = new Test();

test.testSingleGroupbyFieldSingleFunctionForCount();

}

}
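To know what the test above should print, the expected values can be computed independently of the aggregation engine. The following sketch hard-codes the five test entities and recomputes the three aggregations with plain loops. The class ExpectedResultsSketch and its methods are hypothetical and exist only as a cross-check.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical cross-check for the test data, independent of AggregatorImple.
public class ExpectedResultsSketch {
    // Columns of the five test entities, in the order they are created in the test.
    public static final String[] CLUSTER = {"cluster1", "cluster1", "cluster1", "cluster2", "cluster2"};
    public static final String[] DATACENTER = {"dc1", "dc1", "dc1", "dc1", "dc2"};
    public static final int[] NUM_HOSTS = {12, 20, 10, 9, 15};

    // count(*) grouped by the given column
    public static Map<String, Integer> countBy(String[] column) {
        Map<String, Integer> counts = new TreeMap<String, Integer>();
        for (String key : column) {
            counts.merge(key, 1, Integer::sum);
        }
        return counts;
    }

    // sum(numHosts) over all entities, with no group-by field
    public static double sumNumHosts() {
        double total = 0.0;
        for (int n : NUM_HOSTS) {
            total += n;
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.println(countBy(CLUSTER));    // {cluster1=3, cluster2=2}
        System.out.println(countBy(DATACENTER)); // {dc1=4, dc2=1}
        System.out.println(sumNumHosts());       // 66.0
    }
}
```

If the engine behaves as described, the test should therefore report two groups with counts 3.0 and 2.0 for cluster, two groups with counts 4.0 and 1.0 for datacenter, and a single group with the sum 66.0 for numHosts.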

Code: https://github.com/sumpan/groupby