Hadoop 2.2.0 Production Environment Simulation

// Provided by Red Hat bind package to configure the ISC BIND named(8) DNS

// server as a caching only nameserver (as a localhost DNS resolver only).

//

// See /usr/share/doc/bind*/sample/ for example named configuration files.

//

options {

listen-on port 53 { any; };

listen-on-v6 port 53 { ::1; };

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

allow-query { any; };

recursion yes;

forwarders { 202.101.172.35; };

dnssec-enable yes;

dnssec-validation yes;

dnssec-lookaside auto;

/* Path to ISC DLV key */

bindkeys-file "/etc/named.iscdlv.key";

managed-keys-directory "/var/named/dynamic";

};

logging {

channel default_debug {

file "data/named.run";

severity dynamic;

};

};

zone "." IN {

type hint;

file "named.ca";

};

include "/etc/named.rfc1912.zones";

include "/etc/named.root.key";

// named.rfc1912.zones:

//

// Provided by Red Hat caching-nameserver package

//

// ISC BIND named zone configuration for zones recommended by

// RFC 1912 section 4.1 : localhost TLDs and address zones

// and http://www.ietf.org/internet-drafts/draft-ietf-dnsop-default-local-zones-02.txt

// (c)2007 R W Franks

//

// See /usr/share/doc/bind*/sample/ for example named configuration files.

//

zone "localhost.localdomain" IN {

type master;

file "named.localhost";

allow-update { none; };

};

zone "localhost" IN {

type master;

file "named.localhost";

allow-update { none; };

};

// Comment out the following lines

//zone "1.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.ip6.arpa" IN {

// type master;

// file "named.loopback";

// allow-update { none; };

//};

//zone "1.0.0.127.in-addr.arpa" IN {

// type master;

// file "named.loopback";

// allow-update { none; };

//};

zone "0.in-addr.arpa" IN {

type master;

file "named.empty";

allow-update { none; };

};

zone "product" IN {

type master;

file "product.zone";

};

zone "100.168.192.in-addr.arpa" IN {

type master;

file "100.168.192.zone";

};

$TTL 86400

@ IN SOA productserver.product. root.product. (

2013122801 ; serial (d. adams)

3H ; refresh

15M ; retry

1W ; expiry

1D ) ; minimum

@ IN NS productserver.product.

productserver IN A 192.168.100.200

; Forward lookup records

product201 IN A 192.168.100.201

product202 IN A 192.168.100.202

product203 IN A 192.168.100.203

product204 IN A 192.168.100.204

product211 IN A 192.168.100.211

product212 IN A 192.168.100.212

product213 IN A 192.168.100.213

product214 IN A 192.168.100.214

$TTL 86400

@ IN SOA productserver.product. root.product. (

2013122801 ; serial (d. adams)

3H ; refresh

15M ; retry

1W ; expiry

1D ) ; minimum

@ IN NS productserver.product.

200 IN PTR productserver.product.

; Reverse lookup records

201 IN PTR product201.product.

202 IN PTR product202.product.

203 IN PTR product203.product.

204 IN PTR product204.product.

211 IN PTR product211.product.

212 IN PTR product212.product.

213 IN PTR product213.product.

214 IN PTR product214.product.
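
The forward and reverse records above follow a regular pattern, so they can be generated rather than typed by hand. The following is a minimal sketch, assuming the same `product` name prefix, `product` zone, and 192.168.100.0/24 subnet as the zone files; the `gen_records` helper is hypothetical and not part of the original setup.

```shell
#!/bin/bash
# Hypothetical helper (not part of the original setup): print forward (A)
# or reverse (PTR) records for the given host numbers. The name prefix,
# zone name, and subnet mirror the zone files above.
gen_records() {
    local mode=$1; shift
    local n
    for n in "$@"; do
        case $mode in
            forward) printf 'product%s IN A 192.168.100.%s\n' "$n" "$n" ;;
            reverse) printf '%s IN PTR product%s.product.\n' "$n" "$n" ;;
        esac
    done
}

# Lines for product.zone
gen_records forward 201 202 203 204 211 212 213 214
# Lines for 100.168.192.zone
gen_records reverse 201 202 203 204 211 212 213 214
```

Generating both files from one host list keeps the A and PTR records in step. Remember to raise the serial number whenever a zone file changes.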

#!/bin/bash

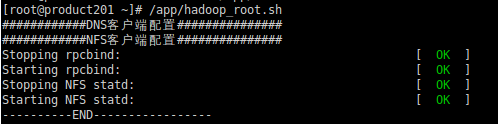

echo "############ DNS client configuration ############"

sed -i 's/^hosts:[[:space:]]*files[[:space:]][[:space:]]*dns/hosts:      dns files/' /etc/nsswitch.conf

echo "nameserver 192.168.100.200" >>/etc/resolv.conf

chkconfig NetworkManager off

chkconfig autofs off

echo "############ NFS client configuration ############"

chkconfig rpcbind on

chkconfig nfslock on

service rpcbind restart

service nfslock restart

mkdir -p /mnt/hadoop

mkdir -p /mnt/.ssh

mount -t nfs productserver:/share/hadoop /mnt/hadoop

mount -t nfs productserver:/share/.ssh /mnt/.ssh

echo "mount -t nfs productserver:/share/hadoop /mnt/hadoop">>/etc/rc.d/rc.local

echo "mount -t nfs productserver:/share/.ssh /mnt/.ssh">>/etc/rc.d/rc.local

mkdir -p /app/hadoop/

chown -R hadoop:hadoop /app/hadoop

echo "----------END-----------------"
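
The client script above appends to /etc/resolv.conf and /etc/rc.d/rc.local with `>>`, so running it a second time duplicates those lines. One way to make the appends repeatable is sketched below; the `append_once` helper is hypothetical, and the demonstration writes to a scratch file instead of the real system files.

```shell
#!/bin/bash
# Hypothetical helper (not part of the original script): append a line to a
# file only if that exact line is not already present.
append_once() {
    local line=$1 file=$2
    # -x matches the whole line, -F treats the pattern as a fixed string,
    # -q suppresses output; a missing file counts as "not present".
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Demonstration against a scratch file rather than /etc/resolv.conf
demo=$(mktemp)
append_once "nameserver 192.168.100.200" "$demo"
append_once "nameserver 192.168.100.200" "$demo"   # second call is a no-op
cat "$demo"
```

In the script itself, the same helper could replace each `echo ... >>` line, for example `append_once "nameserver 192.168.100.200" /etc/resolv.conf`.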

#!/bin/bash

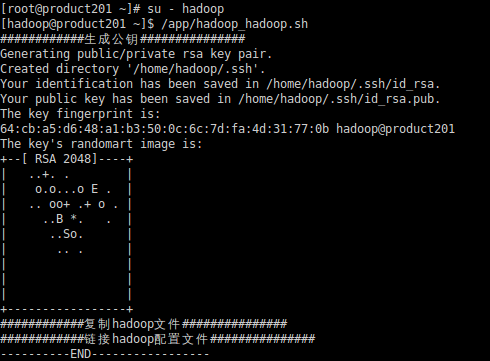

echo "############ Generate the public key ############"

ssh-keygen -t rsa -N123456 -f /home/hadoop/.ssh/id_rsa

cat /home/hadoop/.ssh/id_rsa.pub>>/mnt/.ssh/authorized_keys

ln -sf /mnt/.ssh/authorized_keys /home/hadoop/.ssh/authorized_keys

hostname>>/mnt/.ssh/slaves

echo "############ Copy the Hadoop files ############"

cp -r /mnt/hadoop/hadoop220 /app/hadoop/

echo "############ Link the Hadoop configuration files ############"

rm -rf /app/hadoop/hadoop220/etc/hadoop/*

ln -sf /mnt/.ssh/capacity-scheduler.xml /app/hadoop/hadoop220/etc/hadoop/capacity-scheduler.xml

ln -sf /mnt/.ssh/configuration.xsl /app/hadoop/hadoop220/etc/hadoop/configuration.xsl

ln -sf /mnt/.ssh/container-executor.cfg /app/hadoop/hadoop220/etc/hadoop/container-executor.cfg

ln -sf /mnt/.ssh/core-site.xml /app/hadoop/hadoop220/etc/hadoop/core-site.xml

ln -sf /mnt/.ssh/hadoop-env.cmd /app/hadoop/hadoop220/etc/hadoop/hadoop-env.cmd

ln -sf /mnt/.ssh/hadoop-env.sh /app/hadoop/hadoop220/etc/hadoop/hadoop-env.sh

ln -sf /mnt/.ssh/hadoop-metrics2.properties /app/hadoop/hadoop220/etc/hadoop/hadoop-metrics2.properties

ln -sf /mnt/.ssh/hadoop-metrics.properties /app/hadoop/hadoop220/etc/hadoop/hadoop-metrics.properties

ln -sf /mnt/.ssh/hadoop-policy.xml /app/hadoop/hadoop220/etc/hadoop/hadoop-policy.xml

ln -sf /mnt/.ssh/hdfs-site.xml /app/hadoop/hadoop220/etc/hadoop/hdfs-site.xml

ln -sf /mnt/.ssh/httpfs-env.sh /app/hadoop/hadoop220/etc/hadoop/httpfs-env.sh

ln -sf /mnt/.ssh/httpfs-log4j.properties /app/hadoop/hadoop220/etc/hadoop/httpfs-log4j.properties

ln -sf /mnt/.ssh/httpfs-signature.secret /app/hadoop/hadoop220/etc/hadoop/httpfs-signature.secret

ln -sf /mnt/.ssh/httpfs-site.xml /app/hadoop/hadoop220/etc/hadoop/httpfs-site.xml

ln -sf /mnt/.ssh/log4j.properties /app/hadoop/hadoop220/etc/hadoop/log4j.properties

ln -sf /mnt/.ssh/mapred-env.cmd /app/hadoop/hadoop220/etc/hadoop/mapred-env.cmd

ln -sf /mnt/.ssh/mapred-env.sh /app/hadoop/hadoop220/etc/hadoop/mapred-env.sh

ln -sf /mnt/.ssh/mapred-queues.xml.template /app/hadoop/hadoop220/etc/hadoop/mapred-queues.xml.template

ln -sf /mnt/.ssh/mapred-site.xml /app/hadoop/hadoop220/etc/hadoop/mapred-site.xml

ln -sf /mnt/.ssh/mapred-site.xml.template /app/hadoop/hadoop220/etc/hadoop/mapred-site.xml.template

ln -sf /mnt/.ssh/masters /app/hadoop/hadoop220/etc/hadoop/masters

ln -sf /mnt/.ssh/slaves /app/hadoop/hadoop220/etc/hadoop/slaves

ln -sf /mnt/.ssh/ssl-client.xml.example /app/hadoop/hadoop220/etc/hadoop/ssl-client.xml.example

ln -sf /mnt/.ssh/ssl-server.xml.example /app/hadoop/hadoop220/etc/hadoop/ssl-server.xml.example

ln -sf /mnt/.ssh/yarn-env.cmd /app/hadoop/hadoop220/etc/hadoop/yarn-env.cmd

ln -sf /mnt/.ssh/yarn-env.sh /app/hadoop/hadoop220/etc/hadoop/yarn-env.sh

ln -sf /mnt/.ssh/yarn-site.xml /app/hadoop/hadoop220/etc/hadoop/yarn-site.xml

echo "----------END-----------------"

cat ccc | awk '{ print "ln -sf " $10 }'

cat ccc | awk '{ print "ln -sf /mnt/.ssh/" $10 " /app/hadoop/hadoop220/etc/hadoop/" $10 }'
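
The two awk one-liners above appear to build the long list of `ln -sf` commands from a file listing saved in `ccc`. As an alternative, a loop can create the links directly without an intermediate file. This is a minimal sketch: the `link_configs` helper is hypothetical, and the demonstration uses scratch directories in place of /mnt/.ssh and /app/hadoop/hadoop220/etc/hadoop.

```shell
#!/bin/bash
# Hypothetical helper (not part of the original script): symlink every
# regular file found in a shared directory into a target directory.
link_configs() {
    local src=$1 dst=$2 f
    for f in "$src"/*; do
        [ -f "$f" ] || continue   # skip subdirectories and an unmatched glob
        ln -sf "$f" "$dst/$(basename "$f")"
    done
}

# Demonstration with scratch directories standing in for the real paths
src=$(mktemp -d)
dst=$(mktemp -d)
touch "$src/core-site.xml" "$src/hdfs-site.xml"
link_configs "$src" "$dst"
ls "$dst"
```

On a cluster node the call would be `link_configs /mnt/.ssh /app/hadoop/hadoop220/etc/hadoop`. Note that this links every regular file in the shared directory, including `authorized_keys`, which the explicit list above does not link into the Hadoop configuration directory; filter the loop if that matters.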