Kubernetes operator (6): CRD controller development in practice


- This is the sixth article in the Kubernetes operator study series. The previous five articles covered each stage of CRD development; in this one we design a real CRD and write a controller for it

- The analysis is based on the kubernetes v1.24.0 code

- Quick links for the Kubernetes operator study series

- Kubernetes operator (1): client-go

- Kubernetes operator (2): CRD

- Kubernetes operator (3): code-generator

- Kubernetes operator (4): controller-tools

- Kubernetes operator (5): api and apimachinery

- Kubernetes operator (6): CRD controller development in practice

- Kubernetes operator (7): controller-runtime

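The source code for this article is in the following repository (a star would be much appreciated): https://github.com/graham924/share-code-operator-study

1. Requirements analysis

- Design a CRD named App

- AppSpec may contain a subset of the properties of a Deployment and a subset of the properties of a Service

- Write a controller for App, named AppController

- When an App is created, AppController inspects its AppSpec; if it contains a Deployment or a Service template, the controller creates the corresponding Deployment or Service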

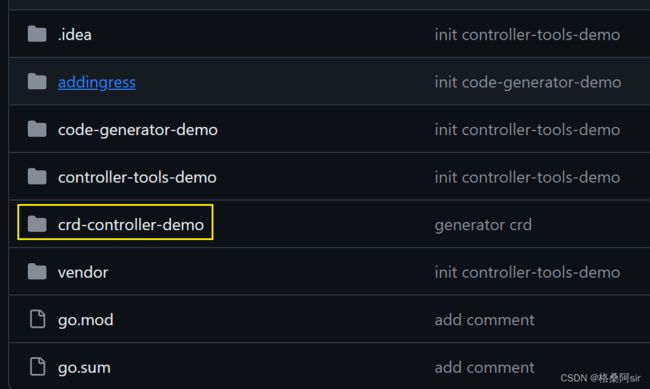

2. Project initialization

2.1. Create directories and files

2.2. Initialize the project

- Initialize the Go module and fetch client-go

cd crd-controller-demo
go mod init crd-controller-demo
go get k8s.io/client-go
go get k8s.io/apimachinery

2.3. Write boilerplate.go.txt

- This file holds the comment header that is placed at the top of every generated file. Its path is passed to the code-generator script

/*
Copyright 2019 The Kubernetes Authors.

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.

@Time : 2024/2
@Author : grahamzhu
@Software: GoLand
*/

2.4. Write tools.go

- We want to use code-generator, but nothing in our code imports a code-generator package yet, so go mod would not keep it as a dependency. We therefore need a file whose only job is to import code-generator. By convention this file is called tools.go

//go:build tools
// +build tools

/*
Copyright 2019 The Kubernetes Authors.

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
*/

// This package imports things required by build scripts, to force `go mod` to see them as dependencies
package tools

import _ "k8s.io/code-generator"

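2.5. Write appcontroller/register.go

- Note that this is the register.go in the appcontroller directory, not the one in a version directory. The register.go in the version directory is written later, after the code-generator script has been run

package appcontroller

const (
	GroupName = "appcontroller.k8s.io"
)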

2.6. Write doc.go

// +k8s:deepcopy-gen=package
// +groupName=appcontroller.k8s.io

// Package v1 is the v1 version of the API
package v1

3. Generate code automatically

3.1. Generate types.go with the type-scaffold tool

3.1.1. Generate types.go

- Note:

- type-scaffold does not create a file. It prints the content of types.go to the console, and we have to copy it into types.go by hand

- kubebuilder, by contrast, generates the types.go file for us

- Run type-scaffold --kind=App to get the content of types.go

[root@master controller-tools-demo]# type-scaffold --kind=App
// AppSpec defines the desired state of App
type AppSpec struct {
	// INSERT ADDITIONAL SPEC FIELDS -- desired state of cluster
}

// AppStatus defines the observed state of App.
// It should always be reconstructable from the state of the cluster and/or outside world.
type AppStatus struct {
	// INSERT ADDITIONAL STATUS FIELDS -- observed state of cluster
}

// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object

// App is the Schema for the apps API
// +k8s:openapi-gen=true
type App struct {
	metav1.TypeMeta   `json:",inline"`
	metav1.ObjectMeta `json:"metadata,omitempty"`

	Spec   AppSpec   `json:"spec,omitempty"`
	Status AppStatus `json:"status,omitempty"`
}

// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object

// AppList contains a list of App
type AppList struct {
	metav1.TypeMeta `json:",inline"`
	metav1.ListMeta `json:"metadata,omitempty"`
	Items           []App `json:"items"`
}

- Create types.go in the v1 directory, copy the console output into it, and remember to add the import

package v1

import metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

// AppSpec defines the desired state of App
type AppSpec struct {
	// INSERT ADDITIONAL SPEC FIELDS -- desired state of cluster
}

// AppStatus defines the observed state of App.
// It should always be reconstructable from the state of the cluster and/or outside world.
type AppStatus struct {
	// INSERT ADDITIONAL STATUS FIELDS -- observed state of cluster
}

// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object

// App is the Schema for the apps API
// +k8s:openapi-gen=true
type App struct {
	metav1.TypeMeta   `json:",inline"`
	metav1.ObjectMeta `json:"metadata,omitempty"`

	Spec   AppSpec   `json:"spec,omitempty"`
	Status AppStatus `json:"status,omitempty"`
}

// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object

// AppList contains a list of App
type AppList struct {
	metav1.TypeMeta `json:",inline"`
	metav1.ListMeta `json:"metadata,omitempty"`
	Items           []App `json:"items"`
}

3.1.2. Edit types.go and add the code-generation tags

- Edit types.go and add the client-generation tags (such as // +genclient) above the structs. code-generator needs them to generate the client

- After the changes, the complete types.go looks like this:

package v1

import (
	appsv1 "k8s.io/api/apps/v1"
	corev1 "k8s.io/api/core/v1"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
)

// +genclient
// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object

// App is the Schema for the apps API
type App struct {
	metav1.TypeMeta   `json:",inline"`
	metav1.ObjectMeta `json:"metadata,omitempty"`

	Spec   AppSpec   `json:"spec,omitempty"`
	Status AppStatus `json:"status,omitempty"`
}

// AppSpec defines the desired state of App
type AppSpec struct {
	DeploymentSpec DeploymentTemplate `json:"deploymentTemplate,omitempty"`
	ServiceSpec    ServiceTemplate    `json:"serviceTemplate,omitempty"`
}

type DeploymentTemplate struct {
	Name     string `json:"name"`
	Image    string `json:"image"`
	Replicas int32  `json:"replicas"`
}

type ServiceTemplate struct {
	Name string `json:"name"`
}

// AppStatus defines the observed state of App.
// It should always be reconstructable from the state of the cluster and/or outside world.
type AppStatus struct {
	DeploymentStatus *appsv1.DeploymentStatus `json:"deploymentStatus,omitempty"`
	ServiceStatus    *corev1.ServiceStatus    `json:"serviceStatus,omitempty"`
}

// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object

// AppList contains a list of App
type AppList struct {
	metav1.TypeMeta `json:",inline"`
	metav1.ListMeta `json:"metadata,omitempty"`
	Items           []App `json:"items"`
}

3.2. Generate the deepcopy file with controller-gen

- Run the command

cd crd-controller-demo
controller-gen object paths=pkg/apis/appcontroller/v1/types.go

- When the command finishes, zz_generated.deepcopy.go has been generated in the v1 directory

[root@master crd-controller-demo]# controller-gen object paths=pkg/apis/appcontroller/v1/types.go
[root@master crd-controller-demo]# tree
.
├── go.mod
├── go.sum
├── hack
│   ├── boilerplate.go.txt
│   ├── tools.go
│   └── update-codegen.sh
├── pkg
│   └── apis
│       └── appcontroller
│           ├── register.go
│           └── v1
│               ├── docs.go
│               ├── register.go
│               ├── types.go
│               └── zz_generated.deepcopy.go

3.3. Generate the client, informer and lister with code-generator

3.3.1. Write update-codegen.sh

#!/usr/bin/env bash

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Exit immediately if any command fails
set -o errexit
# Exit immediately if an undefined variable is used
set -o nounset
# Exit immediately if any command in a pipeline fails
set -o pipefail

# Call the generate-groups.sh script (this script is run from the hack directory)
../vendor/k8s.io/code-generator/generate-groups.sh \
  "client,informer,lister" \
  crd-controller-demo/pkg/generated \
  crd-controller-demo/pkg/apis \
  appcontroller:v1 \
  --go-header-file $(pwd)/boilerplate.go.txt \
  --output-base $(pwd)/../../

3.3.2. Run update-codegen.sh

- This ends up creating a generated directory under pkg

go mod tidy

# Create the vendor directory
go mod vendor

# Make code-generator inside vendor executable
chmod -R 777 vendor

# Make the update-codegen.sh script in hack executable
chmod -R 777 hack

# Run the script to generate the code
$ cd hack && ./update-codegen.sh
Generating clientset for appcontroller:v1 at crd-controller-demo/pkg/generated/clientset
Generating listers for appcontroller:v1 at crd-controller-demo/pkg/generated/listers
Generating informers for appcontroller:v1 at crd-controller-demo/pkg/generated/informers

# The directory now looks like this
$ cd .. && tree -L 5
.
├── go.mod
├── go.sum
├── hack
│   ├── boilerplate.go.txt
│   ├── tools.go
│   └── update-codegen.sh
├── pkg
│   ├── apis
│   │   └── appcontroller
│   │       ├── register.go
│   │       └── v1
│   │           ├── docs.go
│   │           ├── register.go
│   │           ├── types.go
│   │           └── zz_generated.deepcopy.go
│   └── generated
│       ├── clientset
│       │   └── versioned
│       │       ├── clientset.go
│       │       ├── fake
│       │       ├── scheme
│       │       └── typed
│       ├── informers
│       │   └── externalversions
│       │       ├── appcontroller
│       │       ├── factory.go
│       │       ├── generic.go
│       │       └── internalinterfaces
│       └── listers
│           └── appcontroller
│               └── v1
└── vendor
    ├── github.com

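3.4. Generate the CRD with controller-gen

- Generate the CRD file

cd crd-controller-demo
controller-gen crd paths=./... output:crd:dir=artifacts/crd

- Remember to add the following entry to annotations: api-approved.kubernetes.io: "https://github.com/kubernetes/kubernetes/pull/78458". Our group, appcontroller.k8s.io, falls under the protected k8s.io domain, and without this annotation Kubernetes refuses to create the CRD

- Complete content of the CRD file: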

---
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  annotations:
    controller-gen.kubebuilder.io/version: (devel)
    api-approved.kubernetes.io: "https://github.com/kubernetes/kubernetes/pull/78458"
  creationTimestamp: null
  name: apps.appcontroller.k8s.io
spec:
  group: appcontroller.k8s.io
  names:
    kind: App
    listKind: AppList
    plural: apps
    singular: app
  scope: Namespaced
  versions:
  - name: v1
    schema:
      openAPIV3Schema:
        description: App is the Schema for the apps API
        properties:
          apiVersion:
            description: 'APIVersion defines the versioned schema of this representation of an object. Servers should convert recognized schemas to the latest internal value, and may reject unrecognized values. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources'
            type: string
          kind:
            description: 'Kind is a string value representing the REST resource this object represents. Servers may infer this from the endpoint the client submits requests to. Cannot be updated. In CamelCase. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds'
            type: string
          metadata:
            type: object
          spec:
            description: AppSpec defines the desired state of App
            properties:
              deploymentTemplate:
                properties:
                  image:
                    type: string
                  name:
                    type: string
                  replicas:
                    format: int32
                    type: integer
                required:
                - image
                - name
                - replicas
                type: object
              serviceTemplate:
                properties:
                  name:
                    type: string
                required:
                - name
                type: object
            type: object
          status:
            description: AppStatus defines the observed state of App. It should always be reconstructable from the state of the cluster and/or outside world.
            properties:
              deploymentStatus:
                description: DeploymentStatus is the most recently observed status of the Deployment.
                properties:
                  availableReplicas:
                    description: Total number of available pods (ready for at least minReadySeconds) targeted by this deployment.
                    format: int32
                    type: integer
                  collisionCount:
                    description: Count of hash collisions for the Deployment. The Deployment controller uses this field as a collision avoidance mechanism when it needs to create the name for the newest ReplicaSet.
                    format: int32
                    type: integer
                  conditions:
                    description: Represents the latest available observations of a deployment's current state.
                    items:
                      description: DeploymentCondition describes the state of a deployment at a certain point.
                      properties:
                        lastTransitionTime:
                          description: Last time the condition transitioned from one status to another.
                          format: date-time
                          type: string
                        lastUpdateTime:
                          description: The last time this condition was updated.
                          format: date-time
                          type: string
                        message:
                          description: A human readable message indicating details about the transition.
                          type: string
                        reason:
                          description: The reason for the condition's last transition.
                          type: string
                        status:
                          description: Status of the condition, one of True, False, Unknown.
                          type: string
                        type:
                          description: Type of deployment condition.
                          type: string
                      required:
                      - status
                      - type
                      type: object
                    type: array
                  observedGeneration:
                    description: The generation observed by the deployment controller.
                    format: int64
                    type: integer
                  readyReplicas:
                    description: readyReplicas is the number of pods targeted by this Deployment with a Ready Condition.
                    format: int32
                    type: integer
                  replicas:
                    description: Total number of non-terminated pods targeted by this deployment (their labels match the selector).
                    format: int32
                    type: integer
                  unavailableReplicas:
                    description: Total number of unavailable pods targeted by this deployment. This is the total number of pods that are still required for the deployment to have 100% available capacity. They may either be pods that are running but not yet available or pods that still have not been created.
                    format: int32
                    type: integer
                  updatedReplicas:
                    description: Total number of non-terminated pods targeted by this deployment that have the desired template spec.
                    format: int32
                    type: integer
                type: object
              serviceStatus:
                description: ServiceStatus represents the current status of a service.
                properties:
                  conditions:
                    description: Current service state
                    items:
                      description: "Condition contains details for one aspect of the current state of this API Resource. --- This struct is intended for direct use as an array at the field path .status.conditions. \ For example, \n type FooStatus struct{ // Represents the observations of a foo's current state. // Known .status.conditions.type are: \"Available\", \"Progressing\", and \"Degraded\" // +patchMergeKey=type // +patchStrategy=merge // +listType=map // +listMapKey=type Conditions []metav1.Condition `json:\"conditions,omitempty\" patchStrategy:\"merge\" patchMergeKey:\"type\" protobuf:\"bytes,1,rep,name=conditions\"` \n // other fields }"
                      properties:
                        lastTransitionTime:
                          description: lastTransitionTime is the last time the condition transitioned from one status to another. This should be when the underlying condition changed. If that is not known, then using the time when the API field changed is acceptable.
                          format: date-time
                          type: string
                        message:
                          description: message is a human readable message indicating details about the transition. This may be an empty string.
                          maxLength: 32768
                          type: string
                        observedGeneration:
                          description: observedGeneration represents the .metadata.generation that the condition was set based upon. For instance, if .metadata.generation is currently 12, but the .status.conditions[x].observedGeneration is 9, the condition is out of date with respect to the current state of the instance.
                          format: int64
                          minimum: 0
                          type: integer
                        reason:
                          description: reason contains a programmatic identifier indicating the reason for the condition's last transition. Producers of specific condition types may define expected values and meanings for this field, and whether the values are considered a guaranteed API. The value should be a CamelCase string. This field may not be empty.
                          maxLength: 1024
                          minLength: 1
                          pattern: ^[A-Za-z]([A-Za-z0-9_,:]*[A-Za-z0-9_])?$
                          type: string
                        status:
                          description: status of the condition, one of True, False, Unknown.
                          enum:
                          - "True"
                          - "False"
                          - Unknown
                          type: string
                        type:
                          description: type of condition in CamelCase or in foo.example.com/CamelCase. --- Many .condition.type values are consistent across resources like Available, but because arbitrary conditions can be useful (see .node.status.conditions), the ability to deconflict is important. The regex it matches is (dns1123SubdomainFmt/)?(qualifiedNameFmt)
                          maxLength: 316
                          pattern: ^([a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*/)?(([A-Za-z0-9][-A-Za-z0-9_.]*)?[A-Za-z0-9])$
                          type: string
                      required:
                      - lastTransitionTime
                      - message
                      - reason
                      - status
                      - type
                      type: object
                    type: array
                    x-kubernetes-list-map-keys:
                    - type
                    x-kubernetes-list-type: map
                  loadBalancer:
                    description: LoadBalancer contains the current status of the load-balancer, if one is present.
                    properties:
                      ingress:
                        description: Ingress is a list containing ingress points for the load-balancer. Traffic intended for the service should be sent to these ingress points.
                        items:
                          description: 'LoadBalancerIngress represents the status of a load-balancer ingress point: traffic intended for the service should be sent to an ingress point.'
                          properties:
                            hostname:
                              description: Hostname is set for load-balancer ingress points that are DNS based (typically AWS load-balancers)
                              type: string
                            ip:
                              description: IP is set for load-balancer ingress points that are IP based (typically GCE or OpenStack load-balancers)
                              type: string
                            ipMode:
                              description: IPMode specifies how the load-balancer IP behaves, and may only be specified when the ip field is specified. Setting this to "VIP" indicates that traffic is delivered to the node with the destination set to the load-balancer's IP and port. Setting this to "Proxy" indicates that traffic is delivered to the node or pod with the destination set to the node's IP and node port or the pod's IP and port. Service implementations may use this information to adjust traffic routing.
                              type: string
                            ports:
                              description: Ports is a list of records of service ports If used, every port defined in the service should have an entry in it
                              items:
                                properties:
                                  error:
                                    description: 'Error is to record the problem with the service port The format of the error shall comply with the following rules: - built-in error values shall be specified in this file and those shall use CamelCase names - cloud provider specific error values must have names that comply with the format foo.example.com/CamelCase. --- The regex it matches is (dns1123SubdomainFmt/)?(qualifiedNameFmt)'
                                    maxLength: 316
                                    pattern: ^([a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*/)?(([A-Za-z0-9][-A-Za-z0-9_.]*)?[A-Za-z0-9])$
                                    type: string
                                  port:
                                    description: Port is the port number of the service port of which status is recorded here
                                    format: int32
                                    type: integer
                                  protocol:
                                    default: TCP
                                    description: 'Protocol is the protocol of the service port of which status is recorded here The supported values are: "TCP", "UDP", "SCTP"'
                                    type: string
                                required:
                                - port
                                - protocol
                                type: object
                              type: array
                              x-kubernetes-list-type: atomic
                          type: object
                        type: array
                    type: object
                type: object
            type: object
        type: object
    served: true
    storage: true

4. Register the v1 CRD types by hand

- After the client code has been generated, we still have to register the v1 CRD types by hand before the client can be used. Otherwise compilation fails with errors such as undefined: v1.AddToScheme and undefined: v1.Resource (the package qualifier in the message depends on the import alias used in the generated code)

- AddToScheme and Resource are the two functions the generated client code uses for registration

- Write v1/register.go

package v1

import (
	"crd-controller-demo/pkg/apis/appcontroller"

	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/apimachinery/pkg/runtime"
	"k8s.io/apimachinery/pkg/runtime/schema"
)

// SchemeGroupVersion is group version used to register these objects
var SchemeGroupVersion = schema.GroupVersion{Group: appcontroller.GroupName, Version: "v1"}

// Kind takes an unqualified kind and returns back a Group qualified GroupKind
func Kind(kind string) schema.GroupKind {
	return SchemeGroupVersion.WithKind(kind).GroupKind()
}

// Resource takes an unqualified resource and returns a Group qualified GroupResource
func Resource(resource string) schema.GroupResource {
	return SchemeGroupVersion.WithResource(resource).GroupResource()
}

var (
	// SchemeBuilder initializes a scheme builder
	SchemeBuilder = runtime.NewSchemeBuilder(addKnownTypes)
	// AddToScheme is a global function that registers this API group & version to a scheme
	AddToScheme = SchemeBuilder.AddToScheme
)

// Adds the list of known types to Scheme.
func addKnownTypes(scheme *runtime.Scheme) error {
	scheme.AddKnownTypes(SchemeGroupVersion,
		&App{},
		&AppList{},
	)
	metav1.AddToGroupVersion(scheme, SchemeGroupVersion)
	return nil
}

5. Develop the custom controller with client-go

5.1. Create utils/consts.go

- Under pkg, create a utils directory containing a consts.go file that holds a few constants used later when writing the controller

package utils

const ControllerAgentName = "app-controller"
const WorkNum = 5
const MaxRetry = 10

const (
	// SuccessSynced is used as part of the Event 'reason' when a App is synced
	SuccessSynced = "Synced"
	// ErrResourceExists is used as part of the Event 'reason' when a App fails
	// to sync due to a Deployment of the same name already existing.
	ErrResourceExists = "ErrResourceExists"

	// MessageResourceExists is the message used for Events when a resource
	// fails to sync due to a Deployment already existing
	MessageResourceExists = "Resource %q already exists and is not managed by App"
	// MessageResourceSynced is the message used for an Event fired when a App
	// is synced successfully
	MessageResourceSynced = "App synced successfully"
)

5.2. Create the controller in pkg/controller/controller.go

5.2.1. The complete controller.go file first

package controller

import (
	"context"
	appcontrollerv1 "crd-controller-demo/pkg/apis/appcontroller/v1"
	clientset "crd-controller-demo/pkg/generated/clientset/versioned"
	"crd-controller-demo/pkg/generated/clientset/versioned/scheme"
	informersv1 "crd-controller-demo/pkg/generated/informers/externalversions/appcontroller/v1"
	listerv1 "crd-controller-demo/pkg/generated/listers/appcontroller/v1"
	"crd-controller-demo/pkg/utils"
	"fmt"
	appsv1 "k8s.io/api/apps/v1"
	corev1 "k8s.io/api/core/v1"
	"k8s.io/apimachinery/pkg/api/errors"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	utilruntime "k8s.io/apimachinery/pkg/util/runtime"
	"k8s.io/apimachinery/pkg/util/wait"
	appsinformersv1 "k8s.io/client-go/informers/apps/v1"
	coreinformersv1 "k8s.io/client-go/informers/core/v1"
	"k8s.io/client-go/kubernetes"
	typedcorev1 "k8s.io/client-go/kubernetes/typed/core/v1"
	appslisterv1 "k8s.io/client-go/listers/apps/v1"
	corelisterv1 "k8s.io/client-go/listers/core/v1"
	"k8s.io/client-go/tools/cache"
	"k8s.io/client-go/tools/record"
	"k8s.io/client-go/util/workqueue"
	"k8s.io/klog/v2"
	"reflect"
	"time"
)

type Controller struct {
	// kubeClientset is the clientset for all built-in Kubernetes resources
	kubeClientset kubernetes.Interface
	// appClientset is the clientset generated for the apps resource
	appClientset clientset.Interface

	// deploymentsLister reads Deployments from the local cache
	deploymentsLister appslisterv1.DeploymentLister
	// servicesLister reads Services from the local cache
	servicesLister corelisterv1.ServiceLister
	// appsLister reads Apps from the local cache
	appsLister listerv1.AppLister

	// deploymentsSync reports whether the Deployment cache has synced
	deploymentsSync cache.InformerSynced
	// servicesSync reports whether the Service cache has synced
	servicesSync cache.InformerSynced
	// appsSync reports whether the App cache has synced
	appsSync cache.InformerSynced

	// workqueue holds the keys (usually namespace/name) of resources waiting to be processed
	workqueue workqueue.RateLimitingInterface
	// recorder records events
	recorder record.EventRecorder
}

func NewController(kubeclientset kubernetes.Interface,
	appclientset clientset.Interface,
	deploymentInformer appsinformersv1.DeploymentInformer,
	serviceInformer coreinformersv1.ServiceInformer,
	appInformer informersv1.AppInformer) *Controller {

	// Add the types of the generated App clientset to the Scheme used by the event recorder below
	utilruntime.Must(scheme.AddToScheme(scheme.Scheme))
	klog.V(4).Info("Creating event broadcaster")
	// Create an event broadcaster, which fans events out to its listeners
	eventBroadcaster := record.NewBroadcaster()
	// Also write events to the log in structured form
	eventBroadcaster.StartStructuredLogging(0)
	// Configure the broadcaster to record events to the given EventSink
	eventBroadcaster.StartRecordingToSink(&typedcorev1.EventSinkImpl{Interface: kubeclientset.CoreV1().Events("")})
	// Create an event recorder that sends events to the broadcaster configured above
	recorder := eventBroadcaster.NewRecorder(scheme.Scheme, corev1.EventSource{Component: utils.ControllerAgentName})

	// Create the Controller object
	c := &Controller{
		kubeClientset:     kubeclientset,
		appClientset:      appclientset,
		deploymentsLister: deploymentInformer.Lister(),
		servicesLister:    serviceInformer.Lister(),
		appsLister:        appInformer.Lister(),
		deploymentsSync:   deploymentInformer.Informer().HasSynced,
		servicesSync:      serviceInformer.Informer().HasSynced,
		appsSync:          appInformer.Informer().HasSynced,
		workqueue:         workqueue.NewNamedRateLimitingQueue(workqueue.DefaultControllerRateLimiter(), "Apps"),
		recorder:          recorder,
	}

	// Set the ResourceEventHandler for the App informer
	klog.Info("Setting up event handlers")
	appInformer.Informer().AddEventHandler(cache.ResourceEventHandlerFuncs{
		AddFunc:    c.AddApp,
		UpdateFunc: c.UpdateApp,
	})

	// Set the ResourceEventHandler for the Deployment informer
	deploymentInformer.Informer().AddEventHandler(cache.ResourceEventHandlerFuncs{
		DeleteFunc: c.DeleteDeployment,
	})

	// Set the ResourceEventHandler for the Service informer
	serviceInformer.Informer().AddEventHandler(cache.ResourceEventHandlerFuncs{
		DeleteFunc: c.DeleteService,
	})

	// Return the controller instance
	return c
}

func (c *Controller) enqueue(obj interface{}) {
	key, err := cache.MetaNamespaceKeyFunc(obj)
	if err != nil {
		utilruntime.HandleError(err)
		return
	}
	c.workqueue.Add(key)
}

func (c *Controller) AddApp(obj interface{}) {
	c.enqueue(obj)
}

func (c *Controller) UpdateApp(oldObj, newObj interface{}) {
	if reflect.DeepEqual(oldObj, newObj) {
		key, _ := cache.MetaNamespaceKeyFunc(oldObj)
		klog.V(4).Infof("UpdateApp %s: %s", key, "no change")
		return
	}
	c.enqueue(newObj)
}

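func (c *Controller) DeleteDeployment(obj interface{}) {
	deploy, ok := obj.(*appsv1.Deployment)
	if !ok {
		// obj may be a cache.DeletedFinalStateUnknown tombstone; it is skipped here
		return
	}
	ownerReference := metav1.GetControllerOf(deploy)
	if ownerReference == nil || ownerReference.Kind != "App" {
		return
	}
	// Enqueue the owning App, not the deleted Deployment: syncApp looks the key up as an App
	c.workqueue.Add(deploy.Namespace + "/" + ownerReference.Name)
}

func (c *Controller) DeleteService(obj interface{}) {
	service, ok := obj.(*corev1.Service)
	if !ok {
		// obj may be a cache.DeletedFinalStateUnknown tombstone; it is skipped here
		return
	}
	ownerReference := metav1.GetControllerOf(service)
	if ownerReference == nil || ownerReference.Kind != "App" {
		return
	}
	// Enqueue the owning App, not the deleted Service: syncApp looks the key up as an App
	c.workqueue.Add(service.Namespace + "/" + ownerReference.Name)
}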

func (c *Controller) Run(workerNum int, stopCh <-chan struct{}) error {
	// If the program panics, HandleCrash() logs and reports the crash
	defer utilruntime.HandleCrash()
	// Shut the queue down when the controller exits
	defer c.workqueue.ShutDown()

	klog.V(4).Info("Starting App Controller")

	klog.V(4).Info("Waiting for informer cache to sync")
	if ok := cache.WaitForCacheSync(stopCh, c.appsSync, c.deploymentsSync, c.servicesSync); !ok {
		return fmt.Errorf("failed to wait for caches to sync")
	}

	klog.V(4).Info("Starting workers")
	for i := 0; i < workerNum; i++ {
		go wait.Until(c.worker, time.Minute, stopCh)
	}
	klog.V(4).Info("Started workers")

	<-stopCh
	klog.V(4).Info("Shutting down workers")

	return nil
}

func (c *Controller) worker() {
	for c.processNextWorkItem() {
	}
}

func (c *Controller) processNextWorkItem() bool {
	// Take one item from the workqueue
	item, shutdown := c.workqueue.Get()
	// If the queue has been shut down, return false
	if shutdown {
		return false
	}

	// Always mark the item as done when we finish with it
	defer c.workqueue.Done(item)

	// Convert the item to a key
	key, ok := item.(string)
	if !ok {
		klog.Warningf("failed convert item [%v] to string", item)
		c.workqueue.Forget(item)
		return true
	}

	// Reconcile the App identified by key. This is where the core logic lives
	if err := c.syncApp(key); err != nil {
		klog.Errorf("failed to syncApp [%s], error: [%s]", key, err.Error())
		c.handleError(key, err)
		return true
	}

	// The sync succeeded, so reset the retry count kept for this key
	c.workqueue.Forget(key)
	return true
}

func (c *Controller) syncApp(key string) error {
	// Split the key into namespace and name
	namespace, name, err := cache.SplitMetaNamespaceKey(key)
	if err != nil {
		return err
	}

	// Fetch the App for this key from the informer cache
	app, err := c.appsLister.Apps(namespace).Get(name)
	if err != nil {
		if errors.IsNotFound(err) {
			// The App has been deleted, so there is nothing to reconcile and no reason to retry
			utilruntime.HandleError(fmt.Errorf("app [%s] in work queue no longer exists", key))
			return nil
		}
		return err
	}
	// Objects from the informer cache must not be modified; work on a copy
	app = app.DeepCopy()

	// Take the deployment template from the App spec
	deploymentTemplate := app.Spec.DeploymentSpec
	// If the App has a deployment template
	if deploymentTemplate.Name != "" {
		// Try to get the corresponding Deployment from the cache
		deploy, err := c.deploymentsLister.Deployments(namespace).Get(deploymentTemplate.Name)
		if err != nil {
			// If it was not found
			if errors.IsNotFound(err) {
				klog.V(4).Infof("starting to create deployment [%s] in namespace [%s]", deploymentTemplate.Name, namespace)
				// Build a Deployment object and create it through the apiserver with kubeClientset
				deploy = newDeployment(deploymentTemplate, app)
				_, err := c.kubeClientset.AppsV1().Deployments(namespace).Create(context.TODO(), deploy, metav1.CreateOptions{})
				if err != nil {
					return fmt.Errorf("failed to create deployment [%s] in namespace [%s], error: [%v]", deploymentTemplate.Name, namespace, err)
				}
				// Fetch the latest Deployment from the apiserver, because its status is used below.
				// The informer cache cannot be used here: it has not yet seen the new Deployment
				deploy, err = c.kubeClientset.AppsV1().Deployments(namespace).Get(context.TODO(), deploymentTemplate.Name, metav1.GetOptions{})
				if err != nil {
					return fmt.Errorf("failed to get deployment [%s] in namespace [%s], error: [%v]", deploymentTemplate.Name, namespace, err)
				}
			} else {
				// deploy is nil on this path, so the names come from the template
				return fmt.Errorf("failed to get deployment [%s] in namespace [%s], error: [%v]", deploymentTemplate.Name, namespace, err)
			}
		}
		// If the Deployment is not controlled by this App, report an error
		if !metav1.IsControlledBy(deploy, app) {
			msg := fmt.Sprintf(utils.MessageResourceExists, deploy.Name)
			c.recorder.Event(app, corev1.EventTypeWarning, utils.ErrResourceExists, msg)
			return fmt.Errorf("%s", msg)
		}
		// update deploy status
		app.Status.DeploymentStatus = &deploy.Status
	}

	// Take the service template from the App spec
	serviceTemplate := app.Spec.ServiceSpec
	// If the App has a service template
	if serviceTemplate.Name != "" {
		// Try to get the corresponding Service from the cache
		service, err := c.servicesLister.Services(namespace).Get(serviceTemplate.Name)
		if err != nil {
			// If it was not found
			if errors.IsNotFound(err) {
				klog.V(4).Infof("starting to create service [%s] in namespace [%s]", serviceTemplate.Name, namespace)
				// Build a Service object and create it through the apiserver with kubeClientset
				service = newService(serviceTemplate, app)
				_, err := c.kubeClientset.CoreV1().Services(namespace).Create(context.TODO(), service, metav1.CreateOptions{})
				if err != nil {
					return fmt.Errorf("failed to create service [%s] in namespace [%s], error: [%v]", serviceTemplate.Name, namespace, err)
				}
				// Fetch the latest Service from the apiserver, because its status is used below.
				// The informer cache cannot be used here: it has not yet seen the new Service
				service, err = c.kubeClientset.CoreV1().Services(namespace).Get(context.TODO(), serviceTemplate.Name, metav1.GetOptions{})
				if err != nil {
					return fmt.Errorf("failed to get service [%s] in namespace [%s], error: [%v]", serviceTemplate.Name, namespace, err)
				}
			} else {
				// service is nil on this path, so the names come from the template
				return fmt.Errorf("failed to get service [%s] in namespace [%s], error: [%v]", serviceTemplate.Name, namespace, err)
			}
		}
		// If the Service is not controlled by this App, report an error
		if !metav1.IsControlledBy(service, app) {
			msg := fmt.Sprintf(utils.MessageResourceExists, service.Name)
			c.recorder.Event(app, corev1.EventTypeWarning, utils.ErrResourceExists, msg)
			return fmt.Errorf("%s", msg)
		}
		// update service status
		app.Status.ServiceStatus = &service.Status
	}

	// Both templates are handled; write the updated AppStatus back to the cluster
	_, err = c.appClientset.AppcontrollerV1().Apps(namespace).Update(context.TODO(), app, metav1.UpdateOptions{})
	if err != nil {
		return fmt.Errorf("failed to update app [%s], error: [%v]", key, err)
	}

	// Record an event
	c.recorder.Event(app, corev1.EventTypeNormal, utils.SuccessSynced, utils.MessageResourceSynced)

	return nil
}

// newDeployment builds a Deployment object from the template
func newDeployment(template appcontrollerv1.DeploymentTemplate, app *appcontrollerv1.App) *appsv1.Deployment {
	d := &appsv1.Deployment{
		TypeMeta: metav1.TypeMeta{
			Kind:       "Deployment",
			APIVersion: "apps/v1",
		},
		ObjectMeta: metav1.ObjectMeta{
			Name: template.Name,
		},
		Spec: appsv1.DeploymentSpec{
			// The Selector must match the pod Labels
			Selector: &metav1.LabelSelector{
				MatchLabels: map[string]string{
					"app-key": "app-value",
				},
			},
			Replicas: &template.Replicas,
			Template: corev1.PodTemplateSpec{
				// The pod labels are not user-configurable here; a default is used
				ObjectMeta: metav1.ObjectMeta{
					Labels: map[string]string{
						"app-key": "app-value",
					},
				},
				Spec: corev1.PodSpec{
					Containers: []corev1.Container{
						{
							Name:  "app-deploy-container",
							Image: template.Image,
						},
					},
				},
			},
		},
	}
	// Set the App as the owner (controller reference) of the Deployment
	d.OwnerReferences = []metav1.OwnerReference{
		*metav1.NewControllerRef(app, appcontrollerv1.SchemeGroupVersion.WithKind("App")),
	}
	return d
}

// newService builds a Service object from the template
func newService(template appcontrollerv1.ServiceTemplate, app *appcontrollerv1.App) *corev1.Service {
	s := &corev1.Service{
		TypeMeta: metav1.TypeMeta{
			Kind:       "Service",
			APIVersion: "v1",
		},
		ObjectMeta: metav1.ObjectMeta{
			Name: template.Name,
		},
		Spec: corev1.ServiceSpec{
			// The Selector must match the pod Labels
			Selector: map[string]string{
				"app-key": "app-value",
			},
			Ports: []corev1.ServicePort{
				{
					Name: "app-service",
					// The Service port is hard-coded to 8080. This is only for learning CRDs; in real code it could be a field of AppSpec
					Port: 8080,
				},
			},
		},
	}
	// Set the App as the owner (controller reference) of the Service
	s.OwnerReferences = []metav1.OwnerReference{
		*metav1.NewControllerRef(app, appcontrollerv1.SchemeGroupVersion.WithKind("App")),
	}
	return s
}

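// handleError requeues a failed key until the retry limit is reached
func (c *Controller) handleError(key string, err error) {
	// If this key has been retried fewer than the maximum number of times, add it back to the queue
	if c.workqueue.NumRequeues(key) < utils.MaxRetry {
		c.workqueue.AddRateLimited(key)
		return
	}
	// Report the error through the shared runtime error handler
	utilruntime.HandleError(err)
	// Stop retrying this key
	c.workqueue.Forget(key)
}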

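5.2.2.Controller struct explained

- Create a Controller struct. Our controller operates on three kinds of resources (App, Deployment, Service), so the Controller needs the following parts:

- kubernetes clientset: talks to the apiserver to fetch the latest deployment and service information

- app clientset: talks to the apiserver to fetch the latest apps information; this clientset is generated by code-generator

- deployment Lister: reads deployment information from the informer cache, to avoid hitting the apiserver too often

- service Lister: reads service information from the informer cache, to avoid hitting the apiserver too often

- app Lister: reads app information from the informer cache, to avoid hitting the apiserver too often

- deployment HasSynced: checks whether the deployments resource has finished syncing

- service HasSynced: checks whether the services resource has finished syncing

- app HasSynced: checks whether the apps resource has finished syncing

- workqueue: a queue that stores the keys (usually namespace/name) of the resources waiting to be processed

- recorder: the event recorder; the events it records can be retrieved with kubectl get event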

- The Controller struct

type Controller struct {
	// kubeClientset is the clientset for all built-in kubernetes resources
	kubeClientset kubernetes.Interface
	// appClientset is the clientset generated for the apps resource
	appClientset clientset.Interface

	// deploymentsLister queries deployment resources in the local cache
	deploymentsLister appslisterv1.DeploymentLister
	// servicesLister queries service resources in the local cache
	servicesLister corelisterv1.ServiceLister
	// appsLister queries apps resources in the local cache
	appsLister listerv1.AppLister

	// deploymentsSync checks whether the deployments resource has finished syncing
	deploymentsSync cache.InformerSynced
	// servicesSync checks whether the services resource has finished syncing
	servicesSync cache.InformerSynced
	// appsSync checks whether the apps resource has finished syncing
	appsSync cache.InformerSynced

	// workqueue stores the keys (usually namespace/name) of the resources waiting to be processed
	workqueue workqueue.RateLimitingInterface
	// recorder is the event recorder
	recorder record.EventRecorder
}

- Provide a NewController function

func NewController(kubeclientset kubernetes.Interface,
	appclientset clientset.Interface,
	deploymentInformer appsinformersv1.DeploymentInformer,
	serviceInformer coreinformersv1.ServiceInformer,
	appInformer informersv1.AppInformer) *Controller {

	// Add the Scheme of the clientset generated for the apps resource to the global Scheme
	utilruntime.Must(scheme.AddToScheme(scheme.Scheme))

	klog.V(4).Info("Creating event broadcaster")
	// Create an event broadcaster, which fans events out to the registered watchers
	eventBroadcaster := record.NewBroadcaster()
	// Write events out as structured logs
	eventBroadcaster.StartStructuredLogging(0)
	// Configure the broadcaster to record events to the given EventSink
	eventBroadcaster.StartRecordingToSink(&typedcorev1.EventSinkImpl{Interface: kubeclientset.CoreV1().Events("")})
	// Create an event recorder that sends events to the broadcaster configured above
	recorder := eventBroadcaster.NewRecorder(scheme.Scheme, corev1.EventSource{Component: utils.ControllerAgentName})

	// Create the Controller object
	c := &Controller{
		kubeClientset:     kubeclientset,
		appClientset:      appclientset,
		deploymentsLister: deploymentInformer.Lister(),
		servicesLister:    serviceInformer.Lister(),
		appsLister:        appInformer.Lister(),
		deploymentsSync:   deploymentInformer.Informer().HasSynced,
		servicesSync:      serviceInformer.Informer().HasSynced,
		appsSync:          appInformer.Informer().HasSynced,
		workqueue:         workqueue.NewNamedRateLimitingQueue(workqueue.DefaultControllerRateLimiter(), "Apps"),
		recorder:          recorder,
	}

	// Register the ResourceEventHandler for the AppInformer
	klog.Info("Setting up event handlers")
	appInformer.Informer().AddEventHandler(cache.ResourceEventHandlerFuncs{
		AddFunc:    c.AddApp,
		UpdateFunc: c.UpdateApp,
	})

	// Register the ResourceEventHandler for the DeploymentInformer
	deploymentInformer.Informer().AddEventHandler(cache.ResourceEventHandlerFuncs{
		DeleteFunc: c.DeleteDeployment,
	})

	// Register the ResourceEventHandler for the ServiceInformer
	serviceInformer.Informer().AddEventHandler(cache.ResourceEventHandlerFuncs{
		DeleteFunc: c.DeleteService,
	})

	// Return the controller instance
	return c
}
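The workqueue stores string keys rather than objects. As a rough illustration of the namespace/name format, here is a simplified stand-in for what cache.MetaNamespaceKeyFunc and cache.SplitMetaNamespaceKey do; makeKey and splitKey are hypothetical helpers written for this sketch, not client-go functions.

```go
package main

import (
	"fmt"
	"strings"
)

// makeKey builds a "namespace/name" key. Objects without a namespace
// (cluster-scoped resources) use the bare name.
func makeKey(namespace, name string) string {
	if namespace == "" {
		return name
	}
	return namespace + "/" + name
}

// splitKey reverses makeKey and rejects keys with more than one separator.
func splitKey(key string) (namespace, name string, err error) {
	parts := strings.Split(key, "/")
	switch len(parts) {
	case 1:
		return "", parts[0], nil
	case 2:
		return parts[0], parts[1], nil
	}
	return "", "", fmt.Errorf("unexpected key format: %q", key)
}

func main() {
	key := makeKey("tcs", "test-app")
	ns, name, err := splitKey(key)
	fmt.Println(key, ns, name, err)
}
```

Queuing keys instead of objects means a worker always re-reads the current object from the cache when it processes the key.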

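5.2.3.ResourceEventHandler methods of the Controller explained

- There are four ResourceEventHandler methods, with the following content:

- Their main job is to put the key of the resource to be reconciled into the workqueue. For the two delete handlers, that resource is the App that owns the deleted Deployment or Service, because syncApp resolves every key as an App

// enqueue computes the namespace/name key of obj and adds it to the workqueue
func (c *Controller) enqueue(obj interface{}) {
	key, err := cache.MetaNamespaceKeyFunc(obj)
	if err != nil {
		utilruntime.HandleError(err)
		return
	}
	c.workqueue.Add(key)
}

func (c *Controller) AddApp(obj interface{}) {
	c.enqueue(obj)
}

func (c *Controller) UpdateApp(oldObj, newObj interface{}) {
	// Skip updates that changed nothing (for example periodic resyncs)
	if reflect.DeepEqual(oldObj, newObj) {
		key, _ := cache.MetaNamespaceKeyFunc(oldObj)
		klog.V(4).Infof("UpdateApp %s: %s", key, "no change")
		return
	}
	c.enqueue(newObj)
}

func (c *Controller) DeleteDeployment(obj interface{}) {
	// obj may be a cache.DeletedFinalStateUnknown tombstone when the delete event was missed;
	// skip it instead of panicking on the type assertion
	deploy, ok := obj.(*appsv1.Deployment)
	if !ok {
		return
	}
	ownerReference := metav1.GetControllerOf(deploy)
	if ownerReference == nil || ownerReference.Kind != "App" {
		return
	}
	// Enqueue the owning App, not the Deployment itself: syncApp looks the key up as an App
	c.workqueue.Add(deploy.Namespace + "/" + ownerReference.Name)
}

func (c *Controller) DeleteService(obj interface{}) {
	// See DeleteDeployment for why the type assertion is checked
	service, ok := obj.(*corev1.Service)
	if !ok {
		return
	}
	ownerReference := metav1.GetControllerOf(service)
	if ownerReference == nil || ownerReference.Kind != "App" {
		return
	}
	// Enqueue the owning App, not the Service itself
	c.workqueue.Add(service.Namespace + "/" + ownerReference.Name)
}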
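Since syncApp treats every key as an App, a handler for a child object needs the key of the App that controls it. The mapping can be sketched with plain structs; ownerRef and object below are hypothetical, minimal stand-ins for metav1.OwnerReference and a Kubernetes object, and ownerAppKey is a helper invented for this illustration.

```go
package main

import "fmt"

// ownerRef is a hypothetical stand-in for metav1.OwnerReference.
type ownerRef struct {
	Kind       string
	Name       string
	Controller bool
}

// object is a hypothetical stand-in for a Deployment or Service.
type object struct {
	Namespace string
	Name      string
	Owners    []ownerRef
}

// ownerAppKey returns the "namespace/name" key of the App that controls obj,
// and false when obj has no controlling owner of kind App.
func ownerAppKey(obj object) (string, bool) {
	for _, ref := range obj.Owners {
		if ref.Controller && ref.Kind == "App" {
			return obj.Namespace + "/" + ref.Name, true
		}
	}
	return "", false
}

func main() {
	deploy := object{
		Namespace: "tcs",
		Name:      "app-deploy",
		Owners:    []ownerRef{{Kind: "App", Name: "test-app", Controller: true}},
	}
	if key, ok := ownerAppKey(deploy); ok {
		fmt.Println("enqueue", key) // prints "enqueue tcs/test-app"
	}
}
```

Owned objects live in the same namespace as their owner, so the child's namespace plus the owner's name is enough to rebuild the App key.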

5.2.4.The Controller start method Run() explained

- Run starts the Controller and launches workerNum workers

func (c *Controller) Run(workerNum int, stopCh <-chan struct{}) error {
	// If the program crashes with an uncaught panic, HandleCrash logs and reports it
	defer utilruntime.HandleCrash()
	// Shut the queue down when the controller exits
	defer c.workqueue.ShutDown()

	klog.V(4).Info("Starting App Controller")

	// Wait until all three informer caches have synced before starting any worker
	klog.V(4).Info("Waiting for informer cache to sync")
	if ok := cache.WaitForCacheSync(stopCh, c.appsSync, c.deploymentsSync, c.servicesSync); !ok {
		return fmt.Errorf("failed to wait for caches to sync")
	}

	klog.V(4).Info("Starting workers")
	for i := 0; i < workerNum; i++ {
		// wait.Until restarts c.worker after the given period whenever it returns, until stopCh is closed
		go wait.Until(c.worker, time.Minute, stopCh)
	}
	klog.V(4).Info("Started workers")

	// Block until a stop signal arrives
	<-stopCh
	klog.V(4).Info("Shutting down workers")

	return nil
}

5.2.5.worker explained

- The worker carries the core reconcile logic

func (c *Controller) worker() {
	for c.processNextWorkItem() {
	}
}

func (c *Controller) processNextWorkItem() bool {
	// Get one item from the workqueue
	item, shutdown := c.workqueue.Get()
	// If the queue has been shut down, return false
	if shutdown {
		return false
	}
	// Always mark the item as done when we are finished with it
	defer c.workqueue.Done(item)

	// Convert the item to a key
	key, ok := item.(string)
	if !ok {
		klog.Warningf("failed convert item [%v] to string", item)
		c.workqueue.Forget(item)
		return true
	}

	// Reconcile the App identified by key. This is where the core reconcile logic lives
	if err := c.syncApp(key); err != nil {
		klog.Errorf("failed to syncApp [%s], error: [%s]", key, err.Error())
		c.handleError(key, err)
		return true
	}
	// On success, clear the retry counter so that a later failure starts counting from zero
	c.workqueue.Forget(key)
	return true
}

// syncApp holds the core reconcile logic for an App resource
func (c *Controller) syncApp(key string) error {
	// Split the key into namespace and name
	namespace, name, err := cache.SplitMetaNamespaceKey(key)
	if err != nil {
		return err
	}

	// Get the app for this key from the informer cache.
	// Note: objects from the cache should be treated as read-only; production code would work on app.DeepCopy()
	app, err := c.appsLister.Apps(namespace).Get(name)
	if err != nil {
		if errors.IsNotFound(err) {
			return fmt.Errorf("app [%s] in work queue no longer exists", key)
		}
		return err
	}

	// Take the deploymentSpec part of the app
	deploymentTemplate := app.Spec.DeploymentSpec
	// If the app's deploymentTemplate is not empty
	if deploymentTemplate.Name != "" {
		// Try to get the corresponding deployment from the cache
		deploy, err := c.deploymentsLister.Deployments(namespace).Get(deploymentTemplate.Name)
		if err != nil {
			// Not found
			if errors.IsNotFound(err) {
				klog.V(4).Infof("starting to create deployment [%s] in namespace [%s]", deploymentTemplate.Name, namespace)
				// Build a deployment object, then create it in the apiserver through kubeClientset
				deploy = newDeployment(deploymentTemplate, app)
				_, err := c.kubeClientset.AppsV1().Deployments(namespace).Create(context.TODO(), deploy, metav1.CreateOptions{})
				if err != nil {
					return fmt.Errorf("failed to create deployment [%s] in namespace [%s], error: [%v]", deploymentTemplate.Name, namespace, err)
				}
				// After creating it, fetch the latest deployment from the apiserver, because its status is used below.
				// [The informer cache cannot be used here: it has not yet synced the newly created deployment]
				deploy, err = c.kubeClientset.AppsV1().Deployments(namespace).Get(context.TODO(), deploymentTemplate.Name, metav1.GetOptions{})
				if err != nil {
					// Do not ignore this error: a nil deploy would panic in IsControlledBy below
					return fmt.Errorf("failed to get deployment [%s] in namespace [%s], error: [%v]", deploymentTemplate.Name, namespace, err)
				}
			} else {
				// deploy is nil on this path, so report the template name and namespace instead
				return fmt.Errorf("failed to get deployment [%s] in namespace [%s], error: [%v]", deploymentTemplate.Name, namespace, err)
			}
		}
		// If the deployment we got is not controlled by this app, report an error
		if !metav1.IsControlledBy(deploy, app) {
			msg := fmt.Sprintf(utils.MessageResourceExists, deploy.Name)
			c.recorder.Event(app, corev1.EventTypeWarning, utils.ErrResourceExists, msg)
			return fmt.Errorf("%s", msg)
		}
		// update deploy status
		app.Status.DeploymentStatus = &deploy.Status
	}

	// Take the serviceSpec part of the app
	serviceTemplate := app.Spec.ServiceSpec
	// If the app's serviceTemplate is not empty
	if serviceTemplate.Name != "" {
		// Try to get the corresponding service from the cache
		service, err := c.servicesLister.Services(namespace).Get(serviceTemplate.Name)
		if err != nil {
			// Not found
			if errors.IsNotFound(err) {
				klog.V(4).Infof("starting to create service [%s] in namespace [%s]", serviceTemplate.Name, namespace)
				// Build a service object, then create it in the apiserver through kubeClientset
				service = newService(serviceTemplate, app)
				_, err := c.kubeClientset.CoreV1().Services(namespace).Create(context.TODO(), service, metav1.CreateOptions{})
				if err != nil {
					return fmt.Errorf("failed to create service [%s] in namespace [%s], error: [%v]", serviceTemplate.Name, namespace, err)
				}
				// After creating it, fetch the latest service from the apiserver, because its status is used below.
				// [The informer cache cannot be used here: it has not yet synced the newly created service]
				service, err = c.kubeClientset.CoreV1().Services(namespace).Get(context.TODO(), serviceTemplate.Name, metav1.GetOptions{})
				if err != nil {
					return fmt.Errorf("failed to get service [%s] in namespace [%s], error: [%v]", serviceTemplate.Name, namespace, err)
				}
			} else {
				return fmt.Errorf("failed to get service [%s] in namespace [%s], error: [%v]", serviceTemplate.Name, namespace, err)
			}
		}
		// If the service we got is not controlled by this app, report an error
		if !metav1.IsControlledBy(service, app) {
			msg := fmt.Sprintf(utils.MessageResourceExists, service.Name)
			c.recorder.Event(app, corev1.EventTypeWarning, utils.ErrResourceExists, msg)
			return fmt.Errorf("%s", msg)
		}
		// update service status
		app.Status.ServiceStatus = &service.Status
	}

	// After handling deploymentSpec and serviceSpec, write the updated AppStatus back to the cluster.
	// Note: if the CRD enables the status subresource, Update ignores status changes and UpdateStatus is needed instead
	_, err = c.appClientset.AppcontrollerV1().Apps(namespace).Update(context.TODO(), app, metav1.UpdateOptions{})
	if err != nil {
		return fmt.Errorf("failed to update app [%s], error: [%v]", key, err)
	}

	// Record an event
	c.recorder.Event(app, corev1.EventTypeNormal, utils.SuccessSynced, utils.MessageResourceSynced)
	return nil
}

5.2.6.Methods that create the deployment and service explained

- They build the deployment and service from what the user wrote in AppSpec

- Only a few simple fields were added to AppSpec here, so many values are hard-coded. You can extend AppSpec as needed, so that more of these values can be specified by the user at creation time

- After changing AppSpec, remember to rerun the commands from Part 3 to regenerate the deepcopy file, the generated package and the crd file

// newDeployment builds a deployment object
func newDeployment(template appcontrollerv1.DeploymentTemplate, app *appcontrollerv1.App) *appsv1.Deployment {
	d := &appsv1.Deployment{
		TypeMeta: metav1.TypeMeta{
			Kind:       "Deployment",
			APIVersion: "apps/v1",
		},
		ObjectMeta: metav1.ObjectMeta{
			Name: template.Name,
		},
		Spec: appsv1.DeploymentSpec{
			// The Selector must match the pod Labels
			Selector: &metav1.LabelSelector{
				MatchLabels: map[string]string{
					"app-key": "app-value",
				},
			},
			Replicas: &template.Replicas,
			Template: corev1.PodTemplateSpec{
				// The pod labels are not user-configurable; a default is set here
				ObjectMeta: metav1.ObjectMeta{
					Labels: map[string]string{
						"app-key": "app-value",
					},
				},
				Spec: corev1.PodSpec{
					Containers: []corev1.Container{
						{
							Name:  "app-deploy-container",
							Image: template.Image,
						},
					},
				},
			},
		},
	}
	// Set the app as the controlling owner in the deployment's OwnerReferences
	d.OwnerReferences = []metav1.OwnerReference{
		*metav1.NewControllerRef(app, appcontrollerv1.SchemeGroupVersion.WithKind("App")),
	}
	return d
}

// newService builds a service object
func newService(template appcontrollerv1.ServiceTemplate, app *appcontrollerv1.App) *corev1.Service {
	s := &corev1.Service{
		TypeMeta: metav1.TypeMeta{
			Kind:       "Service",
			APIVersion: "v1",
		},
		ObjectMeta: metav1.ObjectMeta{
			Name: template.Name,
		},
		Spec: corev1.ServiceSpec{
			// The Selector must match the pod Labels
			Selector: map[string]string{
				"app-key": "app-value",
			},
			Ports: []corev1.ServicePort{
				{
					Name: "app-service",
					// The Service port defaults to 8080. This is only for learning CRDs; in real development it could be moved into AppSpec
					Port: 8080,
				},
			},
		},
	}
	// Set the app as the controlling owner in the service's OwnerReferences
	s.OwnerReferences = []metav1.OwnerReference{
		*metav1.NewControllerRef(app, appcontrollerv1.SchemeGroupVersion.WithKind("App")),
	}
	return s
}
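Both builders repeat the rule that the selector must match the pod labels. The equality-based check behind that rule can be sketched in a few lines; selectorMatches is a hypothetical helper written for this illustration, not an API from apimachinery.

```go
package main

import "fmt"

// selectorMatches reports whether every key/value pair in selector is also
// present in labels. Extra labels on the pod do not prevent a match.
func selectorMatches(selector, labels map[string]string) bool {
	for k, v := range selector {
		if got, ok := labels[k]; !ok || got != v {
			return false
		}
	}
	return true
}

func main() {
	podLabels := map[string]string{"app-key": "app-value", "tier": "web"}

	fmt.Println(selectorMatches(map[string]string{"app-key": "app-value"}, podLabels)) // true
	fmt.Println(selectorMatches(map[string]string{"app-key": "other"}, podLabels))     // false
}
```

Because every App in this demo gets the same hard-coded label pair, the selectors of different Apps in one namespace would match each other's pods; deriving the label value from the App name is one way to avoid that.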

6.Write the main function and start the controller

6.1.Write cmd/main.go

- Write the main function: create the Controller object and start it, with graceful shutdown in place

package main

import (
	"flag"
	"time"

	kubeinformers "k8s.io/client-go/informers"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/tools/clientcmd"
	"k8s.io/klog/v2"

	"crd-controller-demo/pkg/controller"
	clientset "crd-controller-demo/pkg/generated/clientset/versioned"
	appinformers "crd-controller-demo/pkg/generated/informers/externalversions"
	"crd-controller-demo/pkg/signals"
	"crd-controller-demo/pkg/utils"
)

var (
	masterURL  string
	kubeConfig string
)

func main() {
	klog.InitFlags(nil)
	flag.Parse()

	// set up signals so we handle the first shutdown signal gracefully
	stopCh := signals.SetupSignalHandler()

	config, err := clientcmd.BuildConfigFromFlags(masterURL, kubeConfig)
	//config, err := clientcmd.BuildConfigFromFlags("", clientcmd.RecommendedHomeFile)
	if err != nil {
		klog.Fatalf("Error building kubeConfig: %s", err.Error())
	}

	// clientset for the built-in resources
	kubeClientSet, err := kubernetes.NewForConfig(config)
	if err != nil {
		klog.Fatalf("Error building kubernetes clientset: %s", err.Error())
	}

	// clientset generated for the apps resource
	appClientSet, err := clientset.NewForConfig(config)
	if err != nil {
		klog.Fatalf("Error building app clientset: %s", err.Error())
	}

	// informer factories with a 30s resync period
	kubeInformerFactory := kubeinformers.NewSharedInformerFactory(kubeClientSet, time.Second*30)
	appInformerFactory := appinformers.NewSharedInformerFactory(appClientSet, time.Second*30)

	controller := controller.NewController(kubeClientSet, appClientSet,
		kubeInformerFactory.Apps().V1().Deployments(),
		kubeInformerFactory.Core().V1().Services(),
		appInformerFactory.Appcontroller().V1().Apps())

	// notice that there is no need to run Start methods in a separate goroutine. (i.e. go kubeInformerFactory.Start(stopCh))
	// Start method is non-blocking and runs all registered informers in a dedicated goroutine.
	kubeInformerFactory.Start(stopCh)
	appInformerFactory.Start(stopCh)

	if err = controller.Run(utils.WorkNum, stopCh); err != nil {
		klog.Fatalf("Error running controller: %s", err.Error())
	}
}

func init() {
	flag.StringVar(&kubeConfig, "kubeConfig", "", "Path to a kubeConfig. Only required if out-of-cluster.")
	flag.StringVar(&masterURL, "master", "", "The address of the Kubernetes API server. Overrides any value in kubeConfig. Only required if out-of-cluster.")
}
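To see how the two flags registered in init() are parsed, here is a small standalone sketch using a private flag.FlagSet so it can be fed an argument slice directly. The options struct, parseOptions, and the sample path and URL are assumptions made for this illustration; they are not part of the project.

```go
package main

import (
	"flag"
	"fmt"
)

// options holds the two values that main.go reads from the command line.
type options struct {
	masterURL  string
	kubeConfig string
}

// parseOptions parses args with the same flag names that main.go registers.
func parseOptions(args []string) (options, error) {
	var o options
	fs := flag.NewFlagSet("crd-controller-demo", flag.ContinueOnError)
	fs.StringVar(&o.kubeConfig, "kubeConfig", "", "Path to a kubeConfig. Only required if out-of-cluster.")
	fs.StringVar(&o.masterURL, "master", "", "The address of the Kubernetes API server.")
	if err := fs.Parse(args); err != nil {
		return options{}, err
	}
	return o, nil
}

func main() {
	// The path and URL below are placeholder values.
	o, err := parseOptions([]string{"-kubeConfig", "/root/.kube/config", "-master", "https://127.0.0.1:6443"})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("%+v\n", o)
}
```

When both values are left empty, clientcmd.BuildConfigFromFlags falls back to the in-cluster configuration, which is why the flags are only needed when running outside the cluster.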

6.2.The pkg/signals package

6.3.Write signal.go

/*
Copyright 2017 The Kubernetes Authors.

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
*/

package signals

import (
	"os"
	"os/signal"
)

var onlyOneSignalHandler = make(chan struct{})

// SetupSignalHandler registered for SIGTERM and SIGINT. A stop channel is returned
// which is closed on one of these signals. If a second signal is caught, the program
// is terminated with exit code 1.
func SetupSignalHandler() (stopCh <-chan struct{}) {
	close(onlyOneSignalHandler) // panics when called twice

	stop := make(chan struct{})
	c := make(chan os.Signal, 2)
	signal.Notify(c, shutdownSignals...)
	go func() {
		<-c
		close(stop)
		<-c
		os.Exit(1) // second signal. Exit directly.
	}()

	return stop
}

6.4.Write signal_posix.go

//go:build !windows
// +build !windows

/*
Copyright 2017 The Kubernetes Authors.

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
*/

package signals

import (
	"os"
	"syscall"
)

var shutdownSignals = []os.Signal{os.Interrupt, syscall.SIGTERM}

6.5.Write signal_windows.go

/*
Copyright 2017 The Kubernetes Authors.

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
*/

package signals

import (
	"os"
)

var shutdownSignals = []os.Signal{os.Interrupt}

7.Write the test yaml files

- Under the artifacts directory, create an example directory containing two test files

- test_app.yaml

apiVersion: appcontroller.k8s.io/v1
kind: App
metadata:
  name: test-app
  namespace: tcs
spec:
  deploymentTemplate:
    name: app-deploy
    image: nginx
    replicas: 2
  serviceTemplate:
    name: app-service

- test_app_2.yaml

apiVersion: appcontroller.k8s.io/v1
kind: App
metadata:
  name: test-app-2
  namespace: tcs
spec:
  deploymentTemplate:
    name: app-deploy-test
    image: tomcat
    replicas: 3
  serviceTemplate:
    name: app-service-test

8.Create CRD && Test

- Create the CRD in the kubernetes cluster

cd crd-controller-demo
kubectl apply -f artifacts/crd/appcontroller.k8s.io_apps.yaml

- Start the controller

- Run the following from the project root (main.go lives under cmd/, see 6.1)

go run cmd/main.go

- The -master and -kubeConfig command-line flags can be used to specify the apiserver address and the kubeconfig path

- Then create the two App resources and check the result

cd crd-controller-demo
kubectl apply -f artifacts/example/test_app.yaml
kubectl apply -f artifacts/example/test_app_2.yaml

- Check the deployments and services that were created

[root@master crd-controller-demo]# kubectl get deploy -n tcs
NAME              READY   UP-TO-DATE   AVAILABLE   AGE
app-deploy        2/2     2            2           145m
app-deploy-test   3/3     3            3           125m

[root@master crd-controller-demo]# kubectl get pods -n tcs
NAME                              READY   STATUS    RESTARTS   AGE
app-deploy-67677ddc7f-jpggn       1/1     Running   0          145m
app-deploy-67677ddc7f-zqgcl       1/1     Running   0          145m
app-deploy-test-8fb698bf7-84s8p   1/1     Running   0          126m
app-deploy-test-8fb698bf7-dtk4w   1/1     Running   0          126m
app-deploy-test-8fb698bf7-wzfj9   1/1     Running   0          126m

[root@master crd-controller-demo]# kubectl get svc -n tcs
NAME               TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
app-service        ClusterIP   10.103.185.230   <none>        8080/TCP   138m
app-service-test   ClusterIP   10.105.182.76    <none>        8080/TCP   127m