ceres之LM算法

Ceres作为一个优化算法库,在许多领域中有着至关重要的作用,比如slam系统中的优化问题-集束调整BA,就可以通过Ceres去实现,官方文档地址:http://ceres-solver.org/nnls_tutorial.html#bundle-adjustment

本文主要是解析ceres中的LM算法过程,参考代码地址:

https://github.com/ceres-solver/ceres-solver/tree/master/internal/ceres

一、主要流程

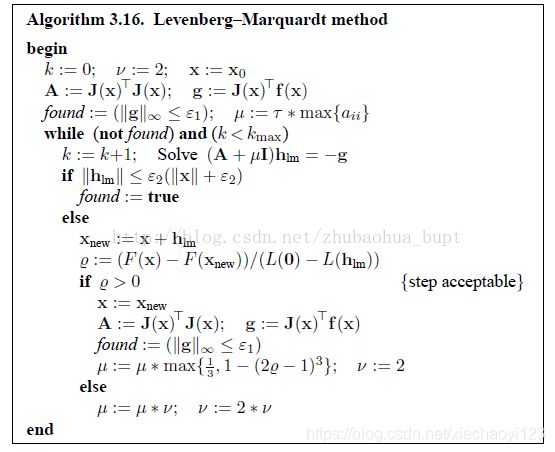

先贴个图,LM算法的大概流程如下

可以看到,LM算法的输入为(1)雅可比矩阵J(x);(2)残差向量f(x);(3)待优化变量初值x0;(4)控制参数等

LM算法要求解的问题为:

其中![]() 为残差函数,它的导函数为,二阶导函数的近似为

为残差函数,它的导函数为,二阶导函数的近似为

分为几个步骤:

(1)初始化:首先计算系数矩阵A和残差向量g,初始化参数

(2)while循环:如果达到收敛条件就停止迭代

(3)求解 (A+miu*I)dx = -g

(4) xnew = x+dx

(5) ,其中Fx,Fxnew是所有残差的平方和

(6)如果第五步结果大于零,表示这个迭代是有效的,可以接受

然后更新x = xnew;Fx =Fnew ,A = J'*J, g = J'*f

(7)否则这个dx得到的结果是无效的,收缩搜索半径,相当于增大![]()

ceres对应代码:https://github.com/ceres-solver/ceres-solver/blob/master/internal/ceres/trust_region_minimizer.cc

以及:https://github.com/ceres-solver/ceres-solver/blob/master/internal/ceres/levenberg_marquardt_strategy.cc

void TrustRegionMinimizer::Minimize(const Minimizer::Options& options,

double* parameters,

Solver::Summary* solver_summary) {

start_time_in_secs_ = WallTimeInSeconds();

iteration_start_time_in_secs_ = start_time_in_secs_;

//初始化

Init(options, parameters, solver_summary);

//得到初始的雅可比矩阵以及残差矩阵

RETURN_IF_ERROR_AND_LOG(IterationZero());

// Create the TrustRegionStepEvaluator. The construction needs to be

// delayed to this point because we need the cost for the starting

// point to initialize the step evaluator.

step_evaluator_.reset(new TrustRegionStepEvaluator(

x_cost_,

options_.use_nonmonotonic_steps

? options_.max_consecutive_nonmonotonic_steps

: 0));

//while循环:是否达到迭代终止条件

while (FinalizeIterationAndCheckIfMinimizerCanContinue()) {

iteration_start_time_in_secs_ = WallTimeInSeconds();

iteration_summary_ = IterationSummary();

iteration_summary_.iteration =

solver_summary->iterations.back().iteration + 1;

//计算(J'*J+D'*D/radius)*dx = -J'*f

RETURN_IF_ERROR_AND_LOG(ComputeTrustRegionStep());

if (!iteration_summary_.step_is_valid) {

//如果当前迭代不是有效的,则收缩搜索半径

RETURN_IF_ERROR_AND_LOG(HandleInvalidStep());

continue;

}

if (options_.is_constrained &&

options_.max_num_line_search_step_size_iterations > 0) {

// Use a projected line search to enforce the bounds constraints

// and improve the quality of the step.

DoLineSearch(x_, gradient_, x_cost_, &delta_);

}

//计算Xnew = x+dx

ComputeCandidatePointAndEvaluateCost();

DoInnerIterationsIfNeeded();

//是否||dx||太小了,太小了直接终止

if (ParameterToleranceReached()) {

return;

}

//是否||Fx-Fxnew||太小了,太小了意味着残差平方和更新不动直接终止

if (FunctionToleranceReached()) {

return;

}

//如果是有效的

if (IsStepSuccessful()) {

//更新x,雅可比矩阵,残差向量等

RETURN_IF_ERROR_AND_LOG(HandleSuccessfulStep());

continue;

}

//否则收缩搜索半径

HandleUnsuccessfulStep();

}

}上面为什么是信赖域算法过程,Ceres里面把LM算法和dogleg算法(也叫狗腿算法)集成到统一的框架下--信赖域算法框架,不同的是LM算法求解dx的过程和狗腿算法不同,下面是LM算法求解dx的过程以及搜索半径的更新

TrustRegionStrategy::Summary LevenbergMarquardtStrategy::ComputeStep(

const TrustRegionStrategy::PerSolveOptions& per_solve_options,

SparseMatrix* jacobian,

const double* residuals,

double* step) {

CHECK(jacobian != nullptr);

CHECK(residuals != nullptr);

CHECK(step != nullptr);

//计算对角矩阵D

const int num_parameters = jacobian->num_cols();

if (!reuse_diagonal_) {

if (diagonal_.rows() != num_parameters) {

diagonal_.resize(num_parameters, 1);

}

jacobian->SquaredColumnNorm(diagonal_.data());

for (int i = 0; i < num_parameters; ++i) {

diagonal_[i] = std::min(std::max(diagonal_[i], min_diagonal_),

max_diagonal_);

}

}

// D = diag{J'*J}/radius

lm_diagonal_ = (diagonal_ / radius_).array().sqrt();

LinearSolver::PerSolveOptions solve_options;

solve_options.D = lm_diagonal_.data();

solve_options.q_tolerance = per_solve_options.eta;

solve_options.r_tolerance = -1.0;

InvalidateArray(num_parameters, step);

//求解 (A+D'*D)dx = -g

LinearSolver::Summary linear_solver_summary =

linear_solver_->Solve(jacobian, residuals, solve_options, step);

if (linear_solver_summary.termination_type == LINEAR_SOLVER_FATAL_ERROR) {

LOG(WARNING) << "Linear solver fatal error: "

<< linear_solver_summary.message;

} else if (linear_solver_summary.termination_type == LINEAR_SOLVER_FAILURE) {

LOG(WARNING) << "Linear solver failure. Failed to compute a step: "

<< linear_solver_summary.message;

} else if (!IsArrayValid(num_parameters, step)) {

LOG(WARNING) << "Linear solver failure. Failed to compute a finite step.";

linear_solver_summary.termination_type = LINEAR_SOLVER_FAILURE;

} else {

VectorRef(step, num_parameters) *= -1.0;

}

reuse_diagonal_ = true;

if (per_solve_options.dump_format_type == CONSOLE ||

(per_solve_options.dump_format_type != CONSOLE &&

!per_solve_options.dump_filename_base.empty())) {

if (!DumpLinearLeastSquaresProblem(per_solve_options.dump_filename_base,

per_solve_options.dump_format_type,

jacobian,

solve_options.D,

residuals,

step,

0)) {

LOG(ERROR) << "Unable to dump trust region problem."

<< " Filename base: " << per_solve_options.dump_filename_base;

}

}

TrustRegionStrategy::Summary summary;

summary.residual_norm = linear_solver_summary.residual_norm;

summary.num_iterations = linear_solver_summary.num_iterations;

summary.termination_type = linear_solver_summary.termination_type;

return summary;

}

//如果当前迭代是有效的,更新搜索半径等参数

void LevenbergMarquardtStrategy::StepAccepted(double step_quality) {

CHECK_GT(step_quality, 0.0);

radius_ = radius_ / std::max(1.0 / 3.0,

1.0 - pow(2.0 * step_quality - 1.0, 3));

radius_ = std::min(max_radius_, radius_);

decrease_factor_ = 2.0;

reuse_diagonal_ = false;

}

//如果当前迭代是无效的,收缩搜索半径等参数

void LevenbergMarquardtStrategy::StepRejected(double step_quality) {

radius_ = radius_ / decrease_factor_;

decrease_factor_ *= 2.0;

reuse_diagonal_ = true;

}