elasticsearch-6.5.4集群部署(图文详细)及常见错误

目录

服务器规划

部署包下载

服务器初始化(所有节点都要操作)

内核参数修改

/etc/security/limits.conf修改

/etc/sysctl.conf文件修改

禁用selinux

关闭防火墙

创建es用户

安装jdk

es部署

单节点(节点1)部署

部署包上传解压

配置文件修改

修改文件属组

部署包分发

单节点(节点2)部署

配置文件修改

修改文件属组

单节点(节点3)部署

配置文件修改

修改文件属组

启动ES集群

验证

单节点验证

查看集群状态

查看集群效果

本次测试环境服务器系统:Red Hat Enterprise Linux Server release 7.3 (Maipo),其他版本系统在服务器初始化环节可能存在差异,请自行查找对应命令

服务器规划

| 服务器ip | jdk | 节点 | 端口 |

|---|---|---|---|

| 192.168.54.131 | jdk1.8 | node131 | 9200 |

| 192.168.54.132 | jdk1.8 | node132 | 9200 |

| 192.168.54.133 | jdk1.8 | node133 | 9200 |

部署包下载

官网下载地址Elasticsearch 6.5.4 | Elastic 下载对应系统的部署包

服务器初始化(所有节点都要操作)

内核参数修改

/etc/security/limits.conf修改

修改/etc/security/limits.conf文件,末尾追加如下内容(需退出重新登录用户或直接重启系统才会生效)

* soft nofile 65536

* hard nofile 65536

* soft nproc 4096

* hard nproc 4096

否则es启动会报错::bootstrap checks failed [1]: max file descriptors [65535] for elasticsearch

/etc/sysctl.conf文件修改

编辑 /etc/sysctl.conf,追加以下内容:

vm.max_map_count=655360

保存后,执行:sysctl -p生效

否则es启动报错:: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

禁用selinux

查看状态:getenforce

临时关闭:setenforce 0

永久关闭:修改/etc/sysconfig/selinux文件 修改SELINUX的值为disabled

[root@localhost ~]# cat /etc/sysconfig/selinux

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

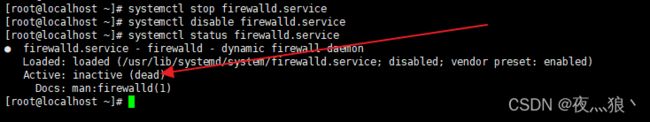

关闭防火墙

依次执行以下三条命令 关闭防火墙、禁用防火墙、查看防火墙状态,最后的状态应该如下箭头所示为“dead”

[root@localhost ~]# systemctl stop firewalld.service

[root@localhost ~]# systemctl disable firewalld.service

[root@localhost ~]# systemctl status firewalld.service

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

[root@localhost ~]# ^C

[root@localhost ~]#

创建es用户

因为安全问题 es库不允许直接root启动,所以需要创建一个用户,所以一般就创建名为“es”的用户,修改密码时候提示无效密码不用管,重新输入密码验证即可

[root@localhost ~]# useradd es

[root@localhost ~]# passwd es

更改用户 es 的密码 。

新的 密码:

无效的密码: 密码少于 8 个字符

重新输入新的 密码:

passwd:所有的身份验证令牌已经成功更新。

[root@localhost ~]# 安装jdk

es需要依赖JDK,如果没有需要安装,请自行安装(es6.5.4推荐安装1.8的jdk)

es部署

单节点(节点1)部署

部署包上传解压

1.登录131服务器进入/opt目录并创建es目录,

[root@localhost es]#cd /opt

[root@localhost es]#mkdir es

2.上传部署包到当前目录下并解压

[root@localhost es]#cd es

[root@localhost es]# tar -xvzf elasticsearch-6.5.4.tar.gz

[root@localhost es]# ll

总用量 110668

-rw-r--r-- 1 root root 113322649 1月 18 11:54 elasticsearch-6.5.4.tar.gz

drwxr-xr-x 9 es es 145 1月 18 10:43 elasticsearch-6.5.4

3.进入es解压目录(/opt/es/elastticsearch-6.5.4)创建数据存储目录及日志目录(如果没有就创建 有就忽略这一步)

[root@localhost es]# cd elastticsearch-6.5.4/

[root@localhost es]# mkdir data

[root@localhost es]# mkdir logs

[root@localhost elastticsearch-6.5.4]# ll

总用量 440

drwxr-xr-x 3 es es 4096 1月 18 10:41 bin

drwxr-xr-x 2 es es 4096 1月 18 11:31 config

drwxrwxr-x 2 es es 6 1月 18 10:43 data

drwxr-xr-x 3 es es 4096 1月 18 10:41 lib

-rw-r--r-- 1 es es 13675 1月 18 10:41 LICENSE.txt

drwxr-xr-x 2 es es 29 1月 18 11:05 logs

drwxr-xr-x 28 es es 4096 1月 18 10:41 modules

-rw-r--r-- 1 es es 403816 1月 18 10:41 NOTICE.txt

drwxr-xr-x 2 es es 6 1月 18 10:41 plugins

-rw-r--r-- 1 es es 8519 1月 18 10:41 README.textile

[root@localhost elastticsearch-6.5.4]#

配置文件修改

进入es配置文件目录(/opt/es/elastticsearch-6.5.4/config),修改如下配置

[root@localhost config]# vim /opt/es/elasticsearch-6.5.4/config/elasticsearch.yml

#集群名称,所有节点保持一致

cluster.name: my-es

#节点名称 保持每个节点不一样

node.name: node131

#数据存储目录

path.data: /opt/es/maste-elasticsearch-6.5.4/data

#日志存储目录

path.logs: /opt/es/maste-elasticsearch-6.5.4/logs

#当前节点的IP地址

network.host: 192.168.65.131

#集群服务的端口

transport.tcp.port: 9300

#对外提供服务的端口

http.port: 9200

#集群节点IP地址,也可以使用els、els.shuaiguoxia.com等名称,但需要各节点能够解析

discovery.zen.ping.unicast.hosts: ["192.168.65.131","192.168.65.132","192.168.65.132"]

#是否作为管理节点

node.master: true

#是否作为数据节点

node.data: true

# 为了避免脑裂,集群节点数最少为所有管理节点的半数+1,即有3个管理节点时候 改参数为2,如果有10个管理节点则为10/2+1=6 如果是2个管理节点则为2/2+1(该情况可能出现脑裂)

discovery.zen.minimum_master_nodes: 2 修改文件属组

进入opt目录将es目录及下级目录属组更改为es用户,到此,节点一部署完成

[root@localhost opt]# cd /opt/

[root@localhost opt]# chown -R es:es es部署包分发

将131(节点1)修改好配置的es复制到132(节点2)和133(节点3)

[root@localhost opt]# pwd

/opt

[root@localhost opt]# ll

总用量 0

drwxr-xr-x 4 es es 99 1月 18 12:20 es

[root@localhost opt]#

[root@localhost opt]# scp -r es/ [email protected]/opt/

[root@localhost opt]#

[root@localhost opt]# scp -r es/ [email protected]/opt/

单节点(节点2)部署

配置文件修改

登录132(节点2)进入es配置目录(/opt/es/elastticsearch-6.5.4/config)修改配置文件如下

注意:仅在原基础上修改节点名称和当前节点ip即可

#节点名称 保持每个节点不一样

node.name: node132

#当前节点的IP地址

network.host: 192.168.65.132

修改后如下

[root@localhost config]# vim /opt/es/elasticsearch-6.5.4/config/elasticsearch.yml

#集群名称,所有节点保持一致

cluster.name: my-es

#节点名称 保持每个节点不一样

node.name: node132

#数据存储目录

path.data: /opt/es/maste-elasticsearch-6.5.4/data

#日志存储目录

path.logs: /opt/es/maste-elasticsearch-6.5.4/logs

#当前节点的IP地址

network.host: 192.168.65.132

#集群服务的端口

transport.tcp.port: 9300

#对外提供服务的端口

http.port: 9200

#集群节点IP地址,也可以使用els、els.shuaiguoxia.com等名称,但需要各节点能够解析

discovery.zen.ping.unicast.hosts: ["192.168.65.131","192.168.65.132","192.168.65.132"]

#是否作为管理节点

node.master: true

#是否作为数据节点

node.data: true

# 为了避免脑裂,集群节点数最少为所有管理节点的半数+1,即有3个管理节点时候 改参数为2,如果有10个管理节点则为10/2+1=6 如果是2个管理节点则为2/2+1(该情况可能出现脑裂)

discovery.zen.minimum_master_nodes: 2 修改文件属组

进入opt目录将es目录及下级目录属组更改为es用户,到此,节点二部署完成

[root@localhost opt]# cd /opt/

[root@localhost opt]# chown -R es:es es单节点(节点3)部署

配置文件修改

登录133(节点3)进入es配置目录(/opt/es/elastticsearch-6.5.4/config)修改配置文件如下

注意:仅在原基础上修改节点名称和当前节点ip即可

#节点名称 保持每个节点不一样

node.name: node133

#当前节点的IP地址

network.host: 192.168.65.133

修改后如下

[root@localhost config]# vim /opt/es/elasticsearch-6.5.4/config/elasticsearch.yml

#集群名称,所有节点保持一致

cluster.name: my-es

#节点名称 保持每个节点不一样

node.name: node133

#数据存储目录

path.data: /opt/es/maste-elasticsearch-6.5.4/data

#日志存储目录

path.logs: /opt/es/maste-elasticsearch-6.5.4/logs

#当前节点的IP地址

network.host: 192.168.65.133

#集群服务的端口

transport.tcp.port: 9300

#对外提供服务的端口

http.port: 9200

#集群节点IP地址,也可以使用els、els.shuaiguoxia.com等名称,但需要各节点能够解析

discovery.zen.ping.unicast.hosts: ["192.168.65.131","192.168.65.132","192.168.65.132"]

#是否作为管理节点

node.master: true

#是否作为数据节点

node.data: true

# 为了避免脑裂,集群节点数最少为所有管理节点的半数+1,即有3个管理节点时候 改参数为2,如果有10个管理节点则为10/2+1=6 如果是2个管理节点则为2/2+1(该情况可能出现脑裂)

discovery.zen.minimum_master_nodes: 2 修改文件属组

进入opt目录将es目录及下级目录属组更改为es用户,到此,节点三部署完成

[root@localhost opt]# cd /opt/

[root@localhost opt]# chown -R es:es es启动ES集群

分别进入每个节点的es启动目录(/opt/es/elastticsearch-6.5.4/bin/) 执行./elasticsearch启动每一个节点,末尾输出[master131] license [bdab27e6-59b5-4ada-a10e-2410f1ee0db9] mode [basic] - valid表示启动正常

[root@localhost bin]# cd /opt/es/elastticsearch-6.5.4/bin/

[root@localhost bin]# pwd

/opt/es/elastticsearch-6.5.4/bin

[es@localhost bin]$ ./elasticsearch

[2022-01-18T12:35:21,774][INFO ][o.e.e.NodeEnvironment ] [master131] using [1] data paths, mounts [[/ (/dev/mapper/ol-root)]], net usable_space [12.8gb], net total_space [16.9gb], types [xfs]

[2022-01-18T12:35:21,776][INFO ][o.e.e.NodeEnvironment ] [master131] heap size [990.7mb], compressed ordinary object pointers [true]

[2022-01-18T12:35:21,776][INFO ][o.e.n.Node ] [master131] node name [master131], node ID [7ZFg24w9T6u3aHBbL_gHAw]

[2022-01-18T12:35:21,777][INFO ][o.e.n.Node ] [master131] version[6.5.4], pid[4648], build[default/tar/d2ef93d/2018-12-17T21:17:40.758843Z], OS[Linux/4.1.12-61.1.18.el7uek.x86_64/amd64], JVM[Oracle Corporation/OpenJDK 64-Bit Server VM/1.8.0_102/25.102-b14]

[2022-01-18T12:35:21,777][INFO ][o.e.n.Node ] [master131] JVM arguments [-Xms1g, -Xmx1g, -XX:+UseConcMarkSweepGC, -XX:CMSInitiatingOccupancyFraction=75, -XX:+UseCMSInitiatingOccupancyOnly, -XX:+AlwaysPreTouch, -Xss1m, -Djava.awt.headless=true, -Dfile.encoding=UTF-8, -Djna.nosys=true, -XX:-OmitStackTraceInFastThrow, -Dio.netty.noUnsafe=true, -Dio.netty.noKeySetOptimization=true, -Dio.netty.recycler.maxCapacityPerThread=0, -Dlog4j.shutdownHookEnabled=false, -Dlog4j2.disable.jmx=true, -Djava.io.tmpdir=/tmp/elasticsearch.K8TSVePq, -XX:+HeapDumpOnOutOfMemoryError, -XX:HeapDumpPath=data, -XX:ErrorFile=logs/hs_err_pid%p.log, -XX:+PrintGCDetails, -XX:+PrintGCDateStamps, -XX:+PrintTenuringDistribution, -XX:+PrintGCApplicationStoppedTime, -Xloggc:logs/gc.log, -XX:+UseGCLogFileRotation, -XX:NumberOfGCLogFiles=32, -XX:GCLogFileSize=64m, -Des.path.home=/opt/es/elastticsearch-6.5.4, -Des.path.conf=/opt/es/elastticsearch-6.5.4/config, -Des.distribution.flavor=default, -Des.distribution.type=tar]

[2022-01-18T12:35:22,758][INFO ][o.e.p.PluginsService ] [master131] loaded module [aggs-matrix-stats]

[2022-01-18T12:35:22,758][INFO ][o.e.p.PluginsService ] [master131] loaded module [analysis-common]

[2022-01-18T12:35:22,759][INFO ][o.e.p.PluginsService ] [master131] loaded module [ingest-common]

[2022-01-18T12:35:22,759][INFO ][o.e.p.PluginsService ] [master131] loaded module [lang-expression]

[2022-01-18T12:35:22,759][INFO ][o.e.p.PluginsService ] [master131] loaded module [lang-mustache]

[2022-01-18T12:35:22,759][INFO ][o.e.p.PluginsService ] [master131] loaded module [lang-painless]

[2022-01-18T12:35:22,759][INFO ][o.e.p.PluginsService ] [master131] loaded module [mapper-extras]

[2022-01-18T12:35:22,759][INFO ][o.e.p.PluginsService ] [master131] loaded module [parent-join]

[2022-01-18T12:35:22,759][INFO ][o.e.p.PluginsService ] [master131] loaded module [percolator]

[2022-01-18T12:35:22,759][INFO ][o.e.p.PluginsService ] [master131] loaded module [rank-eval]

[2022-01-18T12:35:22,759][INFO ][o.e.p.PluginsService ] [master131] loaded module [reindex]

[2022-01-18T12:35:22,759][INFO ][o.e.p.PluginsService ] [master131] loaded module [repository-url]

[2022-01-18T12:35:22,759][INFO ][o.e.p.PluginsService ] [master131] loaded module [transport-netty4]

[2022-01-18T12:35:22,759][INFO ][o.e.p.PluginsService ] [master131] loaded module [tribe]

[2022-01-18T12:35:22,759][INFO ][o.e.p.PluginsService ] [master131] loaded module [x-pack-ccr]

[2022-01-18T12:35:22,759][INFO ][o.e.p.PluginsService ] [master131] loaded module [x-pack-core]

[2022-01-18T12:35:22,760][INFO ][o.e.p.PluginsService ] [master131] loaded module [x-pack-deprecation]

[2022-01-18T12:35:22,760][INFO ][o.e.p.PluginsService ] [master131] loaded module [x-pack-graph]

[2022-01-18T12:35:22,760][INFO ][o.e.p.PluginsService ] [master131] loaded module [x-pack-logstash]

[2022-01-18T12:35:22,760][INFO ][o.e.p.PluginsService ] [master131] loaded module [x-pack-ml]

[2022-01-18T12:35:22,760][INFO ][o.e.p.PluginsService ] [master131] loaded module [x-pack-monitoring]

[2022-01-18T12:35:22,760][INFO ][o.e.p.PluginsService ] [master131] loaded module [x-pack-rollup]

[2022-01-18T12:35:22,760][INFO ][o.e.p.PluginsService ] [master131] loaded module [x-pack-security]

[2022-01-18T12:35:22,760][INFO ][o.e.p.PluginsService ] [master131] loaded module [x-pack-sql]

[2022-01-18T12:35:22,760][INFO ][o.e.p.PluginsService ] [master131] loaded module [x-pack-upgrade]

[2022-01-18T12:35:22,760][INFO ][o.e.p.PluginsService ] [master131] loaded module [x-pack-watcher]

[2022-01-18T12:35:22,761][INFO ][o.e.p.PluginsService ] [master131] no plugins loaded

[2022-01-18T12:35:25,190][INFO ][o.e.x.s.a.s.FileRolesStore] [master131] parsed [0] roles from file [/opt/es/elastticsearch-6.5.4/config/roles.yml]

[2022-01-18T12:35:25,489][INFO ][o.e.x.m.j.p.l.CppLogMessageHandler] [master131] [controller/4710] [Main.cc@109] controller (64 bit): Version 6.5.4 (Build b616085ef32393) Copyright (c) 2018 Elasticsearch BV

[2022-01-18T12:35:25,737][DEBUG][o.e.a.ActionModule ] [master131] Using REST wrapper from plugin org.elasticsearch.xpack.security.Security

[2022-01-18T12:35:25,879][INFO ][o.e.d.DiscoveryModule ] [master131] using discovery type [zen] and host providers [settings]

[2022-01-18T12:35:26,460][INFO ][o.e.n.Node ] [master131] initialized

[2022-01-18T12:35:26,460][INFO ][o.e.n.Node ] [master131] starting ...

[2022-01-18T12:35:26,581][INFO ][o.e.t.TransportService ] [master131] publish_address {192.168.65.131:9300}, bound_addresses {192.168.65.131:9300}

[2022-01-18T12:35:26,592][INFO ][o.e.b.BootstrapChecks ] [master131] bound or publishing to a non-loopback address, enforcing bootstrap checks

[2022-01-18T12:35:29,825][INFO ][o.e.c.s.ClusterApplierService] [master131] detected_master {svale132}{C6UYOV5qRje4Urzcic9-TA}{U6bor1FdSWiDpvKXNJSvZQ}{192.168.65.132}{192.168.65.132:9300}{ml.machine_memory=3855503360, ml.max_open_jobs=20, xpack.installed=true, ml.enabled=true}, added {{svale132}{C6UYOV5qRje4Urzcic9-TA}{U6bor1FdSWiDpvKXNJSvZQ}{192.168.65.132}{192.168.65.132:9300}{ml.machine_memory=3855503360, ml.max_open_jobs=20, xpack.installed=true, ml.enabled=true},{selve133}{_bodEcZRRieOl7wxwU3dIw}{OqIxRga1SjSqQzJmXXPk_w}{192.168.65.133}{192.168.65.133:9300}{ml.machine_memory=3855503360, ml.max_open_jobs=20, xpack.installed=true, ml.enabled=true},}, reason: apply cluster state (from master [master {svale132}{C6UYOV5qRje4Urzcic9-TA}{U6bor1FdSWiDpvKXNJSvZQ}{192.168.65.132}{192.168.65.132:9300}{ml.machine_memory=3855503360, ml.max_open_jobs=20, xpack.installed=true, ml.enabled=true} committed version [7]])

[2022-01-18T12:35:29,951][WARN ][o.e.x.s.a.s.m.NativeRoleMappingStore] [master131] Failed to clear cache for realms [[]]

[2022-01-18T12:35:29,952][INFO ][o.e.x.s.a.TokenService ] [master131] refresh keys

[2022-01-18T12:35:30,118][INFO ][o.e.x.s.a.TokenService ] [master131] refreshed keys

[2022-01-18T12:35:30,140][INFO ][o.e.l.LicenseService ] [master131] license [bdab27e6-59b5-4ada-a10e-2410f1ee0db9] mode [basic] - valid

验证

单节点验证

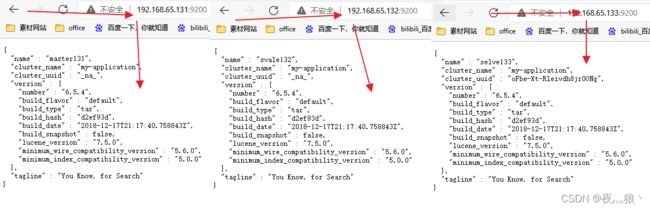

分别访问三个节点http://ip:9200查看是否正常

查看集群状态

192.168.65.132:9200/_cat/health?v

查看集群效果

192.168.65.132:9200/_cat/nodes?pretty