Installing and Deploying OpenStack Juno on Ubuntu 14.04

0 Installation Modes

0.1 Installation Modes

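| Mode | Description | Purpose | Notes |
|---|---|---|---|
| Single node | One server runs all of the nova-xxx components and also hosts the virtual instances. | Only for trying out Nova or for development. | |
| 1 controller node + N compute nodes | One controller node runs every nova service except nova-compute; the other compute nodes run nova-compute. All compute nodes exchange images and network traffic with the controller, which makes the controller the bottleneck of the whole architecture. | Mainly proof-of-concept or lab environments. | |
| Multi-node | Additional nodes run nova-volume on their own, compute nodes also run nova-network, and DHCP or VLAN mode is chosen according to the network hardware so that control-network and public-network traffic are kept separate. | Mainly production environments. | |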

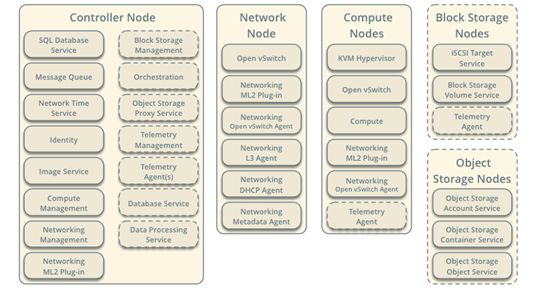

We chose the multi-node mode.

0.2 Deployment Tools

The deployment follows the official installation guide. Read this document together with that guide:

openstack-install-guide-apt-juno.pdf

1 Base Environment

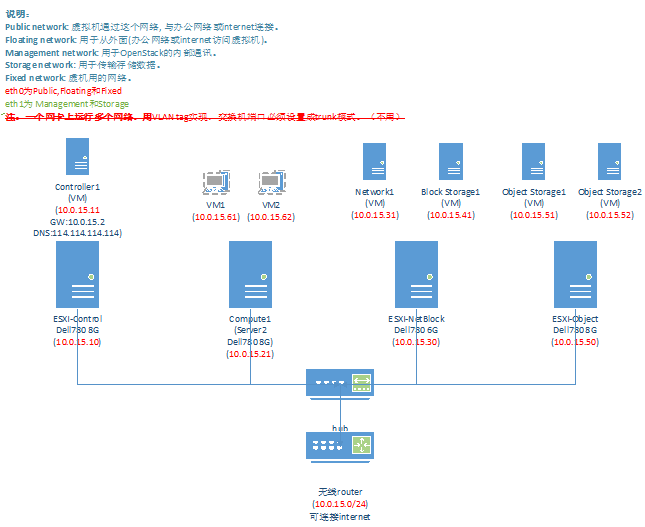

1.1 Lab Environment

All systems:

Account: root

Password: abcd1234!

1.2 Role List

2 Installation and Deployment

2.1 Prerequisites

| Host | Configuration | System | Notes |
|---|---|---|---|
| ESXI-control | Dell780 8G 10.0.15.10 | ESXi Server 5.1 | Physical machine hosting the VM Controller1 |
| ESXI-object | Dell780 8G 10.0.15.50 | ESXi Server 5.1 | Physical machine hosting the VMs Object Storage1 and Object Storage2 |
| ESXI-NetBlock | Dell780 6G 10.0.15.30 | ESXi Server 5.1 | Physical machine hosting the VMs Network1 and Block Storage1 |
| Compute1 | Dell780 8G 10.0.15.21 | Ubuntu14.04 Server 64bit | Physical machine: compute node |
| Controller1 | RAM:6G Disk:100G 10.0.15.11 | Ubuntu14.04 Server 64bit | VM: controller node |
| Network1 | RAM:2G Disk:100G 10.0.15.31 | Ubuntu14.04 Server 64bit | VM: network node |
| BlockStorage1 | RAM:2G Disk:100G 10.0.15.41 | Ubuntu14.04 Server 64bit | VM: Block Storage node |
| ObjectStorage1 | RAM:3G Disk:100G 10.0.15.51 | Ubuntu14.04 Server 64bit | VM: Object Storage node |
| ObjectStorage2 | RAM:3G Disk:100G 10.0.15.52 | Ubuntu14.04 Server 64bit | VM: Object Storage node |

Password list:

Every password below is set to: abcd1234!

| Password name | Description |
|---|---|
| Database password | Password of the database root user |
| RABBIT_PASS | Password of user guest of RabbitMQ |
| KEYSTONE_DBPASS | Database password of Identity service |
| DEMO_PASS | Password of user demo |
| ADMIN_PASS | Password of user admin |
| GLANCE_DBPASS | Database password for Image Service |
| GLANCE_PASS | Password of Image Service user glance |
| NOVA_DBPASS | Database password for Compute service |
| NOVA_PASS | Password of Compute service user nova |
| DASH_DBPASS | Database password for the dashboard |
| CINDER_DBPASS | Database password for the Block Storage service |
| CINDER_PASS | Password of Block Storage service user cinder |
| NEUTRON_DBPASS | Database password for the Networking service |
| NEUTRON_PASS | Password of Networking service user neutron |
| HEAT_DBPASS | Database password for the Orchestration service |
| HEAT_PASS | Password of Orchestration service user heat |
| CEILOMETER_DBPASS | Database password for the Telemetry service |
| CEILOMETER_PASS | Password of Telemetry service user ceilometer |
| TROVE_DBPASS | Database password of Database service |
| TROVE_PASS | Password of Database Service user trove |

2.1.1 ESXI安装配置

-

准备U盘。

-

进行ESXI安装。

-

配置网络。

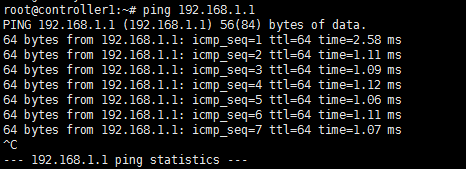

4,测试网络是否连通。

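2.1.2 Base Environment Installation and Configuration

2.1.2.1 Operating System Installation

Ubuntu14.04-base serves as the base system. The other VMs are created by copying and importing it.

Perform the same steps below on Compute1 as well.

Ubuntu installation guide:

http://jingyan.baidu.com/article/6dad5075dd615ca123e36e00.html

Set a password for the root account:

sudo passwd root

Switch to the root user:

su root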

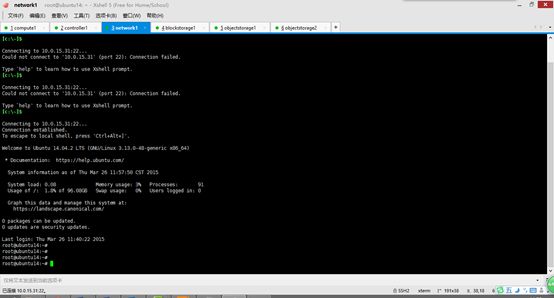

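Connect to each node with XShell5.

When we logged in with XShell5, the SSH server rejected the password.

By default, the SSH server does not allow root to log in with a password, so check that setting first. In /etc/ssh/sshd_config, find:

#PermitRootLogin no

To allow remote root login, change it to the following uncommented line:

PermitRootLogin yes

Then restart SSH:

service ssh restart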
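You can also script this edit. The sketch below is minimal and assumes GNU sed, as shipped with Ubuntu 14.04. The helper name enable_root_login is hypothetical. Allowing root password login is acceptable for this lab, but not for production.

```shell
# Hypothetical helper: force "PermitRootLogin yes" in an sshd_config-style file,
# whether the existing directive is commented out or set to another value.
# Assumes GNU sed (for -i and -E).
enable_root_login() {
    sed -i -E 's/^[#[:space:]]*PermitRootLogin[[:space:]].*/PermitRootLogin yes/' "$1"
}
```

Typical use: enable_root_login /etc/ssh/sshd_config && service ssh restart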

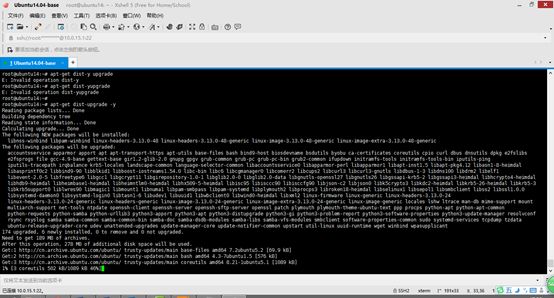

Update the system:

apt-get update -y

apt-get upgrade -y

apt-get dist-upgrade -y

Update in progress:

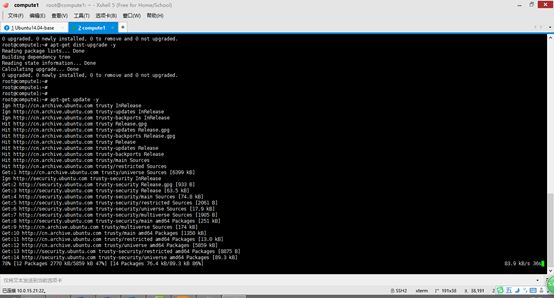

Update the system on Compute1 in the same way.

Install the OpenStack packages:

1. Install:

apt-get install ubuntu-cloud-keyring

echo "deb http://ubuntu-cloud.archive.canonical.com/ubuntu" \
"trusty-updates/juno main" > /etc/apt/sources.list.d/cloudarchive-juno.list

2. Update:

apt-get update && apt-get dist-upgrade

Install NTP (Network Time Protocol):

1. Install:

apt-get install ntp

2. Configure NTP:

On Controller1:

Edit the /etc/ntp.conf file and add, change, or remove the following keys as necessary for your environment:

server ntp.ubuntu.com iburst

restrict -4 default kod notrap nomodify

restrict -6 default kod notrap nomodify

-------------------- Installed up to this point on 3.25 --------------------

On all other nodes:

Edit the /etc/ntp.conf file and add, change, or remove the following keys as necessary for your environment:

server controller1 iburst

3. Restart NTP:

service ntp restart

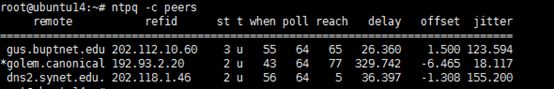

4. Verify:

ntpq -c peers

ntpq -c assoc

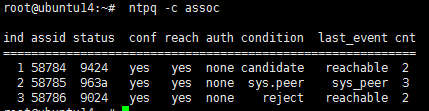
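To check the result from a script rather than by eye, you can look for the peer that ntpq marks with a leading `*`, which is the peer currently selected for synchronization. The sketch below is minimal, and the function name is hypothetical. A freshly restarted ntpd can take a few minutes before it selects any peer.

```shell
# Hypothetical helper: succeed if "ntpq -c peers" output (read on stdin)
# contains a line starting with '*', i.e. a selected system peer.
ntp_synced() {
    grep -q '^\*'
}
```

Typical use: ntpq -c peers | ntp_synced && echo "synchronized"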

2.1.2.2 新建Controller1节点虚拟机

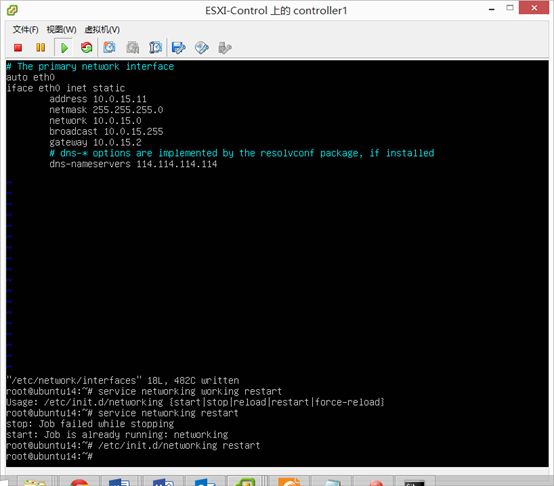

在10.0.15.10上,复制一份ubuntu14.04-base.vmdk到controller1目录,并重命名为controller1(vsphere client不支持重命名):

然后,新建controller1虚拟机,选择使用现有的虚拟磁盘。

创建controller1虚拟机完成。

设置IP地址:10.0.15.11

设置hostname为controller1

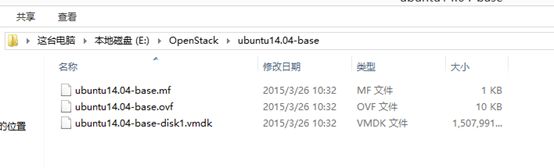
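Later steps refer to the nodes by hostname, for example http://controller1:35357/v2.0, so every node must be able to resolve these names. The sketch below assumes name resolution through /etc/hosts, as in the official guide. It appends entries built from the role table above. The compute1 hostname and both helper names are assumptions.

```shell
# Hypothetical helper: /etc/hosts entries for the lab nodes in the role table.
# The hostname "compute1" is an assumption; the others follow this document.
lab_hosts() {
    cat <<'EOF'
10.0.15.11 controller1
10.0.15.21 compute1
10.0.15.31 network1
10.0.15.41 blockStorage1
10.0.15.51 objectStorage1
10.0.15.52 objectStorage2
EOF
}

# Append every lab entry whose hostname is not yet in the given hosts file.
add_lab_hosts() {
    file=${1:-/etc/hosts}
    lab_hosts | while read -r ip name; do
        grep -qw "$name" "$file" || printf '%s %s\n' "$ip" "$name" >> "$file"
    done
}
```

Run add_lab_hosts on every node. It skips names that are already present, so running it twice does no harm.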

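2.1.2.3 Export an OVF Template

In the vSphere Client (10.0.15.10), export the ubuntu14.04-base system to my laptop. Then import it for each node VM.

The export is fairly slow. At first the progress bar did not move at all, but later it sped up considerably.

The export completed and was saved locally on my laptop.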

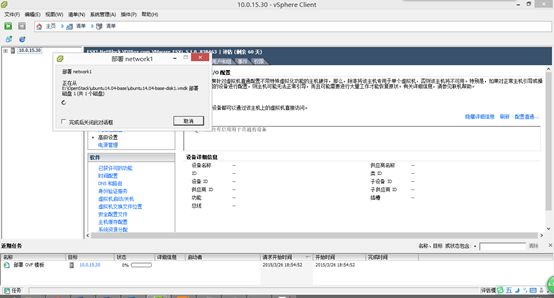

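2.1.2.4 Create the Network1 Node VM

Connect the vSphere Client to 10.0.15.30 and import the OVF template to deploy the network1 node.

While importing the OVF, we got the error "Failed to deploy OVF: the user canceled the task".

Solution:

When exporting the OVF, set the CD/DVD drive to a client device, not to an ISO file.

Set the IP address to 10.0.15.31.

Set the hostname to network1.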

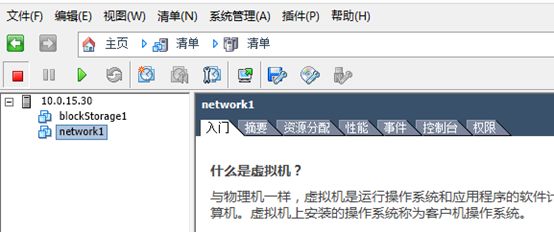

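2.1.2.5 Create the BlockStorage1 Node VM

On 10.0.15.30, copy network1.vmdk into the blockStorage1 directory.

Create the blockStorage1 VM and choose to use an existing virtual disk.

Set the IP address to 10.0.15.41.

Set the hostname to blockStorage1.

2.1.2.6 Create the ObjectStorage1 Node VM

Connect the vSphere Client to 10.0.15.50 and import the OVF template to deploy the ObjectStorage1 node.

Set the IP address to 10.0.15.51.

Set the hostname to objectStorage1.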

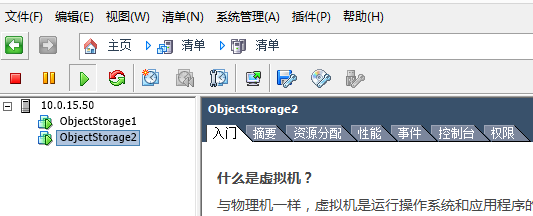

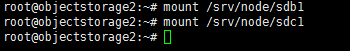

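2.1.2.7 Create the ObjectStorage2 Node VM

Copy ObjectStorage1.vmdk into the ObjectStorage2 directory.

Create the ObjectStorage2 VM and choose to use an existing virtual disk.

Set the IP address to 10.0.15.52.

Set the hostname to objectStorage2.

Connect to each node with XShell5.

The base environment is now complete.

Next, deploy the OpenStack services on each node.

2.2 Installation and Deployment

2.2.1 Controller1 Node Deployment

2.2.1.1 Database

Install: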

apt-get install mariadb-server python-mysqldb

Set the database root password to: abcd1234!

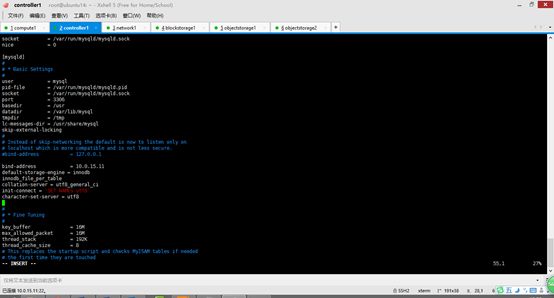

Configure:

vi /etc/mysql/my.cnf

[mysqld]

bind-address = 10.0.15.11

default-storage-engine = innodb

innodb_file_per_table

collation-server = utf8_general_ci

init-connect = 'SET NAMES utf8'

character-set-server = utf8

Restart:

service mysql restart

Installation complete.

2.2.1.2 Messaging Server

安装RabbitMQ:

apt-get install rabbitmq-server

![]()

看上图,似乎是添加了 rabbitmq这个用户,而不是官方文档里给的guest

先看看执行下面的修改密码的命令会不会出错。

配置:

RabbitMQ默认配置了一个用户guest,密码也默认为guest,建议使用此用户,但修改其密码为:abcd1234!

rabbitmqctl change_password guest abcd1234!

这里并没有出错,所以官方文档里给的应该是正确的。

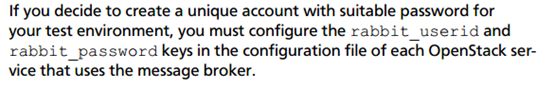

注意:

![]()

rabbitmqctl status | grep rabbit

版本是3.2.4,小于3.3.0,所以下面的b步骤,不必再进行。

vi /etc/rabbitmq/rabbitmq.config

[{rabbit, [{loopback_users, []}]}].


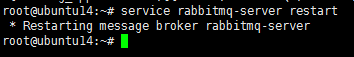

service rabbitmq-server restart

Installation complete.
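The version check above can be automated. The sketch below is minimal and assumes GNU sort with version sorting (-V). The helper name is hypothetical. It succeeds when the given RabbitMQ version is 3.3.0 or newer, which is when the loopback_users change is needed.

```shell
# Hypothetical helper: succeed if RabbitMQ version $1 >= 3.3.0, i.e. when the
# guest account is limited to localhost and rabbitmq.config needs
# {loopback_users, []}. Relies on GNU "sort -V" for version ordering.
needs_loopback_config() {
    lowest=$(printf '%s\n%s\n' 3.3.0 "$1" | sort -V | head -n 1)
    [ "$lowest" = 3.3.0 ]
}
```

For the version found here, needs_loopback_config 3.2.4 fails, so the config change can be skipped.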

2.2.1.3 Identity Service

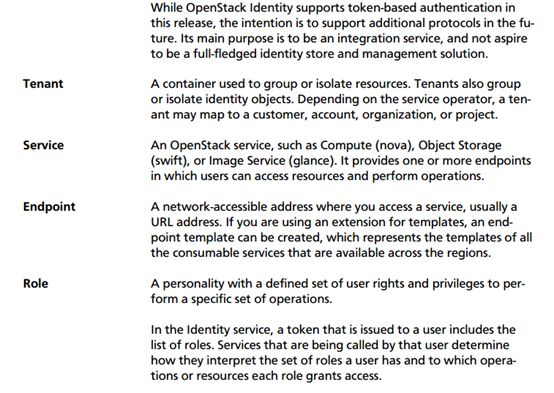

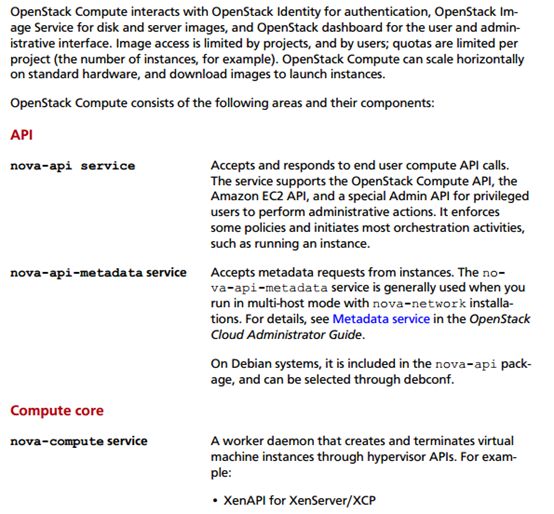

2.2.1.3.1 理论知识

功能:

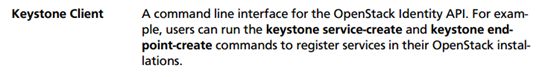

KeyStone处理流程:

2.2.1.3.2 安装并配置Indentity Service

创建mysql数据库:

![]()

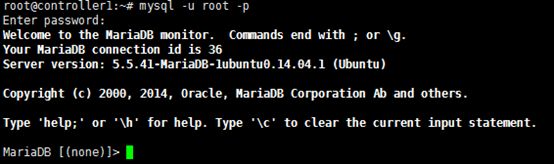

mysql -u root -p

登录成功。

CREATE DATABASE keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'abcd1234!';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'abcd1234!';

Note:

When I first ran these commands, the password contained a space. That caused the db_sync error shown later.

quit;
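The same CREATE DATABASE and GRANT pattern repeats for glance, nova and neutron below. The minimal sketch that follows prints the statements for any service. It refuses passwords that contain whitespace, because the stray space caused the db_sync failure here, and passwords that contain a single quote. The function name is hypothetical.

```shell
# Hypothetical helper: print the CREATE DATABASE / GRANT statements used for
# an OpenStack service database.
# Usage: gen_db_sql <service> <password>
gen_db_sql() {
    service=$1
    password=$2
    # Reject whitespace (the cause of the "Access denied" error in this section)
    # and single quotes (which would break the SQL string literal).
    case $password in
        *[[:space:]]*|*"'"*) echo "gen_db_sql: invalid password" >&2; return 1 ;;
    esac
    printf "CREATE DATABASE %s;\n" "$service"
    for host in localhost '%'; do
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
            "$service" "$service" "$host" "$password"
    done
}
```

Typical use: gen_db_sql glance 'abcd1234!' | mysql -u root -p. Keep the password in single quotes, because an interactive bash shell treats ! inside double quotes as history expansion.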

Generate an administration token:

openssl rand -hex 10

20501b9e8d0a541af5ee

Note:

This only generates a random token to use in place of ADMIN_TOKEN below.

Install the Identity service:

apt-get install keystone python-keystoneclient

Configure:

vi /etc/keystone/keystone.conf

Set the following:

[DEFAULT]

admin_token = 20501b9e8d0a541af5ee

Note:

This value is the token generated above.

[database]

connection = mysql://keystone:abcd1234!@10.0.15.11/keystone

[token]

provider = keystone.token.providers.uuid.Provider

driver = keystone.token.persistence.backends.sql.Token

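Note:

Delete the driver = keystone.token.persistence.backends.sql.Token line above. Otherwise it causes the ImportError described below.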

[revoke]

driver = keystone.contrib.revoke.backends.sql.Revoke

[DEFAULT]

verbose = True
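You can generate the token and write it into keystone.conf in one step. The sketch below is minimal and assumes GNU sed. It also assumes keystone.conf already contains an admin_token line, either commented out or not. The helper name is hypothetical.

```shell
# Hypothetical helper: write an admin token into a keystone.conf-style file.
# Uses the token given as $2, or generates one with "openssl rand -hex 10".
# Assumes an existing (possibly commented-out) admin_token line; GNU sed.
set_admin_token() {
    conf=$1
    token=${2:-$(openssl rand -hex 10)}
    sed -i -E "s/^#?[[:space:]]*admin_token[[:space:]]*=.*/admin_token = $token/" "$conf"
    echo "$token"   # print it so it can also be exported as OS_SERVICE_TOKEN
}
```

Typical use: export OS_SERVICE_TOKEN=$(set_admin_token /etc/keystone/keystone.conf)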

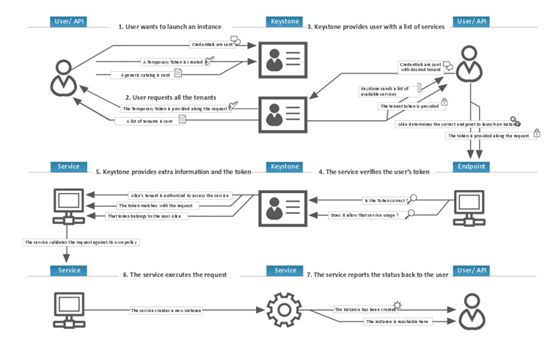

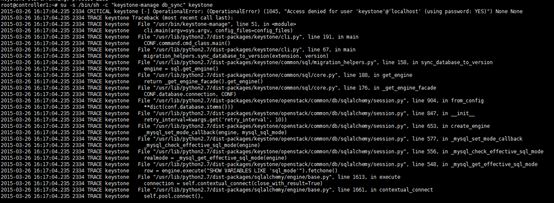

Populate the Identity service database:

su -s /bin/sh -c "keystone-manage db_sync" keystone

Error:

CRITICAL keystone [-] OperationalError: (OperationalError) (1045, "Access denied for user 'keystone'@'localhost' (using password: YES)") None None

Solution:

The password in the GRANT commands above contained a space, which caused this error.

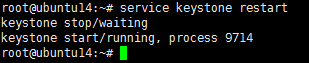

Restart:

service keystone restart

Restarting the service reported:

stop: Unknown instance:

Delete the SQLite file:

rm -f /var/lib/keystone/keystone.db

Note:

Installing keystone creates a SQLite database file by default. We use MySQL, so the file is not needed and can be deleted.

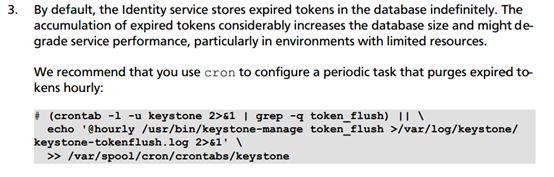

The following steps only improve database performance, so you can skip them for now.

2.2.1.3.3 Create Tenants, Users, and Roles

Configure the administration token:

export OS_SERVICE_TOKEN=20501b9e8d0a541af5ee

Note:

20501b9e8d0a541af5ee is the token generated above.

Configure the endpoint:

export OS_SERVICE_ENDPOINT=http://10.0.15.11:35357/v2.0

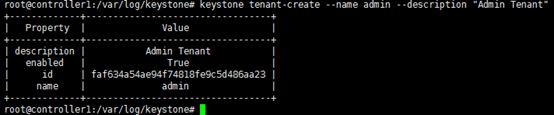

Create tenants, users, and roles:

keystone tenant-create --name admin --description "Admin Tenant"

The command failed. To find the cause, enable Keystone logging in /etc/keystone/keystone.conf:

[DEFAULT]

log_file = /var/log/keystone/keystone.log

log_dir = /var/log/keystone

Then check /var/log/keystone/keystone.log:

ImportError: No module named persistence.backends.sql

Solution:

Delete the following line from /etc/keystone/keystone.conf:

driver = keystone.token.persistence.backends.sql.Token

After the fix, continue with the remaining commands:

keystone user-create --name admin --pass abcd1234! --email [email protected]


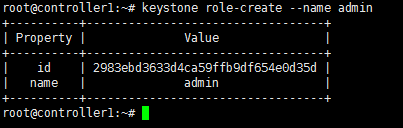

keystone role-create --name admin

keystone user-role-add --user admin --tenant admin --role admin

Note:

This command produces no output.

keystone tenant-create --name demo --description "Demo Tenant"


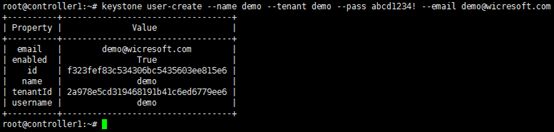

keystone user-create --name demo --tenant demo --pass abcd1234! --email [email protected]


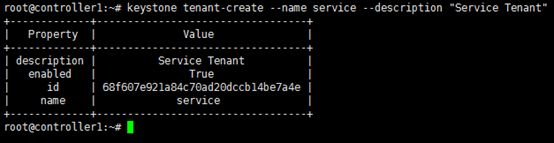

keystone tenant-create --name service --description "Service Tenant"

Note:

These creation steps can be scripted.

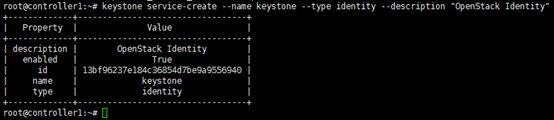
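As noted above, these steps can be scripted. The minimal sketch below replays the commands from this section. It leaves out the --email options, on the assumption that they are optional. The run wrapper, the DRY_RUN switch and the function names are hypothetical. It also assumes OS_SERVICE_TOKEN and OS_SERVICE_ENDPOINT are already exported.

```shell
# Hypothetical wrapper: print the command instead of running it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Replays the tenant/user/role commands from this section.
# Assumes OS_SERVICE_TOKEN and OS_SERVICE_ENDPOINT are already exported.
# Usage: create_identity_basics <password>
create_identity_basics() {
    pass=$1
    run keystone tenant-create --name admin --description "Admin Tenant"
    run keystone user-create --name admin --pass "$pass"
    run keystone role-create --name admin
    run keystone user-role-add --user admin --tenant admin --role admin
    run keystone tenant-create --name demo --description "Demo Tenant"
    run keystone user-create --name demo --tenant demo --pass "$pass"
    run keystone tenant-create --name service --description "Service Tenant"
}
```

Run DRY_RUN=1 create_identity_basics 'abcd1234!' first to review the commands, then run it again without DRY_RUN.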

2.2.1.3.4 创建service entity and API endpoint

Prerequisites:

Configure the administration token:

export OS_SERVICE_TOKEN=20501b9e8d0a541af5ee

Note:

20501b9e8d0a541af5ee is the token generated above.

Configure the endpoint:

export OS_SERVICE_ENDPOINT=http://10.0.15.11:35357/v2.0

Create the service entity and API endpoint:

keystone service-create --name keystone --type identity --description "OpenStack Identity"

keystone endpoint-create --service-id $(keystone service-list | awk '/ identity / {print $2}') --publicurl http://controller1:5000/v2.0 --internalurl http://controller1:5000/v2.0 --adminurl http://controller1:35357/v2.0 --region regionOne
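The $(keystone service-list | awk ...) substitution picks the second whitespace-separated field, which is the id, from the row that contains the service type. A slightly stricter variant compares the type column exactly. The minimal sketch below assumes the usual `| id | name | type | description |` table layout. The function name is hypothetical.

```shell
# Hypothetical helper: read "keystone service-list" output on stdin and print
# the id of the row whose type column equals $1.
# With the | id | name | type | description | layout, awk sees
# $1="|", $2=id, $4=name, $6=type.
service_id_for() {
    awk -v t="$1" '$6 == t {print $2}'
}
```

Typical use: keystone service-list | service_id_for identity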

2.2.1.3.5 Verify the Installation

unset OS_SERVICE_TOKEN OS_SERVICE_ENDPOINT

keystone --os-tenant-name admin --os-username admin --ospassword abcd1234! --os-auth-url http://controller1:35357/v2.0 token-get

Error:

keystone: error: argument

Solution:

The option is --os-password, not --ospassword. Change the command to:

keystone --os-tenant-name admin --os-username admin --os-password abcd1234! --os-auth-url http://controller1:35357/v2.0 token-get

keystone --os-tenant-name admin --os-username admin --os-password abcd1234! --os-auth-url http://controller1:35357/v2.0 tenant-list

keystone --os-tenant-name admin --os-username admin --os-password abcd1234! --os-auth-url http://controller1:35357/v2.0 user-list

keystone --os-tenant-name admin --os-username admin --os-password abcd1234! --os-auth-url http://controller1:35357/v2.0 role-list

keystone --os-tenant-name demo --os-username demo --os-password abcd1234! --os-auth-url http://controller1:35357/v2.0 token-get


keystone --os-tenant-name demo --os-username demo --os-password abcd1234! --os-auth-url http://controller1:35357/v2.0 user-list


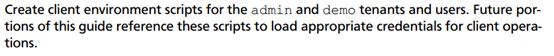

2.2.1.3.6 Create OpenStack Client Environment Scripts

vi admin-openrc.sh

export OS_TENANT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=abcd1234!

export OS_AUTH_URL=http://controller1:35357/v2.0


vi demo-openrc.sh

export OS_TENANT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=abcd1234!

export OS_AUTH_URL=http://controller1:5000/v2.0

Load the script:

source admin-openrc.sh


You can add these scripts to a shell startup file so that they load automatically after a reboot.
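One way to load a script automatically is to append a source line to the login shell's startup file. The minimal sketch below does this idempotently. It assumes bash and ~/.bashrc. The helper name is hypothetical. Keep in mind that admin-openrc.sh contains the admin password in plain text.

```shell
# Hypothetical helper: append "source <openrc>" to a shell startup file once.
# Usage: autoload_openrc /root/admin-openrc.sh /root/.bashrc
autoload_openrc() {
    openrc=$1
    rcfile=$2
    line="source $openrc"
    # -x: whole-line match, -F: fixed string; append only if missing.
    grep -qxF "$line" "$rcfile" 2>/dev/null || printf '%s\n' "$line" >> "$rcfile"
}
```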

Identity service installation complete.

------------------------------------ Installed up to this point on 2015.3.26 -----------------------------

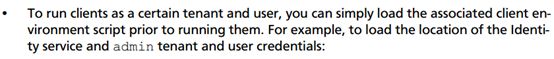

2.2.1.4 Image Service

2.2.1.4.1 Background

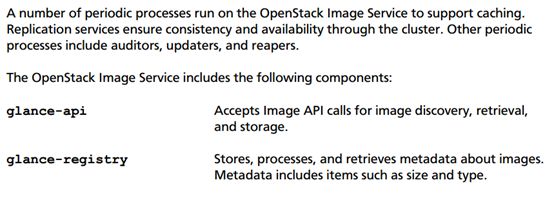

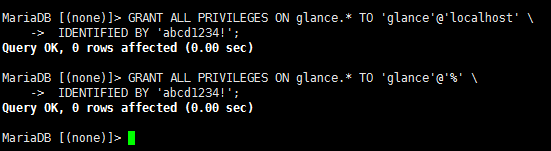

2.2.1.4.2 Install and Configure

Prerequisites:

mysql -u root -p


CREATE DATABASE glance;


GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'abcd1234!';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'abcd1234!';

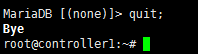

quit;


source admin-openrc.sh


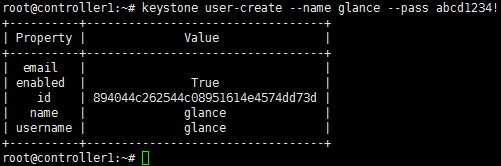

keystone user-create --name glance --pass abcd1234!


keystone user-role-add --user glance --tenant service --role admin


keystone service-create --name glance --type image --description "OpenStack Image Service"

keystone endpoint-create --service-id $(keystone service-list | awk '/ image / {print $2}') --publicurl http://controller1:9292 --internalurl http://controller1:9292 --adminurl http://controller1:9292 --region regionOne

Install:

apt-get install glance python-glanceclient

Configure:

vi /etc/glance/glance-api.conf

[DEFAULT]

notification_driver = noop

verbose = True

[database]

connection = mysql://glance:abcd1234!@controller1/glance

[keystone_authtoken]

auth_uri = http://controller1:5000/v2.0

identity_uri = http://controller1:35357

admin_tenant_name = service

admin_user = glance

admin_password = abcd1234!

[paste_deploy]

flavor = keystone

[glance_store]

default_store = file

filesystem_store_datadir = /var/lib/glance/images/


vi /etc/glance/glance-registry.conf

[DEFAULT]

notification_driver = noop

verbose = True

[database]

connection = mysql://glance:abcd1234!@controller1/glance

[keystone_authtoken]

auth_uri = http://controller1:5000/v2.0

identity_uri = http://controller1:35357

admin_tenant_name = service

admin_user = glance

admin_password = abcd1234!

[paste_deploy]

flavor = keystone

su -s /bin/sh -c "glance-manage db_sync" glance

Restart:

service glance-registry restart

service glance-api restart

Delete the default SQLite file:

rm -f /var/lib/glance/glance.sqlite

2.2.1.4.3 Verify the Installation

mkdir /tmp/images


wget -P /tmp/images http://cdn.download.cirros-cloud.net/0.3.3/cirros-0.3.3-x86_64-disk.img


source admin-openrc.sh


glance image-create --name "cirros-0.3.3-x86_64" --file /tmp/images/cirros-0.3.3-x86_64-disk.img --disk-format qcow2 --container-format bare --is-public True --progress

Error:

Request returned failure status.

Invalid OpenStack Identity credentials.

Solution:

1. Check the log:

cat /var/log/glance/api.log

Log contents:

2015-03-27 10:22:40.300 18493 DEBUG glance.api.middleware.version_negotiation [-] Determining version of request: POST /v1/images Accept: process_request /usr/lib/python2.7/dist-packages/glance/api/middleware/version_negotiation.py:44

2015-03-27 10:22:40.300 18493 DEBUG glance.api.middleware.version_negotiation [-] Using url versioning process_request /usr/lib/python2.7/dist-packages/glance/api/middleware/version_negotiation.py:57

2015-03-27 10:22:40.300 18493 DEBUG glance.api.middleware.version_negotiation [-] Matched version: v1 process_request /usr/lib/python2.7/dist-packages/glance/api/middleware/version_negotiation.py:69

2015-03-27 10:22:40.301 18493 DEBUG glance.api.middleware.version_negotiation [-] new path /v1/images process_request /usr/lib/python2.7/dist-packages/glance/api/middleware/version_negotiation.py:70

2015-03-27 10:22:40.301 18493 DEBUG keystoneclient.middleware.auth_token [-] Authenticating user token __call__ /usr/lib/python2.7/dist-packages/keystoneclient/middleware/auth_token.py:569

2015-03-27 10:22:40.301 18493 DEBUG keystoneclient.middleware.auth_token [-] Removing headers from request environment: X-Identity-Status,X-Domain-Id,X-Domain-Name,X-Project-Id,X-Project-Name,X-Project-Domain-Id,X-Project-Domain-Name,X-User-Id,X-User-Name,X-User-Domain-Id,X-User-Domain-Name,X-Roles,X-Service-Catalog,X-User,X-Tenant-Id,X-Tenant-Name,X-Tenant,X-Role _remove_auth_headers /usr/lib/python2.7/dist-packages/keystoneclient/middleware/auth_token.py:628

2015-03-27 10:22:40.302 18493 INFO urllib3.connectionpool [-] Starting new HTTPS connection (1): 127.0.0.1

2015-03-27 10:22:40.316 18493 WARNING keystoneclient.middleware.auth_token [-] Retrying on HTTP connection exception: [Errno 1] _ssl.c:510: error:140770FC:SSL routines:SSL23_GET_SERVER_HELLO:unknown protocol

2015-03-27 10:22:40.817 18493 INFO urllib3.connectionpool [-] Starting new HTTPS connection (1): 127.0.0.1

2015-03-27 10:22:40.829 18493 WARNING keystoneclient.middleware.auth_token [-] Retrying on HTTP connection exception: [Errno 1] _ssl.c:510: error:140770FC:SSL routines:SSL23_GET_SERVER_HELLO:unknown protocol

2015-03-27 10:22:41.830 18493 INFO urllib3.connectionpool [-] Starting new HTTPS connection (1): 127.0.0.1

2015-03-27 10:22:41.841 18493 WARNING keystoneclient.middleware.auth_token [-] Retrying on HTTP connection exception: [Errno 1] _ssl.c:510: error:140770FC:SSL routines:SSL23_GET_SERVER_HELLO:unknown protocol

2015-03-27 10:22:43.844 18493 INFO urllib3.connectionpool [-] Starting new HTTPS connection (1): 127.0.0.1

2015-03-27 10:22:43.856 18493 ERROR keystoneclient.middleware.auth_token [-] HTTP connection exception: [Errno 1] _ssl.c:510: error:140770FC:SSL routines:SSL23_GET_SERVER_HELLO:unknown protocol

2015-03-27 10:22:43.856 18493 DEBUG keystoneclient.middleware.auth_token [-] Token validation failure. _validate_user_token /usr/lib/python2.7/dist-packages/keystoneclient/middleware/auth_token.py:827

2015-03-27 10:22:43.856 18493 TRACE keystoneclient.middleware.auth_token Traceback (most recent call last):

2015-03-27 10:22:43.856 18493 TRACE keystoneclient.middleware.auth_token File "/usr/lib/python2.7/dist-packages/keystoneclient/middleware/auth_token.py", line 821, in _validate_user_token

2015-03-27 10:22:43.856 18493 TRACE keystoneclient.middleware.auth_token data = self.verify_uuid_token(user_token, retry)

2015-03-27 10:22:43.856 18493 TRACE keystoneclient.middleware.auth_token File "/usr/lib/python2.7/dist-packages/keystoneclient/middleware/auth_token.py", line 1169, in verify_uuid_token

2015-03-27 10:22:43.856 18493 TRACE keystoneclient.middleware.auth_token self.auth_version = self._choose_api_version()

2015-03-27 10:22:43.856 18493 TRACE keystoneclient.middleware.auth_token File "/usr/lib/python2.7/dist-packages/keystoneclient/middleware/auth_token.py", line 520, in _choose_api_version

2015-03-27 10:22:43.856 18493 TRACE keystoneclient.middleware.auth_token versions_supported_by_server = self._get_supported_versions()

2015-03-27 10:22:43.856 18493 TRACE keystoneclient.middleware.auth_token File "/usr/lib/python2.7/dist-packages/keystoneclient/middleware/auth_token.py", line 540, in _get_supported_versions

2015-03-27 10:22:43.856 18493 TRACE keystoneclient.middleware.auth_token response, data = self._json_request('GET', '/')

2015-03-27 10:22:43.856 18493 TRACE keystoneclient.middleware.auth_token File "/usr/lib/python2.7/dist-packages/keystoneclient/middleware/auth_token.py", line 748, in _json_request

2015-03-27 10:22:43.856 18493 TRACE keystoneclient.middleware.auth_token response = self._http_request(method, path, **kwargs)

2015-03-27 10:22:43.856 18493 TRACE keystoneclient.middleware.auth_token File "/usr/lib/python2.7/dist-packages/keystoneclient/middleware/auth_token.py", line 715, in _http_request

2015-03-27 10:22:43.856 18493 TRACE keystoneclient.middleware.auth_token raise NetworkError('Unable to communicate with keystone')

2015-03-27 10:22:43.856 18493 TRACE keystoneclient.middleware.auth_token NetworkError: Unable to communicate with keystone

2015-03-27 10:22:43.856 18493 TRACE keystoneclient.middleware.auth_token

2015-03-27 10:22:43.857 18493 WARNING keystoneclient.middleware.auth_token [-] Authorization failed for token

2015-03-27 10:22:43.857 18493 INFO keystoneclient.middleware.auth_token [-] Invalid user token - deferring reject downstream

2015-03-27 10:22:43.892 18493 INFO glance.wsgi.server [-] 10.0.15.11 - - [27/Mar/2015 10:22:43] "POST /v1/images HTTP/1.1" 401 381 3.592769

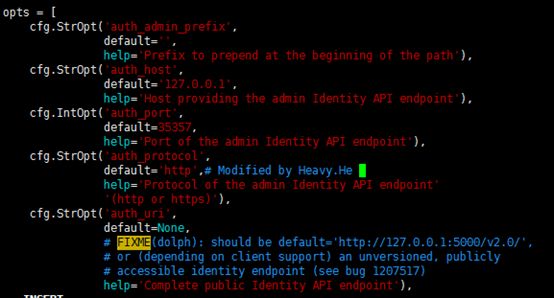

Solution 1: modify the source code:

vi /usr/local/lib/python2.7/dist-packages/keystoneclient/middleware/auth_token.py

cfg.StrOpt('auth_protocol',

default='https', # change to http

help='Protocol of the admin Identity API endpoint'

'(http or https)'),

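Change https above to http.

Reference:

http://www.cnblogs.com/yuxc/archive/2012/12/06/2805552.html

This still did not work, so solution 1 is not viable. Note also that the traceback above loads auth_token.py from /usr/lib/python2.7/dist-packages, not from the /usr/local path edited here.

Solution 2:

Modify the configuration files: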

vi /etc/glance/glance-api.conf

[DEFAULT]

notification_driver = noop

verbose = True

[database]

connection = mysql://glance:abcd1234!@controller1/glance

[keystone_authtoken]

auth_uri = http://controller1:5000/v2.0

identity_uri = http://controller1:35357

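Note:

These two lines must follow the format used in the shipped configuration file; otherwise you get the error above. The log shows the middleware connecting over HTTPS to 127.0.0.1. Those are the default auth_host and auth_protocol values, which means the URIs configured here were not picked up. The nova.conf configuration later in this document uses auth_host, auth_port and auth_protocol = http instead of identity_uri.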

admin_tenant_name = service

admin_user = glance

admin_password = abcd1234!

vi /etc/glance/glance-registry.conf

[keystone_authtoken]

auth_uri = http://controller1:5000/v2.0

identity_uri = http://controller1:35357

Note:

As in glance-api.conf, these two lines must follow the format used in the shipped configuration file.

admin_tenant_name = service

admin_user = glance

admin_password = abcd1234!

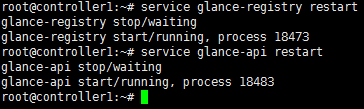
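When a setting seems to be ignored, as happened here, it helps to print exactly what one section of a file contains. The minimal sketch below uses awk. The helper name is hypothetical. It assumes each section header sits alone on its line with no trailing spaces.

```shell
# Hypothetical helper: print the active (non-comment, non-blank) lines of one
# [section] of an INI-style file.
# Usage: ini_section /etc/glance/glance-api.conf keystone_authtoken
ini_section() {
    awk -v want="[$2]" '
        /^\[/ { in_sec = ($0 == want); next }   # a header starts a new section
        in_sec && !/^[ \t]*(#|$)/ { print }      # skip comments and blank lines
    ' "$1"
}
```

Compare ini_section /etc/glance/glance-api.conf keystone_authtoken with the same command on glance-registry.conf to spot differences between the two files.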

After the changes, restart the services:

service glance-registry restart

service glance-api restart

Run the command above again:

glance image-create --name "cirros-0.3.3-x86_64" --file /tmp/images/cirros-0.3.3-x86_64-disk.img --disk-format qcow2 --container-format bare --is-public True --progress

It now runs normally.

Reference:

https://ask.openstack.org/en/question/57155/image-service-invalid-openstack-identity-credentials/

glance image-list


rm -r /tmp/images

Installation complete.

2.2.1.5 Compute Service

The Compute service must be deployed on both the Controller1 and Compute1 nodes.

The following steps are for Controller1:

Prerequisites:

mysql -u root -p


CREATE DATABASE nova;

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'abcd1234!';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'abcd1234!';

quit;


source admin-openrc.sh


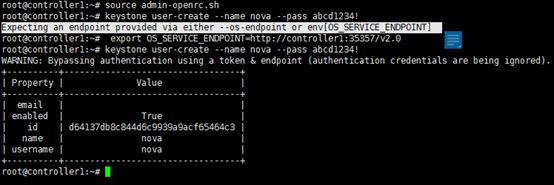

keystone user-create --name nova --pass abcd1234!

Error:

Expecting an endpoint provided via either --os-endpoint or env[OS_SERVICE_ENDPOINT]

Solution:

export OS_SERVICE_ENDPOINT=http://controller1:35357/v2.0


keystone user-role-add --user nova --tenant service --role admin

The following warning appears:

WARNING: Bypassing authentication using a token & endpoint (authentication credentials are being ignored).

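Solution:

Reportedly this warning can be ignored. It means the client is authenticating with the admin token and endpoint exported earlier, not with the credentials from admin-openrc.sh. To avoid both this warning and the endpoint error above, run unset OS_SERVICE_TOKEN OS_SERVICE_ENDPOINT before source admin-openrc.sh.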

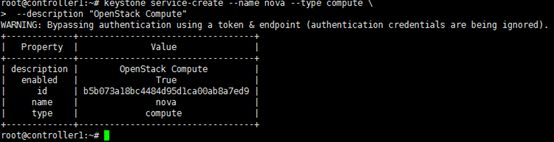

keystone service-create --name nova --type compute \
--description "OpenStack Compute"

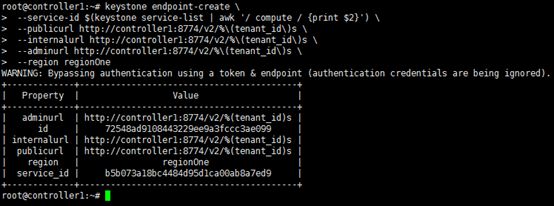

keystone endpoint-create \
--service-id $(keystone service-list | awk '/ compute / {print $2}') \
--publicurl http://controller1:8774/v2/%\(tenant_id\)s \
--internalurl http://controller1:8774/v2/%\(tenant_id\)s \
--adminurl http://controller1:8774/v2/%\(tenant_id\)s \
--region regionOne

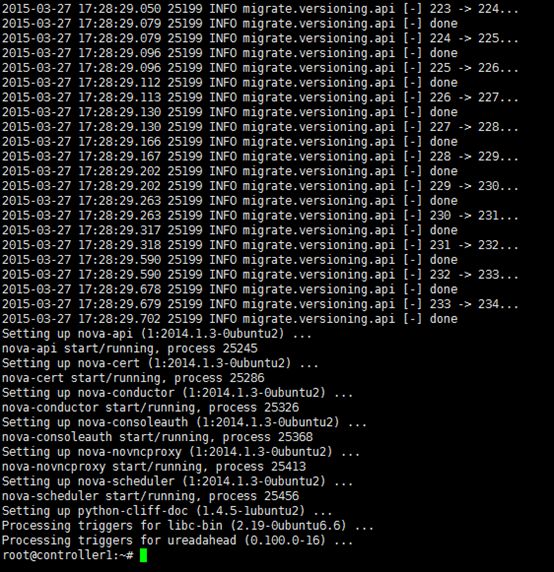
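The backslashes in %\(tenant_id\)s only protect the parentheses from the shell. The sketch below shows that the escaped form and a single-quoted form produce the same literal string. Keystone is then expected to substitute each tenant's ID for %(tenant_id)s when it builds the service catalog. The variable names are illustrative only.

```shell
# The shell strips the backslashes, so keystone receives a literal
# %(tenant_id)s placeholder. Single quotes produce the same string.
escaped=http://controller1:8774/v2/%\(tenant_id\)s
quoted='http://controller1:8774/v2/%(tenant_id)s'
printf '%s\n' "$escaped"
if [ "$escaped" = "$quoted" ]; then
    echo "same string"
fi
```

Either spelling can therefore be used for --publicurl, --internalurl and --adminurl.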

Install and Configure

Install:

apt-get install nova-api nova-cert nova-conductor nova-consoleauth \
nova-novncproxy nova-scheduler python-novaclient

Configure:

vi /etc/nova/nova.conf

[DEFAULT]

my_ip = 10.0.15.11

vncserver_listen = 10.0.15.11

vncserver_proxyclient_address = 10.0.15.11

rpc_backend = rabbit

rabbit_host = controller1

rabbit_password = abcd1234!

auth_strategy = keystone

verbose = True

[keystone_authtoken]

auth_uri = http://controller1:5000/v2.0

#identity_uri = http://controller1:35357

auth_host = 10.0.15.11

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = nova

admin_password = abcd1234!

[database]

connection = mysql://nova:abcd1234!@controller1/nova

[glance]

host = controller1

Populate the database:

su -s /bin/sh -c "nova-manage db sync" nova

Error:

root@controller1:~# nova-manage --debug db sync

No handlers could be found for logger "oslo_config.cfg"

Line 4769 : 2015-04-06 16:06:57.085 24288 ERROR stevedore.extension [-] Could not load 'file': cannot import name util

Line 4752 : 2015-04-06 16:06:57.082 24288 ERROR stevedore.extension [-] cannot import name util

Line 229 : 2015-04-06 14:31:18.620 22694 ERROR oslo_messaging._drivers.common [-] Returning exception Compute host 1 could not be found. to caller

Line 205 : 2015-04-06 14:31:18.618 22694 ERROR oslo_messaging.rpc.dispatcher [-] Exception during message handling: Compute host 1 could not be found.

As a result, the corresponding hosts do not show up in the UI. You can inspect the errors with:

nova-manage logs errors

nova-manage logs syslog

https://wiki.openstack.org/wiki/NovaManage

https://ask.openstack.org/en/question/62200/no-handlers-could-be-found-for-logger-oslo_configcfg/

Restart the services:

service nova-api restart

service nova-cert restart

service nova-consoleauth restart

service nova-scheduler restart

service nova-conductor restart

service nova-novncproxy restart
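You can restart the six controller-side Compute services in one loop. The sketch below is minimal. The function name and the DRY_RUN switch are hypothetical.

```shell
# Hypothetical helper: restart the Compute services installed on controller1.
# With DRY_RUN=1 the commands are only printed.
restart_nova_controller() {
    for svc in nova-api nova-cert nova-consoleauth nova-scheduler \
               nova-conductor nova-novncproxy; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "service $svc restart"
        else
            service "$svc" restart
        fi
    done
}
```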

Delete the default SQLite file:

rm -f /var/lib/nova/nova.sqlite

--------------------------------- Installed up to this point on 3.27 --------------------------------------

2.2.1.6 Network Service

Network service需要在controller1节点和network1节点进行安装和配置。

以下是在controller1节点的安装和配置。

前提条件:

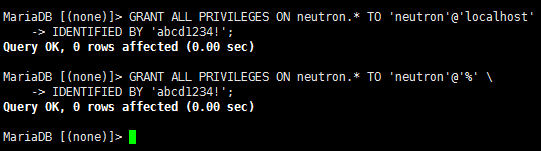

创建neutron数据库

mysql -u root -p

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'abcd1234!';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'abcd1234!';

quit;

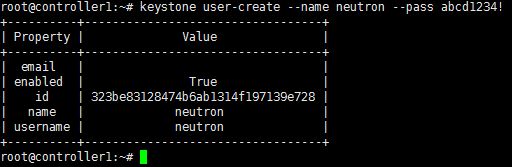

Create the service credentials:

source admin-openrc.sh

keystone user-create --name neutron --pass abcd1234!


keystone user-role-add --user neutron --tenant service --role admin

![]()

keystone service-create --name neutron --type network \

--description "OpenStack Networking"

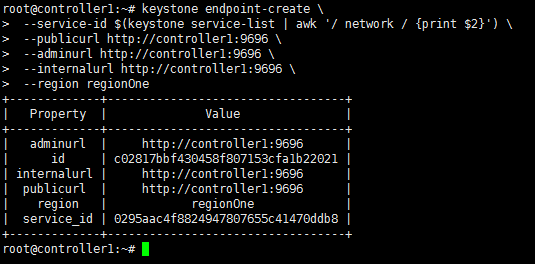

创建API endpoints

keystone endpoint-create \
--service-id $(keystone service-list | awk '/ network / {print $2}') \
--publicurl http://controller1:9696 \
--adminurl http://controller1:9696 \
--internalurl http://controller1:9696 \
--region regionOne

Install and Configure

Install:

apt-get install neutron-server neutron-plugin-ml2 python-neutronclient

Error:

Fetched 1279 kB in 15min 20s (1389 B/s)

E: Failed to fetch http://cn.archive.ubuntu.com/ubuntu/pool/main/m/mako/python-mako_0.9.1-1_all.deb Connection failed [IP: 115.28.122.210 80]

E: Unable to fetch some archives, maybe run apt-get update or try with --fix-missing?

Solution:

Run the install command again.

Configure:

vi /etc/neutron/neutron.conf

[DEFAULT]

verbose = True

#rpc_backend = rabbit

Note: use the following line instead. Otherwise neutron ext-list fails with the error shown below.

rpc_backend = neutron.openstack.common.rpc.impl_kombu

rabbit_host = controller1

rabbit_password = abcd1234!

auth_strategy = keystone

core_plugin = ml2

#service_plugins = router

Change it to:

service_plugins = neutron.services.l3_router.l3_router_plugin.L3RouterPlugin

allow_overlapping_ips = True

notify_nova_on_port_status_changes = True

notify_nova_on_port_data_changes = True

nova_url = http://controller1:8774/v2

nova_admin_auth_url = http://controller1:35357/v2.0

nova_region_name = regionOne

nova_admin_username = nova

#nova_admin_tenant_id = SERVICE_TENANT_ID

nova_admin_tenant_id = 68f607e921a84c70ad20dccb14be7a4e

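Note:

SERVICE_TENANT_ID is the ID of the service tenant. Get it as follows, and use the value printed in your own environment: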

source admin-openrc.sh

keystone tenant-get service

b2564ad802354cb0aebb09013ba3f220

nova_admin_password = abcd1234!

[keystone_authtoken]

auth_uri = http://controller1:5000/v2.0

identity_uri = http://controller1:35357

admin_tenant_name = service

admin_user = neutron

admin_password = abcd1234!

[database]

connection = mysql://neutron:abcd1234!@controller1/neutron
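Instead of copying the service tenant ID by hand, you can extract it from the table that keystone tenant-get prints. The minimal sketch below assumes the usual two-column `| Property | Value |` layout. The function name is hypothetical.

```shell
# Hypothetical helper: read "keystone tenant-get <name>" output on stdin and
# print the value of the "id" row. In a row like "| id | <value> |" awk sees
# $1="|", $2="id", $3="|", $4=<value>.
tenant_id_from_table() {
    awk '$2 == "id" {print $4}'
}
```

Typical use: keystone tenant-get service | tenant_id_from_table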

Configure the Modular Layer 2 (ML2) plug-in:

vi /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,gre

tenant_network_types = gre

mechanism_drivers = openvswitch

[ml2_type_gre]

tunnel_id_ranges = 1:1000

[securitygroup]

enable_security_group = True

enable_ipset = True

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

Configure Compute to use Networking:

vi /etc/nova/nova.conf

[DEFAULT]

network_api_class = nova.network.neutronv2.api.API

security_group_api = neutron

linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[neutron]

url = http://controller1:9696

auth_strategy = keystone

admin_auth_url = http://controller1:35357/v2.0

admin_tenant_name = service

admin_username = neutron

admin_password = abcd1234!

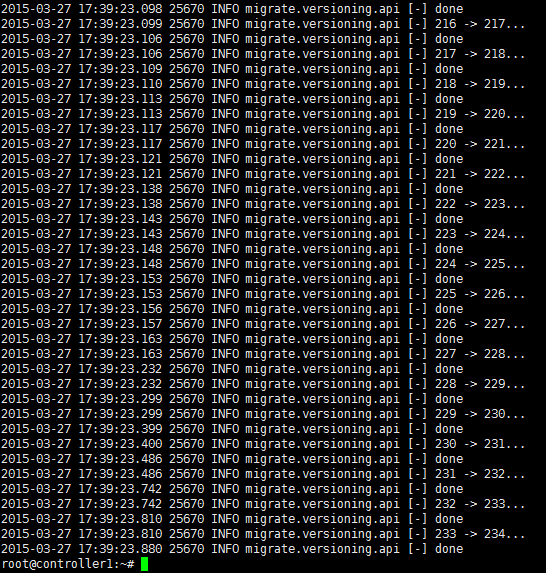

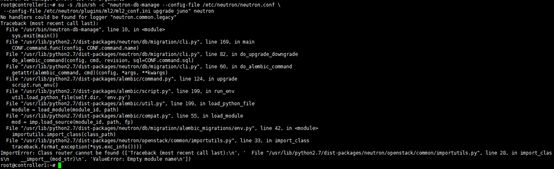

Populate the database:

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade juno" neutron

Error:

No handlers could be found for logger "neutron.common.legacy"

Traceback (most recent call last):

File "/usr/bin/neutron-db-manage", line 10, in

sys.exit(main())

File "/usr/lib/python2.7/dist-packages/neutron/db/migration/cli.py", line 169, in main

CONF.command.func(config, CONF.command.name)

File "/usr/lib/python2.7/dist-packages/neutron/db/migration/cli.py", line 82, in do_upgrade_downgrade

do_alembic_command(config, cmd, revision, sql=CONF.command.sql)

File "/usr/lib/python2.7/dist-packages/neutron/db/migration/cli.py", line 60, in do_alembic_command

getattr(alembic_command, cmd)(config, *args, **kwargs)

File "/usr/lib/python2.7/dist-packages/alembic/command.py", line 124, in upgrade

script.run_env()

File "/usr/lib/python2.7/dist-packages/alembic/script.py", line 199, in run_env

util.load_python_file(self.dir, 'env.py')

File "/usr/lib/python2.7/dist-packages/alembic/util.py", line 199, in load_python_file

module = load_module(module_id, path)

File "/usr/lib/python2.7/dist-packages/alembic/compat.py", line 55, in load_module

mod = imp.load_source(module_id, path, fp)

File "/usr/lib/python2.7/dist-packages/neutron/db/migration/alembic_migrations/env.py", line 42, in

importutils.import_class(class_path)

File "/usr/lib/python2.7/dist-packages/neutron/openstack/common/importutils.py", line 33, in import_class

traceback.format_exception(*sys.exc_info())))

ImportError: Class router cannot be found (['Traceback (most recent call last):\n', ' File "/usr/lib/python2.7/dist-packages/neutron/openstack/common/importutils.py", line 28, in import_class\n __import__(mod_str)\n', 'ValueError: Empty module name\n'])

Solution:

Set service_plugins to the full class path:

neutron.services.l3_router.l3_router_plugin.L3RouterPlugin

After this change, run the command again.

It still fails:

No handlers could be found for logger "neutron.common.legacy"

Traceback (most recent call last):

sqlalchemy.exc.OperationalError: (OperationalError) (1045, "Access denied for user 'neutron'@'controller1' (using password: YES)") None None

Fix:

vi /etc/neutron/neutron.conf

[database]

connection = mysql://neutron:abcd1234!@controller1/neutron

Remove the space from the password.

Run the command again.

Another error:

No handlers could be found for logger "neutron.common.legacy"

INFO [alembic.migration] Context impl MySQLImpl.

INFO [alembic.migration] Will assume non-transactional DDL.

No such revision 'juno'

Solution:

vi /etc/neutron/neutron.conf

core_plugin = neutron.plugins.ml2.plugin.Ml2Plugin

service_plugins = neutron.services.l3_router.l3_router_plugin.L3RouterPlugin

Reference:

https://ask.openstack.org/en/question/62519/installing-neutron-no-handlers-could-be-found-for-logger-neutroncommonlegacy/

Then change the revision in the command from juno to head:

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

The error is resolved.

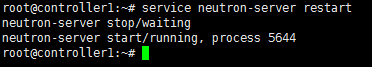

Restart the Compute services:

service nova-api restart

service nova-scheduler restart

service nova-conductor restart

Restart the Networking service:

service neutron-server restart

Verify the installation:

source admin-openrc.sh

neutron ext-list

Error:

unsupported locale setting

Reference:

http://stackoverflow.com/questions/14547631/python-locale-error-unsupported-locale-setting

查看日志:

Vi /var/log/neutron/server.log

2015-03-30 16:45:44.671 18513 TRACE neutron.service raise RuntimeError(msg)

2015-03-30 16:45:44.671 18513 TRACE neutron.service RuntimeError: Unable to load neutron from configuration file /etc/neutron/api-paste.ini.

2015-03-30 16:45:44.679 18513 TRACE neutron.common.config LookupError: No section 'quantum' (prefixed by 'app' or 'application' or 'composite' or 'composit' or

'pipeline' or 'filter-app') fou

nd in config /etc/neutron/api-paste.ini2015-03-30 16:45:44.679 18513 TRACE neutron.common.config

2015-03-30 16:45:44.681 18513 ERROR neutron.service [-] Unrecoverable error: please check log for details.

2015-03-30 16:45:44.681 18513 TRACE neutron.service raise RuntimeError(msg)

2015-03-30 16:45:44.681 18513 TRACE neutron.service RuntimeError: Unable to load quantum from configuration file /etc/neutron/api-paste.ini.

2015-03-30 16:45:45.417 18523 TRACE neutron.common.config LookupError: No section 'quantum' (prefixed by 'app' or 'application' or 'composite' or 'composit' or 'pipeline' or 'filter-app') found in config /etc/neutron/api-paste.ini

2015-03-30 16:45:45.417 18523 TRACE neutron.common.config

2015-03-30 16:45:45.418 18523 ERROR neutron.service [-] Unrecoverable error: please check log for details.

2015-03-30 16:45:45.418 18523 TRACE neutron.service raise RuntimeError(msg)

2015-03-30 16:45:45.418 18523 TRACE neutron.service RuntimeError: Unable to load quantum from configuration file /etc/neutron/api-paste.ini.

Reference:

https://ask.openstack.org/en/question/28431/unable-to-load-quantum-from-configuration-file-etcneutronapi-pasteini-icehouse/

Solution:

vi /etc/neutron/api-paste.ini

[filter:authtoken]

admin_tenant_name = service

admin_user = neutron

admin_password = abcd1234!

Restart the service:

service neutron-server restart

Run the command:

neutron ext-list

Another error appears:

Connection to neutron failed: Maximum attempts reached

Solution:

Edit:

vi /etc/neutron/neutron.conf

rpc_backend = neutron.openstack.common.rpc.impl_kombu


When configuring the metadata agent on the Network1 node, the following must also be configured on the Controller1 node:

Configure the metadata agent settings:

vi /etc/nova/nova.conf

[neutron]

service_metadata_proxy = True

metadata_proxy_shared_secret = METADATA_SECRET
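METADATA_SECRET is a placeholder for a secret you choose. The same value must also be set as metadata_proxy_shared_secret in /etc/neutron/metadata_agent.ini on the Network1 node. One way to generate a random value is sketched below. It assumes /dev/urandom and the head, od and tr commands are available, and gen_secret is a hypothetical helper.

```shell
# Print 10 random bytes as 20 lowercase hex characters.
gen_secret() {
    head -c 10 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

METADATA_SECRET=$(gen_secret)

# Use the same value in both places:
#   controller1: /etc/nova/nova.conf             [neutron] metadata_proxy_shared_secret
#   network1:    /etc/neutron/metadata_agent.ini [DEFAULT] metadata_proxy_shared_secret
printf '[neutron]\nservice_metadata_proxy = True\nmetadata_proxy_shared_secret = %s\n' "$METADATA_SECRET"
```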


Restart the service:

service nova-api restart


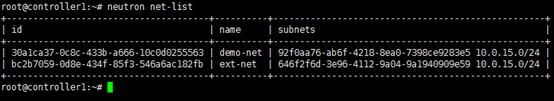

2.2.1.6.2 Create Initial Networks

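Perform the following steps only after the controller1, network1, and compute1 nodes have been configured.

Note:

Because the network layout of our environment differs from the one in the official documentation, the network configuration here may not match the official guide and must be adjusted accordingly.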

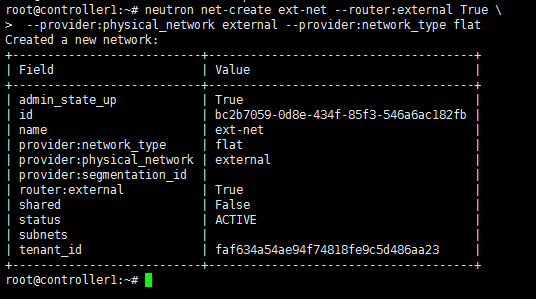

External Network

source admin-openrc.sh

neutron net-create ext-net --router:external True \
--provider:physical_network external --provider:network_type flat

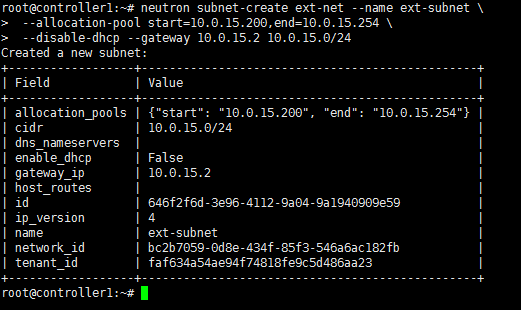

Create the subnet:

neutron subnet-create ext-net --name ext-subnet \
--allocation-pool start=FLOATING_IP_START,end=FLOATING_IP_END \
--disable-dhcp --gateway EXTERNAL_NETWORK_GATEWAY EXTERNAL_NETWORK_CIDR

neutron subnet-create ext-net --name ext-subnet \
--allocation-pool start=192.168.1.200,end=192.168.1.254 \
--disable-dhcp --gateway 192.168.1.1 192.168.1.0/24
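Before creating the external subnet, the chosen values can be sanity-checked locally. The sketch below (hypothetical helper names, IPv4 only) checks that the allocation pool lies inside the CIDR and that the gateway is inside the subnet but outside the pool, which roughly mirrors constraints Neutron itself enforces.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip2int() {
    local IFS=.
    set -- $1
    echo "$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))"
}

# Netmask for a prefix length as an integer, e.g. 24 -> 0xFFFFFF00.
prefix_mask() {
    echo "$(( $1 == 0 ? 0 : (0xFFFFFFFF << (32 - $1)) & 0xFFFFFFFF ))"
}

# check_pool CIDR POOL_START POOL_END GATEWAY
check_pool() {
    local mask base start end gw
    mask=$(prefix_mask "${1#*/}")
    base=$(( $(ip2int "${1%/*}") & mask ))
    start=$(ip2int "$2"); end=$(ip2int "$3"); gw=$(ip2int "$4")
    # Pool boundaries must be inside the subnet and in ascending order.
    [ $(( (start & mask) == base && (end & mask) == base && start <= end )) -eq 1 ] || return 1
    # The gateway must be inside the subnet but not inside the pool.
    [ $(( (gw & mask) == base && (gw < start || gw > end) )) -eq 1 ]
}
```

For example, check_pool 192.168.1.0/24 192.168.1.200 192.168.1.254 192.168.1.1 succeeds for the values used above.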


For example (the values used in the official guide):

neutron subnet-create ext-net --name ext-subnet \
--allocation-pool start=203.0.113.101,end=203.0.113.200 \
--disable-dhcp --gateway 203.0.113.1 203.0.113.0/24

Tenant Network

vi demo-openrc.sh

export OS_TENANT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=abcd1234!

export OS_AUTH_URL=http://controller1:5000/v2.0

source demo-openrc.sh


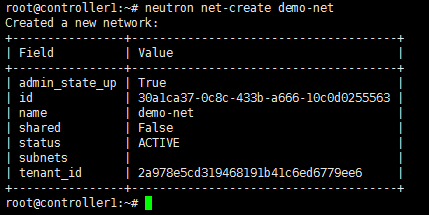

neutron net-create demo-net

Create the subnet:

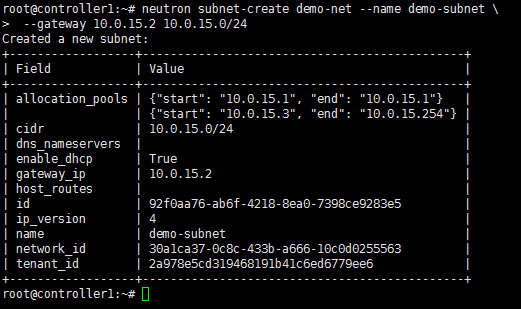

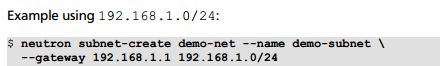

neutron subnet-create demo-net --name demo-subnet \
--gateway TENANT_NETWORK_GATEWAY TENANT_NETWORK_CIDR

neutron subnet-create demo-net --name demo-subnet \
--gateway 192.168.0.1 192.168.0.0/24

neutron subnet-update --name demo-subnet --gateway 192.168.0.1 192.168.0.0/24

Create the router:

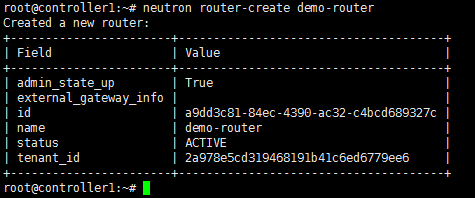

neutron router-create demo-router


neutron router-interface-add demo-router demo-subnet


neutron router-gateway-set demo-router ext-net


An error appears:

400-{u'NeutronError': {u'message': u'Bad router request: Cidr 10.0.15.0/24 of subnet 646f2f6d-3e96-4112-9a04-9a1940909e59 overlaps with cidr 10.0.15.0/24 of subnet 92f0aa76-ab6f-4218-8ea0-7398ce9283e5', u'type': u'BadRequest', u'detail': u''}}

Check the verbose output:

root@controller1:~# neutron -v router-gateway-set demo-router ext-net

DEBUG: neutronclient.neutron.v2_0.router.SetGatewayRouter run(Namespace(disable_snat=False, external_network_id=u'ext-net', request_format='json', router_id=u'demo-router'))

DEBUG: neutronclient.client

REQ: curl -i http://controller1:35357/v2.0/tokens -X POST -H "Content-Type: application/json" -H "Accept: application/json" -H "User-Agent: python-neutronclient" -d '{"auth": {"tenantName": "admin", "passwordCredentials": {"username": "admin", "password": "abcd1234!"}}}'

DEBUG: neutronclient.client RESP:{'status': '200', 'content-length': '2780', 'vary': 'X-Auth-Token', 'date': 'Wed, 01 Apr 2015 06:18:38 GMT', 'content-type': 'application/json', 'x-distribution': 'Ubuntu'} {"access": {"token": {"issued_at": "2015-04-01T06:18:38.282134", "expires": "2015-04-01T07:18:38Z", "id": "118239e5c21848e495ba2c895de32737", "tenant": {"description": "Admin Tenant", "enabled": true, "id": "faf634a54ae94f74818fe9c5d486aa23", "name": "admin"}}, "serviceCatalog": [{"endpoints": [{"adminURL": "http://controller1:8774/v2/faf634a54ae94f74818fe9c5d486aa23", "region": "regionOne", "internalURL": "http://controller1:8774/v2/faf634a54ae94f74818fe9c5d486aa23", "id": "5dbe867ef2d4439c924ed9278fa472f7", "publicURL": "http://controller1:8774/v2/faf634a54ae94f74818fe9c5d486aa23"}], "endpoints_links": [], "type": "compute", "name": "nova"}, {"endpoints": [{"adminURL": "http://controller1:9696", "region": "regionOne", "internalURL": "http://controller1:9696", "id": "0e330a14c019416ba01b2c984152f042", "publicURL": "http://controller1:9696"}], "endpoints_links": [], "type": "network", "name": "neutron"}, {"endpoints": [{"adminURL": "http://controller1:8776/v2/faf634a54ae94f74818fe9c5d486aa23", "region": "regionOne", "internalURL": "http://controller1:8776/v2/faf634a54ae94f74818fe9c5d486aa23", "id": "3f3090539a3149f5b95bb11a45fa58c6", "publicURL": "http://controller1:8776/v2/faf634a54ae94f74818fe9c5d486aa23"}], "endpoints_links": [], "type": "volumev2", "name": "cinderv2"}, {"endpoints": [{"adminURL": "http://controller1:9292", "region": "regionOne", "internalURL": "http://controller1:9292", "id": "84125112850840c781eb7710d3b2ba8a", "publicURL": "http://controller1:9292"}], "endpoints_links": [], "type": "image", "name": "glance"}, {"endpoints": [{"adminURL": "http://controller1:8776/v1/faf634a54ae94f74818fe9c5d486aa23", "region": "regionOne", "internalURL": "http://controller1:8776/v1/faf634a54ae94f74818fe9c5d486aa23", "id": "11c99a62f72a4eec92059e08550dd9f7", "publicURL": "http://controller1:8776/v1/faf634a54ae94f74818fe9c5d486aa23"}], "endpoints_links": [], "type": "volume", "name": "cinder"}, {"endpoints": [{"adminURL": "http://controller1:8080", 
"region": "regionOne", "internalURL": "http://controller1:8080/v1/AUTH_faf634a54ae94f74818fe9c5d486aa23", "id": "46ba3b251b7441359e00452e4e949dc1", "publicURL": "http://controller1:8080/v1/AUTH_faf634a54ae94f74818fe9c5d486aa23"}], "endpoints_links": [], "type": "object-store", "name": "swift"}, {"endpoints": [{"adminURL": "http://controller1:35357/v2.0", "region": "regionOne", "internalURL": "http://controller1:5000/v2.0", "id": "44cd99800c0c4c10be79dd9e5966587f", "publicURL": "http://controller1:5000/v2.0"}], "endpoints_links": [], "type": "identity", "name": "keystone"}], "user": {"username": "admin", "roles_links": [], "id": "8dbc737b432a49f6a8de85131ef1de83", "roles": [{"name": "admin"}], "name": "admin"}, "metadata": {"is_admin": 0, "roles": ["2983ebd3633d4ca59ffb9df654e0d35d"]}}}

DEBUG: neutronclient.client

REQ: curl -i http://controller1:9696/v2.0/routers.json?fields=id&name=demo-router -X GET -H "X-Auth-Token: 118239e5c21848e495ba2c895de32737" -H "Content-Type: application/json" -H "Accept: application/json" -H "User-Agent: python-neutronclient"

DEBUG: neutronclient.client RESP:{'status': '200', 'content-length': '61', 'content-location': 'http://controller1:9696/v2.0/routers.json?fields=id&name=demo-router', 'date': 'Wed, 01 Apr 2015 06:18:38 GMT', 'content-type': 'application/json; charset=UTF-8', 'x-openstack-request-id': 'req-3a8131ab-b6d5-4612-9dca-110ff99bf9b0'} {"routers": [{"id": "a9dd3c81-84ec-4390-ac32-c4bcd689327c"}]}

DEBUG: neutronclient.client

REQ: curl -i http://controller1:9696/v2.0/networks.json?fields=id&name=ext-net -X GET -H "X-Auth-Token: 118239e5c21848e495ba2c895de32737" -H "Content-Type: application/json" -H "Accept: application/json" -H "User-Agent: python-neutronclient"

DEBUG: neutronclient.client RESP:{'status': '200', 'content-length': '62', 'content-location': 'http://controller1:9696/v2.0/networks.json?fields=id&name=ext-net', 'date': 'Wed, 01 Apr 2015 06:18:38 GMT', 'content-type': 'application/json; charset=UTF-8', 'x-openstack-request-id': 'req-f674a694-343e-437a-8d74-2853ac11c0d3'} {"networks": [{"id": "bc2b7059-0d8e-434f-85f3-546a6ac182fb"}]}

DEBUG: neutronclient.client

REQ: curl -i http://controller1:9696/v2.0/routers/a9dd3c81-84ec-4390-ac32-c4bcd689327c.json -X PUT -H "X-Auth-Token: 118239e5c21848e495ba2c895de32737" -H "Content-Type: application/json" -H "Accept: application/json" -H "User-Agent: python-neutronclient" -d '{"router": {"external_gateway_info": {"network_id": "bc2b7059-0d8e-434f-85f3-546a6ac182fb"}}}'

DEBUG: neutronclient.client RESP:{'date': 'Wed, 01 Apr 2015 06:18:38 GMT', 'status': '400', 'content-length': '232', 'content-type': 'application/json; charset=UTF-8', 'x-openstack-request-id': 'req-6db8f506-cc3f-468a-8c90-2fa06143cf2a'} {"NeutronError": {"message": "Bad router request: Cidr 10.0.15.0/24 of subnet 646f2f6d-3e96-4112-9a04-9a1940909e59 overlaps with cidr 10.0.15.0/24 of subnet 92f0aa76-ab6f-4218-8ea0-7398ce9283e5", "type": "BadRequest", "detail": ""}}

DEBUG: neutronclient.v2_0.client Error message: {"NeutronError": {"message": "Bad router request: Cidr 10.0.15.0/24 of subnet 646f2f6d-3e96-4112-9a04-9a1940909e59 overlaps with cidr 10.0.15.0/24 of subnet 92f0aa76-ab6f-4218-8ea0-7398ce9283e5", "type": "BadRequest", "detail": ""}}

ERROR: neutronclient.shell 400-{u'NeutronError': {u'message': u'Bad router request: Cidr 10.0.15.0/24 of subnet 646f2f6d-3e96-4112-9a04-9a1940909e59 overlaps with cidr 10.0.15.0/24 of subnet 92f0aa76-ab6f-4218-8ea0-7398ce9283e5', u'type': u'BadRequest', u'detail': u''}}

Traceback (most recent call last):

File "/usr/lib/python2.7/dist-packages/neutronclient/shell.py", line 526, in run_subcommand

return run_command(cmd, cmd_parser, sub_argv)

File "/usr/lib/python2.7/dist-packages/neutronclient/shell.py", line 79, in run_command

return cmd.run(known_args)

File "/usr/lib/python2.7/dist-packages/neutronclient/neutron/v2_0/router.py", line 205, in run

neutron_client.add_gateway_router(_router_id, router_dict)

File "/usr/lib/python2.7/dist-packages/neutronclient/v2_0/client.py", line 111, in with_params

ret = self.function(instance, *args, **kwargs)

File "/usr/lib/python2.7/dist-packages/neutronclient/v2_0/client.py", line 424, in add_gateway_router

body={'router': {'external_gateway_info': body}})

File "/usr/lib/python2.7/dist-packages/neutronclient/v2_0/client.py", line 1245, in put

headers=headers, params=params)

File "/usr/lib/python2.7/dist-packages/neutronclient/v2_0/client.py", line 1221, in retry_request

headers=headers, params=params)

File "/usr/lib/python2.7/dist-packages/neutronclient/v2_0/client.py", line 1164, in do_request

self._handle_fault_response(status_code, replybody)

File "/usr/lib/python2.7/dist-packages/neutronclient/v2_0/client.py", line 1134, in _handle_fault_response

exception_handler_v20(status_code, des_error_body)

File "/usr/lib/python2.7/dist-packages/neutronclient/v2_0/client.py", line 96, in exception_handler_v20

message=msg)

NeutronClientException: 400-{u'NeutronError': {u'message': u'Bad router request: Cidr 10.0.15.0/24 of subnet 646f2f6d-3e96-4112-9a04-9a1940909e59 overlaps with cidr 10.0.15.0/24 of subnet 92f0aa76-ab6f-4218-8ea0-7398ce9283e5', u'type': u'BadRequest', u'detail': u''}}

DEBUG: neutronclient.shell clean_up SetGatewayRouter

DEBUG: neutronclient.shell Got an error: 400-{u'NeutronError': {u'message': u'Bad router request: Cidr 10.0.15.0/24 of subnet 646f2f6d-3e96-4112-9a04-9a1940909e59 overlaps with cidr 10.0.15.0/24 of subnet 92f0aa76-ab6f-4218-8ea0-7398ce9283e5', u'type': u'BadRequest', u'detail': u''}}

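Solution:

Unresolved.

Neutron rejects the request because the router would end up with two subnets whose CIDRs overlap (both are 10.0.15.0/24). A likely cause is that the current environment has only a single 10.0.15.0/24 segment and every machine can reach the external network directly. The relationships between the different networks need to be understood in detail and sorted out.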
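The overlap Neutron reports can be reproduced offline. Two IPv4 prefixes overlap exactly when they agree on the bits of the shorter prefix. The sketch below (hypothetical helper names) applies that rule, so subnet CIDRs, for example as listed by neutron subnet-list, can be compared before they are attached to the same router.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip2int() {
    local IFS=.
    set -- $1
    echo "$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))"
}

# Netmask for a prefix length as an integer, e.g. 24 -> 0xFFFFFF00.
prefix_mask() {
    echo "$(( $1 == 0 ? 0 : (0xFFFFFFFF << (32 - $1)) & 0xFFFFFFFF ))"
}

# cidr_overlap A/LEN B/LEN: succeeds if the two IPv4 CIDRs share any address.
cidr_overlap() {
    local alen blen len mask
    alen=${1#*/}; blen=${2#*/}
    # Compare both network addresses under the shorter (wider) prefix.
    len=$(( alen < blen ? alen : blen ))
    mask=$(prefix_mask "$len")
    [ $(( $(ip2int "${1%/*}") & mask )) -eq $(( $(ip2int "${2%/*}") & mask )) ]
}
```

For example, cidr_overlap 10.0.15.0/24 10.0.15.0/24 succeeds (the case in the error above), while cidr_overlap 192.168.0.0/24 192.168.1.0/24 fails.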

Verify Network Connectivity

Ping the tenant router gateway:

2.2.1.7 Dashboard

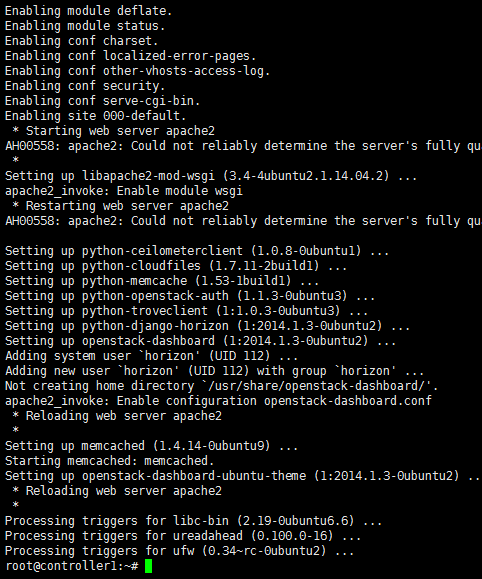

Install:

apt-get install openstack-dashboard apache2 libapache2-mod-wsgi memcached python-memcache

Configure:

vi /etc/openstack-dashboard/local_settings.py

OPENSTACK_HOST = "controller1"

ALLOWED_HOSTS = ['*']

CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': '127.0.0.1:11211',
    }
}

TIME_ZONE = "UTC"

Restart the service:

service apache2 restart

The following message appears:

AH00558: apache2: Could not reliably determine the server's fully qualified domain name, using 10.0.15.11. Set the 'ServerName' directive globally to suppress this message

This message can be ignored.

service memcached restart

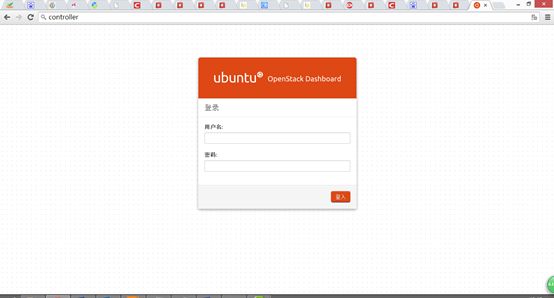

Verify the installation:

Open:

http://controller1/horizon

User: admin or demo

Password: abcd1234!

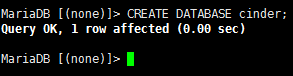

2.2.1.8 Block Storage

The following installation and configuration steps are performed on the controller1 node.

Prerequisites:

Create the database:

mysql -u root -p


CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'abcd1234!';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'abcd1234!';

quit;
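The same CREATE DATABASE and GRANT statements are repeated for every service. A small generator can print them so they can be piped into the MySQL client. It is sketched below as a hypothetical helper that assumes the password contains no single quote.

```shell
# db_sql SERVICE PASSWORD: print the SQL that creates SERVICE's database
# and grants the SERVICE user access from localhost and from any host.
db_sql() {
    svc=$1 pass=$2
    cat <<EOF
CREATE DATABASE IF NOT EXISTS ${svc};
GRANT ALL PRIVILEGES ON ${svc}.* TO '${svc}'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON ${svc}.* TO '${svc}'@'%' IDENTIFIED BY '${pass}';
EOF
}
```

Usage: db_sql cinder 'abcd1234!' | mysql -u root -p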


source admin-openrc.sh


keystone user-create --name cinder --pass abcd1234!


keystone user-role-add --user cinder --tenant service --role admin


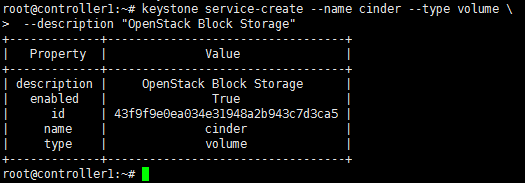

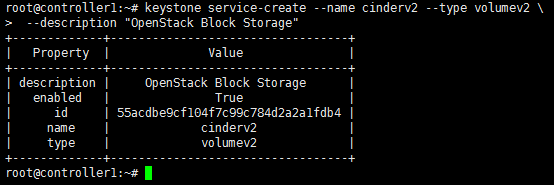

keystone service-create --name cinder --type volume \
--description "OpenStack Block Storage"

keystone service-create --name cinderv2 --type volumev2 \
--description "OpenStack Block Storage"

keystone endpoint-create \
--service-id $(keystone service-list | awk '/ volume / {print $2}') \
--publicurl http://controller1:8776/v1/%\(tenant_id\)s \
--internalurl http://controller1:8776/v1/%\(tenant_id\)s \
--adminurl http://controller1:8776/v1/%\(tenant_id\)s \
--region regionOne

keystone endpoint-create \
--service-id $(keystone service-list | awk '/ volumev2 / {print $2}') \
--publicurl http://controller1:8776/v2/%\(tenant_id\)s \
--internalurl http://controller1:8776/v2/%\(tenant_id\)s \
--adminurl http://controller1:8776/v2/%\(tenant_id\)s \
--region regionOne
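The backslashes in %\(tenant_id\)s only stop the shell from treating the parentheses as syntax. Keystone stores the literal template %(tenant_id)s and fills in the tenant ID when it builds the service catalog; compare the cinder endpoint URLs in the neutron -v debug output earlier. A quick way to see what the shell actually passes, with single quotes (as in the swift endpoint command) as an equivalent alternative:

```shell
# Backslash-escaped form, as in the endpoint-create commands above.
url=http://controller1:8776/v1/%\(tenant_id\)s
# Single-quoted form: identical result, no escaping needed.
quoted='http://controller1:8776/v1/%(tenant_id)s'

printf '%s\n' "$url"
printf '%s\n' "$quoted"
```

Both lines print http://controller1:8776/v1/%(tenant_id)s.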

Install:

apt-get install cinder-api cinder-scheduler python-cinderclient

Configure:

vi /etc/cinder/cinder.conf

[database]

connection = mysql://cinder:abcd1234!@controller1/cinder

[DEFAULT]

verbose = True

my_ip = 10.0.15.11

rpc_backend = cinder.openstack.common.rpc.impl_kombu

rabbit_host = controller1

rabbit_password = abcd1234!

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller1:5000/v2.0

identity_uri = http://controller1:35357

admin_tenant_name = service

admin_user = cinder

admin_password = abcd1234!

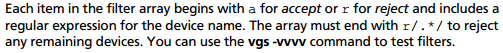

Populate the database:

su -s /bin/sh -c "cinder-manage db sync" cinder

An error appears:

from oslo import messaging

Can't import module messaging

Solution:

pip install --upgrade oslo.messaging

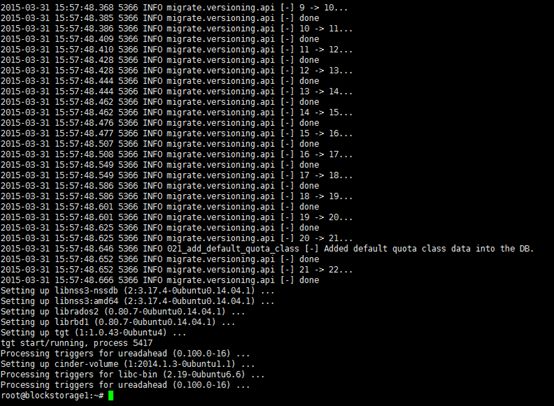

Restart the services:

service cinder-scheduler restart

service cinder-api restart

Delete the default SQLite database file:

rm -f /var/lib/cinder/cinder.sqlite

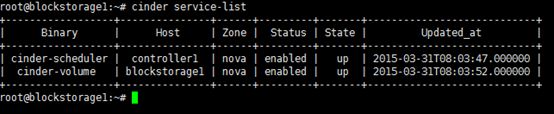


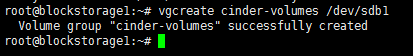

When this is done, switch to the BlockStorage1 node and continue the installation there.

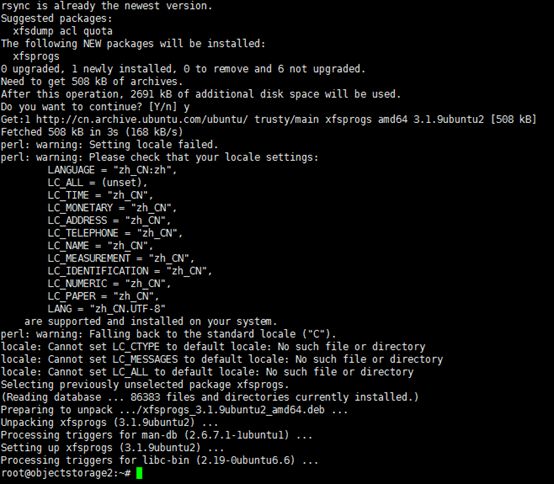

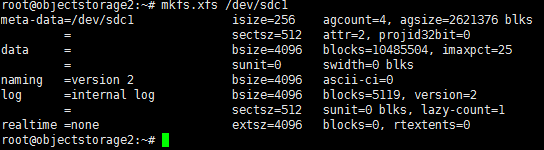

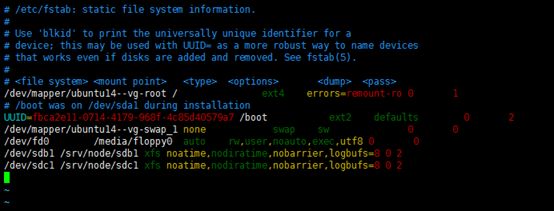

2.2.1.9 Object Storage

Object Storage does not use an SQL database.

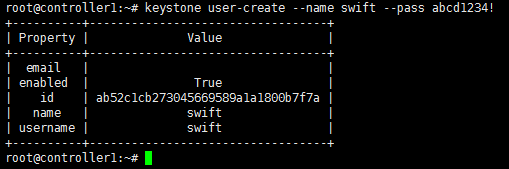

keystone user-create --name swift --pass abcd1234!


keystone user-role-add --user swift --tenant service --role admin


keystone service-create --name swift --type object-store \
--description "OpenStack Object Storage"

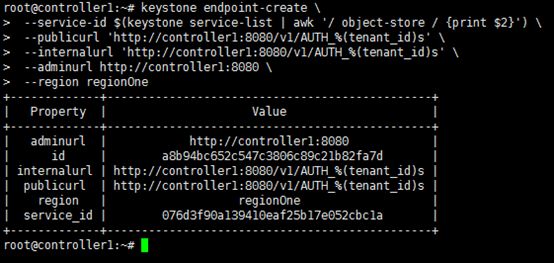

keystone endpoint-create \
--service-id $(keystone service-list | awk '/ object-store / {print $2}') \
--publicurl 'http://controller1:8080/v1/AUTH_%(tenant_id)s' \
--internalurl 'http://controller1:8080/v1/AUTH_%(tenant_id)s' \
--adminurl http://controller1:8080 \
--region regionOne

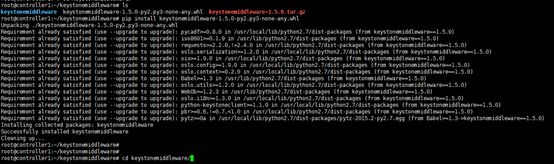

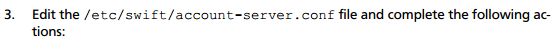

apt-get install swift swift-proxy python-swiftclient python-keystoneclient \
python-keystonemiddleware memcached

This fails with:

Unable to locate package python-keystonemiddleware

Solution:

Download the keystonemiddleware wheel (.whl) file from https://pypi.python.org/pypi/keystonemiddleware/ and install it with:

pip install keystonemiddleware-1.5.0-py2.py3-none-any.whl

service swift-proxy restart


mkdir /etc/swift


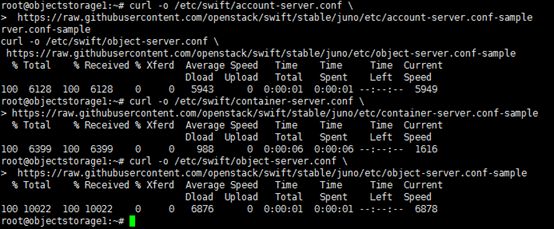

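curl -o /etc/swift/proxy-server.conf \
https://raw.githubusercontent.com/openstack/swift/stable/juno/etc/proxyserver.conf-sample

An error appears:

The URL was not found. Note that the sample file in the Swift repository is named proxy-server.conf-sample (with a hyphen), so the file name in this URL is misspelled.

Replace the command above with:

curl -o /etc/swift/proxy-server.conf \
https://raw.githubusercontent.com/openstack/swift/master/etc/proxy-server.conf-sample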


vi /etc/swift/proxy-server.conf

[DEFAULT]

bind_port = 8080

user = swift

swift_dir = /etc/swift

[pipeline:main]

pipeline = authtoken cache healthcheck keystoneauth proxy-logging proxy-server

[app:proxy-server]

allow_account_management = true

account_autocreate = true

[filter:keystoneauth]

use = egg:swift#keystoneauth

operator_roles = admin,_member_

[filter:authtoken]

paste.filter_factory = keystonemiddleware.auth_token:filter_factory

auth_uri = http://controller1:5000/v2.0

identity_uri = http://controller1:35357

admin_tenant_name = service

admin_user = swift

admin_password = abcd1234!

delay_auth_decision = true

[filter:cache]

memcache_servers = 127.0.0.1:11211
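Every name on the pipeline line needs a matching [filter:NAME] or [app:NAME] section with a loader, that is, a use = or paste.*_factory = line. The log below shows Swift failing with "No loader given in section 'app:proxy-server'" when one is missing. In the Swift sample file, that section's loader is use = egg:swift#proxy. A rough pre-flight check is sketched below as a hypothetical awk-based helper. It assumes the pipeline fits on a single line.

```shell
# check_pipeline FILE: report pipeline entries of [pipeline:main] that have
# no [filter:NAME] or [app:NAME] section with a "use =" or
# "paste.*_factory =" line. Exit status 1 if any entry is missing one.
check_pipeline() {
    awk '
        BEGIN { n = 0; bad = 0 }
        /^[ \t]*\[/ {
            sect = $0
            sub(/^[ \t]*\[/, "", sect); sub(/\][ \t]*$/, "", sect)
            next
        }
        sect == "pipeline:main" && /^[ \t]*pipeline[ \t]*=/ {
            line = $0; sub(/^[^=]*=/, "", line)
            n = split(line, names, " ")
        }
        /^[ \t]*(use|paste\.[a-z_]+_factory)[ \t]*=/ { loader[sect] = 1 }
        END {
            for (i = 1; i <= n; i++) {
                f = "filter:" names[i]; a = "app:" names[i]
                if (!(f in loader) && !(a in loader)) {
                    print "no loader for pipeline entry: " names[i]
                    bad = 1
                }
            }
            exit bad
        }
    ' "$1"
}
```

Usage: check_pipeline /etc/swift/proxy-server.conf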


service swift-proxy restart

An error appears:

Check the log:

root@controller1:~# cat /var/log/upstart/swift-proxy.log

Starting proxy-server...(/etc/swift/proxy-server.conf)

Error trying to load config from /etc/swift/proxy-server.conf: No loader given in section 'app:proxy-server'

Signal proxy-server pid: 28708 signal: 15

No proxy-server running

Starting proxy-server...(/etc/swift/proxy-server.conf)

Traceback (most recent call last):

File "/usr/bin/swift-proxy-server", line 23, in

sys.exit(run_wsgi(conf_file, 'proxy-server', default_port=8080, **options))

File "/usr/lib/python2.7/dist-packages/swift/common/wsgi.py", line 389, in run_wsgi

loadapp(conf_path, global_conf=global_conf)

File "/usr/lib/python2.7/dist-packages/swift/common/wsgi.py", line 316, in loadapp

ctx = loadcontext(loadwsgi.APP, conf_file, global_conf=global_conf)

File "/usr/lib/python2.7/dist-packages/swift/common/wsgi.py", line 307, in loadcontext

global_conf=global_conf)

File "/usr/lib/python2.7/dist-packages/paste/deploy/loadwsgi.py", line 296, in loadcontext

global_conf=global_conf)

File "/usr/lib/python2.7/dist-packages/paste/deploy/loadwsgi.py", line 320, in _loadconfig

return loader.get_context(object_type, name, global_conf)

File "/usr/lib/python2.7/dist-packages/swift/common/wsgi.py", line 59, in get_context

object_type, name=name, global_conf=global_conf)

File "/usr/lib/python2.7/dist-packages/paste/deploy/loadwsgi.py", line 450, in get_context

global_additions=global_additions)

File "/usr/lib/python2.7/dist-packages/paste/deploy/loadwsgi.py", line 562, in _pipeline_app_context

for name in pipeline[:-1]]

File "/usr/lib/python2.7/dist-packages/swift/common/wsgi.py", line 59, in get_context

object_type, name=name, global_conf=global_conf)

File "/usr/lib/python2.7/dist-packages/paste/deploy/loadwsgi.py", line 458, in get_context

section)

File "/usr/lib/python2.7/dist-packages/paste/deploy/loadwsgi.py", line 517, in _context_from_explicit

value = import_string(found_expr)

File "/usr/lib/python2.7/dist-packages/paste/deploy/loadwsgi.py", line 22, in import_string

return pkg_resources.EntryPoint.parse("x=" + s).load(False)

File "/usr/lib/python2.7/dist-packages/pkg_resources.py", line 2088, in load

entry = __import__(self.module_name, globals(),globals(), ['__name__'])

ImportError: No module named keystonemiddleware.auth_token

Signal proxy-server pid: 29710 signal: 15

No proxy-server running


Solution:

Download the keystonemiddleware wheel (.whl) file from https://pypi.python.org/pypi/keystonemiddleware/ and install it with:

pip install keystonemiddleware-1.5.0-py2.py3-none-any.whl

If pip is not installed:

apt-get install python-pip

Check the log again:

root@controller1:/etc/swift# cat /var/log/upstart/swift-proxy.log

No proxy-server running

No proxy-server running

No proxy-server running

No proxy-server running

Starting proxy-server...(/etc/swift/proxy-server.conf)

Error: [swift-hash]: both swift_hash_path_suffix and swift_hash_path_prefix are missing from /etc/swift/swift.conf

Signal proxy-server pid: 29930 signal: 15

No proxy-server running

Starting proxy-server...(/etc/swift/proxy-server.conf)

Error: [swift-hash]: both swift_hash_path_suffix and swift_hash_path_prefix are missing from /etc/swift/swift.conf

Signal proxy-server pid: 29948 signal: 15

No proxy-server running

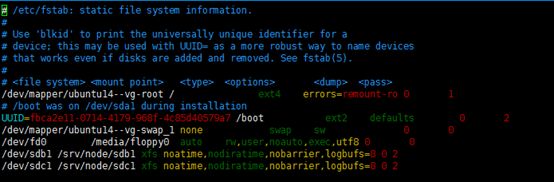

Switch to the ObjectStorage1 and ObjectStorage2 nodes and install them.

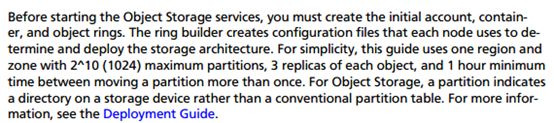

2.2.1.9.2 Create Initial Rings

Account Ring

Perform the following on the controller1 node.

cd /etc/swift

swift-ring-builder account.builder create 10 3 1

swift-ring-builder account.builder \
add r1z1-STORAGE_NODE_MANAGEMENT_INTERFACE_IP_ADDRESS:6002/DEVICE_NAME DEVICE_WEIGHT

swift-ring-builder account.builder \
add r1z1-10.0.15.51:6002/sdb1 40

swift-ring-builder account.builder \
add r1z1-10.0.15.52:6002/sdb1 40

swift-ring-builder account.builder

swift-ring-builder account.builder rebalance

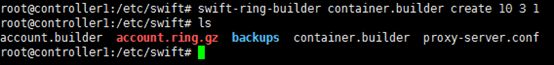

Container Ring

Perform the following on the controller1 node.

cd /etc/swift

swift-ring-builder container.builder create 10 3 1

swift-ring-builder container.builder \
add r1z1-STORAGE_NODE_MANAGEMENT_INTERFACE_IP_ADDRESS:6001/DEVICE_NAME DEVICE_WEIGHT

swift-ring-builder container.builder \
add r1z1-10.0.15.51:6001/sdb1 40

swift-ring-builder container.builder \
add r1z1-10.0.15.52:6001/sdb1 40

swift-ring-builder container.builder

swift-ring-builder container.builder rebalance

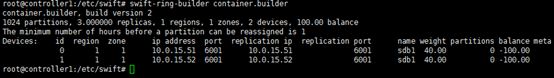

Object Ring

Perform the following on the controller1 node.

cd /etc/swift

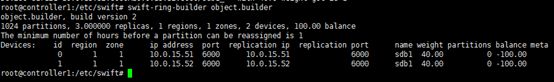

swift-ring-builder object.builder create 10 3 1


swift-ring-builder object.builder \
add r1z1-STORAGE_NODE_MANAGEMENT_INTERFACE_IP_ADDRESS:6000/DEVICE_NAME DEVICE_WEIGHT

swift-ring-builder object.builder \
add r1z1-10.0.15.51:6000/sdb1 40

swift-ring-builder object.builder \
add r1z1-10.0.15.52:6000/sdb1 40

swift-ring-builder object.builder

swift-ring-builder object.builder rebalance
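swift-ring-builder BUILDER create 10 3 1 takes the partition power (2^10 = 1024 partitions), the replica count, and the minimum number of hours before a partition may be moved again. Because the three rings differ only in builder name and port, the commands above can be generated with a loop. The sketch below, a hypothetical helper, only prints them, so the output can be reviewed before it is run in /etc/swift.

```shell
# print_ring_cmds NODE_IP...: print the swift-ring-builder commands for the
# account (6002), container (6001) and object (6000) rings, adding one
# sdb1 device with weight 40 per storage node.
print_ring_cmds() {
    for spec in account:6002 container:6001 object:6000; do
        ring=${spec%%:*}
        port=${spec#*:}
        echo "swift-ring-builder ${ring}.builder create 10 3 1"
        for ip in "$@"; do
            echo "swift-ring-builder ${ring}.builder add r1z1-${ip}:${port}/sdb1 40"
        done
        echo "swift-ring-builder ${ring}.builder rebalance"
    done
}
```

Usage: print_ring_cmds 10.0.15.51 10.0.15.52 > make-rings.sh, then review the file and run sh make-rings.sh in /etc/swift.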


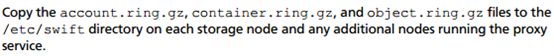

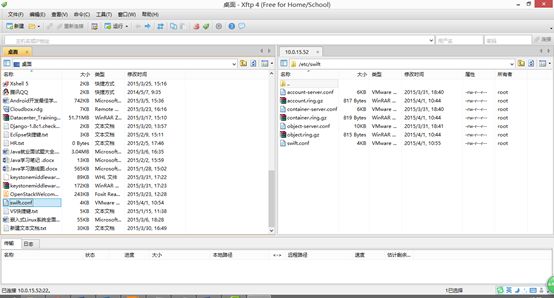

Use the Xshell + Xftp tools to transfer the files between nodes.
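As a command-line alternative to Xftp, the ring files produced by rebalance (account.ring.gz, container.ring.gz, object.ring.gz) could be copied to /etc/swift on each storage node with scp. This assumes root SSH access from controller1 to the storage nodes. A hypothetical helper with a dry-run switch:

```shell
# push_rings HOST...: copy the three ring files to /etc/swift on each host.
# With DRY_RUN set to a non-empty value, only print the scp commands.
push_rings() {
    for host in "$@"; do
        ${DRY_RUN:+echo} scp /etc/swift/account.ring.gz /etc/swift/container.ring.gz \
            /etc/swift/object.ring.gz "root@${host}:/etc/swift/"
    done
}
```

Usage: DRY_RUN=1 push_rings 10.0.15.51 10.0.15.52 to preview, then push_rings 10.0.15.51 10.0.15.52 to copy.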

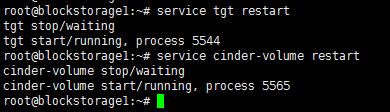

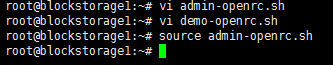

Finalize Installation

Perform the following on the controller1 node.

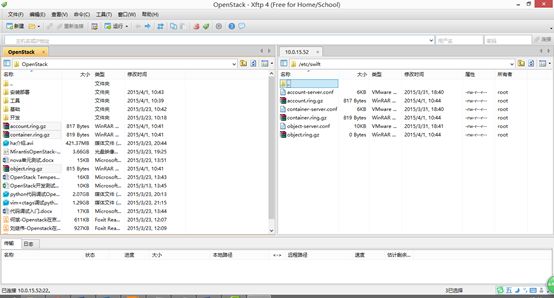

curl -o /etc/swift/swift.conf \
https://raw.githubusercontent.com/openstack/swift/stable/juno/etc/swift.conf-sample

vi /etc/swift/swift.conf

[swift-hash]

swift_hash_path_suffix = abcd1234!

swift_hash_path_prefix = abcd1234!

[storage-policy:0]

name = Policy-0

default = yes
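Reusing the shared password as the hash prefix and suffix works. However, these values act as a salt for object placement, must be identical on every node, and should not be changed once the cluster holds data, so a random value is the more usual choice. The sketch below writes such a file. Its helper names are hypothetical, and it assumes /dev/urandom, od and tr are available.

```shell
# gen_hex N: print N random bytes as 2*N lowercase hex characters.
gen_hex() { head -c "$1" /dev/urandom | od -An -tx1 | tr -d ' \n'; }

# write_swift_conf FILE: write a minimal swift.conf with a random hash
# prefix and suffix and a single default storage policy.
write_swift_conf() {
    cat > "$1" <<EOF
[swift-hash]
swift_hash_path_suffix = $(gen_hex 10)
swift_hash_path_prefix = $(gen_hex 10)

[storage-policy:0]
name = Policy-0
default = yes
EOF
}
```

Generate the file once, then copy that same file to every node rather than running the helper on each node.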

Copy swift.conf to /etc/swift on the other nodes using the Xshell + Xftp tools.

chown -R swift:swift /etc/swift

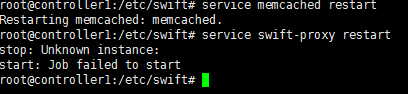

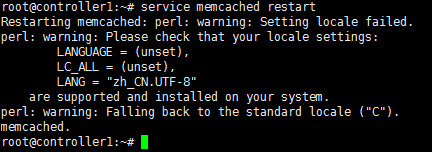

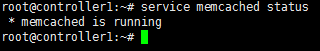

service memcached restart

service swift-proxy restart

The following warning appears:

Restarting memcached: perl: warning: Setting locale failed.

perl: warning: Please check that your locale settings:

LANGUAGE = (unset),

LC_ALL = (unset),

LANG = "zh_CN.UTF-8"

are supported and installed on your system.

perl: warning: Falling back to the standard locale ("C").

memcached.

Solution:

export LANGUAGE=en_US.UTF-8

export LANG=en_US.UTF-8

export LC_ALL=en_US.UTF-8

locale-gen en_US.UTF-8

dpkg-reconfigure locales

The services now run normally:

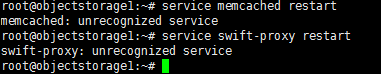

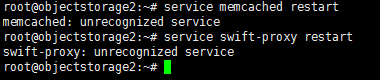

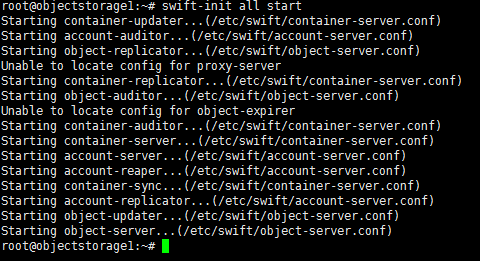

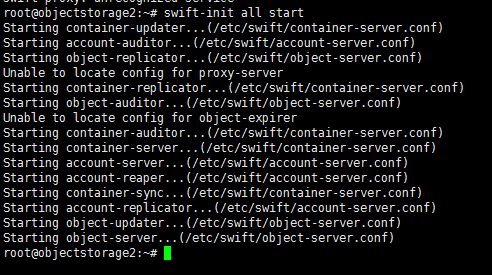

Perform the following on the ObjectStorage1 and ObjectStorage2 nodes.

swift-init all start

An error appears:

Unable to locate config for object-expirer

Unable to locate config for proxy-server

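Solution:

Unresolved. These messages may simply mean that the storage nodes have no proxy-server or object-expirer configuration, since swift-init all attempts to start every Swift server type.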

Verify Installation

Perform the following on the controller1 node.

source demo-openrc.sh

swift stat

An error appears:

HTTPConnectionPool(host='controller1', port=8080): Max retries exceeded with url: /v1/AUTH_2a978e5cd319468191b41c6ed6779ee6 (Caused by

Check the debug output:

root@controller1:/var/log# swift --debug stat

DEBUG:keystoneclient.session:REQ: curl -i -X POST http://controller1:5000/v2.0/tokens -H "Content-Type: application/json" -H "User-Agent: python-keystoneclient" -d '{"auth": {"tenantName": "demo", "passwordCredentials": {"username": "demo", "password": "abcd1234!"}}}'

INFO:urllib3.connectionpool:Starting new HTTP connection (1): controller1

DEBUG:urllib3.connectionpool:Setting read timeout to None

DEBUG:urllib3.connectionpool:"POST /v2.0/tokens HTTP/1.1" 200 2779

DEBUG:keystoneclient.session:RESP: [200] CaseInsensitiveDict({'date': 'Wed, 01 Apr 2015 03:22:05 GMT', 'vary': 'X-Auth-Token', 'content-length': '2779', 'content-type': 'application/json', 'x-distribution': 'Ubuntu'})

RESP BODY: {"access": {"token": {"issued_at": "2015-04-01T03:22:05.347112", "expires": "2015-04-01T04:22:05Z", "id": "a59ba4cab68f40ebb616998524d9c147", "tenant": {"description": "Demo Tenant", "enabled": true, "id": "2a978e5cd319468191b41c6ed6779ee6", "name": "demo"}}, "serviceCatalog": [{"endpoints": [{"adminURL": "http://controller1:8774/v2/2a978e5cd319468191b41c6ed6779ee6", "region": "regionOne", "internalURL": "http://controller1:8774/v2/2a978e5cd319468191b41c6ed6779ee6", "id": "5dbe867ef2d4439c924ed9278fa472f7", "publicURL": "http://controller1:8774/v2/2a978e5cd319468191b41c6ed6779ee6"}], "endpoints_links": [], "type": "compute", "name": "nova"}, {"endpoints": [{"adminURL": "http://controller1:9696", "region": "regionOne", "internalURL": "http://controller1:9696", "id": "0e330a14c019416ba01b2c984152f042", "publicURL": "http://controller1:9696"}], "endpoints_links": [], "type": "network", "name": "neutron"}, {"endpoints": [{"adminURL": "http://controller1:8776/v2/2a978e5cd319468191b41c6ed6779ee6", "region": "regionOne", "internalURL": "http://controller1:8776/v2/2a978e5cd319468191b41c6ed6779ee6", "id": "3f3090539a3149f5b95bb11a45fa58c6", "publicURL": "http://controller1:8776/v2/2a978e5cd319468191b41c6ed6779ee6"}], "endpoints_links": [], "type": "volumev2", "name": "cinderv2"}, {"endpoints": [{"adminURL": "http://controller1:9292", "region": "regionOne", "internalURL": "http://controller1:9292", "id": "84125112850840c781eb7710d3b2ba8a", "publicURL": "http://controller1:9292"}], "endpoints_links": [], "type": "image", "name": "glance"}, {"endpoints": [{"adminURL": "http://controller1:8776/v1/2a978e5cd319468191b41c6ed6779ee6", "region": "regionOne", "internalURL": "http://controller1:8776/v1/2a978e5cd319468191b41c6ed6779ee6", "id": "11c99a62f72a4eec92059e08550dd9f7", "publicURL": "http://controller1:8776/v1/2a978e5cd319468191b41c6ed6779ee6"}], "endpoints_links": [], "type": "volume", "name": "cinder"}, {"endpoints": [{"adminURL": "http://controller1:8080", "region": "regionOne", "internalURL": "http://controller1:8080/v1/AUTH_2a978e5cd319468191b41c6ed6779ee6", "id": "46ba3b251b7441359e00452e4e949dc1", "publicURL": 
"http://controller1:8080/v1/AUTH_2a978e5cd319468191b41c6ed6779ee6"}], "endpoints_links": [], "type": "object-store", "name": "swift"}, {"endpoints": [{"adminURL": "http://controller1:35357/v2.0", "region": "regionOne", "internalURL": "http://controller1:5000/v2.0", "id": "44cd99800c0c4c10be79dd9e5966587f", "publicURL": "http://controller1:5000/v2.0"}], "endpoints_links": [], "type": "identity", "name": "keystone"}], "user": {"username": "demo", "roles_links": [], "id": "f323fef83c534306bc5435603ee815e6", "roles": [{"name": "_member_"}], "name": "demo"}, "metadata": {"is_admin": 0, "roles": ["9fe2ff9ee4384b1894a90878d3e92bab"]}}}

DEBUG:iso8601.iso8601:Parsed 2015-04-01T04:22:05Z into {'tz_sign': None, 'second_fraction': None, 'hour': u'04', 'daydash': u'01', 'tz_hour': None, 'month': None, 'timezone': u'Z', 'second': u'05', 'tz_minute': None, 'year': u'2015', 'separator': u'T', 'monthdash': u'04', 'day': None, 'minute': u'22'} with default timezone

DEBUG:iso8601.iso8601:Got u'04' for 'monthdash' with default 1

DEBUG:iso8601.iso8601:Got 4 for 'month' with default 4

DEBUG:iso8601.iso8601:Got u'01' for 'daydash' with default 1

DEBUG:iso8601.iso8601:Got 1 for 'day' with default 1

DEBUG:iso8601.iso8601:Got u'04' for 'hour' with default None

DEBUG:iso8601.iso8601:Got u'22' for 'minute' with default None

DEBUG:iso8601.iso8601:Got u'05' for 'second' with default None

INFO:urllib3.connectionpool:Starting new HTTP connection (1): controller1

INFO:urllib3.connectionpool:Starting new HTTP connection (1): controller1

INFO:urllib3.connectionpool:Starting new HTTP connection (1): controller1

INFO:urllib3.connectionpool:Starting new HTTP connection (1): controller1

INFO:urllib3.connectionpool:Starting new HTTP connection (1): controller1

INFO:urllib3.connectionpool:Starting new HTTP connection (1): controller1

ERROR:swiftclient:HTTPConnectionPool(host='controller1', port=8080): Max retries exceeded with url: /v1/AUTH_2a978e5cd319468191b41c6ed6779ee6 (Caused by [...] Connection refused)

Traceback (most recent call last):

File "/usr/lib/python2.7/dist-packages/swiftclient/client.py", line 1189, in _retry

rv = func(self.url, self.token, *args, **kwargs)

File "/usr/lib/python2.7/dist-packages/swiftclient/client.py", line 460, in head_account

conn.request(method, parsed.path, '', headers)

File "/usr/lib/python2.7/dist-packages/swiftclient/client.py", line 188, in request

files=files, **self.requests_args)

File "/usr/lib/python2.7/dist-packages/swiftclient/client.py", line 177, in _request

return requests.request(*arg, **kwarg)

File "/usr/lib/python2.7/dist-packages/requests/api.py", line 44, in request

return session.request(method=method, url=url, **kwargs)

File "/usr/lib/python2.7/dist-packages/requests/sessions.py", line 455, in request

resp = self.send(prep, **send_kwargs)

File "/usr/lib/python2.7/dist-packages/requests/sessions.py", line 558, in send

r = adapter.send(request, **kwargs)

File "/usr/lib/python2.7/dist-packages/requests/adapters.py", line 378, in send

raise ConnectionError(e)

ConnectionError: HTTPConnectionPool(host='controller1', port=8080): Max retries exceeded with url: /v1/AUTH_2a978e5cd319468191b41c6ed6779ee6 (Caused by [...] Connection refused)

HTTPConnectionPool(host='controller1', port=8080): Max retries exceeded with url: /v1/AUTH_2a978e5cd319468191b41c6ed6779ee6 (Caused by

root@controller1:/var/log#
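"Connection refused" usually means that controller1 was reachable but no process was accepting TCP connections on port 8080, for example because the Swift proxy server failed to start. Before digging into configuration files, it can help to confirm that directly. The following is a minimal Python 3 sketch using only the standard library; the helper name port_is_open is hypothetical and not part of any OpenStack tool.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to (host, port) can be established."""
    try:
        # create_connection resolves the name and tries each returned address
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timeouts and name-resolution errors all land here
        return False
```

For example, port_is_open("controller1", 8080) should return True once the Swift proxy is listening; the same check works for the Keystone admin endpoint on port 35357.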

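Solution:

Once the problems above were fixed, this error went away as well. The root cause was mainly the configuration file /etc/swift/proxy-server.conf.

Everything now runs normally.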

swift upload demo-container1 admin-openrc.sh

swift list

swift download demo-container1 admin-openrc.sh

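2.2.2 Deploying the Compute1 Node

2.2.2.1 Modifying the NTP Configuration File

The NTP service was already installed while setting up the base environment, so here it only needs to be configured.

Configure: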

vi /etc/ntp.conf

server controller1 iburst

Restart:

service ntp restart
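The Juno installation guide also asks you to comment out or remove the other server entries in /etc/ntp.conf, so that the node synchronizes only with the controller. As a hedged sketch, that edit can be expressed as a small Python 3 function working on the file's text; the function name point_ntp_at is hypothetical, and writing the result back to /etc/ntp.conf (as root) is left to the reader.

```python
def point_ntp_at(conf_text, server="controller1"):
    """Comment out existing 'server' lines and append one for the given host."""
    target = "server {0} iburst".format(server)
    out = []
    for line in conf_text.splitlines():
        if line.strip() == target:
            continue  # re-added exactly once at the end, so reruns are harmless
        if line.split()[:1] == ["server"]:
            out.append("#" + line)  # disable the old upstream server
        else:
            out.append(line)
    out.append(target)
    return "\n".join(out) + "\n"
```

Because the target line is removed and re-appended, running the function twice on the same text gives the same result.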

2.2.2.2 Compute Service

The Compute service has already been configured on the controller1 node; some additional configuration is still needed on the Compute1 node.

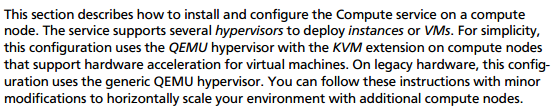

This deployment uses QEMU and KVM.

The material above explains how to add additional Compute nodes.

2.2.2.2.1 Installation and Configuration

Install:

apt-get install nova-compute sysfsutils

Configure:

vi /etc/nova/nova.conf

[DEFAULT]

verbose = True

rpc_backend = rabbit

rabbit_host = controller1

rabbit_password = abcd1234!

auth_strategy = keystone

my_ip = 10.0.15.21

vnc_enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = 10.0.15.21

novncproxy_base_url = http://controller1:6080/vnc_auto.html

[glance]

host = controller1

[keystone_authtoken]

auth_uri = http://controller1:5000/v2.0

#identity_uri = http://controller1:35357

auth_host = 10.0.15.11

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = nova

admin_password = abcd1234!

Check whether the server supports hardware virtualization:

egrep -c '(vmx|svm)' /proc/cpuinfo

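The command returned 4, which means hardware virtualization is supported, so the configuration below is not needed.

If virtualization is not supported (the command returns 0):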

vi /etc/nova/nova-compute.conf

[libvirt]

virt_type = qemu

If it is supported, no change is needed.
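The decision above can be sketched in Python 3: count the lines of /proc/cpuinfo that mention vmx (Intel VT-x) or svm (AMD-V), as the egrep -c command does, and choose kvm when the count is one or more and qemu otherwise. The helper names are hypothetical.

```python
import re

# Same pattern as: egrep -c '(vmx|svm)' /proc/cpuinfo
VIRT_FLAGS = re.compile(r"(vmx|svm)")


def count_virt_flags(cpuinfo_text):
    """Count lines that mention vmx or svm, like 'egrep -c'."""
    return sum(1 for line in cpuinfo_text.splitlines() if VIRT_FLAGS.search(line))


def pick_virt_type(cpuinfo_text):
    """Return 'kvm' when hardware acceleration is present, else 'qemu'."""
    return "kvm" if count_virt_flags(cpuinfo_text) > 0 else "qemu"
```

Usage: with open("/proc/cpuinfo") as f: print(pick_virt_type(f.read())). On a host where egrep returns 4, as on Compute1, this gives kvm.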

Restart:

service nova-compute restart

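Remove the SQLite database file that the Ubuntu packages create by default (this deployment uses a SQL database server instead):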

rm -f /var/lib/nova/nova.sqlite

![]()

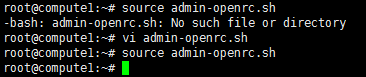

2.2.2.2.2 验证安装

![]()

source admin-openrc.sh

Solution:

vi admin-openrc.sh

export OS_TENANT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=abcd1234!

export OS_AUTH_URL=http://controller1:35357/v2.0

source admin-openrc.sh
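source admin-openrc.sh only exports these four variables into the current shell; the OpenStack command-line clients then read them from the environment. A minimal Python 3 sketch (hypothetical helpers) that parses such a file and reports which of the four variables are missing could look like this. It only understands plain "export NAME=value" lines.

```python
REQUIRED = ("OS_TENANT_NAME", "OS_USERNAME", "OS_PASSWORD", "OS_AUTH_URL")


def parse_openrc(text):
    """Collect NAME=value pairs from 'export NAME=value' lines."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("export "):
            continue
        name, sep, value = line[len("export "):].partition("=")
        if sep:
            # drop surrounding quotes, roughly as the shell would
            env[name.strip()] = value.strip().strip("'\"")
    return env


def missing_vars(text):
    """Return the required OS_* variables that are absent or empty."""
    env = parse_openrc(text)
    return [name for name in REQUIRED if not env.get(name)]
```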

Resolved:

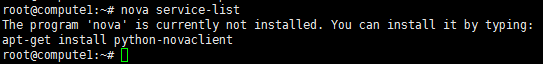

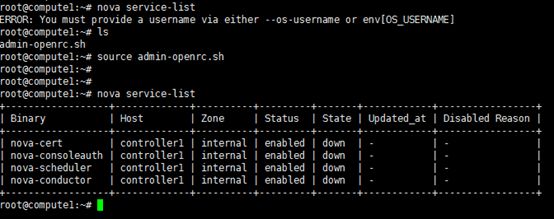

nova service-list

Error:

The program 'nova' is currently not installed. You can install it by typing:

apt-get install python-novaclient

Solution:

apt-get install python-novaclient

After installing it, run it again:

nova service-list

Error:

ERROR: HTTPConnectionPool(host='controller1', port=35357): Max retries exceeded with url: /v2.0/tokens (Caused by

Solution:

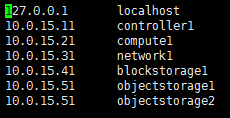

vi /etc/hosts

Add the following entries:

10.0.15.11 controller1

10.0.15.31 network1

Restart the networking service:

/etc/init.d/networking restart

Note:

Every other node needs the same change.
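Because every node needs the same entries, it can help to generate them from the role table at the start of this document. In the following Python 3 sketch, the IP addresses come from that table, but only controller1 and network1 appear as host names in this section; the other names (compute1, blockstorage1, objectstorage1, objectstorage2) are assumptions, as is the helper add_host_entries.

```python
# IPs from the role table; names other than controller1/network1 are assumed
NODES = {
    "controller1": "10.0.15.11",
    "compute1": "10.0.15.21",
    "network1": "10.0.15.31",
    "blockstorage1": "10.0.15.41",
    "objectstorage1": "10.0.15.51",
    "objectstorage2": "10.0.15.52",
}


def add_host_entries(hosts_text, nodes=NODES):
    """Append 'IP name' lines for every node name not yet in the hosts text."""
    known = set()
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()  # ignore comments
        known.update(fields[1:])  # every name after the IP counts
    lines = hosts_text.rstrip("\n").splitlines()
    for name, ip in sorted(nodes.items(), key=lambda item: item[1]):
        if name not in known:
            lines.append("{0} {1}".format(ip, name))
    return "\n".join(lines) + "\n"
```

Names that are already present are left alone, so the same function can be run on each node's /etc/hosts text without creating duplicates.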

nova image-list

2.2.2.3 Network Service

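After the Network service has been configured on the controller1 and Network1 nodes, it also needs to be configured on the Compute1 node.

2.2.2.3.1 Installation and Configuration

Prerequisites: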

vi /etc/sysctl.conf

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

Apply the changes:

sysctl -p
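After sysctl -p, the running kernel values can be read back from /proc/sys to confirm that the change took effect. A minimal Python 3 sketch follows; the helper name is hypothetical, and the proc_root parameter exists only so the function can be tested against a temporary directory.

```python
import os


def rp_filter_values(proc_root="/proc/sys"):
    """Read the two rp_filter settings configured in /etc/sysctl.conf above."""
    values = {}
    for scope in ("all", "default"):
        # net.ipv4.conf.<scope>.rp_filter maps to this file under /proc/sys
        path = os.path.join(proc_root, "net", "ipv4", "conf", scope, "rp_filter")
        with open(path) as f:
            values["net.ipv4.conf.{0}.rp_filter".format(scope)] = int(f.read().strip())
    return values
```

Usage: all(v == 0 for v in rp_filter_values().values()) should be True on Compute1 after the change.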

Install:

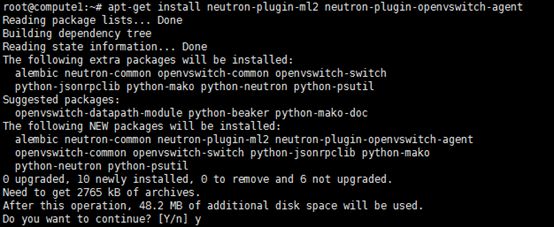

apt-get install neutron-plugin-ml2 neutron-plugin-openvswitch-agent

Configure:

vi /etc/neutron/neutron.conf

[DEFAULT]

rpc_backend = neutron.openstack.common.rpc.im

rabbit_host = controller1

rabbit_password = abcd1234!

auth_strategy = keystone

#core_plugin = ml2

#service_plugins = router

core_plugin = neutron.plugins.ml2.plugin.Ml2Plugin

service_plugins = neutron.services.l3_router.l3_router_plugin.L3RouterPlugin

allow_overlapping_ips = True

verbose = True

[keystone_authtoken]

auth_uri = http://controller1:5000/v2.0

identity_uri = http://controller1:35357

admin_tenant_name = service

admin_user = neutron

admin_password = abcd1234!

Configure ML2:

vi /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,gre

tenant_network_types = gre

mechanism_drivers = openvswitch

[ml2_type_gre]

tunnel_id_ranges = 1:1000

[securitygroup]

enable_security_group = True

enable_ipset = True

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

[ovs]

# replaces the guide's placeholder INSTANCE_TUNNELS_INTERFACE_IP_ADDRESS

local_ip = 10.0.15.22

enable_tunneling = True

[agent]

tunnel_types = gre
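When copying settings from the guide, it is easy to leave a placeholder such as INSTANCE_TUNNELS_INTERFACE_IP_ADDRESS in place or to set the same key twice in one section (how the OpenStack services resolve a repeated key is not relied on here). The following Python 3 sketch scans an INI file line by line and flags both cases. The helper name and the placeholder heuristic (an all-uppercase value containing an underscore) are assumptions.

```python
import re

# Heuristic: guide placeholders are upper-case words joined by underscores
PLACEHOLDER = re.compile(r"^[A-Z][A-Z0-9]*(_[A-Z0-9]+)+$")


def lint_ini(text):
    """Report unreplaced placeholders and keys repeated within a section."""
    issues, seen, section = [], set(), None
    for lineno, raw in enumerate(text.splitlines(), 1):
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue  # blank line or comment
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1].strip()
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a key = value line
        key, value = key.strip(), value.strip()
        if (section, key) in seen:
            issues.append("line {0}: [{1}] {2} is already set earlier in this section"
                          .format(lineno, section, key))
        seen.add((section, key))
        if PLACEHOLDER.match(value):
            issues.append("line {0}: [{1}] {2} = {3} looks like an unreplaced placeholder"
                          .format(lineno, section, key, value))
    return issues
```

Usage: pass the contents of /etc/neutron/plugins/ml2/ml2_conf.ini (or any other file edited in this section) and print each returned message.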

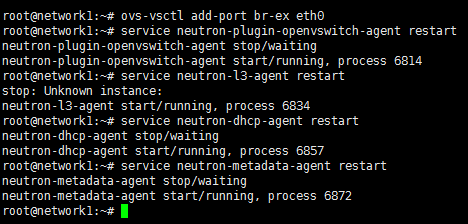

Configure OVS:

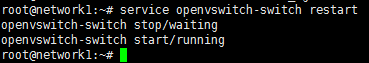

service openvswitch-switch restart

Configure Compute to use Networking:

vi /etc/nova/nova.conf

[DEFAULT]

network_api_class = nova.network.neutronv2.api.API

security_group_api = neutron

linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[neutron]

url = http://controller1:9696

auth_strategy = keystone

admin_auth_url = http://controller1:35357/v2.0

admin_tenant_name = service

admin_username = neutron

admin_password = abcd1234!
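In this deployment the [neutron] credentials in nova.conf and the [keystone_authtoken] credentials in /etc/neutron/neutron.conf both refer to the same Keystone user, neutron, so the values are expected to agree (note that nova.conf uses admin_username while neutron.conf uses admin_user). A small Python 3 sketch (hypothetical helper) that compares the two files:

```python
import configparser


def _load(text):
    cfg = configparser.ConfigParser(interpolation=None)  # '%' stays literal
    cfg.read_string(text)
    return cfg


def neutron_credential_mismatches(nova_text, neutron_text):
    """List nova.conf [neutron] keys that disagree with neutron.conf."""
    nova, neutron = _load(nova_text), _load(neutron_text)
    pairs = (("admin_username", "admin_user"),
             ("admin_password", "admin_password"),
             ("admin_tenant_name", "admin_tenant_name"))
    mismatches = []
    for nova_key, neutron_key in pairs:
        a = nova.get("neutron", nova_key, fallback=None)
        b = neutron.get("keystone_authtoken", neutron_key, fallback=None)
        if a is None or a != b:
            mismatches.append(nova_key)
    return mismatches
```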

Disable the firewall:

ufw disable

Finally, restart the services:

service nova-compute restart

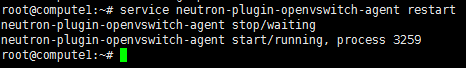

service neutron-plugin-openvswitch-agent restart

Verify the installation:

source admin-openrc.sh


neutron agent-list

Error:

The same error as on the Controller1 and Network1 nodes:

unsupported locale setting

Check the log:

2015-03-31 11:16:52.136 27425 INFO neutron.common.config [-] Logging enabled!

2015-03-31 11:16:52.602 27425 INFO neutron.openstack.common.rpc.common [req-935f6274-308e-4979-8527-a41be789e818 None] Connected to AMQP server on controller1:5672

2015-03-31 11:16:52.638 27425 INFO neutron.openstack.common.rpc.common [req-935f6274-308e-4979-8527-a41be789e818 None] Connected to AMQP server on controller1:5672

2015-03-31 11:16:53.744 27425 INFO neutron.plugins.openvswitch.agent.ovs_neutron_agent [req-935f6274-308e-4979-8527-a41be789e818 None] Agent initialized successfully, now running...

2015-03-31 11:16:53.748 27425 INFO neutron.plugins.openvswitch.agent.ovs_neutron_agent [req-935f6274-308e-4979-8527-a41be789e818 None] Agent out of sync with plugin!

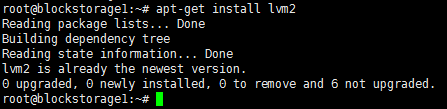

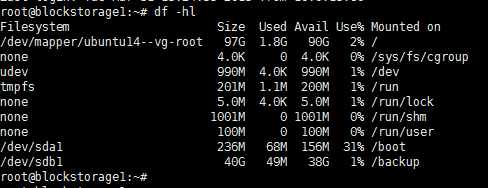

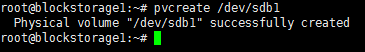

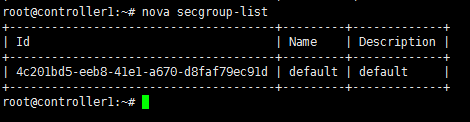

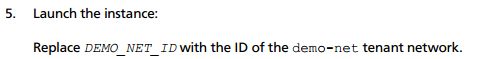

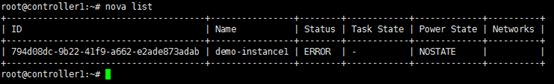

2015-03-31 11:16:53.814 27425 INFO neutron.plugins.openvswitch.agent.ovs_neutron_agent [req-935f6274-308e-4979-8527-a41be789e818 None] Agent tunnel out of sync with plugin!
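The log excerpt above contains only INFO messages. The two "out of sync" messages are logged at INFO level and normally appear when the Open vSwitch agent performs its first full synchronization after a (re)start, so by themselves they do not indicate a failure. To pick real problems out of a long agent log, a small Python 3 sketch like the one below can split each line into timestamp, PID, level, logger name and message, and keep only WARNING and above. The line layout is inferred from the excerpt above, and the helper names are hypothetical.

```python
import re

# Layout seen above: date time pid LEVEL logger message
LOG_LINE = re.compile(
    r"^(?P<date>\d{4}-\d{2}-\d{2}) (?P<time>\d{2}:\d{2}:\d{2}\.\d+) "
    r"(?P<pid>\d+) (?P<level>[A-Z]+) (?P<logger>\S+) (?P<message>.*)$"
)
SERIOUS = ("WARNING", "ERROR", "CRITICAL")


def parse_log_line(line):
    """Return the fields of one log line as a dict, or None if it does not match."""
    match = LOG_LINE.match(line)
    return match.groupdict() if match else None


def serious_entries(lines):
    """Keep only parsed entries at WARNING level or above."""
    entries = (parse_log_line(line) for line in lines)
    return [e for e in entries if e and e["level"] in SERIOUS]
```

Usage: pass the lines of the agent's log file, for example serious_entries(open(path).read().splitlines()), where path is the log file being inspected.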