DRBD-Based KVM Cluster

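Note:

The lab manuals that LinuxPlus.org shares with friends are only a "skeleton". We hope that, as you study, you will fill in the details from your own experiments and end up with a more thorough manual of your own.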

1. Environment Preparation

1.2. Operating System Installation

The earlier Kickstart file was modified to add the HA components.

# Kickstart file for KVM + HA

# 17:35 2016/3/8 Passed with CentOS-7-x86_64-DVD-1511.iso

# 10:54 2016/10/12 Added HA components

# 12:04 2016/10/19 Added CLVM and GFS2 components

auth --enableshadow --passalgo=sha512

install

cdrom

text

firstboot --disable

ignoredisk --only-use=sda

keyboard --vckeymap=us --xlayouts='us'

lang en_US.UTF-8

network --bootproto=dhcp --device=eth0 --noipv6

network --hostname=localhost.localdomain

reboot

rootpw --plaintext 123456

skipx

timezone Asia/Shanghai --isUtc

bootloader --append=" crashkernel=auto" --location=mbr --boot-drive=sda

autopart --type=lvm

clearpart --none --initlabel

%packages

@base

@core

@gnome-desktop

@virtualization-client

@virtualization-hypervisor

@virtualization-platform

@virtualization-tools

corosync

dlm

fence-agents-all

gfs2-utils

iscsi-initiator-utils

kexec-tools

lvm2-cluster

pacemaker

pcs

policycoreutils-python

psmisc

tigervnc-server

%end

%addon com_redhat_kdump --enable --reserve-mb='auto'

%end

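1.3. Configuring the Operating System

1.3.1. Checking the Network

Confirm that the two nodes can communicate: from each node, ping all three addresses of the other node.

1.3.2. Configuring the Host Name

Use relatively short names.

Set the host name on every node. For example, on node 1: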

hostnamectl set-hostname labkvm1

1.3.3. Configuring Name Resolution

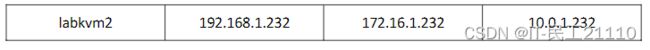

All nodes: configure name resolution. This lab uses the hosts file; DNS is recommended in production.

[ALL]# vi /etc/hosts

Add the following entries:

192.168.206.180 vip1

192.168.206.181 labkvm1

192.168.206.182 labkvm2

172.16.206.181 labkvm1-cr

172.16.206.182 labkvm2-cr

10.0.1.231 labkvm1-stor

10.0.1.232 labkvm2-stor

Check that the other node can be reached by name:

ping labkvm2

ping labkvm2-cr

ping labkvm2-stor
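Before pinging, it can help to confirm that every expected name really made it into the hosts file. The following is a minimal sketch; the function name missing_hosts is hypothetical and not part of the original lab.

```shell
#!/bin/sh
# missing_hosts FILE NAME...
# Print every NAME that has no entry in the hosts-format FILE.
# (Hypothetical helper, introduced here only for illustration.)
missing_hosts() {
    hosts_file=$1
    shift
    for name in "$@"; do
        # Strip comments, then look for the name in any field after the IP address.
        if ! awk -v n="$name" '
            { sub(/#.*/, "") }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit found ? 0 : 1 }
        ' "$hosts_file"; then
            echo "$name"
        fi
    done
}
```

For example, `missing_hosts /etc/hosts vip1 labkvm1 labkvm2 labkvm1-cr labkvm2-cr labkvm1-stor labkvm2-stor` prints nothing when all seven names are present.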

1.3.4. SSH Key Equivalence

[ALL]# ssh-keygen -t rsa -P ''

[root@labkvm1 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@labkvm2

1.3.5. Configuring the Clock

[ALL]# /sbin/ntpdate time.windows.com

[ALL]# crontab -e

Add the following line to synchronize the clock every 30 minutes.

*/30 * * * * /sbin/ntpdate time.windows.com &> /dev/null

1.4. Configuring the Cluster Components

[ALL]# yum -y install pacemaker pcs corosync psmisc policycoreutils-python

1.4.1. Configuring the Firewall

Allow the cluster services through the firewall.

[ALL]# systemctl enable firewalld

[ALL]# systemctl start firewalld

[ALL]# firewall-cmd --permanent --add-service=high-availability

[ALL]# firewall-cmd --reload

Allow all traffic on the heartbeat and storage networks.

[ALL]# firewall-cmd --permanent --zone=trusted --add-source=172.16.206.0/24

[ALL]# firewall-cmd --permanent --zone=trusted --add-source=10.0.206.0/24

[ALL]# firewall-cmd --reload
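Because the trusted zone is keyed on source subnets, it is worth confirming that each dedicated address in /etc/hosts actually falls inside one of the trusted ranges. A minimal sketch follows; in_subnet24 is a hypothetical helper and only understands /24 prefixes.

```shell
#!/bin/sh
# in_subnet24 IP CIDR
# Succeed when IP lies inside CIDR. Only /24 prefixes are handled in this sketch.
# (Hypothetical helper, introduced here only for illustration.)
in_subnet24() {
    ip=$1
    cidr=$2
    case $cidr in
        */24) ;;
        *) echo "in_subnet24: only /24 networks are supported" >&2; return 2 ;;
    esac
    net=${cidr%/24}
    # For a /24, the first three octets of the address must equal those of the network.
    [ "${ip%.*}" = "${net%.*}" ]
}
```

Usage: `in_subnet24 172.16.206.181 172.16.206.0/24 && echo covered`. Run it once per address and per trusted range listed by `firewall-cmd --zone=trusted --list-sources`.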

Allow live migration.

[ALL]# firewall-cmd --add-port=16509/tcp --permanent

[ALL]# firewall-cmd --add-port=49152-49215/tcp --permanent

[ALL]# firewall-cmd --reload

1.4.2. Enabling the pcs Daemon at Boot

[ALL]# systemctl enable pcsd

[ALL]# systemctl start pcsd

1.4.3. Setting the hacluster Account Password

[ALL]# echo "linuxplus" | passwd --stdin hacluster

1.4.4. Configuring Node Authentication

pcs cluster auth labkvm1-cr labkvm2-cr

2. Preparing the DRBD Resource

2.1. Installing the DRBD Software

On node 1, change the cache setting in the yum configuration.

# vi /etc/yum.conf

[main]

cachedir=/var/cache/yum/$basearch/$releasever

keepcache=1    (the default is 0; change it to 1 to keep the downloaded RPM packages)

debuglevel=2

logfile=/var/log/yum.log

exactarch=1

obsoletes=1

gpgcheck=1

plugins=1

installonly_limit=5

bugtracker_url=http://bugs.centos.org/set_project.php?project_id=23&ref=http://bugs.centos.org/bug_report_page.php?category=yum

distroverpkg=centos-release

Install from ELRepo.

# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

# yum list | grep drbd

drbd84-utils.x86_64 8.9.5-1.el7.elrepo elrepo

drbd84-utils-sysvinit.x86_64 8.9.5-1.el7.elrepo elrepo

# yum -y install kmod-drbd84 drbd84-utils

This may take quite a while, so be patient.

Two packages are downloaded and installed:

kmod-drbd84-8.4.7-1_1.el7.elrepo.x86_64.rpm

drbd84-utils-8.9.5-1.el7.elrepo.x86_64.rpm

# cd /var/cache/yum/x86_64/7/elrepo/packages/

# ls

drbd84-utils-8.9.5-1.el7.elrepo.x86_64.rpm

kmod-drbd84-8.4.7-1_1.el7.elrepo.x86_64.rpm

Copy them to the other node and install them there.

# scp *.rpm labkvm2:/tmp

# ssh labkvm2

[root@labkvm2 tmp]# ls *.rpm

drbd84-utils-8.9.5-1.el7.elrepo.x86_64.rpm

kmod-drbd84-8.4.7-1_1.el7.elrepo.x86_64.rpm

[root@labkvm2 tmp]# rpm -ivh *.rpm

......

[root@labkvm2 tmp]# exit

logout

Connection to labkvm2 closed.

# vi /etc/drbd.d/global_common.conf

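Change the value of usage-count from yes to no, and make the same change on every node.

This setting controls participation in DRBD's usage statistics, similar to a user-experience survey.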
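The same change can be scripted instead of made in vi. This is a minimal sketch that assumes GNU sed (as shipped with CentOS 7) and a line of the form "usage-count yes;" in the file; the function name disable_usage_count is hypothetical.

```shell
#!/bin/sh
# disable_usage_count FILE
# Rewrite "usage-count yes;" to "usage-count no;" in place, keeping the indentation.
# Assumes GNU sed for the -i option. (Hypothetical helper, for illustration only.)
disable_usage_count() {
    sed -i 's/^\([[:space:]]*usage-count[[:space:]][[:space:]]*\)yes;/\1no;/' "$1"
}
```

Run `disable_usage_count /etc/drbd.d/global_common.conf` on each node, then confirm the result with `grep usage-count /etc/drbd.d/global_common.conf`.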

2.2. Configuring SELinux and the Firewall

Configure SELinux.

[ALL]# yum -y install policycoreutils-python

[ALL]# semanage permissive -a drbd_t

Configure the firewall so that the dedicated corosync and DRBD subnets are fully open.

[ALL]# firewall-cmd --permanent --zone=trusted --add-source=172.16.206.0/24

[ALL]# firewall-cmd --permanent --zone=trusted --add-source=10.0.206.0/24

[ALL]# firewall-cmd --reload

2.3. Preparing the Disk

for i in /sys/class/scsi_host/*; do echo "- - -" > $i/scan; done

2.4. Preparing the LV

Use fdisk or a similar partitioning tool to create a single partition that uses all of the space.

[ALL]# fdisk -l /dev/vdb

Disk /dev/vdb: 85.9 GB, 85899345920 bytes, 167772160 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x1a932db5

Device Boot Start End Blocks Id System

/dev/vdb1 2048 167772159 83885056 8e Linux LVM

[ALL]# partprobe

[ALL]# pvcreate /dev/vdb1

[ALL]# vgcreate vgdrbd0 /dev/vdb1

[ALL]# lvcreate -n lvdrbd0 -L 5G vgdrbd0

[ALL]# partprobe

2.5. Configuring the DRBD Resource File

[root@labkvm1 ~]# cd /etc/drbd.d/

[root@labkvm1 drbd.d]# vi global_common.conf

Change the usage-count statistics setting in it.

Create a new resource configuration file:

[root@labkvm1 drbd.d]# vi r0.res

resource r0 {

protocol C;

meta-disk internal;

device /dev/drbd0;

disk /dev/vgdrbd0/lvdrbd0;

syncer {

verify-alg sha1;

}

on labkvm1 {

address 10.0.1.231:7789;

}

on labkvm2 {

address 10.0.1.232:7789;

}

net {

allow-two-primaries;

after-sb-0pri discard-zero-changes;

after-sb-1pri discard-secondary;

after-sb-2pri disconnect;

}

disk {

fencing resource-and-stonith;

}

handlers {

fence-peer "/usr/lib/drbd/crm-fence-peer.sh";

after-resync-target "/usr/lib/drbd/crm-unfence-peer.sh";

}

}

Note: use the IP addresses dedicated to DRBD.

Copy this resource file to the other node.

[root@labkvm1 drbd.d]# scp r0.res labkvm2:/etc/drbd.d/

2.6. Initializing DRBD

[ALL]# drbdadm create-md r0

[ALL]# modprobe drbd

[ALL]# drbdadm up r0

2.7. Synchronizing the DRBD Device

Run the following commands on the first node.

[root@labkvm1 ~]# drbdadm primary --force r0

[root@labkvm1 ~]# cat /proc/drbd

version: 8.4.8-1 (api:1/proto:86-101)

GIT-hash: 22b4c802192646e433d3f7399d578ec7fecc6272 build by mockbuild@, 2016-10-13

19:58:26

0: cs:SyncSource ro:Primary/Secondary ds:UpToDate/Inconsistent C r-----

ns:6382492 nr:0 dw:0 dr:6383404 al:8 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:77496896

[>...................] sync'ed: 7.7% (75680/81912)M

finish: 0:58:07 speed: 22,212 (25,840) K/sec

[root@labkvm1 ~]# drbd-overview

0:r0/0 Connected Primary/Secondary UpToDate/UpToDate

[root@labkvm1 drbd.d]# cat /proc/drbd

version: 8.4.7-1 (api:1/proto:86-101)

GIT-hash: 3a6a769340ef93b1ba2792c6461250790795db49 build by phil@Build64R7, 2016-01-12 14:29:40

0: cs:Connected ro:Primary/Secondary ds:UpToDate/UpToDate C r-----

ns:4194140 nr:0 dw:0 dr:4195052 al:8 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0
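The initial synchronization is finished once the ds: field reads UpToDate/UpToDate. Rather than re-running cat by hand, the check can be scripted. The sketch below only parses the /proc/drbd text format shown above; the function names are hypothetical.

```shell
#!/bin/sh
# drbd_disk_states [FILE]
# Print the ds: field (local/peer disk state) of each DRBD device, one per line.
# FILE defaults to /proc/drbd. (Hypothetical helper, for illustration only.)
drbd_disk_states() {
    awk '{ for (i = 1; i <= NF; i++) if ($i ~ /^ds:/) print substr($i, 4) }' "${1:-/proc/drbd}"
}

# drbd_in_sync [FILE]
# Succeed only if at least one device is listed and all report UpToDate/UpToDate.
drbd_in_sync() {
    states=$(drbd_disk_states "$@") || return 1
    [ -n "$states" ] || return 1
    for s in $states; do
        [ "$s" = "UpToDate/UpToDate" ] || return 1
    done
}
```

A simple wait loop could then be `until drbd_in_sync; do sleep 10; done`.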

Run the following command on every node so that the DRBD module is loaded at boot.

[ALL]# echo drbd > /etc/modules-load.d/drbd.conf

3. Configuration

3.1. Creating the Cluster

# pcs cluster setup --name cluster1 labkvm1-cr labkvm2-cr

# pcs cluster start --all

labkvm1-cr: Starting Cluster...

labkvm2-cr: Starting Cluster...

Check the status.

# pcs status

3.2. Configuring STONITH

KVM

Because the lab environment consists of two nested KVM guests running on a UCS server, KVM fencing can be used.

# pcs status

Cluster name: cluster1

WARNING: no stonith devices and stonith-enabled is not false

Last updated: Mon Oct 24 11:42:38 2016 Last change: Mon Oct

24 11:42:29 2016 by hacluster via crmd on labkvm2-cr

Stack: corosync

Current DC: labkvm2-cr (version 1.1.13-10.el7-44eb2dd) - partition

with quorum

2 nodes and 0 resources configured

Online: [ labkvm1-cr labkvm2-cr ]

Full list of resources:

PCSD Status:

labkvm1-cr: Online

labkvm2-cr: Online

Daemon Status:

corosync: active/disabled

pacemaker: active/disabled

pcsd: active/enabled

http://klwang.info/kvm-guest-fence/

Host Server Configuration

Configure the UCS server first.

1. Install the software

[root@zzkvm1 ~]# yum install -y fence-virt fence-virtd fence-virtd-libvirt fence-virtd-multicast

2. Create an authentication key file

# mkdir /etc/cluster

# dd if=/dev/urandom of=/etc/cluster/fence_xvm.key bs=4096 count=1

1+0 records in

1+0 records out

4096 bytes (4.1 kB) copied, 0.00080152 s, 5.1 MB/s

3. Generate the configuration file

[root@zzkvm1 ~]# fence_virtd --help

fence_virtd: invalid option -- '-'

Usage: fence_virtd [options]

-F Do not daemonize.

-f <file> Use <file> as configuration file.

-d <level> Set debugging level to <level>.

-c Configuration mode.

-l List plugins.

[root@zzkvm1 ~]# fence_virtd -c

Accept the defaults by pressing Enter at each prompt.

Module search path [/usr/lib64/fence-virt]:

Available backends:

libvirt 0.1

Available listeners:

multicast 1.2

Listener modules are responsible for accepting requests

from fencing clients.

Listener module [multicast]:

The multicast listener module is designed for use environments

where the guests and hosts may communicate over a network using

multicast.

The multicast address is the address that a client will use to

send fencing requests to fence_virtd.

Multicast IP Address [225.0.0.12]:

Using ipv4 as family.

Multicast IP Port [1229]:

Setting a preferred interface causes fence_virtd to listen only

on that interface. Normally, it listens on all interfaces.

In environments where the virtual machines are using the host

machine as a gateway, this *must* be set (typically to virbr0).

Set to 'none' for no interface.

Interface [virbr0]:

In my environment, this has to be changed to the bridge interface br0.

The key file is the shared key information which is used to

authenticate fencing requests. The contents of this file must

be distributed to each physical host and virtual machine within

a cluster.

Key File [/etc/cluster/fence_xvm.key]:

Backend modules are responsible for routing requests to

the appropriate hypervisor or management layer.

Backend module [libvirt]:

Configuration complete.

=== Begin Configuration ===

backends {

libvirt {

uri = "qemu:///system";

}

}

listeners {

multicast {

port = "1229";

family = "ipv4";

interface = "virbr0";

address = "225.0.0.12";

key_file = "/etc/cluster/fence_xvm.key";

}

}

fence_virtd {

module_path = "/usr/lib64/fence-virt";

backend = "libvirt";

listener = "multicast";

}

=== End Configuration ===

Replace /etc/fence_virt.conf with the above [y/N]? y

4. Review the configuration file

Contents of the generated configuration file /etc/fence_virt.conf:

backends {
    libvirt {
        uri = "qemu:///system";
    }
}

listeners {
    multicast {
        interface = "br0";
        port = "1229";
        family = "ipv4";
        address = "225.0.0.12";
        key_file = "/etc/cluster/fence_xvm.key";
    }
}

fence_virtd {
    module_path = "/usr/lib64/fence-virt";
    backend = "libvirt";
    listener = "multicast";
}

5. Start the service

[root@zzkvm1 ~]# systemctl start fence_virtd.service

[root@zzkvm1 ~]# systemctl enable fence_virtd.service

Created symlink from

/etc/systemd/system/multi-user.target.wants/fence_virtd.service to

/usr/lib/systemd/system/fence_virtd.service.

[root@zzkvm1 ~]# systemctl status fence_virtd.service

● fence_virtd.service - Fence-Virt system host daemon

Loaded: loaded (/usr/lib/systemd/system/fence_virtd.service;

enabled; vendor preset: disabled)

Active: active (running) since Mon 2016-10-24 12:14:43 CST; 15s ago

Main PID: 24925 (fence_virtd)

CGroup: /system.slice/fence_virtd.service

└─24925 /usr/sbin/fence_virtd -w

Oct 24 12:14:43 zzkvm1 systemd[1]: Starting Fence-Virt system host

daemon...

Oct 24 12:14:43 zzkvm1 fence_virtd[24925]: fence_virtd starting.

Listener: libvirt Backend: multicast

Oct 24 12:14:43 zzkvm1 systemd[1]: Started Fence-Virt system host

daemon.

Oct 24 12:14:50 zzkvm1 systemd[1]:

[/usr/lib/systemd/system/fence_virtd.service:20] Support for option

SysVStartPriority= has been removed and it is ignored

- Verify the configuration

[root@zzkvm1 ~]# fence_xvm -h

usage: fence_xvm [args]

-d Specify (stdin) or increment (command line) debug level

-i <family> IP Family ([auto], ipv4, ipv6)

-a <address> Multicast address (default=225.0.0.12 / ff05::3:1)

-p <port> TCP, Multicast, or VMChannel IP port (default=1229)

-r <retrans> Multicast retransmit time (in 1/10sec; default=20)

-C <auth> Authentication (none, sha1, [sha256], sha512)

-c <hash> Packet hash strength (none, sha1, [sha256], sha512)

-k <file> Shared key file (default=/etc/cluster/fence_xvm.key)

-H <domain> Virtual Machine (domain name) to fence

-u Treat [domain] as UUID instead of domain name. This is

provided for compatibility with older fence_xvmd

installations.

-o <operation> Fencing action (null, off, on, [reboot], status, list,

monitor, metadata)

-t <timeout> Fencing timeout (in seconds; default=30)

-? Help (alternate)

-h Help

-V Display version and exit

-w <delay> Fencing delay (in seconds; default=0)

With no command line argument, arguments are read from standard input.

Arguments read from standard input take the form of:

arg1=value1

arg2=value2

debug Specify (stdin) or increment (command line) debug level

ip_family IP Family ([auto], ipv4, ipv6)

multicast_address Multicast address (default=225.0.0.12 / ff05::3:1)

ipport TCP, Multicast, or VMChannel IP port (default=1229)

retrans Multicast retransmit time (in 1/10sec; default=20)

auth Authentication (none, sha1, [sha256], sha512)

hash Packet hash strength (none, sha1, [sha256], sha512)

key_file Shared key file (default=/etc/cluster/fence_xvm.key)

port Virtual Machine (domain name) to fence

use_uuid Treat [domain] as UUID instead of domain name. This is

provided for compatibility with older fence_xvmd

installations.

action Fencing action (null, off, on, [reboot], status, list,

monitor, metadata)

timeout Fencing timeout (in seconds; default=30)

delay Fencing delay (in seconds; default=0)

[root@zzkvm1 ~]# fence_xvm -o list

iscsitarget1 23d2c251-dc6b-410a-ad7a-a8742196c067 off

labkvm1 55f50304-af8d-4ec4-8849-1d875721e08a on

labkvm2 8a098c06-b223-4a18-88f3-3f32c6d3a1ed on

node1 e7e695ae-b2b5-41ed-bb10-d62834d84dd8 off

......

vm1 2c5fdb62-3581-43c7-af7c-efb54edcc40f off

vm2 cdbd2bae-ddde-44d8-a99e-e771dc6f8e0d off
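The listing has three columns: domain name, UUID, and power state. To check one specific domain without reading the whole table, the output can be filtered. This is a minimal sketch based only on the column layout shown above; fence_domain_state is a hypothetical name.

```shell
#!/bin/sh
# fence_domain_state NAME
# Read "fence_xvm -o list" output on stdin and print the state (on/off) of domain NAME.
# Returns non-zero when the domain is not listed.
# (Hypothetical helper, introduced here only for illustration.)
fence_domain_state() {
    awk -v n="$1" '$1 == n { print $3; found = 1 } END { exit found ? 0 : 1 }'
}
```

Usage: `fence_xvm -o list | fence_domain_state labkvm1`.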

Test: reboot a guest

[root@zzkvm1 ~]# virsh start vm1

Domain vm1 started

# fence_xvm -o reboot -H vm1

The machine rebooted!

Guest Configuration

1. Install the package

# yum install -y fence-virt

[root@labkvm1 ~]# rpm -qi fence-virt

Name : fence-virt

Version : 0.3.2

Release : 2.el7

Architecture: x86_64

Install Date: Wed 12 Oct 2016 11:20:43 AM CST

Group : System Environment/Base

Size : 76434

License : GPLv2+

Signature : RSA/SHA256, Wed 25 Nov 2015 10:29:36 PM CST, Key ID

24c6a8a7f4a80eb5

Source RPM : fence-virt-0.3.2-2.el7.src.rpm

Build Date : Sat 21 Nov 2015 03:26:31 AM CST

Build Host : worker1.bsys.centos.org

Relocations : (not relocatable)

Packager : CentOS BuildSystem <http://bugs.centos.org>

Vendor : CentOS

URL : http://fence-virt.sourceforge.net

Summary : A pluggable fencing framework for virtual machines

Description :

Fencing agent for virtual machines.

2. Distribute the key file

Copy the /etc/cluster/fence_xvm.key file generated on the host earlier to the same location on every guest.

[root@labkvm1 ~]# mkdir /etc/cluster

[root@labkvm2 ~]# mkdir /etc/cluster

[root@zzkvm1 ~]# scp /etc/cluster/fence_xvm.key 192.168.1.231:/etc/cluster/

[root@zzkvm1 ~]# scp /etc/cluster/fence_xvm.key 192.168.1.232:/etc/cluster/

Without this key, later operations fail:

[root@labkvm1 ~]# fence_xvm -o list

Could not read /etc/cluster/fence_xvm.key; trying without

authentication

Timed out waiting for response

Operation failed

3. Configure the firewall

Without the firewall rule, the command waits until it times out.

[root@labkvm1 ~]# firewall-cmd --add-port=1229/tcp --permanent

[root@labkvm1 ~]# firewall-cmd --reload

4. Test

[root@labkvm1 ~]# fence_xvm -o list

......

labkvm1 55f50304-af8d-4ec4-8849-1d875721e08a on

labkvm2 8a098c06-b223-4a18-88f3-3f32c6d3a1ed on

......

vm1 2c5fdb62-3581-43c7-af7c-efb54edcc40f on

......

Note: check the firewall on labkvm1; otherwise the following error appears:

Timed out waiting for response

Operation failed

Try it out, but be careful not to fence yourself.

[root@labkvm1 ~]# fence_xvm -o reboot -H vm1

That works; this fence device is ready to use.

Configuring STONITH for the Cluster

# pcs stonith create kvm-shooter fence_xvm pcmk_host_list="labkvm1 labkvm2"
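In this lab the cluster nodes are known to Pacemaker as labkvm1-cr and labkvm2-cr, while the libvirt domains are called labkvm1 and labkvm2. If fencing cannot match a node to its domain, the standard stonith parameter pcmk_host_map (pairs of node:port separated by semicolons) can express the mapping. The sketch below only builds that string; build_host_map is a hypothetical helper, and whether the mapping is needed in your environment is an assumption to verify.

```shell
#!/bin/sh
# build_host_map NODE:DOMAIN...
# Join the pairs with ";" in the form used by the pcmk_host_map stonith parameter.
# (Hypothetical helper, introduced here only for illustration.)
build_host_map() {
    map=
    for pair in "$@"; do
        case $pair in
            ?*:?*) ;;
            *) echo "build_host_map: expected NODE:DOMAIN, got '$pair'" >&2; return 1 ;;
        esac
        map="${map:+$map;}$pair"
    done
    printf '%s\n' "$map"
}
```

The result could be passed in place of pcmk_host_list, for example `pcmk_host_map="$(build_host_map labkvm1-cr:labkvm1 labkvm2-cr:labkvm2)"`.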

3.3. Installing the Cluster File System Software

[all]# yum -y install gfs2-utils dlm

3.4. Configuring DLM

# pcs resource create dlm ocf:pacemaker:controld op monitor interval=30s on-fail=fence clone interleave=true ordered=true

3.5. Adding the DRBD Resource to the Cluster

First, make sure DRBD synchronization has finished and both nodes are consistent.

# cat /proc/drbd

version: 8.4.8-1 (api:1/proto:86-101)

GIT-hash: 22b4c802192646e433d3f7399d578ec7fecc6272 build by

mockbuild@, 2016-10-13 19:58:26

0: cs:Connected ro:Secondary/Secondary ds:UpToDate/UpToDate C

r-----

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0

# cd

# pcs cluster cib drbd_cfg

# pcs -f drbd_cfg resource create VMData ocf:linbit:drbd \

drbd_resource=r0 op monitor interval=60s

# pcs -f drbd_cfg resource master VMDataClone VMData \

master-max=2 master-node-max=1 clone-max=2 clone-node-max=1 \

notify=true

# pcs -f drbd_cfg resource show

Commit the changes.

# pcs cluster cib-push drbd_cfg

CIB updated

3.6. Configuring CLVM

3.6.1. Installing and Enabling CLVM

[ALL]# yum -y install lvm2-cluster

[ALL]# lvmconf --enable-cluster

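Every node must be rebooted.

3.6.2. Adding the CLVM Resource to the Cluster

# pcs resource create clvmd ocf:heartbeat:clvm op monitor interval=30s on-fail=fence clone interleave=true ordered=true

3.6.3. Configuring Constraints

Start order (DLM and DRBD both precede CLVM):

DLM --> CLVM

DRBD --> CLVM --> File System --> Virtual Domain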

# pcs constraint order start dlm-clone then clvmd-clone

# pcs constraint colocation add clvmd-clone with dlm-clone

# pcs constraint order promote VMDataClone then start clvmd-clone

# pcs constraint colocation add clvmd-clone with VMDataClone

3.6.4. Creating the LV

[ALL]# vi /etc/lvm/lvm.conf

Search for the string filter and add the following line:

filter = [ "a|/dev/vd.*|", "a|/dev/drbd*|", "r/.*/" ]

a means accept and r means reject.
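LVM evaluates the filter patterns in order: the first pattern whose regular expression matches the device path decides, and a device that matches nothing is accepted. The sketch below imitates that first-match logic with grep -E so the effect of a filter line can be tried out before editing lvm.conf. It is only an approximation (LVM uses its own regex engine), and lvm_filter_decision is a hypothetical name.

```shell
#!/bin/sh
# lvm_filter_decision DEVICE PATTERN...
# Each PATTERN is "a" or "r", a delimiter, a regex, and the same delimiter,
# for example 'a|/dev/vd.*|' or 'r/.*/'. Prints "accept" or "reject".
# (Hypothetical helper; approximates LVM's matching with grep -E.)
lvm_filter_decision() {
    dev=$1
    shift
    for pat in "$@"; do
        action=${pat%"${pat#?}"}   # first character: a or r
        body=${pat#??}             # strip the action and the opening delimiter
        regex=${body%?}            # strip the closing delimiter
        if printf '%s\n' "$dev" | grep -Eq -- "$regex"; then
            if [ "$action" = a ]; then echo accept; else echo reject; fi
            return 0
        fi
    done
    echo accept   # no pattern matched: the device is accepted by default
}
```

With the three patterns from this section, `lvm_filter_decision /dev/sda1 'a|/dev/vd.*|' 'a|/dev/drbd*|' 'r/.*/'` falls through to the final reject pattern, while /dev/vdb1 and /dev/drbd0 are accepted.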

Run vgscan -v to rebuild the LVM2 cache.

[ALL]# vgscan -v

# pvcreate /dev/drbd0

# partprobe ; multipath -r

# vgcreate vgvm0 /dev/drbd0

# lvcreate -n lvvm0 -l 100%FREE vgvm0

Logical volume "lvvm0" created.

3.7. Configuring GFS2

3.7.1. Creating the GFS2 File System

# lvscan

ACTIVE '/dev/vgdrbd0/lvdrbd0' [5.00 GiB] inherit

ACTIVE '/dev/centos/swap' [3.88 GiB] inherit

ACTIVE '/dev/centos/home' [25.57 GiB] inherit

ACTIVE '/dev/centos/root' [50.00 GiB] inherit

ACTIVE '/dev/vgvm0/lvvm0' [5.00 GiB] inherit

# mkfs.gfs2 -p lock_dlm -j 2 -t cluster1:labkvm1 /dev/vgvm0/lvvm0
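The -t argument has the form clustername:fsname, and the cluster name must match the one the cluster was created with (cluster1 here); -j 2 creates one journal for each of the two nodes that will mount the file system. To avoid typing the cluster name by hand, it can be read from the corosync configuration. This is a minimal sketch that assumes the file contains a cluster_name: line, as pcs normally writes; gfs2_lock_table is a hypothetical name.

```shell
#!/bin/sh
# gfs2_lock_table FSNAME [COROSYNC_CONF]
# Print CLUSTERNAME:FSNAME, reading cluster_name from the corosync configuration.
# (Hypothetical helper, introduced here only for illustration.)
gfs2_lock_table() {
    fsname=$1
    conf=${2:-/etc/corosync/corosync.conf}
    cluster=$(awk '$1 == "cluster_name:" { print $2; exit }' "$conf")
    if [ -z "$cluster" ]; then
        echo "gfs2_lock_table: cluster_name not found in $conf" >&2
        return 1
    fi
    printf '%s:%s\n' "$cluster" "$fsname"
}
```

Usage: `mkfs.gfs2 -p lock_dlm -j 2 -t "$(gfs2_lock_table labkvm1)" /dev/vgvm0/lvvm0`.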

3.7.2. Adding the GFS2 File System to the Cluster

[ALL]# mkdir /vm

# pcs resource create VMFS Filesystem \

device="/dev/vgvm0/lvvm0" directory="/vm" fstype="gfs2" clone

# pcs constraint order clvmd-clone then VMFS-clone

# pcs constraint colocation add VMFS-clone with clvmd-clone

Check the current cluster status.

# pcs status

Cluster name: cluster1

Last updated: Mon Oct 24 07:48:09 2016 Last change: Mon Oct

24 07:48:03 2016 by root via cibadmin on labkvm1-cr

Stack: corosync

Current DC: labkvm1-cr (version 1.1.13-10.el7-44eb2dd) - partition

with quorum

2 nodes and 9 resources configured

Online: [ labkvm1-cr labkvm2-cr ]

Full list of resources:

ipmi-fencing (stonith:fence_ipmilan): Started labkvm1-cr

Clone Set: dlm-clone [dlm]

Started: [ labkvm1-cr labkvm2-cr ]

Clone Set: clvmd-clone [clvmd]

Started: [ labkvm1-cr labkvm2-cr ]

Master/Slave Set: VMDataClone [VMData]

Masters: [ labkvm1-cr labkvm2-cr ]

Clone Set: VMFS-clone [VMFS]

Started: [ labkvm1-cr labkvm2-cr ]

PCSD Status:

labkvm1-cr: Online

labkvm2-cr: Online

Daemon Status:

corosync: active/disabled

pacemaker: active/disabled

pcsd: active/enabled

3.7.3. Configuring SELinux

[ALL]# semanage fcontext -a -t virt_image_t "/vm(/.*)?"

[ALL]# restorecon -R -v /vm

3.8. Preparing a Test Virtual Machine

virsh # migrate --domain centos64a qemu+ssh://labkvm1-cr/system

3.9. Adding the Resource

CentOS

On the node where the virtual machine is active, shut the virtual machine down.

# virsh shutdown centos64a

# mkdir /vm/qemu_config

# virsh dumpxml centos64a > /vm/qemu_config/centos64a.xml

# virsh undefine centos64a

# pcs resource create centos64a_res VirtualDomain \

hypervisor="qemu:///system" \

config="/vm/qemu_config/centos64a.xml" \

migration_transport=ssh \

meta allow-migrate="true"

# pcs constraint order start VMFS-clone then centos64a_res

3.10. Migration Tests

pcs resource move

pcs cluster standby

pcs cluster stop

Force a reboot of one node
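The test commands above are listed without arguments. The sketch below spells out one possible sequence for the centos64a_res resource, using pcs subcommands available on CentOS 7. By default it only prints the commands (dry run); set DRY_RUN=0 to execute them. The function names and the order of the steps are assumptions, and the pauses and pcs status checks that a real run needs between steps are left out.

```shell
#!/bin/sh
# run CMD...
# Print the command; execute it only when DRY_RUN is set to 0.
run() {
    echo "+ $*"
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@"
    fi
}

# migration_test RESOURCE SOURCE_NODE TARGET_NODE
# (Hypothetical helper; one possible ordering of the tests listed above.)
migration_test() {
    res=$1
    src=$2
    dst=$3
    run pcs resource move "$res" "$dst"   # ask the cluster to move the VM to the target node
    run pcs resource clear "$res"         # remove the location constraint created by "move"
    run pcs cluster standby "$dst"        # put the target node into standby
    run pcs cluster unstandby "$dst"      # take it out of standby again
    run pcs cluster stop "$src"           # stop cluster services on the source node
    run pcs cluster start "$src"          # start them again so the node rejoins
}
```

Usage: `migration_test centos64a_res labkvm1-cr labkvm2-cr` prints the six commands; check `pcs status` after each step when running them for real.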