Mask-RCNN Network: Instance Segmentation

Instance segmentation can be viewed as two sub-tasks: object detection and semantic segmentation.

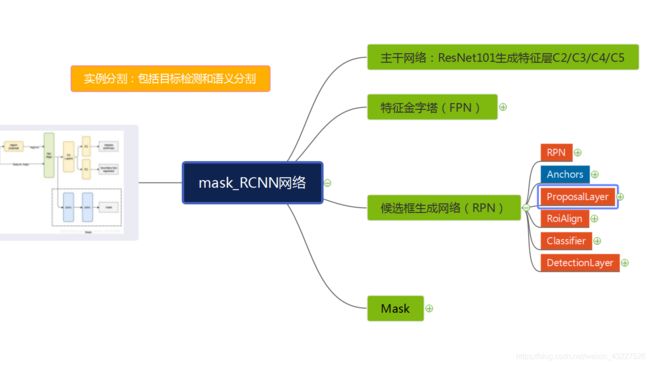

1. Mask-RCNN network architecture

2. Mask-RCNN step by step

2.1 Backbone feature extraction network: ResNet101

The input image size is assumed to be 1024×1024 by default.

Image source: https://blog.csdn.net/weixin_44791964/article/details/104629135

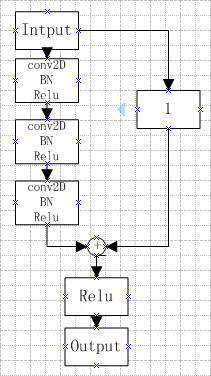

The residual blocks of a ResNet come in two types:

- Identity block

- Conv block

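The two blocks differ in whether the shortcut (residual) branch contains a convolution layer followed by a batch normalization layer.

Code: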

    # Keras layers used by the code in this section
    from keras.layers import (Activation, Add, BatchNormalization, Conv2D,
                              MaxPooling2D, ZeroPadding2D)

    def identity_block(input_tensor, kernel_size, filters, stage, block,
                       use_bias=True, train_bn=True):
        # Output channel counts of the three convolutions
        filter1, filter2, filter3 = filters
        conv_name_base = 'res' + str(stage) + block + '_branch'
        bn_name_base = 'bn' + str(stage) + block + '_branch'

        x = Conv2D(filter1, (1, 1), name=conv_name_base + '2a', use_bias=use_bias)(input_tensor)
        x = BatchNormalization(name=bn_name_base + '2a')(x, training=train_bn)
        x = Activation('relu')(x)

        x = Conv2D(filter2, (kernel_size, kernel_size), padding='same',
                   name=conv_name_base + '2b', use_bias=use_bias)(x)
        x = BatchNormalization(name=bn_name_base + '2b')(x, training=train_bn)
        x = Activation('relu')(x)

        x = Conv2D(filter3, (1, 1), name=conv_name_base + '2c',
                   use_bias=use_bias)(x)
        x = BatchNormalization(name=bn_name_base + '2c')(x, training=train_bn)

        # The shortcut branch of identity_block has no convolution:
        # the input is added back unchanged
        x = Add()([x, input_tensor])
        x = Activation('relu', name='res' + str(stage) + block + '_out')(x)
        return x

    def conv_block(input_tensor, kernel_size, filters, stage, block,
                   strides=(2, 2), use_bias=True, train_bn=True):
        # With the default strides=(2, 2), the output height and width are halved
        # Output channel counts of the three convolutions
        filter1, filter2, filter3 = filters
        conv_name_base = 'res' + str(stage) + block + '_branch'
        bn_name_base = 'bn' + str(stage) + block + '_branch'

        x = Conv2D(filter1, (1, 1), strides=strides,
                   name=conv_name_base + '2a', use_bias=use_bias)(input_tensor)
        x = BatchNormalization(name=bn_name_base + '2a')(x, training=train_bn)
        x = Activation('relu')(x)

        x = Conv2D(filter2, (kernel_size, kernel_size), padding='same',
                   name=conv_name_base + '2b', use_bias=use_bias)(x)
        x = BatchNormalization(name=bn_name_base + '2b')(x, training=train_bn)
        x = Activation('relu')(x)

        x = Conv2D(filter3, (1, 1), name=conv_name_base + '2c', use_bias=use_bias)(x)
        x = BatchNormalization(name=bn_name_base + '2c')(x, training=train_bn)

        # The shortcut branch applies a 1x1 convolution and batch normalization
        # to the input so that its shape matches the main branch
        shortcut = Conv2D(filter3, (1, 1), strides=strides,
                          name=conv_name_base + '1', use_bias=use_bias)(input_tensor)
        shortcut = BatchNormalization(name=bn_name_base + '1')(shortcut, training=train_bn)

        x = Add()([x, shortcut])
        x = Activation('relu', name='res' + str(stage) + block + '_out')(x)
        return x

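After one identity_block, the output width and height are unchanged and the channel count is filter3. Because the input is added back without any projection, filter3 must equal the number of input channels.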

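After one conv_block with the default strides=(2, 2), the output width and height are each halved and the channel count becomes filter3. With strides=(1, 1) only the channel count changes.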
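The shape bookkeeping of the two blocks can be checked with a few lines of plain Python. This is a minimal sketch rather than part of the original code: `conv_out` and `block_output_shape` are hypothetical helpers, the `[128, 128, 512]` filter values are illustrative, and the sketch assumes the layout shown above (the 1×1 convolutions use the default `'valid'` padding and carry the stride, while the k×k convolution uses `padding='same'`).

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def block_output_shape(input_shape, filters, kind, strides=(2, 2)):
    """Hypothetical helper: (height, width, channels) after one residual block."""
    height, width, channels = input_shape
    filter3 = filters[2]
    if kind == "identity":
        # The input is added back unchanged, so its channels must already match.
        if channels != filter3:
            raise ValueError("identity_block needs input channels == filter3")
        return (height, width, filter3)
    if kind == "conv":
        # Only the strided 1x1 convolutions change the spatial size.
        return (conv_out(height, 1, strides[0]),
                conv_out(width, 1, strides[1]),
                filter3)
    raise ValueError(f"unknown block kind: {kind}")


print(block_output_shape((256, 256, 256), [128, 128, 512], "conv"))
print(block_output_shape((128, 128, 512), [128, 128, 512], "identity"))
print(block_output_shape((256, 256, 64), [64, 64, 256], "conv", strides=(1, 1)))
```

The first call halves the spatial size, the second leaves the shape untouched, and the third (the stride-1 case) changes only the channel count.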

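In filter1, filter2, filter3 = filters, the three values are the output channel counts of the three convolutions. Each residual block contains several 1×1 convolutions, which has two benefits. First, a 1×1 convolution can change the channel count, so the 3×3 convolution runs on fewer channels and the block needs far fewer parameters than a direct 3×3 convolution at full width. Second, the extra layers increase the depth of the network.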
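To make the parameter saving concrete, the following sketch counts convolution weights for a bottleneck with filters `[64, 64, 256]` on a 256-channel input and compares it with a single 3×3 convolution that maps 256 channels to 256 channels. The helper names `conv_params` and `bottleneck_params` are hypothetical, batch normalization parameters and the shortcut branch are deliberately left out, and the 256-channel input is an assumption chosen for illustration.

```python
def conv_params(kernel, in_channels, out_channels, use_bias=True):
    """Number of trainable weights in a kernel x kernel convolution."""
    weights = kernel * kernel * in_channels * out_channels
    return weights + (out_channels if use_bias else 0)


def bottleneck_params(in_channels, filters, kernel_size=3):
    """Weights in the 1x1 -> kxk -> 1x1 main branch (batch norm not counted)."""
    filter1, filter2, filter3 = filters
    return (conv_params(1, in_channels, filter1)
            + conv_params(kernel_size, filter1, filter2)
            + conv_params(1, filter2, filter3))


bottleneck = bottleneck_params(256, [64, 64, 256])
direct = conv_params(3, 256, 256)
print(bottleneck)  # 70016
print(direct)      # 590080
```

Under these assumptions the three-layer bottleneck uses roughly one eighth of the weights of the single wide 3×3 convolution.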

Feature extraction through the residual network yields the feature maps C2, C3, C4 and C5.
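As a rough guide to the sizes involved, the sketch below computes the spatial size of each feature map for the 1024×1024 input assumed earlier. The strides 4, 8, 16 and 32 are an assumption based on the usual ResNet stage layout (each stage after C2 halving the resolution once), and `feature_map_sizes` is a hypothetical helper, not a function from the original code.

```python
def feature_map_sizes(image_size=1024, strides=None):
    """Spatial side length of each feature map for a square input."""
    if strides is None:
        # Assumed total downsampling factor of each stage output.
        strides = {"C2": 4, "C3": 8, "C4": 16, "C5": 32}
    return {name: image_size // stride for name, stride in strides.items()}


for name, size in feature_map_sizes().items():
    print(f"{name}: {size} x {size}")
```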

    def resnet(input_image, stage5=False, train_bn=True):
        # Stage 1: C1 is height/4, width/4 relative to the input image, 64 channels
        x = ZeroPadding2D((3, 3))(input_image)
        x = Conv2D(64, (7, 7), strides=(2, 2), name='conv1', use_bias=True)(x)
        x = BatchNormalization(name='bn_conv1')(x, training=train_bn)
        x = Activation('relu')(x)
        C1 = x = MaxPooling2D((3, 3), strides=(2, 2), padding="same")(x)

        # Stage 2: C2 is height/4, width/4 relative to the input image, 256 channels
        x = conv_block(x, 3, [64, 64, 256], stage=2, block='a', strides=(1, 1), train_bn=train_bn)
        x = identity_block(x, 3, [64, 64, 256], stage