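E-commerce Data Warehouse Notes 6: Data Warehouse System (Building the ODS Layer, Building the DIM Layer)

E-commerce Data Warehouse

- I. Building the Data Warehouse: ODS Layer

- 1. ODS Layer (User Behavior Data)

- (1) Creating the log table ods_log

- (2) Single quotes vs. double quotes in Shell

- (3) Data loading script for the ODS log table

- 2. ODS Layer (Business Data)

- (1) First-day data loading script for ODS business tables

- (2) Daily data loading script for ODS business tables

- II. Building the Data Warehouse: DIM Layer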

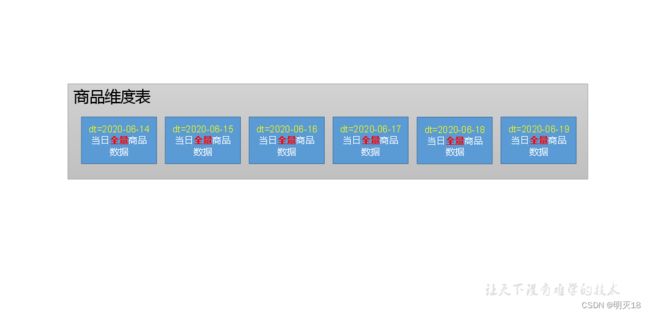

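- 1. Product dimension table (full snapshot)

- 2. Coupon dimension table (full snapshot)

- 3. Activity dimension table (full snapshot)

- 4. Region dimension table (special)

- 5. Date dimension table (special)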

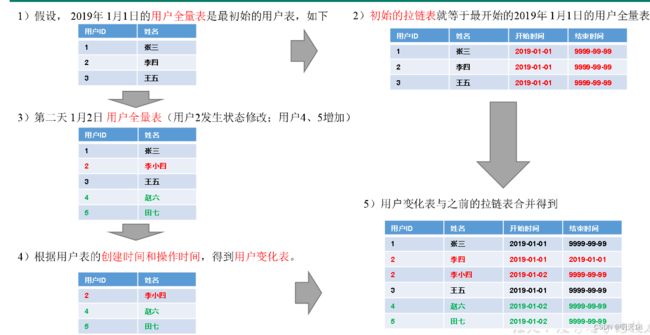

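- 6. User dimension table (zipper table)

- (1) Zipper table overview

- (2) Building the zipper table

- 7. First-day data loading script for the DIM layer

- 8. Daily data loading script for the DIM layer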

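I. Building the Data Warehouse: ODS Layer

1) Keep the data in its original form without any modification, so that the ODS layer also serves as a backup.

2) Compress the data with LZO to reduce disk usage; 100 GB of data can be compressed to under 10 GB.

3) Create partitioned tables to avoid full table scans in later queries; partitioned tables are used heavily in production.

4) Create external tables. In production, apart from temporary tables for personal use, which are created as managed (internal) tables, nearly all tables are external.

1. ODS Layer (User Behavior Data)

(1) Creating the log table ods_log

1) Create a partitioned table that supports LZO compression

(1) Table creation statement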

hive (gmall)>

drop table if exists ods_log;

CREATE EXTERNAL TABLE ods_log (`line` string)

PARTITIONED BY (`dt` string) -- partition by date

STORED AS -- storage format: data is read with LzoTextInputFormat

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_log' -- where the data is stored on HDFS

;

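Note: for details on LZO compression in Hive, see https://cwiki.apache.org/confluence/display/Hive/LanguageManual+LZO

2) Load data

hive (gmall)>

load data inpath '/origin_data/gmall/log/topic_log/2020-06-14' into table ods_log partition(dt='2020-06-14');

Note: use the yyyy-MM-dd format for all dates; this is the date format Hive supports by default.

3) Create an index for the LZO-compressed files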

[lyh@hadoop102 bin]$ hadoop jar /opt/module/hadoop-3.1.3/share/hadoop/common/hadoop-lzo-0.4.20.jar com.hadoop.compression.lzo.DistributedLzoIndexer /warehouse/gmall/ods/ods_log/dt=2020-06-14

(2) Single quotes vs. double quotes in Shell

1) Create a file named test.sh in /home/lyh/bin

[lyh@hadoop102 bin]$ vim test.sh

Add the following content to the file

#!/bin/bash

do_date=$1

echo '$do_date'

echo "$do_date"

echo "'$do_date'"

echo '"$do_date"'

echo `date`

2) Check the execution result

[lyh@hadoop102 bin]$ test.sh 2020-06-14

$do_date

2020-06-14

'2020-06-14'

"$do_date"

2020年 06月 18日 星期四 21:02:08 CST

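3) Summary:

(1) Single quotes do not expand variables

(2) Double quotes expand variables

(3) Backticks (`) execute the command inside them

(4) Single quotes nested inside double quotes: the variable is expanded

(5) Double quotes nested inside single quotes: the variable is not expanded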
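Rule (4) is what the loading scripts later in these notes rely on when they build a SQL string. The following is a minimal sketch of that pattern; the function name build_load_sql is a hypothetical helper introduced here for illustration, and the statement is only printed, not sent to Hive.

```shell
#!/bin/bash
# build_load_sql is a hypothetical helper used only for this illustration.
build_load_sql() {
    local app=$1 do_date=$2
    # The outer double quotes let the shell expand $app and $do_date.
    # The inner single quotes are ordinary characters here, so Hive
    # receives them as string delimiters around the path and the date.
    echo "load data inpath '/origin_data/$app/log/topic_log/$do_date' into table ${app}.ods_log partition(dt='$do_date');"
}

# Prints a complete statement for the given date.
build_load_sql gmall 2020-06-14

# For contrast: inside single quotes nothing is expanded,
# so the literal text $do_date would reach Hive.
do_date=2020-06-14
echo 'partition(dt=$do_date)'
```

The first call prints the statement with 2020-06-14 filled in at both places; the last line prints $do_date unchanged, which is why the SQL strings in the scripts are always wrapped in double quotes.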

(3) Data loading script for the ODS log table

1) Write the script

(1) Create the script in /home/lyh/bin on hadoop102

[lyh@hadoop102 bin]$ vim hdfs_to_ods_log.sh

Put the following content in the script

#!/bin/bash

# Define a variable so the database name is easy to change

APP=gmall

# Use the date passed as an argument if there is one; otherwise use yesterday's date

if [ -n "$1" ] ;then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

echo "================== Log date: $do_date =================="

sql="

load data inpath '/origin_data/$APP/log/topic_log/$do_date' into table ${APP}.ods_log partition(dt='$do_date');

"

hive -e "$sql"

hadoop jar /opt/module/hadoop-3.1.3/share/hadoop/common/hadoop-lzo-0.4.20.jar com.hadoop.compression.lzo.DistributedLzoIndexer /warehouse/$APP/ods/ods_log/dt=$do_date

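(1) Note 1:

[ -n value ] tests whether a variable's value is non-empty

-- value is non-empty: returns true

-- value is empty: returns false

Note: always wrap the variable in double quotes (" "), as in [ -n "$1" ]. If an unquoted variable is empty, the test collapses to [ -n ], which evaluates to true.

(2) Note 2:

For usage of the date command, see date --help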
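The two notes above can be checked with a short script. This is a minimal sketch, assuming GNU date (the -d option is not available in every date implementation); resolve_date is a hypothetical helper name that mirrors the if/else at the top of the loading script.

```shell
#!/bin/bash
# resolve_date is a hypothetical helper, not part of the original script.
# It returns the argument if one is given, otherwise yesterday's date.
resolve_date() {
    if [ -n "$1" ]; then
        echo "$1"
    else
        date -d "-1 day" +%F   # GNU date; %F is the same as %Y-%m-%d
    fi
}

# Why the quotes matter: with an empty variable, [ -n $empty ] collapses
# to [ -n ], a one-argument test that is true because "-n" is a
# non-empty string.
empty=""
if [ -n $empty ]; then unquoted=true; else unquoted=false; fi
if [ -n "$empty" ]; then quoted=true; else quoted=false; fi
echo "unquoted=$unquoted quoted=$quoted"

resolve_date 2020-06-14
```

The comparison line prints unquoted=true quoted=false, so only the quoted form correctly detects a missing argument.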

(2) Make the script executable

[lyh@hadoop102 bin]$ chmod 777 hdfs_to_ods_log.sh

2) Using the script

(1) Run the script

[lyh@hadoop102 module]$ hdfs_to_ods_log.sh 2020-06-14

(2) Check the imported data

2. ODS Layer (Business Data)

4.2.1 Activity information table

DROP TABLE IF EXISTS ods_activity_info;

CREATE EXTERNAL TABLE ods_activity_info(

`id` STRING COMMENT '编号',

`activity_name` STRING COMMENT '活动名称',

`activity_type` STRING COMMENT '活动类型',

`start_time` STRING COMMENT '开始时间',

`end_time` STRING COMMENT '结束时间',

`create_time` STRING COMMENT '创建时间'

) COMMENT '活动信息表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_activity_info/';

4.2.2 Activity rule table

DROP TABLE IF EXISTS ods_activity_rule;

CREATE EXTERNAL TABLE ods_activity_rule(

`id` STRING COMMENT '编号',

`activity_id` STRING COMMENT '活动ID',

`activity_type` STRING COMMENT '活动类型',

`condition_amount` DECIMAL(16,2) COMMENT '满减金额',

`condition_num` BIGINT COMMENT '满减件数',

`benefit_amount` DECIMAL(16,2) COMMENT '优惠金额',

`benefit_discount` DECIMAL(16,2) COMMENT '优惠折扣',

`benefit_level` STRING COMMENT '优惠级别'

) COMMENT '活动规则表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_activity_rule/';

4.2.3 Level-1 category table

DROP TABLE IF EXISTS ods_base_category1;

CREATE EXTERNAL TABLE ods_base_category1(

`id` STRING COMMENT 'id',

`name` STRING COMMENT '名称'

) COMMENT '商品一级分类表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_base_category1/';

4.2.4 Level-2 category table

DROP TABLE IF EXISTS ods_base_category2;

CREATE EXTERNAL TABLE ods_base_category2(

`id` STRING COMMENT ' id',

`name` STRING COMMENT '名称',

`category1_id` STRING COMMENT '一级品类id'

) COMMENT '商品二级分类表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_base_category2/';

4.2.5 Level-3 category table

DROP TABLE IF EXISTS ods_base_category3;

CREATE EXTERNAL TABLE ods_base_category3(

`id` STRING COMMENT ' id',

`name` STRING COMMENT '名称',

`category2_id` STRING COMMENT '二级品类id'

) COMMENT '商品三级分类表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_base_category3/';

4.2.6 Code dictionary table

DROP TABLE IF EXISTS ods_base_dic;

CREATE EXTERNAL TABLE ods_base_dic(

`dic_code` STRING COMMENT '编号',

`dic_name` STRING COMMENT '编码名称',

`parent_code` STRING COMMENT '父编码',

`create_time` STRING COMMENT '创建日期',

`operate_time` STRING COMMENT '操作日期'

) COMMENT '编码字典表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_base_dic/';

4.2.7 Province table

DROP TABLE IF EXISTS ods_base_province;

CREATE EXTERNAL TABLE ods_base_province (

`id` STRING COMMENT '编号',

`name` STRING COMMENT '省份名称',

`region_id` STRING COMMENT '地区ID',

`area_code` STRING COMMENT '地区编码',

`iso_code` STRING COMMENT 'ISO-3166编码,供可视化使用',

`iso_3166_2` STRING COMMENT 'ISO-3166-2编码,供可视化使用'

) COMMENT '省份表'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_base_province/';

4.2.8 Region table

DROP TABLE IF EXISTS ods_base_region;

CREATE EXTERNAL TABLE ods_base_region (

`id` STRING COMMENT '编号',

`region_name` STRING COMMENT '地区名称'

) COMMENT '地区表'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_base_region/';

4.2.9 Brand (trademark) table

DROP TABLE IF EXISTS ods_base_trademark;

CREATE EXTERNAL TABLE ods_base_trademark (

`id` STRING COMMENT '编号',

`tm_name` STRING COMMENT '品牌名称'

) COMMENT '品牌表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_base_trademark/';

4.2.10 Shopping cart table

DROP TABLE IF EXISTS ods_cart_info;

CREATE EXTERNAL TABLE ods_cart_info(

`id` STRING COMMENT '编号',

`user_id` STRING COMMENT '用户id',

`sku_id` STRING COMMENT 'skuid',

`cart_price` DECIMAL(16,2) COMMENT '放入购物车时价格',

`sku_num` BIGINT COMMENT '数量',

`sku_name` STRING COMMENT 'sku名称 (冗余)',

`create_time` STRING COMMENT '创建时间',

`operate_time` STRING COMMENT '修改时间',

`is_ordered` STRING COMMENT '是否已经下单',

`order_time` STRING COMMENT '下单时间',

`source_type` STRING COMMENT '来源类型',

`source_id` STRING COMMENT '来源编号'

) COMMENT '加购表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_cart_info/';

4.2.11 Comment table

DROP TABLE IF EXISTS ods_comment_info;

CREATE EXTERNAL TABLE ods_comment_info(

`id` STRING COMMENT '编号',

`user_id` STRING COMMENT '用户ID',

`sku_id` STRING COMMENT '商品sku',

`spu_id` STRING COMMENT '商品spu',

`order_id` STRING COMMENT '订单ID',

`appraise` STRING COMMENT '评价',

`create_time` STRING COMMENT '评价时间'

) COMMENT '商品评论表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_comment_info/';

4.2.12 Coupon information table

DROP TABLE IF EXISTS ods_coupon_info;

CREATE EXTERNAL TABLE ods_coupon_info(

`id` STRING COMMENT '购物券编号',

`coupon_name` STRING COMMENT '购物券名称',

`coupon_type` STRING COMMENT '购物券类型 1 现金券 2 折扣券 3 满减券 4 满件打折券',

`condition_amount` DECIMAL(16,2) COMMENT '满额数',

`condition_num` BIGINT COMMENT '满件数',

`activity_id` STRING COMMENT '活动编号',

`benefit_amount` DECIMAL(16,2) COMMENT '减金额',

`benefit_discount` DECIMAL(16,2) COMMENT '折扣',

`create_time` STRING COMMENT '创建时间',

`range_type` STRING COMMENT '范围类型 1、商品 2、品类 3、品牌',

`limit_num` BIGINT COMMENT '最多领用次数',

`taken_count` BIGINT COMMENT '已领用次数',

`start_time` STRING COMMENT '开始领取时间',

`end_time` STRING COMMENT '结束领取时间',

`operate_time` STRING COMMENT '修改时间',

`expire_time` STRING COMMENT '过期时间'

) COMMENT '优惠券表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_coupon_info/';

4.2.13 Coupon usage table

DROP TABLE IF EXISTS ods_coupon_use;

CREATE EXTERNAL TABLE ods_coupon_use(

`id` STRING COMMENT '编号',

`coupon_id` STRING COMMENT '优惠券ID',

`user_id` STRING COMMENT '用户id',

`order_id` STRING COMMENT '订单id',

`coupon_status` STRING COMMENT '优惠券状态',

`get_time` STRING COMMENT '领取时间',

`using_time` STRING COMMENT '使用时间(下单)',

`used_time` STRING COMMENT '使用时间(支付)',

`expire_time` STRING COMMENT '过期时间'

) COMMENT '优惠券领用表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_coupon_use/';

4.2.14 Favorites table

DROP TABLE IF EXISTS ods_favor_info;

CREATE EXTERNAL TABLE ods_favor_info(

`id` STRING COMMENT '编号',

`user_id` STRING COMMENT '用户id',

`sku_id` STRING COMMENT 'skuid',

`spu_id` STRING COMMENT 'spuid',

`is_cancel` STRING COMMENT '是否取消',

`create_time` STRING COMMENT '收藏时间',

`cancel_time` STRING COMMENT '取消时间'

) COMMENT '商品收藏表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_favor_info/';

4.2.15 Order detail table

DROP TABLE IF EXISTS ods_order_detail;

CREATE EXTERNAL TABLE ods_order_detail(

`id` STRING COMMENT '编号',

`order_id` STRING COMMENT '订单号',

`sku_id` STRING COMMENT '商品id',

`sku_name` STRING COMMENT '商品名称',

`order_price` DECIMAL(16,2) COMMENT '商品价格',

`sku_num` BIGINT COMMENT '商品数量',

`create_time` STRING COMMENT '创建时间',

`source_type` STRING COMMENT '来源类型',

`source_id` STRING COMMENT '来源编号',

`split_final_amount` DECIMAL(16,2) COMMENT '分摊最终金额',

`split_activity_amount` DECIMAL(16,2) COMMENT '分摊活动优惠',

`split_coupon_amount` DECIMAL(16,2) COMMENT '分摊优惠券优惠'

) COMMENT '订单详情表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_order_detail/';

4.2.16 Order detail and activity association table

DROP TABLE IF EXISTS ods_order_detail_activity;

CREATE EXTERNAL TABLE ods_order_detail_activity(

`id` STRING COMMENT '编号',

`order_id` STRING COMMENT '订单号',

`order_detail_id` STRING COMMENT '订单明细id',

`activity_id` STRING COMMENT '活动id',

`activity_rule_id` STRING COMMENT '活动规则id',

`sku_id` BIGINT COMMENT '商品id',

`create_time` STRING COMMENT '创建时间'

) COMMENT '订单详情活动关联表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_order_detail_activity/';

4.2.17 Order detail and coupon association table

DROP TABLE IF EXISTS ods_order_detail_coupon;

CREATE EXTERNAL TABLE ods_order_detail_coupon(

`id` STRING COMMENT '编号',

`order_id` STRING COMMENT '订单号',

`order_detail_id` STRING COMMENT '订单明细id',

`coupon_id` STRING COMMENT '优惠券id',

`coupon_use_id` STRING COMMENT '优惠券领用记录id',

`sku_id` STRING COMMENT '商品id',

`create_time` STRING COMMENT '创建时间'

) COMMENT '订单详情优惠券关联表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_order_detail_coupon/';

4.2.18 Order table

DROP TABLE IF EXISTS ods_order_info;

CREATE EXTERNAL TABLE ods_order_info (

`id` STRING COMMENT '订单号',

`final_amount` DECIMAL(16,2) COMMENT '订单最终金额',

`order_status` STRING COMMENT '订单状态',

`user_id` STRING COMMENT '用户id',

`payment_way` STRING COMMENT '支付方式',

`delivery_address` STRING COMMENT '送货地址',

`out_trade_no` STRING COMMENT '支付流水号',

`create_time` STRING COMMENT '创建时间',

`operate_time` STRING COMMENT '操作时间',

`expire_time` STRING COMMENT '过期时间',

`tracking_no` STRING COMMENT '物流单编号',

`province_id` STRING COMMENT '省份ID',

`activity_reduce_amount` DECIMAL(16,2) COMMENT '活动减免金额',

`coupon_reduce_amount` DECIMAL(16,2) COMMENT '优惠券减免金额',

`original_amount` DECIMAL(16,2) COMMENT '订单原价金额',

`feight_fee` DECIMAL(16,2) COMMENT '运费',

`feight_fee_reduce` DECIMAL(16,2) COMMENT '运费减免'

) COMMENT '订单表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_order_info/';

4.2.19 Order refund table

DROP TABLE IF EXISTS ods_order_refund_info;

CREATE EXTERNAL TABLE ods_order_refund_info(

`id` STRING COMMENT '编号',

`user_id` STRING COMMENT '用户ID',

`order_id` STRING COMMENT '订单ID',

`sku_id` STRING COMMENT '商品ID',

`refund_type` STRING COMMENT '退单类型',

`refund_num` BIGINT COMMENT '退单件数',

`refund_amount` DECIMAL(16,2) COMMENT '退单金额',

`refund_reason_type` STRING COMMENT '退单原因类型',

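`refund_status` STRING COMMENT '退单状态', -- The refund status should cover buyer request, seller review, seller receipt, refund completed, and so on. These states are not modeled here, so this table is loaded incrementally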

`create_time` STRING COMMENT '退单时间'

) COMMENT '退单表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_order_refund_info/';

4.2.20 Order status log table

DROP TABLE IF EXISTS ods_order_status_log;

CREATE EXTERNAL TABLE ods_order_status_log (

`id` STRING COMMENT '编号',

`order_id` STRING COMMENT '订单ID',

`order_status` STRING COMMENT '订单状态',

`operate_time` STRING COMMENT '修改时间'

) COMMENT '订单状态表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_order_status_log/';

4.2.21 Payment table

DROP TABLE IF EXISTS ods_payment_info;

CREATE EXTERNAL TABLE ods_payment_info(

`id` STRING COMMENT '编号',

`out_trade_no` STRING COMMENT '对外业务编号',

`order_id` STRING COMMENT '订单编号',

`user_id` STRING COMMENT '用户编号',

`payment_type` STRING COMMENT '支付类型',

`trade_no` STRING COMMENT '交易编号',

`payment_amount` DECIMAL(16,2) COMMENT '支付金额',

`subject` STRING COMMENT '交易内容',

`payment_status` STRING COMMENT '支付状态',

`create_time` STRING COMMENT '创建时间',

`callback_time` STRING COMMENT '回调时间'

) COMMENT '支付流水表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_payment_info/';

4.2.22 Refund payment table

DROP TABLE IF EXISTS ods_refund_payment;

CREATE EXTERNAL TABLE ods_refund_payment(

`id` STRING COMMENT '编号',

`out_trade_no` STRING COMMENT '对外业务编号',

`order_id` STRING COMMENT '订单编号',

`sku_id` STRING COMMENT 'SKU编号',

`payment_type` STRING COMMENT '支付类型',

`trade_no` STRING COMMENT '交易编号',

`refund_amount` DECIMAL(16,2) COMMENT '退款金额',

`subject` STRING COMMENT '交易内容',

`refund_status` STRING COMMENT '退款状态',

`create_time` STRING COMMENT '创建时间',

`callback_time` STRING COMMENT '回调时间'

) COMMENT '退款表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_refund_payment/';

4.2.23 SKU platform attribute table

DROP TABLE IF EXISTS ods_sku_attr_value;

CREATE EXTERNAL TABLE ods_sku_attr_value(

`id` STRING COMMENT '编号',

`attr_id` STRING COMMENT '平台属性ID',

`value_id` STRING COMMENT '平台属性值ID',

`sku_id` STRING COMMENT '商品ID',

`attr_name` STRING COMMENT '平台属性名称',

`value_name` STRING COMMENT '平台属性值名称'

) COMMENT 'sku平台属性表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_sku_attr_value/';

4.2.24 Product (SKU) table

DROP TABLE IF EXISTS ods_sku_info;

CREATE EXTERNAL TABLE ods_sku_info(

`id` STRING COMMENT 'skuId',

`spu_id` STRING COMMENT 'spuid',

`price` DECIMAL(16,2) COMMENT '价格',

`sku_name` STRING COMMENT '商品名称',

`sku_desc` STRING COMMENT '商品描述',

`weight` DECIMAL(16,2) COMMENT '重量',

`tm_id` STRING COMMENT '品牌id',

`category3_id` STRING COMMENT '品类id',

`is_sale` STRING COMMENT '是否在售',

`create_time` STRING COMMENT '创建时间'

) COMMENT 'SKU商品表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_sku_info/';

4.2.25 SKU sales attribute table

DROP TABLE IF EXISTS ods_sku_sale_attr_value;

CREATE EXTERNAL TABLE ods_sku_sale_attr_value(

`id` STRING COMMENT '编号',

`sku_id` STRING COMMENT 'sku_id',

`spu_id` STRING COMMENT 'spu_id',

`sale_attr_value_id` STRING COMMENT '销售属性值id',

`sale_attr_id` STRING COMMENT '销售属性id',

`sale_attr_name` STRING COMMENT '销售属性名称',

`sale_attr_value_name` STRING COMMENT '销售属性值名称'

) COMMENT 'sku销售属性名称'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_sku_sale_attr_value/';

4.2.26 Product (SPU) table

DROP TABLE IF EXISTS ods_spu_info;

CREATE EXTERNAL TABLE ods_spu_info(

`id` STRING COMMENT 'spuid',

`spu_name` STRING COMMENT 'spu名称',

`category3_id` STRING COMMENT '品类id',

`tm_id` STRING COMMENT '品牌id'

) COMMENT 'SPU商品表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_spu_info/';

4.2.27 User table

DROP TABLE IF EXISTS ods_user_info;

CREATE EXTERNAL TABLE ods_user_info(

`id` STRING COMMENT '用户id',

`login_name` STRING COMMENT '用户名称',

`nick_name` STRING COMMENT '用户昵称',

`name` STRING COMMENT '用户姓名',

`phone_num` STRING COMMENT '手机号码',

`email` STRING COMMENT '邮箱',

`user_level` STRING COMMENT '用户等级',

`birthday` STRING COMMENT '生日',

`gender` STRING COMMENT '性别',

`create_time` STRING COMMENT '创建时间',

`operate_time` STRING COMMENT '操作时间'

) COMMENT '用户表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_user_info/';

(1) First-day data loading script for ODS business tables

1) Write the script

(1) Create the script hdfs_to_ods_db_init.sh in /home/lyh/bin

[lyh@hadoop102 bin]$ vim hdfs_to_ods_db_init.sh

Put the following content in the script

#!/bin/bash

APP=gmall

if [ -n "$2" ] ;then

do_date=$2

else

echo "Please pass a date argument"

exit 1

fi

ods_order_info="

load data inpath '/origin_data/$APP/db/order_info/$do_date' OVERWRITE into table ${APP}.ods_order_info partition(dt='$do_date');"

ods_order_detail="

load data inpath '/origin_data/$APP/db/order_detail/$do_date' OVERWRITE into table ${APP}.ods_order_detail partition(dt='$do_date');"

ods_sku_info="

load data inpath '/origin_data/$APP/db/sku_info/$do_date' OVERWRITE into table ${APP}.ods_sku_info partition(dt='$do_date');"

ods_user_info="

load data inpath '/origin_data/$APP/db/user_info/$do_date' OVERWRITE into table ${APP}.ods_user_info partition(dt='$do_date');"

ods_payment_info="

load data inpath '/origin_data/$APP/db/payment_info/$do_date' OVERWRITE into table ${APP}.ods_payment_info partition(dt='$do_date');"

ods_base_category1="

load data inpath '/origin_data/$APP/db/base_category1/$do_date' OVERWRITE into table ${APP}.ods_base_category1 partition(dt='$do_date');"

ods_base_category2="

load data inpath '/origin_data/$APP/db/base_category2/$do_date' OVERWRITE into table ${APP}.ods_base_category2 partition(dt='$do_date');"

ods_base_category3="

load data inpath '/origin_data/$APP/db/base_category3/$do_date' OVERWRITE into table ${APP}.ods_base_category3 partition(dt='$do_date'); "

ods_base_trademark="

load data inpath '/origin_data/$APP/db/base_trademark/$do_date' OVERWRITE into table ${APP}.ods_base_trademark partition(dt='$do_date'); "

ods_activity_info="

load data inpath '/origin_data/$APP/db/activity_info/$do_date' OVERWRITE into table ${APP}.ods_activity_info partition(dt='$do_date'); "

ods_cart_info="

load data inpath '/origin_data/$APP/db/cart_info/$do_date' OVERWRITE into table ${APP}.ods_cart_info partition(dt='$do_date'); "

ods_comment_info="

load data inpath '/origin_data/$APP/db/comment_info/$do_date' OVERWRITE into table ${APP}.ods_comment_info partition(dt='$do_date'); "

ods_coupon_info="

load data inpath '/origin_data/$APP/db/coupon_info/$do_date' OVERWRITE into table ${APP}.ods_coupon_info partition(dt='$do_date'); "

ods_coupon_use="

load data inpath '/origin_data/$APP/db/coupon_use/$do_date' OVERWRITE into table ${APP}.ods_coupon_use partition(dt='$do_date'); "

ods_favor_info="

load data inpath '/origin_data/$APP/db/favor_info/$do_date' OVERWRITE into table ${APP}.ods_favor_info partition(dt='$do_date'); "

ods_order_refund_info="

load data inpath '/origin_data/$APP/db/order_refund_info/$do_date' OVERWRITE into table ${APP}.ods_order_refund_info partition(dt='$do_date'); "

ods_order_status_log="

load data inpath '/origin_data/$APP/db/order_status_log/$do_date' OVERWRITE into table ${APP}.ods_order_status_log partition(dt='$do_date'); "

ods_spu_info="

load data inpath '/origin_data/$APP/db/spu_info/$do_date' OVERWRITE into table ${APP}.ods_spu_info partition(dt='$do_date'); "

ods_activity_rule="

load data inpath '/origin_data/$APP/db/activity_rule/$do_date' OVERWRITE into table ${APP}.ods_activity_rule partition(dt='$do_date');"

ods_base_dic="

load data inpath '/origin_data/$APP/db/base_dic/$do_date' OVERWRITE into table ${APP}.ods_base_dic partition(dt='$do_date'); "

ods_order_detail_activity="

load data inpath '/origin_data/$APP/db/order_detail_activity/$do_date' OVERWRITE into table ${APP}.ods_order_detail_activity partition(dt='$do_date'); "

ods_order_detail_coupon="

load data inpath '/origin_data/$APP/db/order_detail_coupon/$do_date' OVERWRITE into table ${APP}.ods_order_detail_coupon partition(dt='$do_date'); "

ods_refund_payment="

load data inpath '/origin_data/$APP/db/refund_payment/$do_date' OVERWRITE into table ${APP}.ods_refund_payment partition(dt='$do_date'); "

ods_sku_attr_value="

load data inpath '/origin_data/$APP/db/sku_attr_value/$do_date' OVERWRITE into table ${APP}.ods_sku_attr_value partition(dt='$do_date'); "

ods_sku_sale_attr_value="

load data inpath '/origin_data/$APP/db/sku_sale_attr_value/$do_date' OVERWRITE into table ${APP}.ods_sku_sale_attr_value partition(dt='$do_date'); "

ods_base_province="

load data inpath '/origin_data/$APP/db/base_province/$do_date' OVERWRITE into table ${APP}.ods_base_province;"

ods_base_region="

load data inpath '/origin_data/$APP/db/base_region/$do_date' OVERWRITE into table ${APP}.ods_base_region;"

case $1 in

"ods_order_info"){

hive -e "$ods_order_info"

};;

"ods_order_detail"){

hive -e "$ods_order_detail"

};;

"ods_sku_info"){

hive -e "$ods_sku_info"

};;

"ods_user_info"){

hive -e "$ods_user_info"

};;

"ods_payment_info"){

hive -e "$ods_payment_info"

};;

"ods_base_category1"){

hive -e "$ods_base_category1"

};;

"ods_base_category2"){

hive -e "$ods_base_category2"

};;

"ods_base_category3"){

hive -e "$ods_base_category3"

};;

"ods_base_trademark"){

hive -e "$ods_base_trademark"

};;

"ods_activity_info"){

hive -e "$ods_activity_info"

};;

"ods_cart_info"){

hive -e "$ods_cart_info"

};;

"ods_comment_info"){

hive -e "$ods_comment_info"

};;

"ods_coupon_info"){

hive -e "$ods_coupon_info"

};;

"ods_coupon_use"){

hive -e "$ods_coupon_use"

};;

"ods_favor_info"){

hive -e "$ods_favor_info"

};;

"ods_order_refund_info"){

hive -e "$ods_order_refund_info"

};;

"ods_order_status_log"){

hive -e "$ods_order_status_log"

};;

"ods_spu_info"){

hive -e "$ods_spu_info"

};;

"ods_activity_rule"){

hive -e "$ods_activity_rule"

};;

"ods_base_dic"){

hive -e "$ods_base_dic"

};;

"ods_order_detail_activity"){

hive -e "$ods_order_detail_activity"

};;

"ods_order_detail_coupon"){

hive -e "$ods_order_detail_coupon"

};;

"ods_refund_payment"){

hive -e "$ods_refund_payment"

};;

"ods_sku_attr_value"){

hive -e "$ods_sku_attr_value"

};;

"ods_sku_sale_attr_value"){

hive -e "$ods_sku_sale_attr_value"

};;

"ods_base_province"){

hive -e "$ods_base_province"

};;

"ods_base_region"){

hive -e "$ods_base_region"

};;

"all"){

hive -e "$ods_order_info$ods_order_detail$ods_sku_info$ods_user_info$ods_payment_info$ods_base_category1$ods_base_category2$ods_base_category3$ods_base_trademark$ods_activity_info$ods_cart_info$ods_comment_info$ods_coupon_info$ods_coupon_use$ods_favor_info$ods_order_refund_info$ods_order_status_log$ods_spu_info$ods_activity_rule$ods_base_dic$ods_order_detail_activity$ods_order_detail_coupon$ods_refund_payment$ods_sku_attr_value$ods_sku_sale_attr_value$ods_base_province$ods_base_region"

};;

esac
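The script above spells out one variable and one case branch per table. As an alternative, the statements can be generated from the table name, since each source directory is the table name without the ods_ prefix. The following is only a sketch of that idea, not the script used in these notes; load_stmt is a hypothetical helper, and the statements are printed rather than passed to hive -e so the sketch runs without a Hive installation.

```shell
#!/bin/bash
APP=gmall

# load_stmt is a hypothetical helper: it prints the LOAD statement for one
# ODS table. $1 = ODS table name (e.g. ods_order_info), $2 = date.
load_stmt() {
    local table=$1 do_date=$2
    local src=${table#ods_}   # ods_order_info -> order_info
    case $table in
        # The province and region tables are not partitioned.
        ods_base_province|ods_base_region)
            echo "load data inpath '/origin_data/$APP/db/$src/$do_date' OVERWRITE into table ${APP}.$table;" ;;
        *)
            echo "load data inpath '/origin_data/$APP/db/$src/$do_date' OVERWRITE into table ${APP}.$table partition(dt='$do_date');" ;;
    esac
}

# Print the statements for two sample tables.
for t in ods_order_info ods_base_province; do
    load_stmt "$t" 2020-06-14
done
```

To run the generated statements, the loop output could be collected into one string and passed to hive -e, in the same way the "all" branch concatenates its variables.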

(2) Make the script executable

[lyh@hadoop102 bin]$ chmod +x hdfs_to_ods_db_init.sh

2) Using the script

(1) Run the script

[lyh@hadoop102 bin]$ hdfs_to_ods_db_init.sh all 2020-06-14

(2) Check that the data was imported successfully

(2) Daily data loading script for ODS business tables

1) Write the script

(1) Create the script hdfs_to_ods_db.sh in /home/lyh/bin

[lyh@hadoop102 bin]$ vim hdfs_to_ods_db.sh

Put the following content in the script

#!/bin/bash

APP=gmall

# Use the date passed as an argument if there is one; otherwise use yesterday's date

if [ -n "$2" ] ;then

do_date=$2

else

do_date=`date -d "-1 day" +%F`

fi

ods_order_info="

load data inpath '/origin_data/$APP/db/order_info/$do_date' OVERWRITE into table ${APP}.ods_order_info partition(dt='$do_date');"

ods_order_detail="

load data inpath '/origin_data/$APP/db/order_detail/$do_date' OVERWRITE into table ${APP}.ods_order_detail partition(dt='$do_date');"

ods_sku_info="

load data inpath '/origin_data/$APP/db/sku_info/$do_date' OVERWRITE into table ${APP}.ods_sku_info partition(dt='$do_date');"

ods_user_info="

load data inpath '/origin_data/$APP/db/user_info/$do_date' OVERWRITE into table ${APP}.ods_user_info partition(dt='$do_date');"

ods_payment_info="

load data inpath '/origin_data/$APP/db/payment_info/$do_date' OVERWRITE into table ${APP}.ods_payment_info partition(dt='$do_date');"

ods_base_category1="

load data inpath '/origin_data/$APP/db/base_category1/$do_date' OVERWRITE into table ${APP}.ods_base_category1 partition(dt='$do_date');"

ods_base_category2="

load data inpath '/origin_data/$APP/db/base_category2/$do_date' OVERWRITE into table ${APP}.ods_base_category2 partition(dt='$do_date');"

ods_base_category3="

load data inpath '/origin_data/$APP/db/base_category3/$do_date' OVERWRITE into table ${APP}.ods_base_category3 partition(dt='$do_date'); "

ods_base_trademark="

load data inpath '/origin_data/$APP/db/base_trademark/$do_date' OVERWRITE into table ${APP}.ods_base_trademark partition(dt='$do_date'); "

ods_activity_info="

load data inpath '/origin_data/$APP/db/activity_info/$do_date' OVERWRITE into table ${APP}.ods_activity_info partition(dt='$do_date'); "

ods_cart_info="

load data inpath '/origin_data/$APP/db/cart_info/$do_date' OVERWRITE into table ${APP}.ods_cart_info partition(dt='$do_date'); "

ods_comment_info="

load data inpath '/origin_data/$APP/db/comment_info/$do_date' OVERWRITE into table ${APP}.ods_comment_info partition(dt='$do_date'); "

ods_coupon_info="

load data inpath '/origin_data/$APP/db/coupon_info/$do_date' OVERWRITE into table ${APP}.ods_coupon_info partition(dt='$do_date'); "

ods_coupon_use="

load data inpath '/origin_data/$APP/db/coupon_use/$do_date' OVERWRITE into table ${APP}.ods_coupon_use partition(dt='$do_date'); "

ods_favor_info="

load data inpath '/origin_data/$APP/db/favor_info/$do_date' OVERWRITE into table ${APP}.ods_favor_info partition(dt='$do_date'); "

ods_order_refund_info="

load data inpath '/origin_data/$APP/db/order_refund_info/$do_date' OVERWRITE into table ${APP}.ods_order_refund_info partition(dt='$do_date'); "

ods_order_status_log="

load data inpath '/origin_data/$APP/db/order_status_log/$do_date' OVERWRITE into table ${APP}.ods_order_status_log partition(dt='$do_date'); "

ods_spu_info="

load data inpath '/origin_data/$APP/db/spu_info/$do_date' OVERWRITE into table ${APP}.ods_spu_info partition(dt='$do_date'); "

ods_activity_rule="

load data inpath '/origin_data/$APP/db/activity_rule/$do_date' OVERWRITE into table ${APP}.ods_activity_rule partition(dt='$do_date');"

ods_base_dic="

load data inpath '/origin_data/$APP/db/base_dic/$do_date' OVERWRITE into table ${APP}.ods_base_dic partition(dt='$do_date'); "

ods_order_detail_activity="

load data inpath '/origin_data/$APP/db/order_detail_activity/$do_date' OVERWRITE into table ${APP}.ods_order_detail_activity partition(dt='$do_date'); "

ods_order_detail_coupon="

load data inpath '/origin_data/$APP/db/order_detail_coupon/$do_date' OVERWRITE into table ${APP}.ods_order_detail_coupon partition(dt='$do_date'); "

ods_refund_payment="

load data inpath '/origin_data/$APP/db/refund_payment/$do_date' OVERWRITE into table ${APP}.ods_refund_payment partition(dt='$do_date'); "

ods_sku_attr_value="

load data inpath '/origin_data/$APP/db/sku_attr_value/$do_date' OVERWRITE into table ${APP}.ods_sku_attr_value partition(dt='$do_date'); "

ods_sku_sale_attr_value="

load data inpath '/origin_data/$APP/db/sku_sale_attr_value/$do_date' OVERWRITE into table ${APP}.ods_sku_sale_attr_value partition(dt='$do_date'); "

ods_base_province="

load data inpath '/origin_data/$APP/db/base_province/$do_date' OVERWRITE into table ${APP}.ods_base_province;"

ods_base_region="

load data inpath '/origin_data/$APP/db/base_region/$do_date' OVERWRITE into table ${APP}.ods_base_region;"

case $1 in

"ods_order_info"){

hive -e "$ods_order_info"

};;

"ods_order_detail"){

hive -e "$ods_order_detail"

};;

"ods_sku_info"){

hive -e "$ods_sku_info"

};;

"ods_user_info"){

hive -e "$ods_user_info"

};;

"ods_payment_info"){

hive -e "$ods_payment_info"

};;

"ods_base_category1"){

hive -e "$ods_base_category1"

};;

"ods_base_category2"){

hive -e "$ods_base_category2"

};;

"ods_base_category3"){

hive -e "$ods_base_category3"

};;

"ods_base_trademark"){

hive -e "$ods_base_trademark"

};;

"ods_activity_info"){

hive -e "$ods_activity_info"

};;

"ods_cart_info"){

hive -e "$ods_cart_info"

};;

"ods_comment_info"){

hive -e "$ods_comment_info"

};;

"ods_coupon_info"){

hive -e "$ods_coupon_info"

};;

"ods_coupon_use"){

hive -e "$ods_coupon_use"

};;

"ods_favor_info"){

hive -e "$ods_favor_info"

};;

"ods_order_refund_info"){

hive -e "$ods_order_refund_info"

};;

"ods_order_status_log"){

hive -e "$ods_order_status_log"

};;

"ods_spu_info"){

hive -e "$ods_spu_info"

};;

"ods_activity_rule"){

hive -e "$ods_activity_rule"

};;

"ods_base_dic"){

hive -e "$ods_base_dic"

};;

"ods_order_detail_activity"){

hive -e "$ods_order_detail_activity"

};;

"ods_order_detail_coupon"){

hive -e "$ods_order_detail_coupon"

};;

"ods_refund_payment"){

hive -e "$ods_refund_payment"

};;

"ods_sku_attr_value"){

hive -e "$ods_sku_attr_value"

};;

"ods_sku_sale_attr_value"){

hive -e "$ods_sku_sale_attr_value"

};;

"all"){

hive -e "$ods_order_info$ods_order_detail$ods_sku_info$ods_user_info$ods_payment_info$ods_base_category1$ods_base_category2$ods_base_category3$ods_base_trademark$ods_activity_info$ods_cart_info$ods_comment_info$ods_coupon_info$ods_coupon_use$ods_favor_info$ods_order_refund_info$ods_order_status_log$ods_spu_info$ods_activity_rule$ods_base_dic$ods_order_detail_activity$ods_order_detail_coupon$ods_refund_payment$ods_sku_attr_value$ods_sku_sale_attr_value"

};;

esac

(2) Make the script executable

[lyh@hadoop102 bin]$ chmod +x hdfs_to_ods_db.sh
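
The load statements for the partitioned tables in the script above differ only in the table name and the date. As a minimal sketch (not the original script), a template function can generate the same statements. It assumes APP=gmall, as the warehouse paths in this document suggest, and the function names build_load_sql and build_all_sql are hypothetical. The non-partitioned tables (ods_base_province, ods_base_region) have no partition clause and would need a separate template.

```shell
#!/bin/bash
# Assumption: the database/application name is gmall.
APP=gmall

# Build the load statement for one partitioned ODS table.
# $1: table name without the ods_ prefix (e.g. coupon_info)
# $2: partition date (e.g. 2020-06-15)
build_load_sql() {
    printf "load data inpath '/origin_data/%s/db/%s/%s' OVERWRITE into table %s.ods_%s partition(dt='%s');" \
        "$APP" "$1" "$2" "$APP" "$1" "$2"
}

# Print one load statement per line for a list of tables.
# $1: partition date; remaining arguments: table names
build_all_sql() {
    do_date=$1
    shift
    for t in "$@"; do
        build_load_sql "$t" "$do_date"
        printf '\n'
    done
}

# Demo: print the statements for three tables.
build_all_sql 2020-06-15 coupon_info coupon_use favor_info
```

On a machine with Hive installed, the generated text could be passed to hive -e in the same way the script passes its variables.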

2) Using the script

(1) Run the script

[lyh@hadoop102 bin]$ hdfs_to_ods_db.sh all 2020-06-14

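(2) Check that the data was imported successfully

II. Data Warehouse Build: DIM Layer

1. Product Dimension Table (full snapshot)

1. DDL statement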

DROP TABLE IF EXISTS dim_sku_info;

CREATE EXTERNAL TABLE dim_sku_info (

`id` STRING COMMENT 'Product (SKU) id',

`price` DECIMAL(16,2) COMMENT 'Product price',

`sku_name` STRING COMMENT 'Product name',

`sku_desc` STRING COMMENT 'Product description',

`weight` DECIMAL(16,2) COMMENT 'Weight',

`is_sale` BOOLEAN COMMENT 'Whether the product is on sale',

`spu_id` STRING COMMENT 'SPU id',

`spu_name` STRING COMMENT 'SPU name',

`category3_id` STRING COMMENT 'Level-3 category id',

`category3_name` STRING COMMENT 'Level-3 category name',

`category2_id` STRING COMMENT 'Level-2 category id',

`category2_name` STRING COMMENT 'Level-2 category name',

`category1_id` STRING COMMENT 'Level-1 category id',

`category1_name` STRING COMMENT 'Level-1 category name',

`tm_id` STRING COMMENT 'Brand id',

`tm_name` STRING COMMENT 'Brand name',

`sku_attr_values` ARRAY<STRUCT<attr_id:STRING,value_id:STRING,attr_name:STRING,value_name:STRING>> COMMENT 'Platform attributes',

`sku_sale_attr_values` ARRAY<STRUCT<sale_attr_id:STRING,sale_attr_value_id:STRING,sale_attr_name:STRING,sale_attr_value_name:STRING>> COMMENT 'Sales attributes',

`create_time` STRING COMMENT 'Creation time'

) COMMENT 'Product dimension table'

PARTITIONED BY (`dt` STRING)

STORED AS PARQUET

LOCATION '/warehouse/gmall/dim/dim_sku_info/'

TBLPROPERTIES ("parquet.compression"="lzo");

2. Partition planning

3. Data loading

1) Hive reading LZO index files

(1) Count the rows in ods_log in two different ways

hive (gmall)> select * from ods_log;

Time taken: 0.706 seconds, Fetched: 2955 row(s)

hive (gmall)> select count(*) from ods_log;

2959

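(2) The two queries return different row counts.

The reason is that select * from ods_log does not launch an MR job. It reads the data directly with the DeprecatedLzoTextInputFormat specified in the ods_log DDL, which recognizes lzo.index files as index files.

select count(*) from ods_log does launch an MR job and goes through hive.input.format first. Its default value is CombineHiveInputFormat, which merges the index files as if they were small files and treats them as ordinary data files, so their contents are counted as rows. Worse, this prevents the LZO files from being split.

hive (gmall)>

set hive.input.format;

hive.input.format=org.apache.hadoop.hive.ql.io.CombineHiveInputFormat

Solution: change CombineHiveInputFormat to HiveInputFormat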

hive (gmall)>

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;
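
The set command above only applies to the current session, so a script that runs queries through hive -e has to include it in each SQL string. The following is a minimal sketch of one way to do that; the helper name with_input_format is hypothetical and not part of the original scripts.

```shell
#!/bin/bash
# Setting taken from the text above: use HiveInputFormat so that
# .lzo.index files are not merged in as ordinary data files.
HIVE_INPUT_FORMAT_SET="set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;"

# Prepend the setting to a SQL string.
# $1: one or more HiveQL statements
with_input_format() {
    printf '%s\n%s\n' "$HIVE_INPUT_FORMAT_SET" "$1"
}

# Demo: build the row-count query for one partition of ods_log.
sql=$(with_input_format "select count(*) from gmall.ods_log where dt='2020-06-14';")
echo "$sql"
# On a machine with Hive installed this could then be run as: hive -e "$sql"
```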

2) First-day load

with

sku as

(

select

id,

price,

sku_name,

sku_desc,

weight,

is_sale,

spu_id,

category3_id,

tm_id,

create_time

from ods_sku_info

where dt='2020-06-14'

),

spu as

(

select

id,

spu_name

from ods_spu_info

where dt='2020-06-14'

),

c3 as

(

select

id,

name,

category2_id

from ods_base_category3

where dt='2020-06-14'

),

c2 as

(

select

id,

name,

category1_id

from ods_base_category2

where dt='2020-06-14'

),

c1 as

(

select

id,

name

from ods_base_category1

where dt='2020-06-14'

),

tm as

(

select

id,

tm_name

from ods_base_trademark

where dt='2020-06-14'

),

attr as

(

select

sku_id,

collect_set(named_struct('attr_id',attr_id,'value_id',value_id,'attr_name',attr_name,'value_name',value_name)) attrs

from ods_sku_attr_value

where dt='2020-06-14'

group by sku_id

),

sale_attr as

(

select

sku_id,

collect_set(named_struct('sale_attr_id',sale_attr_id,'sale_attr_value_id',sale_attr_value_id,'sale_attr_name',sale_attr_name,'sale_attr_value_name',sale_attr_value_name)) sale_attrs

from ods_sku_sale_attr_value

where dt='2020-06-14'

group by sku_id

)

insert overwrite table dim_sku_info partition(dt='2020-06-14')

select

sku.id,

sku.price,

sku.sku_name,

sku.sku_desc,

sku.weight,

sku.is_sale,

sku.spu_id,

spu.spu_name,

sku.category3_id,

c3.name,

c3.category2_id,

c2.name,

c2.category1_id,

c1.name,

sku.tm_id,

tm.tm_name,

attr.attrs,

sale_attr.sale_attrs,

sku.create_time

from sku

left join spu on sku.spu_id=spu.id

left join c3 on sku.category3_id=c3.id

left join c2 on c3.category2_id=c2.id

left join c1 on c2.category1_id=c1.id

left join tm on sku.tm_id=tm.id

left join attr on sku.id=attr.sku_id

left join sale_attr on sku.id=sale_attr.sku_id;

3) Daily load

with

sku as

(

select

id,

price,

sku_name,

sku_desc,

weight,

is_sale,

spu_id,

category3_id,

tm_id,

create_time

from ods_sku_info

where dt='2020-06-15'

),

spu as

(

select

id,

spu_name

from ods_spu_info

where dt='2020-06-15'

),

c3 as

(

select

id,

name,

category2_id

from ods_base_category3

where dt='2020-06-15'

),

c2 as

(

select

id,

name,

category1_id

from ods_base_category2

where dt='2020-06-15'

),

c1 as

(

select

id,

name

from ods_base_category1

where dt='2020-06-15'

),

tm as

(

select

id,

tm_name

from ods_base_trademark

where dt='2020-06-15'

),

attr as

(

select

sku_id,

collect_set(named_struct('attr_id',attr_id,'value_id',value_id,'attr_name',attr_name,'value_name',value_name)) attrs

from ods_sku_attr_value

where dt='2020-06-15'

group by sku_id

),

sale_attr as

(

select

sku_id,

collect_set(named_struct('sale_attr_id',sale_attr_id,'sale_attr_value_id',sale_attr_value_id,'sale_attr_name',sale_attr_name,'sale_attr_value_name',sale_attr_value_name)) sale_attrs

from ods_sku_sale_attr_value

where dt='2020-06-15'

group by sku_id

)

insert overwrite table dim_sku_info partition(dt='2020-06-15')

select

sku.id,

sku.price,

sku.sku_name,

sku.sku_desc,

sku.weight,

sku.is_sale,

sku.spu_id,

spu.spu_name,

sku.category3_id,

c3.name,

c3.category2_id,

c2.name,

c2.category1_id,

c1.name,

sku.tm_id,

tm.tm_name,

attr.attrs,

sale_attr.sale_attrs,

sku.create_time

from sku

left join spu on sku.spu_id=spu.id

left join c3 on sku.category3_id=c3.id

left join c2 on c3.category2_id=c2.id

left join c1 on c2.category1_id=c1.id

left join tm on sku.tm_id=tm.id

left join attr on sku.id=attr.sku_id

left join sale_attr on sku.id=sale_attr.sku_id;

2. Coupon Dimension Table (full snapshot)

1. DDL statement

DROP TABLE IF EXISTS dim_coupon_info;

CREATE EXTERNAL TABLE dim_coupon_info(

`id` STRING COMMENT 'Coupon id',

`coupon_name` STRING COMMENT 'Coupon name',

`coupon_type` STRING COMMENT 'Coupon type: 1 cash coupon, 2 discount coupon, 3 amount-threshold reduction coupon, 4 quantity-threshold discount coupon',

`condition_amount` DECIMAL(16,2) COMMENT 'Threshold amount',

`condition_num` BIGINT COMMENT 'Threshold item count',

`activity_id` STRING COMMENT 'Activity id',

`benefit_amount` DECIMAL(16,2) COMMENT 'Reduction amount',

`benefit_discount` DECIMAL(16,2) COMMENT 'Discount',

`create_time` STRING COMMENT 'Creation time',

`range_type` STRING COMMENT 'Range type: 1 product, 2 category, 3 brand',

`limit_num` BIGINT COMMENT 'Maximum number of claims',

`taken_count` BIGINT COMMENT 'Number already claimed',

`start_time` STRING COMMENT 'Start date for claiming',

`end_time` STRING COMMENT 'End date for claiming',

`operate_time` STRING COMMENT 'Modification time',

`expire_time` STRING COMMENT 'Expiration time'

) COMMENT 'Coupon dimension table'

PARTITIONED BY (`dt` STRING)

STORED AS PARQUET

LOCATION '/warehouse/gmall/dim/dim_coupon_info/'

TBLPROPERTIES ("parquet.compression"="lzo");

1) First-day load

insert overwrite table dim_coupon_info partition(dt='2020-06-14')

select

id,

coupon_name,

coupon_type,

condition_amount,

condition_num,

activity_id,

benefit_amount,

benefit_discount,

create_time,

range_type,

limit_num,

taken_count,

start_time,

end_time,

operate_time,

expire_time

from ods_coupon_info

where dt='2020-06-14';

2) Daily load

insert overwrite table dim_coupon_info partition(dt='2020-06-15')

select

id,

coupon_name,

coupon_type,

condition_amount,

condition_num,

activity_id,

benefit_amount,

benefit_discount,

create_time,

range_type,

limit_num,

taken_count,

start_time,

end_time,

operate_time,

expire_time

from ods_coupon_info

where dt='2020-06-15';
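
The first-day and daily statements for dim_coupon_info differ only in the date literal, so a loading script can take the date as a parameter. The sketch below illustrates this under stated assumptions: the function names resolve_do_date and coupon_load_sql are hypothetical, the default of "yesterday" is an assumption about how the job is scheduled, and date -d requires GNU date.

```shell
#!/bin/bash
# Use the date passed as the first argument, else default to yesterday.
# Assumption: GNU date is available (date -d).
resolve_do_date() {
    if [ -n "${1:-}" ]; then
        echo "$1"
    else
        date -d '-1 day' +%F
    fi
}

# Render the dim_coupon_info load statement for one partition date.
# $1: partition date, e.g. 2020-06-15
coupon_load_sql() {
    cat <<EOF
insert overwrite table dim_coupon_info partition(dt='$1')
select
    id, coupon_name, coupon_type, condition_amount, condition_num,
    activity_id, benefit_amount, benefit_discount, create_time,
    range_type, limit_num, taken_count, start_time, end_time,
    operate_time, expire_time
from ods_coupon_info
where dt='$1';
EOF
}

# Demo: print the statement for the requested (or default) date.
do_date=$(resolve_do_date "${1:-}")
coupon_load_sql "$do_date"
```

The same pattern applies to the other full-snapshot dimension tables, since their first-day and daily statements are also identical apart from the date.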

3. Activity Dimension Table (full snapshot)

1. DDL statement

DROP TABLE IF EXISTS dim_activity_rule_info;

CREATE EXTERNAL TABLE dim_activity_rule_info(

`activity_rule_id` STRING COMMENT 'Activity rule id',

`activity_id` STRING COMMENT 'Activity id',

`activity_name` STRING COMMENT 'Activity name',

`activity_type` STRING COMMENT 'Activity type',

`start_time` STRING COMMENT 'Start time',

`end_time` STRING COMMENT 'End time',

`create_time` STRING COMMENT 'Creation time',

`condition_amount` DECIMAL(16,2) COMMENT 'Threshold amount',

`condition_num` BIGINT COMMENT 'Threshold item count',

`benefit_amount` DECIMAL(16,2) COMMENT 'Benefit amount',

`benefit_discount` DECIMAL(16,2) COMMENT 'Benefit discount',

`benefit_level` STRING COMMENT 'Benefit level'

) COMMENT 'Activity information table'

PARTITIONED BY (`dt` STRING)

STORED AS PARQUET

LOCATION '/warehouse/gmall/dim/dim_activity_rule_info/'

TBLPROPERTIES ("parquet.compression"="lzo");

1) First-day load

insert overwrite table dim_activity_rule_info partition(dt='2020-06-14')

select

ar.id,

ar.activity_id,

ai.activity_name,

ar.activity_type,

ai.start_time,

ai.end_time,

ai.create_time,

ar.condition_amount,

ar.condition_num,

ar.benefit_amount,

ar.benefit_discount,

ar.benefit_level

from

(

select

id,

activity_id,

activity_type,

condition_amount,

condition_num,

benefit_amount,

benefit_discount,

benefit_level

from ods_activity_rule

where dt='2020-06-14'

)ar

left join

(

select

id,

activity_name,

start_time,

end_time,

create_time

from ods_activity_info

where dt='2020-06-14'

)ai

on ar.activity_id=ai.id;

2) Daily load

insert overwrite table dim_activity_rule_info partition(dt='2020-06-15')

select

ar.id,

ar.activity_id,

ai.activity_name,

ar.activity_type,

ai.start_time,

ai.end_time,

ai.create_time,

ar.condition_amount,

ar.condition_num,

ar.benefit_amount,

ar.benefit_discount,

ar.benefit_level

from

(

select

id,

activity_id,

activity_type,

condition_amount,

condition_num,

benefit_amount,

benefit_discount,

benefit_level

from ods_activity_rule

where dt='2020-06-15'

)ar

left join

(

select

id,

activity_name,

start_time,

end_time,

create_time

from ods_activity_info

where dt='2020-06-15'

)ai

on ar.activity_id=ai.id;

4. Region Dimension Table (special)

1. DDL statement

DROP TABLE IF EXISTS dim_base_province;

CREATE EXTERNAL TABLE dim_base_province (

`id` STRING COMMENT 'id',

`province_name` STRING COMMENT 'Province or municipality name',

`area_code` STRING COMMENT 'Area code',

`iso_code` STRING COMMENT 'ISO-3166 code, used for visualization',

`iso_3166_2` STRING COMMENT 'ISO-3166-2 code, used for visualization',

`region_id` STRING COMMENT 'Region id',

`region_name` STRING COMMENT 'Region name'

) COMMENT 'Region dimension table'

STORED AS PARQUET

LOCATION '/warehouse/gmall/dim/dim_base_province/'

TBLPROPERTIES ("parquet.compression"="lzo");

2. Data loading

insert overwrite table dim_base_province

select

bp.id,

bp.name,

bp.area_code,

bp.iso_code,

bp.iso_3166_2,

bp.region_id,

br.region_name

from ods_base_province bp

join ods_base_region br on bp.region_id = br.id;

5. Time Dimension Table (special)

1. DDL statement

DROP TABLE IF EXISTS dim_date_info;

CREATE EXTERNAL TABLE dim_date_info(

`date_id` STRING COMMENT 'Date',

`week_id` STRING COMMENT 'Week id',

`week_day` STRING COMMENT 'Day of the week',

`day` STRING COMMENT 'Day of the month',

`month` STRING COMMENT 'Month',

`quarter` STRING COMMENT 'Quarter',

`year` STRING COMMENT 'Year',

`is_workday` STRING COMMENT 'Whether the date is a workday',

`holiday_id` STRING COMMENT 'Holiday'

) COMMENT 'Time dimension table'

STORED AS PARQUET

LOCATION '/warehouse/gmall/dim/dim_date_info/'

TBLPROPERTIES ("parquet.compression"="lzo");

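2. Data loading

- The data in the time dimension table usually does not come from the business system; it is written manually. Because the data is fully predictable, it does not need to be imported daily and can generally be loaded one year at a time.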
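
Because every column except the holiday information follows from the calendar, a first draft of the data file can be generated rather than typed. The sketch below is one possible way to do this, not the method used to produce the file in this document. It assumes GNU date, the function name gen_date_rows is hypothetical, and it only applies a default rule (Saturday and Sunday are non-working days, holiday_id is \N). Public holidays and make-up workdays, such as 2020-01-01 and 2020-01-19 in the sample data, still have to be corrected by hand.

```shell
#!/bin/bash
# Print one tab-separated row per day of the given year, in the column order
# date_id, week_id, week_day, day, month, quarter, year, is_workday, holiday_id.
# Assumption: GNU date (needed for -d and the %-d style formats).
gen_date_rows() {
    year=$1
    jan1_wd=$(TZ=UTC date -d "$year-01-01" +%u)   # 1 = Monday ... 7 = Sunday
    d="$year-01-01"
    while [ "${d%%-*}" = "$year" ]; do
        # day of week, day of month, month, day of year (no zero padding)
        set -- $(TZ=UTC date -d "$d" '+%u %-d %-m %-j')
        wd=$1; dom=$2; mon=$3; doy=$4
        # Weeks start on Monday; the partial week containing Jan 1 is week 1
        week_id=$(( (doy + jan1_wd - 2) / 7 + 1 ))
        quarter=$(( (mon - 1) / 3 + 1 ))
        # Default rule only: Saturday and Sunday are non-working days
        if [ "$wd" -ge 6 ]; then is_workday=0; else is_workday=1; fi
        printf '%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\n' \
            "$d" "$week_id" "$wd" "$dom" "$mon" "$quarter" "$year" "$is_workday" '\N'
        d=$(TZ=UTC date -d "$d +1 day" +%F)
    done
}

# Demo: show the first week of generated rows for 2020.
gen_date_rows 2020 | sed -n '1,7p'
```

The output is tab-separated so that it matches the FIELDS TERMINATED BY '\t' clause of the temporary table.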

1) Create a temporary table

DROP TABLE IF EXISTS tmp_dim_date_info;

CREATE EXTERNAL TABLE tmp_dim_date_info (

`date_id` STRING COMMENT 'Date',

`week_id` STRING COMMENT 'Week id',

`week_day` STRING COMMENT 'Day of the week',

`day` STRING COMMENT 'Day of the month',

`month` STRING COMMENT 'Month',

`quarter` STRING COMMENT 'Quarter',

`year` STRING COMMENT 'Year',

`is_workday` STRING COMMENT 'Whether the date is a workday',

`holiday_id` STRING COMMENT 'Holiday'

) COMMENT 'Time dimension table'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LOCATION '/warehouse/gmall/tmp/tmp_dim_date_info/';

2) Upload the data file to the temporary table's path on HDFS: /warehouse/gmall/tmp/tmp_dim_date_info/

2020-01-01 1 3 1 1 1 2020 0 元旦

2020-01-02 1 4 2 1 1 2020 1 \N

2020-01-03 1 5 3 1 1 2020 1 \N

2020-01-04 1 6 4 1 1 2020 0 \N

2020-01-05 1 7 5 1 1 2020 0 \N

2020-01-06 2 1 6 1 1 2020 1 \N

2020-01-07 2 2 7 1 1 2020 1 \N

2020-01-08 2 3 8 1 1 2020 1 \N

2020-01-09 2 4 9 1 1 2020 1 \N

2020-01-10 2 5 10 1 1 2020 1 \N

2020-01-11 2 6 11 1 1 2020 0 \N

2020-01-12 2 7 12 1 1 2020 0 \N

2020-01-13 3 1 13 1 1 2020 1 \N

2020-01-14 3 2 14 1 1 2020 1 \N

2020-01-15 3 3 15 1 1 2020 1 \N

2020-01-16 3 4 16 1 1 2020 1 \N

2020-01-17 3 5 17 1 1 2020 1 \N

2020-01-18 3 6 18 1 1 2020 0 \N

2020-01-19 3 7 19 1 1 2020 1 \N

2020-01-20 4 1 20 1 1 2020 1 \N

2020-01-21 4 2 21 1 1 2020 1 \N

2020-01-22 4 3 22 1 1 2020 1 \N

2020-01-23 4 4 23 1 1 2020 1 \N

2020-01-24 4 5 24 1 1 2020 0 春节

2020-01-25 4 6 25 1 1 2020 0 春节

2020-01-26 4 7 26 1 1 2020 0 春节

2020-01-27 5 1 27 1 1 2020 0 春节

2020-01-28 5 2 28 1 1 2020 0 春节

2020-01-29 5 3 29 1 1 2020 0 春节

2020-01-30 5 4 30 1 1 2020 0 春节

2020-01-31 5 5 31 1 1 2020 0 春节

2020-02-01 5 6 1 2 1 2020 0 春节

2020-02-02 5 7 2 2 1 2020 0 春节

2020-02-03 6 1 3 2 1 2020 1 \N

2020-02-04 6 2 4 2 1 2020 1 \N

2020-02-05 6 3 5 2 1 2020 1 \N

2020-02-06 6 4 6 2 1 2020 1 \N

2020-02-07 6 5 7 2 1 2020 1 \N

2020-02-08 6 6 8 2 1 2020 0 \N

2020-02-09 6 7 9 2 1 2020 0 \N

2020-02-10 7 1 10 2 1 2020 1 \N

2020-02-11 7 2 11 2 1 2020 1 \N

2020-02-12 7 3 12 2 1 2020 1 \N

2020-02-13 7 4 13 2 1 2020 1 \N

2020-02-14 7 5 14 2 1 2020 1 \N

2020-02-15 7 6 15 2 1 2020 0 \N

2020-02-16 7 7 16 2 1 2020 0 \N

2020-02-17 8 1 17 2 1 2020 1 \N

2020-02-18 8 2 18 2 1 2020 1 \N

2020-02-19 8 3 19 2 1 2020 1 \N

2020-02-20 8 4 20 2 1 2020 1 \N

2020-02-21 8 5 21 2 1 2020 1 \N

2020-02-22 8 6 22 2 1 2020 0 \N

2020-02-23 8 7 23 2 1 2020 0 \N

2020-02-24 9 1 24 2 1 2020 1 \N

2020-02-25 9 2 25 2 1 2020 1 \N

2020-02-26 9 3 26 2 1 2020 1 \N

2020-02-27 9 4 27 2 1 2020 1 \N

2020-02-28 9 5 28 2 1 2020 1 \N

2020-02-29 9 6 29 2 1 2020 0 \N

2020-03-01 9 7 1 3 1 2020 0 \N

2020-03-02 10 1 2 3 1 2020 1 \N

2020-03-03 10 2 3 3 1 2020 1 \N

2020-03-04 10 3 4 3 1 2020 1 \N

2020-03-05 10 4 5 3 1 2020 1 \N

2020-03-06 10 5 6 3 1 2020 1 \N

2020-03-07 10 6 7 3 1 2020 0 \N

2020-03-08 10 7 8 3 1 2020 0 \N

2020-03-09 11 1 9 3 1 2020 1 \N

2020-03-10 11 2 10 3 1 2020 1 \N

2020-03-11 11 3 11 3 1 2020 1 \N

2020-03-12 11 4 12 3 1 2020 1 \N

2020-03-13 11 5 13 3 1 2020 1 \N

2020-03-14 11 6 14 3 1 2020 0 \N

2020-03-15 11 7 15 3 1 2020 0 \N

2020-03-16 12 1 16 3 1 2020 1 \N

2020-03-17 12 2 17 3 1 2020 1 \N

2020-03-18 12 3 18 3 1 2020 1 \N

2020-03-19 12 4 19 3 1 2020 1 \N

2020-03-20 12 5 20 3 1 2020 1 \N

2020-03-21 12 6 21 3 1 2020 0 \N

2020-03-22 12 7 22 3 1 2020 0 \N

2020-03-23 13 1 23 3 1 2020 1 \N

2020-03-24 13 2 24 3 1 2020 1 \N

2020-03-25 13 3 25 3 1 2020 1 \N

2020-03-26 13 4 26 3 1 2020 1 \N

2020-03-27 13 5 27 3 1 2020 1 \N

2020-03-28 13 6 28 3 1 2020 0 \N

2020-03-29 13 7 29 3 1 2020 0 \N

2020-03-30 14 1 30 3 1 2020 1 \N

2020-03-31 14 2 31 3 1 2020 1 \N

2020-04-01 14 3 1 4 2 2020 1 \N

2020-04-02 14 4 2 4 2 2020 1 \N

2020-04-03 14 5 3 4 2 2020 1 \N

2020-04-04 14 6 4 4 2 2020 0 清明节

2020-04-05 14 7 5 4 2 2020 0 清明节

2020-04-06 15 1 6 4 2 2020 0 清明节

2020-04-07 15 2 7 4 2 2020 1 \N

2020-04-08 15 3 8 4 2 2020 1 \N

2020-04-09 15 4 9 4 2 2020 1 \N

2020-04-10 15 5 10 4 2 2020 1 \N

2020-04-11 15 6 11 4 2 2020 0 \N

2020-04-12 15 7 12 4 2 2020 0 \N

2020-04-13 16 1 13 4 2 2020 1 \N

2020-04-14 16 2 14 4 2 2020 1 \N

2020-04-15 16 3 15 4 2 2020 1 \N

2020-04-16 16 4 16 4 2 2020 1 \N

2020-04-17 16 5 17 4 2 2020 1 \N

2020-04-18 16 6 18 4 2 2020 0 \N

2020-04-19 16 7 19 4 2 2020 0 \N

2020-04-20 17 1 20 4 2 2020 1 \N

2020-04-21 17 2 21 4 2 2020 1 \N

2020-04-22 17 3 22 4 2 2020 1 \N

2020-04-23 17 4 23 4 2 2020 1 \N

2020-04-24 17 5 24 4 2 2020 1 \N

2020-04-25 17 6 25 4 2 2020 0 \N

2020-04-26 17 7 26 4 2 2020 1 \N

2020-04-27 18 1 27 4 2 2020 1 \N

2020-04-28 18 2 28 4 2 2020 1 \N

2020-04-29 18 3 29 4 2 2020 1 \N

2020-04-30 18 4 30 4 2 2020 1 \N

2020-05-01 18 5 1 5 2 2020 0 劳动节

2020-05-02 18 6 2 5 2 2020 0 劳动节

2020-05-03 18 7 3 5 2 2020 0 劳动节

2020-05-04 19 1 4 5 2 2020 0 劳动节

2020-05-05 19 2 5 5 2 2020 0 劳动节

2020-05-06 19 3 6 5 2 2020 1 \N

2020-05-07 19 4 7 5 2 2020 1 \N

2020-05-08 19 5 8 5 2 2020 1 \N

2020-05-09 19 6 9 5 2 2020 1 \N

2020-05-10 19 7 10 5 2 2020 0 \N

2020-05-11 20 1 11 5 2 2020 1 \N

2020-05-12 20 2 12 5 2 2020 1 \N

2020-05-13 20 3 13 5 2 2020 1 \N

2020-05-14 20 4 14 5 2 2020 1 \N

2020-05-15 20 5 15 5 2 2020 1 \N

2020-05-16 20 6 16 5 2 2020 0 \N

2020-05-17 20 7 17 5 2 2020 0 \N

2020-05-18 21 1 18 5 2 2020 1 \N

2020-05-19 21 2 19 5 2 2020 1 \N

2020-05-20 21 3 20 5 2 2020 1 \N

2020-05-21 21 4 21 5 2 2020 1 \N

2020-05-22 21 5 22 5 2 2020 1 \N

2020-05-23 21 6 23 5 2 2020 0 \N

2020-05-24 21 7 24 5 2 2020 0 \N

2020-05-25 22 1 25 5 2 2020 1 \N

2020-05-26 22 2 26 5 2 2020 1 \N

2020-05-27 22 3 27 5 2 2020 1 \N

2020-05-28 22 4 28 5 2 2020 1 \N

2020-05-29 22 5 29 5 2 2020 1 \N

2020-05-30 22 6 30 5 2 2020 0 \N

2020-05-31 22 7 31 5 2 2020 0 \N

2020-06-01 23 1 1 6 2 2020 1 \N

2020-06-02 23 2 2 6 2 2020 1 \N

2020-06-03 23 3 3 6 2 2020 1 \N

2020-06-04 23 4 4 6 2 2020 1 \N

2020-06-05 23 5 5 6 2 2020 1 \N

2020-06-06 23 6 6 6 2 2020 0 \N

2020-06-07 23 7 7 6 2 2020 0 \N

2020-06-08 24 1 8 6 2 2020 1 \N

2020-06-09 24 2 9 6 2 2020 1 \N

2020-06-10 24 3 10 6 2 2020 1 \N

2020-06-11 24 4 11 6 2 2020 1 \N

2020-06-12 24 5 12 6 2 2020 1 \N

2020-06-13 24 6 13 6 2 2020 0 \N

2020-06-14 24 7 14 6 2 2020 0 \N

2020-06-15 25 1 15 6 2 2020 1 \N

2020-06-16 25 2 16 6 2 2020 1 \N

2020-06-17 25 3 17 6 2 2020 1 \N

2020-06-18 25 4 18 6 2 2020 1 \N

2020-06-19 25 5 19 6 2 2020 1 \N

2020-06-20 25 6 20 6 2 2020 0 \N

2020-06-21 25 7 21 6 2 2020 0 \N

2020-06-22 26 1 22 6 2 2020 1 \N

2020-06-23 26 2 23 6 2 2020 1 \N

2020-06-24 26 3 24 6 2 2020 1 \N

2020-06-25 26 4 25 6 2 2020 0 端午节

2020-06-26 26 5 26 6 2 2020 0 端午节

2020-06-27 26 6 27 6 2 2020 0 端午节

2020-06-28 26 7 28 6 2 2020 1 \N

2020-06-29 27 1 29 6 2 2020 1 \N

2020-06-30 27 2 30 6 2 2020 1 \N

2020-07-01 27 3 1 7 3 2020 1 \N

2020-07-02 27 4 2 7 3 2020 1 \N

2020-07-03 27 5 3 7 3 2020 1 \N

2020-07-04 27 6 4 7 3 2020 0 \N

2020-07-05 27 7 5 7 3 2020 0 \N

2020-07-06 28 1 6 7 3 2020 1 \N

2020-07-07 28 2 7 7 3 2020 1 \N

2020-07-08 28 3 8 7 3 2020 1 \N

2020-07-09 28 4 9 7 3 2020 1 \N

2020-07-10 28 5 10 7 3 2020 1 \N

2020-07-11 28 6 11 7 3 2020 0 \N

2020-07-12 28 7 12 7 3 2020 0 \N

2020-07-13 29 1 13 7 3 2020 1 \N

2020-07-14 29 2 14 7 3 2020 1 \N

2020-07-15 29 3 15 7 3 2020 1 \N

2020-07-16 29 4 16 7 3 2020 1 \N

2020-07-17 29 5 17 7 3 2020 1 \N

2020-07-18 29 6 18 7 3 2020 0 \N

2020-07-19 29 7 19 7 3 2020 0 \N

2020-07-20 30 1 20 7 3 2020 1 \N

2020-07-21 30 2 21 7 3 2020 1 \N

2020-07-22 30 3 22 7 3 2020 1 \N

2020-07-23 30 4 23 7 3 2020 1 \N

2020-07-24 30 5 24 7 3 2020 1 \N

2020-07-25 30 6 25 7 3 2020 0 \N

2020-07-26 30 7 26 7 3 2020 0 \N

2020-07-27 31 1 27 7 3 2020 1 \N

2020-07-28 31 2 28 7 3 2020 1 \N

2020-07-29 31 3 29 7 3 2020 1 \N

2020-07-30 31 4 30 7 3 2020 1 \N

2020-07-31 31 5 31 7 3 2020 1 \N

2020-08-01 31 6 1 8 3 2020 0 \N

2020-08-02 31 7 2 8 3 2020 0 \N

2020-08-03 32 1 3 8 3 2020 1 \N

2020-08-04 32 2 4 8 3 2020 1 \N

2020-08-05 32 3 5 8 3 2020 1 \N

2020-08-06 32 4 6 8 3 2020 1 \N

2020-08-07 32 5 7 8 3 2020 1 \N

2020-08-08 32 6 8 8 3 2020 0 \N

2020-08-09 32 7 9 8 3 2020 0 \N

2020-08-10 33 1 10 8 3 2020 1 \N

2020-08-11 33 2 11 8 3 2020 1 \N

2020-08-12 33 3 12 8 3 2020 1 \N

2020-08-13 33 4 13 8 3 2020 1 \N

2020-08-14 33 5 14 8 3 2020 1 \N

2020-08-15 33 6 15 8 3 2020 0 \N

2020-08-16 33 7 16 8 3 2020 0 \N

2020-08-17 34 1 17 8 3 2020 1 \N

2020-08-18 34 2 18 8 3 2020 1 \N

2020-08-19 34 3 19 8 3 2020 1 \N

2020-08-20 34 4 20 8 3 2020 1 \N

2020-08-21 34 5 21 8 3 2020 1 \N

2020-08-22 34 6 22 8 3 2020 0 \N

2020-08-23 34 7 23 8 3 2020 0 \N

2020-08-24 35 1 24 8 3 2020 1 \N

2020-08-25 35 2 25 8 3 2020 1 \N

2020-08-26 35 3 26 8 3 2020 1 \N

2020-08-27 35 4 27 8 3 2020 1 \N

2020-08-28 35 5 28 8 3 2020 1 \N

2020-08-29 35 6 29 8 3 2020 0 \N

2020-08-30 35 7 30 8 3 2020 0 \N

2020-08-31 36 1 31 8 3 2020 1 \N

2020-09-01 36 2 1 9 3 2020 1 \N

2020-09-02 36 3 2 9 3 2020 1 \N

2020-09-03 36 4 3 9 3 2020 1 \N

2020-09-04 36 5 4 9 3 2020 1 \N

2020-09-05 36 6 5 9 3 2020 0 \N

2020-09-06 36 7 6 9 3 2020 0 \N

2020-09-07 37 1 7 9 3 2020 1 \N

2020-09-08 37 2 8 9 3 2020 1 \N

2020-09-09 37 3 9 9 3 2020 1 \N

2020-09-10 37 4 10 9 3 2020 1 \N

2020-09-11 37 5 11 9 3 2020 1 \N

2020-09-12 37 6 12 9 3 2020 0 \N

2020-09-13 37 7 13 9 3 2020 0 \N

2020-09-14 38 1 14 9 3 2020 1 \N

2020-09-15 38 2 15 9 3 2020 1 \N

2020-09-16 38 3 16 9 3 2020 1 \N

2020-09-17 38 4 17 9 3 2020 1 \N

2020-09-18 38 5 18 9 3 2020 1 \N

2020-09-19 38 6 19 9 3 2020 0 \N

2020-09-20 38 7 20 9 3 2020 0 \N

2020-09-21 39 1 21 9 3 2020 1 \N

2020-09-22 39 2 22 9 3 2020 1 \N

2020-09-23 39 3 23 9 3 2020 1 \N

2020-09-24 39 4 24 9 3 2020 1 \N

2020-09-25 39 5 25 9 3 2020 1 \N

2020-09-26 39 6 26 9 3 2020 0 \N

2020-09-27 39 7 27 9 3 2020 1 \N

2020-09-28 40 1 28 9 3 2020 1 \N

2020-09-29 40 2 29 9 3 2020 1 \N

2020-09-30 40 3 30 9 3 2020 1 \N

2020-10-01 40 4 1 10 4 2020 0 国庆节、中秋节

2020-10-02 40 5 2 10 4 2020 0 国庆节、中秋节

2020-10-03 40 6 3 10 4 2020 0 国庆节、中秋节

2020-10-04 40 7 4 10 4 2020 0 国庆节、中秋节

2020-10-05 41 1 5 10 4 2020 0 国庆节、中秋节

2020-10-06 41 2 6 10 4 2020 0 国庆节、中秋节

2020-10-07 41 3 7 10 4 2020 0 国庆节、中秋节

2020-10-08 41 4 8 10 4 2020 0 国庆节、中秋节

2020-10-09 41 5 9 10 4 2020 1 \N

2020-10-10 41 6 10 10 4 2020 1 \N

2020-10-11 41 7 11 10 4 2020 0 \N

2020-10-12 42 1 12 10 4 2020 1 \N

2020-10-13 42 2 13 10 4 2020 1 \N

2020-10-14 42 3 14 10 4 2020 1 \N

2020-10-15 42 4 15 10 4 2020 1 \N

2020-10-16 42 5 16 10 4 2020 1 \N

2020-10-17 42 6 17 10 4 2020 0 \N

2020-10-18 42 7 18 10 4 2020 0 \N

2020-10-19 43 1 19 10 4 2020 1 \N

2020-10-20 43 2 20 10 4 2020 1 \N

2020-10-21 43 3 21 10 4 2020 1 \N

2020-10-22 43 4 22 10 4 2020 1 \N

2020-10-23 43 5 23 10 4 2020 1 \N

2020-10-24 43 6 24 10 4 2020 0 \N

2020-10-25 43 7 25 10 4 2020 0 \N

2020-10-26 44 1 26 10 4 2020 1 \N

2020-10-27 44 2 27 10 4 2020 1 \N

2020-10-28 44 3 28 10 4 2020 1 \N

2020-10-29 44 4 29 10 4 2020 1 \N

2020-10-30 44 5 30 10 4 2020 1 \N

2020-10-31 44 6 31 10 4 2020 0 \N

2020-11-01 44 7 1 11 4 2020 0 \N

2020-11-02 45 1 2 11 4 2020 1 \N

2020-11-03 45 2 3 11 4 2020 1 \N

2020-11-04 45 3 4 11 4 2020 1 \N

2020-11-05 45 4 5 11 4 2020 1 \N

2020-11-06 45 5 6 11 4 2020 1 \N

2020-11-07 45 6 7 11 4 2020 0 \N

2020-11-08 45 7 8 11 4 2020 0 \N

2020-11-09 46 1 9 11 4 2020 1 \N

2020-11-10 46 2 10 11 4 2020 1 \N

2020-11-11 46 3 11 11 4 2020 1 \N

2020-11-12 46 4 12 11 4 2020 1 \N

2020-11-13 46 5 13 11 4 2020 1 \N

2020-11-14 46 6 14 11 4 2020 0 \N

2020-11-15 46 7 15 11 4 2020 0 \N

2020-11-16 47 1 16 11 4 2020 1 \N

2020-11-17 47 2 17 11 4 2020 1 \N

2020-11-18 47 3 18 11 4 2020 1 \N

2020-11-19 47 4 19 11 4 2020 1 \N

2020-11-20 47 5 20 11 4 2020 1 \N

2020-11-21 47 6 21 11 4 2020 0 \N

2020-11-22 47 7 22 11 4 2020 0 \N

2020-11-23 48 1 23 11 4 2020 1 \N

2020-11-24 48 2 24 11 4 2020 1 \N

2020-11-25 48 3 25 11 4 2020 1 \N

2020-11-26 48 4 26 11 4 2020 1 \N

2020-11-27 48 5 27 11 4 2020 1 \N

2020-11-28 48 6 28 11 4 2020 0 \N

2020-11-29 48 7 29 11 4 2020 0 \N

2020-11-30 49 1 30 11 4 2020 1 \N

2020-12-01 49 2 1 12 4 2020 1 \N

2020-12-02 49 3 2 12 4 2020 1 \N

2020-12-03 49 4 3 12 4 2020 1 \N

2020-12-04 49 5 4 12 4 2020 1 \N

2020-12-05 49 6 5 12 4 2020 0 \N

2020-12-06 49 7 6 12 4 2020 0 \N

2020-12-07 50 1 7 12 4 2020 1 \N

2020-12-08 50 2 8 12 4 2020 1 \N

2020-12-09 50 3 9 12 4 2020 1 \N

2020-12-10 50 4 10 12 4 2020 1 \N

2020-12-11 50 5 11 12 4 2020 1 \N

2020-12-12 50 6 12 12 4 2020 0 \N

2020-12-13 50 7 13 12 4 2020 0 \N

2020-12-14 51 1 14 12 4 2020 1 \N

2020-12-15 51 2 15 12 4 2020 1 \N

2020-12-16 51 3 16 12 4 2020 1 \N

2020-12-17 51 4 17 12 4 2020 1 \N

2020-12-18 51 5 18 12 4 2020 1 \N

2020-12-19 51 6 19 12 4 2020 0 \N

2020-12-20 51 7 20 12 4 2020 0 \N

2020-12-21 52 1 21 12 4 2020 1 \N

2020-12-22 52 2 22 12 4 2020 1 \N

2020-12-23 52 3 23 12 4 2020 1 \N

2020-12-24 52 4 24 12 4 2020 1 \N

2020-12-25 52 5 25 12 4 2020 1 \N

2020-12-26 52 6 26 12 4 2020 0 \N

2020-12-27 52 7 27 12 4 2020 0 \N

2020-12-28 53 1 28 12 4 2020 1 \N

2020-12-29 53 2 29 12 4 2020 1 \N

2020-12-30 53 3 30 12 4 2020 1 \N

2020-12-31 53 4 31 12 4 2020 1 \N

2021-01-01 1 5 1 1 1 2021 0 元旦

2021-01-02 1 6 2 1 1 2021 0 元旦

2021-01-03 1 7 3 1 1 2021 0 元旦

2021-01-04 2 1 4 1 1 2021 1 \N

2021-01-05 2 2 5 1 1 2021 1 \N

2021-01-06 2 3 6 1 1 2021 1 \N

2021-01-07 2 4 7 1 1 2021 1 \N

2021-01-08 2 5 8 1 1 2021 1 \N

2021-01-09 2 6 9 1 1 2021 0 \N

2021-01-10 2 7 10 1 1 2021 0 \N

2021-01-11 3 1 11 1 1 2021 1 \N

2021-01-12 3 2 12 1 1 2021 1 \N

2021-01-13 3 3 13 1 1 2021 1 \N

2021-01-14 3 4 14 1 1 2021 1 \N

2021-01-15 3 5 15 1 1 2021 1 \N

2021-01-16 3 6 16 1 1 2021 0 \N

2021-01-17 3 7 17 1 1 2021 0 \N

2021-01-18 4 1 18 1 1 2021 1 \N

2021-01-19 4 2 19 1 1 2021 1 \N

2021-01-20 4 3 20 1 1 2021 1 \N

2021-01-21 4 4 21 1 1 2021 1 \N

2021-01-22 4 5 22 1 1 2021 1 \N

2021-01-23 4 6 23 1 1 2021 0 \N

2021-01-24 4 7 24 1 1 2021 0 \N

2021-01-25 5 1 25 1 1 2021 1 \N

2021-01-26 5 2 26 1 1 2021 1 \N

2021-01-27 5 3 27 1 1 2021 1 \N

2021-01-28 5 4 28 1 1 2021 1 \N

2021-01-29 5 5 29 1 1 2021 1 \N

2021-01-30 5 6 30 1 1 2021 0 \N

2021-01-31 5 7 31 1 1 2021 0 \N

2021-02-01 6 1 1 2 1 2021 1 \N

2021-02-02 6 2 2 2 1 2021 1 \N

2021-02-03 6 3 3 2 1 2021 1 \N

2021-02-04 6 4 4 2 1 2021 1 \N

2021-02-05 6 5 5 2 1 2021 1 \N

2021-02-06 6 6 6 2 1 2021 0 \N

2021-02-07 6 7 7 2 1 2021 1 \N

2021-02-08 7 1 8 2 1 2021 1 \N

2021-02-09 7 2 9 2 1 2021 1 \N

2021-02-10 7 3 10 2 1 2021 1 \N

2021-02-11 7 4 11 2 1 2021 0 春节

2021-02-12 7 5 12 2 1 2021 0 春节

2021-02-13 7 6 13 2 1 2021 0 春节

2021-02-14 7 7 14 2 1 2021 0 春节

2021-02-15 8 1 15 2 1 2021 0 春节

2021-02-16 8 2 16 2 1 2021 0 春节

2021-02-17 8 3 17 2 1 2021 0 春节

2021-02-18 8 4 18 2 1 2021 1 \N

2021-02-19 8 5 19 2 1 2021 1 \N

2021-02-20 8 6 20 2 1 2021 1 \N

2021-02-21 8 7 21 2 1 2021 0 \N

2021-02-22 9 1 22 2 1 2021 1 \N

2021-02-23 9 2 23 2 1 2021 1 \N

2021-02-24 9 3 24 2 1 2021 1 \N

2021-02-25 9 4 25 2 1 2021 1 \N

2021-02-26 9 5 26 2 1 2021 1 \N

2021-02-27 9 6 27 2 1 2021 0 \N

2021-02-28 9 7 28 2 1 2021 0 \N

2021-03-01 10 1 1 3 1 2021 1 \N

2021-03-02 10 2 2 3 1 2021 1 \N

2021-03-03 10 3 3 3 1 2021 1 \N

2021-03-04 10 4 4 3 1 2021 1 \N

2021-03-05 10 5 5 3 1 2021 1 \N

2021-03-06 10 6 6 3 1 2021 0 \N

2021-03-07 10 7 7 3 1 2021 0 \N

2021-03-08 11 1 8 3 1 2021 1 \N

2021-03-09 11 2 9 3 1 2021 1 \N

2021-03-10 11 3 10 3 1 2021 1 \N

2021-03-11 11 4 11 3 1 2021 1 \N

2021-03-12 11 5 12 3 1 2021 1 \N

2021-03-13 11 6 13 3 1 2021 0 \N

2021-03-14 11 7 14 3 1 2021 0 \N

2021-03-15 12 1 15 3 1 2021 1 \N

2021-03-16 12 2 16 3 1 2021 1 \N

2021-03-17 12 3 17 3 1 2021 1 \N

2021-03-18 12 4 18 3 1 2021 1 \N

2021-03-19 12 5 19 3 1 2021 1 \N

2021-03-20 12 6 20 3 1 2021 0 \N

2021-03-21 12 7 21 3 1 2021 0 \N

2021-03-22 13 1 22 3 1 2021 1 \N

2021-03-23 13 2 23 3 1 2021 1 \N

2021-03-24 13 3 24 3 1 2021 1 \N

2021-03-25 13 4 25 3 1 2021 1 \N

2021-03-26 13 5 26 3 1 2021 1 \N

2021-03-27 13 6 27 3 1 2021 0 \N

2021-03-28 13 7 28 3 1 2021 0 \N

2021-03-29 14 1 29 3 1 2021 1 \N

2021-03-30 14 2 30 3 1 2021 1 \N

2021-03-31 14 3 31 3 1 2021 1 \N

2021-04-01 14 4 1 4 2 2021 1 \N

2021-04-02 14 5 2 4 2 2021 1 \N

2021-04-03 14 6 3 4 2 2021 0 清明节

2021-04-04 14 7 4 4 2 2021 0 清明节

2021-04-05 15 1 5 4 2 2021 0 清明节

2021-04-06 15 2 6 4 2 2021 1 \N

2021-04-07 15 3 7 4 2 2021 1 \N

2021-04-08 15 4 8 4 2 2021 1 \N

2021-04-09 15 5 9 4 2 2021 1 \N

2021-04-10 15 6 10 4 2 2021 0 \N

2021-04-11 15 7 11 4 2 2021 0 \N

2021-04-12 16 1 12 4 2 2021 1 \N

2021-04-13 16 2 13 4 2 2021 1 \N

2021-04-14 16 3 14 4 2 2021 1 \N

2021-04-15 16 4 15 4 2 2021 1 \N

2021-04-16 16 5 16 4 2 2021 1 \N

2021-04-17 16 6 17 4 2 2021 0 \N

2021-04-18 16 7 18 4 2 2021 0 \N

2021-04-19 17 1 19 4 2 2021 1 \N

2021-04-20 17 2 20 4 2 2021 1 \N

2021-04-21 17 3 21 4 2 2021 1 \N

2021-04-22 17 4 22 4 2 2021 1 \N

2021-04-23 17 5 23 4 2 2021 1 \N

2021-04-24 17 6 24 4 2 2021 0 \N

2021-04-25 17 7 25 4 2 2021 1 \N

2021-04-26 18 1 26 4 2 2021 1 \N

2021-04-27 18 2 27 4 2 2021 1 \N

2021-04-28 18 3 28 4 2 2021 1 \N

2021-04-29 18 4 29 4 2 2021 1 \N

2021-04-30 18 5 30 4 2 2021 1 \N

2021-05-01 18 6 1 5 2 2021 0 劳动节

2021-05-02 18 7 2 5 2 2021 0 劳动节

2021-05-03 19 1 3 5 2 2021 0 劳动节

2021-05-04 19 2 4 5 2 2021 0 劳动节

2021-05-05 19 3 5 5 2 2021 0 劳动节

2021-05-06 19 4 6 5 2 2021 1 \N

2021-05-07 19 5 7 5 2 2021 1 \N

2021-05-08 19 6 8 5 2 2021 1 \N

2021-05-09 19 7 9 5 2 2021 0 \N

2021-05-10 20 1 10 5 2 2021 1 \N

2021-05-11 20 2 11 5 2 2021 1 \N

2021-05-12 20 3 12 5 2 2021 1 \N

2021-05-13 20 4 13 5 2 2021 1 \N

2021-05-14 20 5 14 5 2 2021 1 \N

2021-05-15 20 6 15 5 2 2021 0 \N

2021-05-16 20 7 16 5 2 2021 0 \N

2021-05-17 21 1 17 5 2 2021 1 \N

2021-05-18 21 2 18 5 2 2021 1 \N

2021-05-19 21 3 19 5 2 2021 1 \N

2021-05-20 21 4 20 5 2 2021 1 \N

2021-05-21 21 5 21 5 2 2021 1 \N

2021-05-22 21 6 22 5 2 2021 0 \N

2021-05-23 21 7 23 5 2 2021 0 \N

2021-05-24 22 1 24 5 2 2021 1 \N

2021-05-25 22 2 25 5 2 2021 1 \N

2021-05-26 22 3 26 5 2 2021 1 \N

2021-05-27 22 4 27 5 2 2021 1 \N

2021-05-28 22 5 28 5 2 2021 1 \N

2021-05-29 22 6 29 5 2 2021 0 \N

2021-05-30 22 7 30 5 2 2021 0 \N

2021-05-31 23 1 31 5 2 2021 1 \N

2021-06-01 23 2 1 6 2 2021 1 \N

2021-06-02 23 3 2 6 2 2021 1 \N

2021-06-03 23 4 3 6 2 2021 1 \N

2021-06-04 23 5 4 6 2 2021 1 \N

2021-06-05 23 6 5 6 2 2021 0 \N

2021-06-06 23 7 6 6 2 2021 0 \N

2021-06-07 24 1 7 6 2 2021 1 \N

2021-06-08 24 2 8 6 2 2021 1 \N

2021-06-09 24 3 9 6 2 2021 1 \N

2021-06-10 24 4 10 6 2 2021 1 \N

2021-06-11 24 5 11 6 2 2021 1 \N

2021-06-12 24 6 12 6 2 2021 0 端午节

2021-06-13 24 7 13 6 2 2021 0 端午节

2021-06-14 25 1 14 6 2 2021 0 端午节

2021-06-15 25 2 15 6 2 2021 1 \N

2021-06-16 25 3 16 6 2 2021 1 \N

2021-06-17 25 4 17 6 2 2021 1 \N

2021-06-18 25 5 18 6 2 2021 1 \N

2021-06-19 25 6 19 6 2 2021 0 \N

2021-06-20 25 7 20 6 2 2021 0 \N

2021-06-21 26 1 21 6 2 2021 1 \N

2021-06-22 26 2 22 6 2 2021 1 \N

2021-06-23 26 3 23 6 2 2021 1 \N

2021-06-24 26 4 24 6 2 2021 1 \N

2021-06-25 26 5 25 6 2 2021 1 \N

2021-06-26 26 6 26 6 2 2021 0 \N

2021-06-27 26 7 27 6 2 2021 0 \N

2021-06-28 27 1 28 6 2 2021 1 \N

2021-06-29 27 2 29 6 2 2021 1 \N

2021-06-30 27 3 30 6 2 2021 1 \N

2021-07-01 27 4 1 7 3 2021 1 \N

2021-07-02 27 5 2 7 3 2021 1 \N

2021-07-03 27 6 3 7 3 2021 0 \N

2021-07-04 27 7 4 7 3 2021 0 \N

2021-07-05 28 1 5 7 3 2021 1 \N

2021-07-06 28 2 6 7 3 2021 1 \N

2021-07-07 28 3 7 7 3 2021 1 \N

2021-07-08 28 4 8 7 3 2021 1 \N

2021-07-09 28 5 9 7 3 2021 1 \N

2021-07-10 28 6 10 7 3 2021 0 \N

2021-07-11 28 7 11 7 3 2021 0 \N

2021-07-12 29 1 12 7 3 2021 1 \N

2021-07-13 29 2 13 7 3 2021 1 \N

2021-07-14 29 3 14 7 3 2021 1 \N

2021-07-15 29 4 15 7 3 2021 1 \N

2021-07-16 29 5 16 7 3 2021 1 \N

2021-07-17 29 6 17 7 3 2021 0 \N

2021-07-18 29 7 18 7 3 2021 0 \N

2021-07-19 30 1 19 7 3 2021 1 \N

2021-07-20 30 2 20 7 3 2021 1 \N

2021-07-21 30 3 21 7 3 2021 1 \N

2021-07-22 30 4 22 7 3 2021 1 \N

2021-07-23 30 5 23 7 3 2021 1 \N

2021-07-24 30 6 24 7 3 2021 0 \N

2021-07-25 30 7 25 7 3 2021 0 \N

2021-07-26 31 1 26 7 3 2021 1 \N

2021-07-27 31 2 27 7 3 2021 1 \N

2021-07-28 31 3 28 7 3 2021 1 \N

2021-07-29 31 4 29 7 3 2021 1 \N

2021-07-30 31 5 30 7 3 2021 1 \N

2021-07-31 31 6 31 7 3 2021 0 \N

2021-08-01 31 7 1 8 3 2021 0 \N

2021-08-02 32 1 2 8 3 2021 1 \N

2021-08-03 32 2 3 8 3 2021 1 \N

2021-08-04 32 3 4 8 3 2021 1 \N

2021-08-05 32 4 5 8 3 2021 1 \N

2021-08-06 32 5 6 8 3 2021 1 \N

2021-08-07 32 6 7 8 3 2021 0 \N

2021-08-08 32 7 8 8 3 2021 0 \N

2021-08-09 33 1 9 8 3 2021 1 \N

2021-08-10 33 2 10 8 3 2021 1 \N

2021-08-11 33 3 11 8 3 2021 1 \N

2021-08-12 33 4 12 8 3 2021 1 \N

2021-08-13 33 5 13 8 3 2021 1 \N

2021-08-14 33 6 14 8 3 2021 0 \N

2021-08-15 33 7 15 8 3 2021 0 \N

2021-08-16 34 1 16 8 3 2021 1 \N

2021-08-17 34 2 17 8 3 2021 1 \N

2021-08-18 34 3 18 8 3 2021 1 \N

2021-08-19 34 4 19 8 3 2021 1 \N

2021-08-20 34 5 20 8 3 2021 1 \N

2021-08-21 34 6 21 8 3 2021 0 \N

2021-08-22 34 7 22 8 3 2021 0 \N

2021-08-23 35 1 23 8 3 2021 1 \N

2021-08-24 35 2 24 8 3 2021 1 \N

2021-08-25 35 3 25 8 3 2021 1 \N

2021-08-26 35 4 26 8 3 2021 1 \N

2021-08-27 35 5 27 8 3 2021 1 \N

2021-08-28 35 6 28 8 3 2021 0 \N

2021-08-29 35 7 29 8 3 2021 0 \N

2021-08-30 36 1 30 8 3 2021 1 \N

2021-08-31 36 2 31 8 3 2021 1 \N

2021-09-01 36 3 1 9 3 2021 1 \N

2021-09-02 36 4 2 9 3 2021 1 \N

2021-09-03 36 5 3 9 3 2021 1 \N

2021-09-04 36 6 4 9 3 2021 0 \N

2021-09-05 36 7 5 9 3 2021 0 \N

2021-09-06 37 1 6 9 3 2021 1 \N

2021-09-07 37 2 7 9 3 2021 1 \N

2021-09-08 37 3 8 9 3 2021 1 \N

2021-09-09 37 4 9 9 3 2021 1 \N

2021-09-10 37 5 10 9 3 2021 1 \N

2021-09-11 37 6 11 9 3 2021 0 \N

2021-09-12 37 7 12 9 3 2021 0 \N

2021-09-13 38 1 13 9 3 2021 1 \N

2021-09-14 38 2 14 9 3 2021 1 \N

2021-09-15 38 3 15 9 3 2021 1 \N

2021-09-16 38 4 16 9 3 2021 1 \N

2021-09-17 38 5 17 9 3 2021 1 \N

2021-09-18 38 6 18 9 3 2021 1 \N

2021-09-19 38 7 19 9 3 2021 0 中秋节

2021-09-20 39 1 20 9 3 2021 0 中秋节

2021-09-21 39 2 21 9 3 2021 0 中秋节

2021-09-22 39 3 22 9 3 2021 1 \N

2021-09-23 39 4 23 9 3 2021 1 \N

2021-09-24 39 5 24 9 3 2021 1 \N

2021-09-25 39 6 25 9 3 2021 0 \N

2021-09-26 39 7 26 9 3 2021 1 \N

2021-09-27 40 1 27 9 3 2021 1 \N

2021-09-28 40 2 28 9 3 2021 1 \N

2021-09-29 40 3 29 9 3 2021 1 \N

2021-09-30 40 4 30 9 3 2021 1 \N

2021-10-01 40 5 1 10 4 2021 0 国庆节

2021-10-02 40 6 2 10 4 2021 0 国庆节

2021-10-03 40 7 3 10 4 2021 0 国庆节

2021-10-04 41 1 4 10 4 2021 0 国庆节

2021-10-05 41 2 5 10 4 2021 0 国庆节

2021-10-06 41 3 6 10 4 2021 0 国庆节

2021-10-07 41 4 7 10 4 2021 0 国庆节

2021-10-08 41 5 8 10 4 2021 1 \N

2021-10-09 41 6 9 10 4 2021 1 \N

2021-10-10 41 7 10 10 4 2021 0 \N

2021-10-11 42 1 11 10 4 2021 1 \N

2021-10-12 42 2 12 10 4 2021 1 \N

2021-10-13 42 3 13 10 4 2021 1 \N

2021-10-14 42 4 14 10 4 2021 1 \N

2021-10-15 42 5 15 10 4 2021 1 \N

2021-10-16 42 6 16 10 4 2021 0 \N

2021-10-17 42 7 17 10 4 2021 0 \N

2021-10-18 43 1 18 10 4 2021 1 \N

2021-10-19 43 2 19 10 4 2021 1 \N

2021-10-20 43 3 20 10 4 2021 1 \N

2021-10-21 43 4 21 10 4 2021 1 \N

2021-10-22 43 5 22 10 4 2021 1 \N

2021-10-23 43 6 23 10 4 2021 0 \N

2021-10-24 43 7 24 10 4 2021 0 \N

2021-10-25 44 1 25 10 4 2021 1 \N

2021-10-26 44 2 26 10 4 2021 1 \N

2021-10-27 44 3 27 10 4 2021 1 \N

2021-10-28 44 4 28 10 4 2021 1 \N

2021-10-29 44 5 29 10 4 2021 1 \N

2021-10-30 44 6 30 10 4 2021 0 \N

2021-10-31 44 7 31 10 4 2021 0 \N

2021-11-01 45 1 1 11 4 2021 1 \N

2021-11-02 45 2 2 11 4 2021 1 \N

2021-11-03 45 3 3 11 4 2021 1 \N

2021-11-04 45 4 4 11 4 2021 1 \N

2021-11-05 45 5 5 11 4 2021 1 \N

2021-11-06 45 6 6 11 4 2021 0 \N

2021-11-07 45 7 7 11 4 2021 0 \N

2021-11-08 46 1 8 11 4 2021 1 \N

2021-11-09 46 2 9 11 4 2021 1 \N

2021-11-10 46 3 10 11 4 2021 1 \N

2021-11-11 46 4 11 11 4 2021 1 \N

2021-11-12 46 5 12 11 4 2021 1 \N

2021-11-13 46 6 13 11 4 2021 0 \N

2021-11-14 46 7 14 11 4 2021 0 \N

2021-11-15 47 1 15 11 4 2021 1 \N

2021-11-16 47 2 16 11 4 2021 1 \N

2021-11-17 47 3 17 11 4 2021 1 \N

2021-11-18 47 4 18 11 4 2021 1 \N

2021-11-19 47 5 19 11 4 2021 1 \N

2021-11-20 47 6 20 11 4 2021 0 \N

2021-11-21 47 7 21 11 4 2021 0 \N