Hive 窗口函数详解

目录

-

-

- 1、hive窗口函数

- 2、在日常的开发中常用那些类型函数?

- 3、了解哪些窗口函数,都是什么意思?

-

- 窗口函数功能

- over语法

-

- 1、物理窗口(真实往上下移动多少行rows between):

- 2、 逻辑窗口(满足条件上下多少行):(金融行业、保险行业、p2p等)

- 4、窗口聚合函数

-

- count开窗函数

- sum开窗函数

- avg开窗函数

- min开窗函数

- max开窗函数

- 5、窗口分析函数

-

- first_value开窗函数

- last_value开窗函数

- lag开窗函数

- lead开窗函数

- cume_dist开窗函数

- 6、窗口排序函数

-

- rank开窗函数

- dense_rank开窗函数

- ntile开窗函数

- row_number开窗函数

- percent_rank开窗函数

-

1、hive窗口函数

窗口函数是什么鬼?

窗口函数指定了函数工作的数据窗口大小(当前行的上下多少行),这个数据窗口大小可能会随着行的变化而变化。

窗口函数和聚合函数区别?

窗口函数对于每个组返回多行,组内每一行对应返回一行值。

聚合函数对于每个组只返回一行。

2、在日常的开发中常用那些类型函数?

- 1、字符串操作函数?split、concat、ifnull、substr、substring、cast

- 2、聚合函数 : hive适用于分析,所以常用

- 3、时间函数 : 数仓的特征随时间变化而变化,所以时间也特别多

- 4、窗口函数:sum() over() 、 count() over() 、 排名函数

接下来,着重讲解hive的窗口函数。

3、了解哪些窗口函数,都是什么意思?

窗口函数功能

- sum(col) over() : 分组对col累计求和

- count(col) over() : 分组对col累计

- min(col) over() : 分组对col求最小值

- max(col) over() : 分组求col的最大值

- avg(col) over() : 分组求col列的平均值

- first_value(col) over() : 某分组排序后的第一个col值

- last_value(col) over() : 某分组排序后的最后一个col值

- lag(col,n,DEFAULT) : 统计往前n行的col值,n可选,默认为1,DEFAULT当往上第n行为NULL时候,取默认值,如不指定,则为NULL

- lead(col,n,DEFAULT) : 统计往后n行的col值,n可选,默认为1,DEFAULT当往下第n行为NULL时候,取默认值,如不指定,则为NULL

- ntile(n) : 用于将分组数据按照顺序切分成n片,返回当前切片值。注意:n必须为int类型

- row_number() over() : 排名函数,不会重复,适合于生成主键或者不并列排名

- rank() over() : 排名函数,有并列名次,名次不连续。如:1,1,3

- dense_rank() over() : 排名函数,有并列名次,名次连续。如:1,1,2

over语法

over(分组 排序 窗口) 中的order by后的语法:

(ROWS | RANGE) BETWEEN (UNBOUNDED | [num]) PRECEDING AND ([num] PRECEDING | CURRENT ROW | (UNBOUNDED | [num]) FOLLOWING)

(ROWS | RANGE) BETWEEN CURRENT ROW AND (CURRENT ROW | (UNBOUNDED | [num]) FOLLOWING)

(ROWS | RANGE) BETWEEN [num] FOLLOWING AND (UNBOUNDED | [num]) FOLLOWING

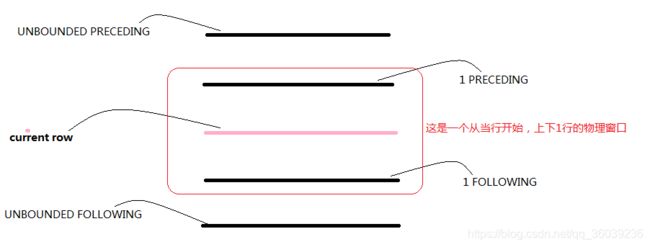

1、物理窗口(真实往上下移动多少行rows between):

CURRENT ROW | UNBOUNDED PRECEDING | [num] PRECEDING AND UNBOUNDED FOLLOWING | [num] FOLLOWING| CURRENT ROW

如: over(partition by col order by 排序字段 rows between 1 preceding and 1 fllowing)

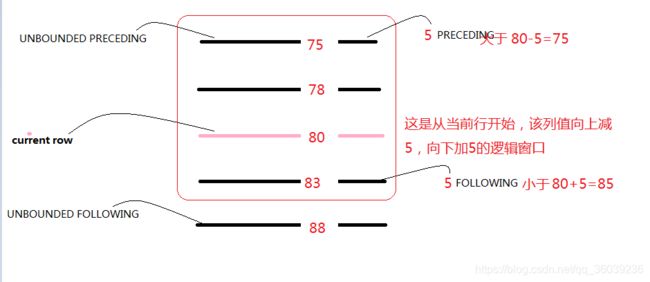

2、 逻辑窗口(满足条件上下多少行):(金融行业、保险行业、p2p等)

range between [num] PRECEDING AND [num] FOLLOWING

如: over(partition by col order by 排序字段 range between 5 preceding and 5 fllowing)

注意: 窗口函数一般不和group by搭配使用。

应用: 某天某产品的累计销售额。

物理窗口:

求多维度累计(累计退款金额、累计交易额、累计订单数量)

逻辑窗口:

范围累计环比情况、某值上下加减。

4、窗口聚合函数

创建测试表,存放当天每半小时的店铺销售数据

CREATE TABLE IF NOT EXISTS shop_data(

shop_id INT comment '店铺id',

stat_date STRING comment '时间',

ordamt DOUBLE comment '销售额'

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

LINES TERMINATED BY '\n'

STORED AS TEXTFILE;

插入数据:

insert into shop_data values

(10026,'201901230030',5170),

(10026,'201901230100',5669),

(10026,'201901230130',2396),

(10026,'201901230200',1498),

(10026,'201901230230',1997),

(10026,'201901230300',1188),

(10026,'201901230330',598),

(10026,'201901230400',479),

(10026,'201901230430',1587),

(10026,'201901230530',799),

(10027,'201901230030',2170),

(10027,'201901230100',1623),

(10027,'201901230130',3397),

(10027,'201901230200',1434),

(10027,'201901230230',1001),

(10028,'201901230300',1687),

(10028,'201901230330',1298),

(10028,'201901230400',149),

(10029,'201901230430',2587),

(10029,'201901230530',589);

count开窗函数

select shop_id,stat_date,ordamt,

-- 以符合条件的所有行作为窗口

count(shop_id) over() as count1,

-- 以按shop_id分组的所有行作为窗口

count(shop_id) over(partition by shop_id) as count2,

-- 以按shop_id分组、按stat_date排序的所有行作为窗口

count(shop_id) over(partition by shop_id order by stat_date) as count3,

-- 以按shop_id分组、按stat_date排序、按当前行+往前1行+往后2行的行作为窗口

count(ordamt) over(partition by shop_id order by stat_date rows between 1 preceding and 2 following) as count4,

-- 以按shop_id分组、按stat_date排序、按从起点到末尾,默认从起点到末尾和count2结果相同

count(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and unbounded following) as count5,

-- 以按shop_id分组、按stat_date排序、按从起点到当前行的前一行

count(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and 1 preceding) as count6,

-- 以按shop_id分组、按stat_date排序、按从起点到当前行

count(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and current row) as count7,

-- 以按shop_id分组、按stat_date排序、按从当前行到末尾

count(ordamt) over(partition by shop_id order by stat_date rows between current row and unbounded following) as count8,

-- 以按shop_id分组、按stat_date排序、按从当前行往后一行到末尾

count(ordamt) over(partition by shop_id order by stat_date rows between 1 following and unbounded following) as count9,

-- 以按shop_id分组、按stat_date排序、按从当前行往后一行到当前行往后2行

count(ordamt) over(partition by shop_id order by stat_date rows between 1 following and 2 following) as count10

from shop_data;

OK

shop_id stat_date ordamt count1 count2 count3 count4 count5 count6 count7 count8 count9 count10

10026 201901230030 5170.0 20 10 1 3 10 0 1 10 9 2

10026 201901230100 5669.0 20 10 2 4 10 1 2 9 8 2

10026 201901230130 2396.0 20 10 3 4 10 2 3 8 7 2

10026 201901230200 1498.0 20 10 4 4 10 3 4 7 6 2

10026 201901230230 1997.0 20 10 5 4 10 4 5 6 5 2

10026 201901230300 1188.0 20 10 6 4 10 5 6 5 4 2

10026 201901230330 598.0 20 10 7 4 10 6 7 4 3 2

10026 201901230400 479.0 20 10 8 4 10 7 8 3 2 2

10026 201901230430 1587.0 20 10 9 3 10 8 9 2 1 1

10026 201901230530 799.0 20 10 10 2 10 9 10 1 0 0

10027 201901230030 2170.0 20 5 1 3 5 0 1 5 4 2

10027 201901230100 1623.0 20 5 2 4 5 1 2 4 3 2

10027 201901230130 3397.0 20 5 3 4 5 2 3 3 2 2

10027 201901230200 1434.0 20 5 4 3 5 3 4 2 1 1

10027 201901230230 1001.0 20 5 5 2 5 4 5 1 0 0

10028 201901230300 1687.0 20 3 1 3 3 0 1 3 2 2

10028 201901230330 1298.0 20 3 2 3 3 1 2 2 1 1

10028 201901230400 149.0 20 3 3 2 3 2 3 1 0 0

10029 201901230430 2587.0 20 2 1 2 2 0 1 2 1 1

10029 201901230530 589.0 20 2 2 2 2 1 2 1 0 0

sum开窗函数

select

shop_id, stat_date, ordamt,

-- 以按shop_id分组、按stat_date排序、统计每个商品截止到当前时间的销售总额,默认从起点到当前行

sum(ordamt) over(partition by shop_id order by stat_date) as sum_amt1,

-- 以按shop_id分组、按stat_date排序、统计每个商品前半小时到后一小时的销售额(按当前行+往前1行+往后2行的行作为窗口)

sum(ordamt) over(partition by shop_id order by stat_date rows between 1 preceding and 2 following) as sum_amt2,

-- 以按shop_id分组、按stat_date排序、统计每个商品的销售总额(从起点到末尾)

sum(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and unbounded following) as sum_amt3,

-- 以按shop_id分组、按stat_date排序、统计截止到前半小时的销售总额(从起点到当前行的前一行)

sum(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and 1 preceding) as sum_amt4,

-- 以按shop_id分组、按stat_date排序、统计每个商品截止到当前时间的销售总额,默认从起点到当前行(从起点到当前行)

sum(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and current row) as sum_amt5,

-- 以按shop_id分组、按stat_date排序、统计当前时间及之后的销售总额(从当前行的末尾)

sum(ordamt) over(partition by shop_id order by stat_date rows between current row and unbounded following) as sum_amt6,

-- 以按shop_id分组、按stat_date排序、统计当前时间的后半小时及之后的销售额(当前行后一行到末尾)

sum(ordamt) over(partition by shop_id order by stat_date rows between 1 following and unbounded following) as sum_amt7,

-- 以按shop_id分组、按stat_date排序、统计当前时间后半小时到后一小时之间的销售额(按从当前行往后一行到当前行往后2行)

sum(ordamt) over(partition by shop_id order by stat_date rows between 1 following and 2 following) as sum_amt8

from shop_data;

OK

shop_id stat_date ordamt sum_amt1 sum_amt2 sum_amt3 sum_amt4 sum_amt5 sum_amt6 sum_amt7 sum_amt8

10026 201901230030 5170.0 5170.0 13235.0 21381.0 NULL 5170.0 21381.0 16211.0 8065.0

10026 201901230100 5669.0 10839.0 14733.0 21381.0 5170.0 10839.0 16211.0 10542.0 3894.0

10026 201901230130 2396.0 13235.0 11560.0 21381.0 10839.0 13235.0 10542.0 8146.0 3495.0

10026 201901230200 1498.0 14733.0 7079.0 21381.0 13235.0 14733.0 8146.0 6648.0 3185.0

10026 201901230230 1997.0 16730.0 5281.0 21381.0 14733.0 16730.0 6648.0 4651.0 1786.0

10026 201901230300 1188.0 17918.0 4262.0 21381.0 16730.0 17918.0 4651.0 3463.0 1077.0

10026 201901230330 598.0 18516.0 3852.0 21381.0 17918.0 18516.0 3463.0 2865.0 2066.0

10026 201901230400 479.0 18995.0 3463.0 21381.0 18516.0 18995.0 2865.0 2386.0 2386.0

10026 201901230430 1587.0 20582.0 2865.0 21381.0 18995.0 20582.0 2386.0 799.0 799.0

10026 201901230530 799.0 21381.0 2386.0 21381.0 20582.0 21381.0 799.0 NULL NULL

10027 201901230030 2170.0 2170.0 7190.0 9625.0 NULL 2170.0 9625.0 7455.0 5020.0

10027 201901230100 1623.0 3793.0 8624.0 9625.0 2170.0 3793.0 7455.0 5832.0 4831.0

10027 201901230130 3397.0 7190.0 7455.0 9625.0 3793.0 7190.0 5832.0 2435.0 2435.0

10027 201901230200 1434.0 8624.0 5832.0 9625.0 7190.0 8624.0 2435.0 1001.0 1001.0

10027 201901230230 1001.0 9625.0 2435.0 9625.0 8624.0 9625.0 1001.0 NULL NULL

10028 201901230300 1687.0 1687.0 3134.0 3134.0 NULL 1687.0 3134.0 1447.0 1447.0

10028 201901230330 1298.0 2985.0 3134.0 3134.0 1687.0 2985.0 1447.0 149.0 149.0

10028 201901230400 149.0 3134.0 1447.0 3134.0 2985.0 3134.0 149.0 NULL NULL

10029 201901230430 2587.0 2587.0 3176.0 3176.0 NULL 2587.0 3176.0 589.0 589.0

10029 201901230530 589.0 3176.0 3176.0 3176.0 2587.0 3176.0 589.0 NULL NULL

avg开窗函数

select

shop_id, stat_date, ordamt,

-- 以按shop_id分组、按stat_date排序、

round(avg(ordamt) over(partition by shop_id order by stat_date),2) as avg_amt1,

-- 以按shop_id分组、按stat_date排序、按当前行+往前1行+往后2行的行作为窗口的平均值

round(avg(ordamt) over(partition by shop_id order by stat_date rows between 1 preceding and 2 following), 2) as avg_amt2,

-- 以按shop_id分组、按stat_date排序、从起点到末尾的平均值

round(avg(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and unbounded following), 2) as avg_amt3,

-- 以按shop_id分组、按stat_date排序、从起点到当前行的前一行的平均值

round(avg(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and 1 preceding), 2) as avg_amt4,

-- 以按shop_id分组、按stat_date排序、从起点到当前行的平均值

round(avg(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and current row), 2) as avg_amt5,

-- 以按shop_id分组、按stat_date排序、从当前行的末尾的平均值

round(avg(ordamt) over(partition by shop_id order by stat_date rows between current row and unbounded following), 2) as avg_amt6,

-- 以按shop_id分组、按stat_date排序、当前行后一行到末尾的平均值

round(avg(ordamt) over(partition by shop_id order by stat_date rows between 1 following and unbounded following), 2) as avg_amt7,

-- 以按shop_id分组、按stat_date排序、按从当前行往后一行到当前行往后2行的平均值

round(avg(ordamt) over(partition by shop_id order by stat_date rows between 1 following and 2 following), 2) as avg_amt8

from shop_data;

OK

shop_id stat_date ordamt avg_amt1 avg_amt2 avg_amt3 avg_amt4 avg_amt5 avg_amt6 avg_amt7 avg_amt8

10026 201901230030 5170.0 5170.0 4411.67 2138.1 NULL 5170.0 2138.1 1801.22 4032.5

10026 201901230100 5669.0 5419.5 3683.25 2138.1 5170.0 5419.5 1801.22 1317.75 1947.0

10026 201901230130 2396.0 4411.67 2890.0 2138.1 5419.5 4411.67 1317.75 1163.71 1747.5

10026 201901230200 1498.0 3683.25 1769.75 2138.1 4411.67 3683.25 1163.71 1108.0 1592.5

10026 201901230230 1997.0 3346.0 1320.25 2138.1 3683.25 3346.0 1108.0 930.2 893.0

10026 201901230300 1188.0 2986.33 1065.5 2138.1 3346.0 2986.33 930.2 865.75 538.5

10026 201901230330 598.0 2645.14 963.0 2138.1 2986.33 2645.14 865.75 955.0 1033.0

10026 201901230400 479.0 2374.38 865.75 2138.1 2645.14 2374.38 955.0 1193.0 1193.0

10026 201901230430 1587.0 2286.89 955.0 2138.1 2374.38 2286.89 1193.0 799.0 799.0

10026 201901230530 799.0 2138.1 1193.0 2138.1 2286.89 2138.1 799.0 NULL NULL

10027 201901230030 2170.0 2170.0 2396.67 1925.0 NULL 2170.0 1925.0 1863.75 2510.0

10027 201901230100 1623.0 1896.5 2156.0 1925.0 2170.0 1896.5 1863.75 1944.0 2415.5

10027 201901230130 3397.0 2396.67 1863.75 1925.0 1896.5 2396.67 1944.0 1217.5 1217.5

10027 201901230200 1434.0 2156.0 1944.0 1925.0 2396.67 2156.0 1217.5 1001.0 1001.0

10027 201901230230 1001.0 1925.0 1217.5 1925.0 2156.0 1925.0 1001.0 NULL NULL

10028 201901230300 1687.0 1687.0 1044.67 1044.67 NULL 1687.0 1044.67 723.5 723.5

10028 201901230330 1298.0 1492.5 1044.67 1044.67 1687.0 1492.5 723.5 149.0 149.0

10028 201901230400 149.0 1044.67 723.5 1044.67 1492.5 1044.67 149.0 NULL NULL

10029 201901230430 2587.0 2587.0 1588.0 1588.0 NULL 2587.0 1588.0 589.0 589.0

10029 201901230530 589.0 1588.0 1588.0 1588.0 2587.0 1588.0 589.0 NULL NULL

min开窗函数

select

shop_id, stat_date, ordamt,

-- 以按shop_id分组、按stat_date排序、

min(ordamt) over(partition by shop_id order by stat_date) as min_amt1,

-- 以按shop_id分组、按stat_date排序、按当前行+往前1行+往后2行的行作为窗口的最小数

min(ordamt) over(partition by shop_id order by stat_date rows between 1 preceding and 2 following) as min_amt2,

-- 以按shop_id分组、按stat_date排序、从起点到末尾的最小数

min(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and unbounded following) as min_amt3,

-- 以按shop_id分组、按stat_date排序、从起点到当前行的前一行的最小数

min(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and 1 preceding) as min_amt4,

-- 以按shop_id分组、按stat_date排序、从起点到当前行的最小数

min(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and current row) as min_amt5,

-- 以按shop_id分组、按stat_date排序、从当前行的末尾的最小数

min(ordamt) over(partition by shop_id order by stat_date rows between current row and unbounded following) as min_amt6,

-- 以按shop_id分组、按stat_date排序、当前行后一行到末尾的最小数

min(ordamt) over(partition by shop_id order by stat_date rows between 1 following and unbounded following) as min_amt7,

-- 以按shop_id分组、按stat_date排序、按从当前行往后一行到当前行往后2行的最小数

min(ordamt) over(partition by shop_id order by stat_date rows between 1 following and 2 following) as min_amt8

from shop_data;

OK

shop_id stat_date ordamt min_amt1 min_amt2 min_amt3 min_amt4 min_amt5 min_amt6 min_amt7 min_amt8

10026 201901230030 5170.0 5170.0 2396.0 479.0 NULL 5170.0 479.0 479.0 2396.0

10026 201901230100 5669.0 5170.0 1498.0 479.0 5170.0 5170.0 479.0 479.0 1498.0

10026 201901230130 2396.0 2396.0 1498.0 479.0 5170.0 2396.0 479.0 479.0 1498.0

10026 201901230200 1498.0 1498.0 1188.0 479.0 2396.0 1498.0 479.0 479.0 1188.0

10026 201901230230 1997.0 1498.0 598.0 479.0 1498.0 1498.0 479.0 479.0 598.0

10026 201901230300 1188.0 1188.0 479.0 479.0 1498.0 1188.0 479.0 479.0 479.0

10026 201901230330 598.0 598.0 479.0 479.0 1188.0 598.0 479.0 479.0 479.0

10026 201901230400 479.0 479.0 479.0 479.0 598.0 479.0 479.0 799.0 799.0

10026 201901230430 1587.0 479.0 479.0 479.0 479.0 479.0 799.0 799.0 799.0

10026 201901230530 799.0 479.0 799.0 479.0 479.0 479.0 799.0 NULL NULL

10027 201901230030 2170.0 2170.0 1623.0 1001.0 NULL 2170.0 1001.0 1001.0 1623.0

10027 201901230100 1623.0 1623.0 1434.0 1001.0 2170.0 1623.0 1001.0 1001.0 1434.0

10027 201901230130 3397.0 1623.0 1001.0 1001.0 1623.0 1623.0 1001.0 1001.0 1001.0

10027 201901230200 1434.0 1434.0 1001.0 1001.0 1623.0 1434.0 1001.0 1001.0 1001.0

10027 201901230230 1001.0 1001.0 1001.0 1001.0 1434.0 1001.0 1001.0 NULL NULL

10028 201901230300 1687.0 1687.0 149.0 149.0 NULL 1687.0 149.0 149.0 149.0

10028 201901230330 1298.0 1298.0 149.0 149.0 1687.0 1298.0 149.0 149.0 149.0

10028 201901230400 149.0 149.0 149.0 149.0 1298.0 149.0 149.0 NULL NULL

10029 201901230430 2587.0 2587.0 589.0 589.0 NULL 2587.0 589.0 589.0 589.0

10029 201901230530 589.0 589.0 589.0 589.0 2587.0 589.0 589.0 NULL NULL

max开窗函数

select

shop_id, stat_date, ordamt,

-- 以按shop_id分组、按stat_date排序、

max(ordamt) over(partition by shop_id order by stat_date) as max_amt1,

-- 以按shop_id分组、按stat_date排序、按当前行+往前1行+往后2行的行作为窗口的最大数

max(ordamt) over(partition by shop_id order by stat_date rows between 1 preceding and 2 following) as max_amt2,

-- 以按shop_id分组、按stat_date排序、从起点到末尾的最大数

max(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and unbounded following) as max_amt3,

-- 以按shop_id分组、按stat_date排序、从起点到当前行的前一行的最大数

max(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and 1 preceding) as max_amt4,

-- 以按shop_id分组、按stat_date排序、从起点到当前行的最大数

max(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and current row) as max_amt5,

-- 以按shop_id分组、按stat_date排序、从当前行的末尾的最大数

max(ordamt) over(partition by shop_id order by stat_date rows between current row and unbounded following) as max_amt6,

-- 以按shop_id分组、按stat_date排序、当前行后一行到末尾的最大数

max(ordamt) over(partition by shop_id order by stat_date rows between 1 following and unbounded following) as max_amt7,

-- 以按shop_id分组、按stat_date排序、按从当前行往后一行到当前行往后2行的最大数

max(ordamt) over(partition by shop_id order by stat_date rows between 1 following and 2 following) as max_amt8

from shop_data;

OK

shop_id stat_date ordamt max_amt1 max_amt2 max_amt3 max_amt4 max_amt5 max_amt6 max_amt7 max_amt8

10026 201901230030 5170.0 5170.0 5669.0 5669.0 NULL 5170.0 5669.0 5669.0 5669.0

10026 201901230100 5669.0 5669.0 5669.0 5669.0 5170.0 5669.0 5669.0 2396.0 2396.0

10026 201901230130 2396.0 5669.0 5669.0 5669.0 5669.0 5669.0 2396.0 1997.0 1997.0

10026 201901230200 1498.0 5669.0 2396.0 5669.0 5669.0 5669.0 1997.0 1997.0 1997.0

10026 201901230230 1997.0 5669.0 1997.0 5669.0 5669.0 5669.0 1997.0 1587.0 1188.0

10026 201901230300 1188.0 5669.0 1997.0 5669.0 5669.0 5669.0 1587.0 1587.0 598.0

10026 201901230330 598.0 5669.0 1587.0 5669.0 5669.0 5669.0 1587.0 1587.0 1587.0

10026 201901230400 479.0 5669.0 1587.0 5669.0 5669.0 5669.0 1587.0 1587.0 1587.0

10026 201901230430 1587.0 5669.0 1587.0 5669.0 5669.0 5669.0 1587.0 799.0 799.0

10026 201901230530 799.0 5669.0 1587.0 5669.0 5669.0 5669.0 799.0 NULL NULL

10027 201901230030 2170.0 2170.0 3397.0 3397.0 NULL 2170.0 3397.0 3397.0 3397.0

10027 201901230100 1623.0 2170.0 3397.0 3397.0 2170.0 2170.0 3397.0 3397.0 3397.0

10027 201901230130 3397.0 3397.0 3397.0 3397.0 2170.0 3397.0 3397.0 1434.0 1434.0

10027 201901230200 1434.0 3397.0 3397.0 3397.0 3397.0 3397.0 1434.0 1001.0 1001.0

10027 201901230230 1001.0 3397.0 1434.0 3397.0 3397.0 3397.0 1001.0 NULL NULL

10028 201901230300 1687.0 1687.0 1687.0 1687.0 NULL 1687.0 1687.0 1298.0 1298.0

10028 201901230330 1298.0 1687.0 1687.0 1687.0 1687.0 1687.0 1298.0 149.0 149.0

10028 201901230400 149.0 1687.0 1298.0 1687.0 1687.0 1687.0 149.0 NULL NULL

10029 201901230430 2587.0 2587.0 2587.0 2587.0 NULL 2587.0 2587.0 589.0 589.0

10029 201901230530 589.0 2587.0 2587.0 2587.0 2587.0 2587.0 589.0 NULL NULL

5、窗口分析函数

first_value开窗函数

select

shop_id, stat_date, ordamt,

-- 以按shop_id分组、按stat_date排序、

first_value(ordamt) over(partition by shop_id order by stat_date) as first_amt1,

-- 以按shop_id分组、按stat_date排序、按当前行+往前1行+往后2行的行作为窗口的第一个值

first_value(ordamt) over(partition by shop_id order by stat_date rows between 1 preceding and 2 following) as first_amt2,

-- 以按shop_id分组、按stat_date排序、从起点到末尾的第一个值

first_value(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and unbounded following) as first_amt3,

-- 以按shop_id分组、按stat_date排序、从起点到当前行的前一行的第一个值

first_value(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and 1 preceding) as first_amt4,

-- 以按shop_id分组、按stat_date排序、从起点到当前行的第一个值

first_value(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and current row) as first_amt5,

-- 以按shop_id分组、按stat_date排序、从当前行的末尾的第一个值

first_value(ordamt) over(partition by shop_id order by stat_date rows between current row and unbounded following) as first_amt6,

-- 以按shop_id分组、按stat_date排序、当前行后一行到末尾的第一个值

first_value(ordamt) over(partition by shop_id order by stat_date rows between 1 following and unbounded following) as first_amt7,

-- 以按shop_id分组、按stat_date排序、按从当前行往后一行到当前行往后2行的第一个值

first_value(ordamt) over(partition by shop_id order by stat_date rows between 1 following and 2 following) as first_amt8

from shop_data;

OK

shop_id stat_date ordamt first_amt1 first_amt2 first_amt3 first_amt4 first_amt5 first_amt6 first_amt7 first_amt8

10026 201901230030 5170.0 5170.0 5170.0 5170.0 NULL 5170.0 5170.0 5669.0 5669.0

10026 201901230100 5669.0 5170.0 5170.0 5170.0 5170.0 5170.0 5669.0 2396.0 2396.0

10026 201901230130 2396.0 5170.0 5669.0 5170.0 5170.0 5170.0 2396.0 1498.0 1498.0

10026 201901230200 1498.0 5170.0 2396.0 5170.0 5170.0 5170.0 1498.0 1997.0 1997.0

10026 201901230230 1997.0 5170.0 1498.0 5170.0 5170.0 5170.0 1997.0 1188.0 1188.0

10026 201901230300 1188.0 5170.0 1997.0 5170.0 5170.0 5170.0 1188.0 598.0 598.0

10026 201901230330 598.0 5170.0 1188.0 5170.0 5170.0 5170.0 598.0 479.0 479.0

10026 201901230400 479.0 5170.0 598.0 5170.0 5170.0 5170.0 479.0 1587.0 1587.0

10026 201901230430 1587.0 5170.0 479.0 5170.0 5170.0 5170.0 1587.0 799.0 799.0

10026 201901230530 799.0 5170.0 1587.0 5170.0 5170.0 5170.0 799.0 NULL NULL

10027 201901230030 2170.0 2170.0 2170.0 2170.0 NULL 2170.0 2170.0 1623.0 1623.0

10027 201901230100 1623.0 2170.0 2170.0 2170.0 2170.0 2170.0 1623.0 3397.0 3397.0

10027 201901230130 3397.0 2170.0 1623.0 2170.0 2170.0 2170.0 3397.0 1434.0 1434.0

10027 201901230200 1434.0 2170.0 3397.0 2170.0 2170.0 2170.0 1434.0 1001.0 1001.0

10027 201901230230 1001.0 2170.0 1434.0 2170.0 2170.0 2170.0 1001.0 NULL NULL

10028 201901230300 1687.0 1687.0 1687.0 1687.0 NULL 1687.0 1687.0 1298.0 1298.0

10028 201901230330 1298.0 1687.0 1687.0 1687.0 1687.0 1687.0 1298.0 149.0 149.0

10028 201901230400 149.0 1687.0 1298.0 1687.0 1687.0 1687.0 149.0 NULL NULL

10029 201901230430 2587.0 2587.0 2587.0 2587.0 NULL 2587.0 2587.0 589.0 589.0

10029 201901230530 589.0 2587.0 2587.0 2587.0 2587.0 2587.0 589.0 NULL NULL

last_value开窗函数

select

shop_id, stat_date, ordamt,

-- 以按shop_id分组、按stat_date排序、

last_value(ordamt) over(partition by shop_id order by stat_date) as last_amt1,

-- 以按shop_id分组、按stat_date排序、按当前行+往前1行+往后2行的行作为窗口的最后一个值

last_value(ordamt) over(partition by shop_id order by stat_date rows between 1 preceding and 2 following) as last_amt2,

-- 以按shop_id分组、按stat_date排序、从起点到末尾的最后一个值

last_value(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and unbounded following) as last_amt3,

-- 以按shop_id分组、按stat_date排序、从起点到当前行的前一行的最后一个值

last_value(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and 1 preceding) as last_amt4,

-- 以按shop_id分组、按stat_date排序、从起点到当前行的最后一个值

last_value(ordamt) over(partition by shop_id order by stat_date rows between unbounded preceding and current row) as last_amt5,

-- 以按shop_id分组、按stat_date排序、从当前行的末尾的最后一个值

last_value(ordamt) over(partition by shop_id order by stat_date rows between current row and unbounded following) as last_amt6,

-- 以按shop_id分组、按stat_date排序、当前行后一行到末尾的最后一个值

last_value(ordamt) over(partition by shop_id order by stat_date rows between 1 following and unbounded following) as last_amt7,

-- 以按shop_id分组、按stat_date排序、按从当前行往后一行到当前行往后2行的最后一个值

last_value(ordamt) over(partition by shop_id order by stat_date rows between 1 following and 2 following) as last_amt8

from shop_data;

OK

shop_id stat_date ordamt last_amt1 last_amt2 last_amt3 last_amt4 last_amt5 last_amt6 last_amt7 last_amt8

10026 201901230030 5170.0 5170.0 2396.0 799.0 NULL 5170.0 799.0 799.0 2396.0

10026 201901230100 5669.0 5669.0 1498.0 799.0 5170.0 5669.0 799.0 799.0 1498.0

10026 201901230130 2396.0 2396.0 1997.0 799.0 5669.0 2396.0 799.0 799.0 1997.0

10026 201901230200 1498.0 1498.0 1188.0 799.0 2396.0 1498.0 799.0 799.0 1188.0

10026 201901230230 1997.0 1997.0 598.0 799.0 1498.0 1997.0 799.0 799.0 598.0

10026 201901230300 1188.0 1188.0 479.0 799.0 1997.0 1188.0 799.0 799.0 479.0

10026 201901230330 598.0 598.0 1587.0 799.0 1188.0 598.0 799.0 799.0 1587.0

10026 201901230400 479.0 479.0 799.0 799.0 598.0 479.0 799.0 799.0 799.0

10026 201901230430 1587.0 1587.0 799.0 799.0 479.0 1587.0 799.0 799.0 799.0

10026 201901230530 799.0 799.0 799.0 799.0 1587.0 799.0 799.0 NULL NULL

10027 201901230030 2170.0 2170.0 3397.0 1001.0 NULL 2170.0 1001.0 1001.0 3397.0

10027 201901230100 1623.0 1623.0 1434.0 1001.0 2170.0 1623.0 1001.0 1001.0 1434.0

10027 201901230130 3397.0 3397.0 1001.0 1001.0 1623.0 3397.0 1001.0 1001.0 1001.0

10027 201901230200 1434.0 1434.0 1001.0 1001.0 3397.0 1434.0 1001.0 1001.0 1001.0

10027 201901230230 1001.0 1001.0 1001.0 1001.0 1434.0 1001.0 1001.0 NULL NULL

10028 201901230300 1687.0 1687.0 149.0 149.0 NULL 1687.0 149.0 149.0 149.0

10028 201901230330 1298.0 1298.0 149.0 149.0 1687.0 1298.0 149.0 149.0 149.0

10028 201901230400 149.0 149.0 149.0 149.0 1298.0 149.0 149.0 NULL NULL

10029 201901230430 2587.0 2587.0 589.0 589.0 NULL 2587.0 589.0 589.0 589.0

10029 201901230530 589.0 589.0 589.0 589.0 2587.0 589.0 589.0 NULL NULL

lag开窗函数

注意:

1、使用第三个参数设置默认值时,默认值的数据类型需要和列的数据类型保持一致,否则设置不生效。

2、使用lag() 和 lead() 不能对窗口限定边界,必须是 unbounded 无界的,如果设置了边界,会出现如下报错信息。

Error while compiling statement: FAILED: SemanticException Failed to breakup Windowing invocations into Groups.

At least 1 group must only depend on input columns. Also check for circular dependencies.

Underlying error: Expecting left window frame boundary for function lag((tok_table_or_col ordamt), 1, 'NA')

Window Spec=[PartitioningSpec=[partitionColumns=[(tok_table_or_col shop_id)]orderColumns=[(tok_table_or_col

stat_date) ASC]]window(start=range(1 FOLLOWING), end=range(Unbounded FOLLOWING))] as _wcol2 to be unbounded.

select

shop_id, stat_date, ordamt,

-- 以按shop_id分组、按stat_date排序、注意第三个参数默认值的类型需要和列类型匹配,否则不生效

lag(ordamt, 1, 0) over(partition by shop_id order by stat_date) as last_amt1,

lag(ordamt, 2, 'NA') over(partition by shop_id order by stat_date rows between unbounded preceding and unbounded following) as last_amt2

from shop_data;

OK

shop_id stat_date ordamt last_amt1 last_amt2

10026 201901230030 5170 0 0

10026 201901230100 5669 5170 0

10026 201901230130 2396 5669 5170

10026 201901230200 1498 2396 5669

10026 201901230230 1997 1498 2396

10026 201901230300 1188 1997 1498

10026 201901230330 598 1188 1997

10026 201901230400 479 598 1188

10026 201901230430 1587 479 598

10026 201901230530 799 1587 479

10027 201901230030 2170 0 0

10027 201901230100 1623 2170 0

10027 201901230130 3397 1623 2170

10027 201901230200 1434 3397 1623

10027 201901230230 1001 1434 3397

10028 201901230300 1687 0 0

10028 201901230330 1298 1687 0

10028 201901230400 149 1298 1687

10029 201901230430 2587 0 0

10029 201901230530 589 2587 0

lead开窗函数

select

shop_id, stat_date, ordamt,

-- 以按shop_id分组、按stat_date排序、注意第三个参数默认值的类型需要和列类型匹配,否则不生效

lead(ordamt, 1, 0) over(partition by shop_id order by stat_date) as last_amt1,

lead(ordamt, 2, 'NA') over(partition by shop_id order by stat_date rows between unbounded preceding and unbounded following) as last_amt2

from shop_data;

OK

shop_id stat_date ordamt last_amt1 last_amt2

10026 201901230030 5170 5669 2396

10026 201901230100 5669 2396 1498

10026 201901230130 2396 1498 1997

10026 201901230200 1498 1997 1188

10026 201901230230 1997 1188 598

10026 201901230300 1188 598 479

10026 201901230330 598 479 1587

10026 201901230400 479 1587 799

10026 201901230430 1587 799 0

10026 201901230530 799 0 0

10027 201901230030 2170 1623 3397

10027 201901230100 1623 3397 1434

10027 201901230130 3397 1434 1001

10027 201901230200 1434 1001 0

10027 201901230230 1001 0 0

10028 201901230300 1687 1298 149

10028 201901230330 1298 149 0

10028 201901230400 149 0 0

10029 201901230430 2587 589 0

10029 201901230530 589 0 0

cume_dist开窗函数

计算某个窗口或分区中某个值的累积分布。假定升序排序,则使用以下公式确定累积分布:

小于等于当前值x的行数 / 窗口或partition分区内的总行数。其中,x 等于 order by 子句中指定的列的当前行中的值。

select

shop_id, stat_date, ordamt,

-- 统计小于等于当前销售额的订单占总订单的比例

cume_dist() over(order by ordamt) as cume_dist1,

-- 统计大于等于当前销售额的订单占总订单的比例

cume_dist() over(order by ordamt desc) as cume_dist2,

-- 统计分区内小于等于当前销售额的订单占总订单的比例

round(cume_dist() over(partition by shop_id order by ordamt), 2) as cume_dist3

from shop_data;

shop_id stat_date ordamt cume_dist1 cume_dist2 cume_dist3

10026 201901230400 479.0 0.1 0.95 0.1

10026 201901230330 598.0 0.2 0.85 0.2

10026 201901230530 799.0 0.25 0.8 0.3

10026 201901230300 1188.0 0.35 0.7 0.4

10026 201901230200 1498.0 0.5 0.55 0.5

10026 201901230430 1587.0 0.55 0.5 0.6

10026 201901230230 1997.0 0.7 0.35 0.7

10026 201901230130 2396.0 0.8 0.25 0.8

10026 201901230030 5170.0 0.95 0.1 0.9

10026 201901230100 5669.0 1.0 0.05 1.0

10027 201901230230 1001.0 0.3 0.75 0.2

10027 201901230200 1434.0 0.45 0.6 0.4

10027 201901230100 1623.0 0.6 0.45 0.6

10027 201901230030 2170.0 0.75 0.3 0.8

10027 201901230130 3397.0 0.9 0.15 1.0

10028 201901230400 149.0 0.05 1.0 0.33

10028 201901230330 1298.0 0.4 0.65 0.67

10028 201901230300 1687.0 0.65 0.4 1.0

10029 201901230530 589.0 0.15 0.9 0.5

10029 201901230430 2587.0 0.85 0.2 1.0

6、窗口排序函数

rank开窗函数

rank 开窗函数基于 over 子句中的 order by 确定一组值中一个值的排名。

如果存在partition by ,则为每个分区组中的每个值排名。排名可能不是连续的。例如,如果两个行的排名为 1,则下一个排名为 3。

select

shop_id, stat_date, ordamt,

-- 以按shop_id排序

rank() over(order by shop_id) as rank_amt1,

-- 以按shop_id分区、按stat_date排序

rank() over(partition by shop_id order by stat_date) as rank_amt2

from shop_data;

OK

shop_id stat_date ordamt rank_amt1 rank_amt2

10026 201901230030 5170.0 1 1

10026 201901230100 5669.0 1 2

10026 201901230130 2396.0 1 3

10026 201901230200 1498.0 1 4

10026 201901230230 1997.0 1 5

10026 201901230300 1188.0 1 6

10026 201901230330 598.0 1 7

10026 201901230400 479.0 1 8

10026 201901230430 1587.0 1 9

10026 201901230530 799.0 1 10

10027 201901230030 2170.0 11 1

10027 201901230100 1623.0 11 2

10027 201901230130 3397.0 11 3

10027 201901230200 1434.0 11 4

10027 201901230230 1001.0 11 5

10028 201901230300 1687.0 16 1

10028 201901230330 1298.0 16 2

10028 201901230400 149.0 16 3

10029 201901230430 2587.0 19 1

10029 201901230530 589.0 19 2

dense_rank开窗函数

dense_rank与rank有一点不同,当排名一样的时候,接下来的行是连续的。如两个行的排名为 1,则下一个排名为 2。

select

shop_id, stat_date, ordamt,

-- 以按shop_id排序

dense_rank() over(order by shop_id) as dense_amt1,

-- 以按shop_id分区、按stat_date排序

dense_rank() over(partition by shop_id order by stat_date) as dense_amt2

from shop_data;

OK

shop_id stat_date ordamt dense_amt1 dense_amt2

10026 201901230030 5170.0 1 1

10026 201901230100 5669.0 1 2

10026 201901230130 2396.0 1 3

10026 201901230200 1498.0 1 4

10026 201901230230 1997.0 1 5

10026 201901230300 1188.0 1 6

10026 201901230330 598.0 1 7

10026 201901230400 479.0 1 8

10026 201901230430 1587.0 1 9

10026 201901230530 799.0 1 10

10027 201901230030 2170.0 2 1

10027 201901230100 1623.0 2 2

10027 201901230130 3397.0 2 3

10027 201901230200 1434.0 2 4

10027 201901230230 1001.0 2 5

10028 201901230300 1687.0 3 1

10028 201901230330 1298.0 3 2

10028 201901230400 149.0 3 3

10029 201901230430 2587.0 4 1

10029 201901230530 589.0 4 2

ntile开窗函数

将分区中已排序的行划分为大小尽可能相等的指定数量的排名的组,并返回给定行所在的组的排名。

select

shop_id, stat_date, ordamt,

-- 以按shop_id分成两组、按stat_date排序

ntile(2) over(partition by shop_id order by stat_date) as ntile_amt1,

-- 以按shop_id分成三组、按stat_date排序

ntile(3) over(partition by shop_id order by stat_date) as ntile_amt2

from shop_data;

OK

shop_id stat_date ordamt ntile_amt1 ntile_amt2

10026 201901230030 5170.0 1 1

10026 201901230100 5669.0 1 1

10026 201901230130 2396.0 1 1

10026 201901230200 1498.0 1 1

10026 201901230230 1997.0 1 2

10026 201901230300 1188.0 2 2

10026 201901230330 598.0 2 2

10026 201901230400 479.0 2 3

10026 201901230430 1587.0 2 3

10026 201901230530 799.0 2 3

10027 201901230030 2170.0 1 1

10027 201901230100 1623.0 1 1

10027 201901230130 3397.0 1 2

10027 201901230200 1434.0 2 2

10027 201901230230 1001.0 2 3

10028 201901230300 1687.0 1 1

10028 201901230330 1298.0 1 2

10028 201901230400 149.0 2 3

10029 201901230430 2587.0 1 1

10029 201901230530 589.0 2 2

row_number开窗函数

从1开始对分区内的数据排序。

select

shop_id, stat_date, ordamt,

-- 以按shop_id分区、按stat_date排序

row_number() over(partition by shop_id order by stat_date) as row_amt

from shop_data;

OK

shop_id stat_date ordamt row_amt

10026 201901230030 5170.0 1

10026 201901230100 5669.0 2

10026 201901230130 2396.0 3

10026 201901230200 1498.0 4

10026 201901230230 1997.0 5

10026 201901230300 1188.0 6

10026 201901230330 598.0 7

10026 201901230400 479.0 8

10026 201901230430 1587.0 9

10026 201901230530 799.0 10

10027 201901230030 2170.0 1

10027 201901230100 1623.0 2

10027 201901230130 3397.0 3

10027 201901230200 1434.0 4

10027 201901230230 1001.0 5

10028 201901230300 1687.0 1

10028 201901230330 1298.0 2

10028 201901230400 149.0 3

10029 201901230430 2587.0 1

10029 201901230530 589.0 2

percent_rank开窗函数

计算给定行的百分比排名。可以用来计算超过了百分之多少的人。如360小助手开机速度超过了百分之多少的人。

(当前行的rank值-1)/(分组内的总行数-1)

select

shop_id, stat_date, ordamt,

-- 以按shop_id分区、按stat_date排序

row_number() over(partition by shop_id order by stat_date) as row_amt,

round(percent_rank() over(partition by shop_id order by stat_date), 2) as percent_amt

from shop_data;

OK

shop_id stat_date ordamt row_amt percent_amt

10026 201901230030 5170.0 1 0.0

10026 201901230100 5669.0 2 0.11

10026 201901230130 2396.0 3 0.22

10026 201901230200 1498.0 4 0.33

10026 201901230230 1997.0 5 0.44

10026 201901230300 1188.0 6 0.56

10026 201901230330 598.0 7 0.67

10026 201901230400 479.0 8 0.78

10026 201901230430 1587.0 9 0.89

10026 201901230530 799.0 10 1.0

10027 201901230030 2170.0 1 0.0

10027 201901230100 1623.0 2 0.25

10027 201901230130 3397.0 3 0.5

10027 201901230200 1434.0 4 0.75

10027 201901230230 1001.0 5 1.0

10028 201901230300 1687.0 1 0.0

10028 201901230330 1298.0 2 0.5

10028 201901230400 149.0 3 1.0

10029 201901230430 2587.0 1 0.0

10029 201901230530 589.0 2 1.0