python 爬虫之 爬取网页并保存(简单基础知识)

基础知识认识

首先导入所需要的库

from fake_useragent import UserAgent#头部库

from urllib.request import Request,urlopen#请求和打开

from urllib.parse import quote#转码

from urllib.parse import urlencode#转码

先获取一个简单的网页

url = "https://www.baidu.com/?tn=02003390_43_hao_pg" #获取一个网址

response = urlopen(url)#将网址打开

info = response.read()#读取网页内容

info.decode()#将其转码,utf-8

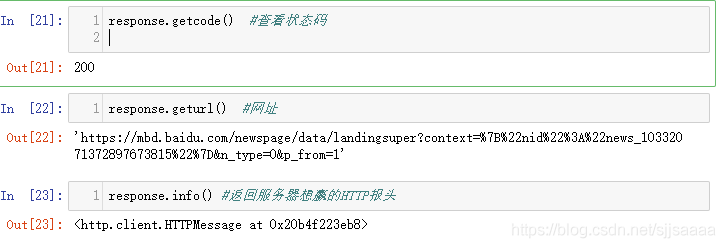

response.getcode() #查看状态码

response.geturl() #查看当前网址

response.info() #返回服务器想赢的HTTP报头

随机获取一个头部

导入专用库

from fake_useragent import UserAgent#头部库

UserAgent().random

ua.choram#这两种都可以

就可以随机获得一个头部。

将头部添加到headers中

首先将随机获得的头部保存在headers中

headers = {"User-Agent":UserAgent().random}

请求

request = Request(url,headers=headers)

获取一个网页

url = "https://www.baidu.com/?tn=02003390_43_hao_pg"

headers = {"UserAgent":UserAgent().random}#头部

request = Request(url,headers = headers)#请求

response = urlopen(request)#打开

info = response.read()#读取

info.decode()#转码

转码:将中文转成网页编码

#转码

from urllib.parse import quote

quote("百度")

url = "https://www.baidu.com/s?wd={}".format(quote("百度"))

from urllib.request import Request,urlopen

from urllib.parse import urlencode

args = {

"wd":"百度"

,"ie":"utf-8"

}

urlencode(args)

url = "https://www.baidu.com/s?{}".format(urlencode(args))

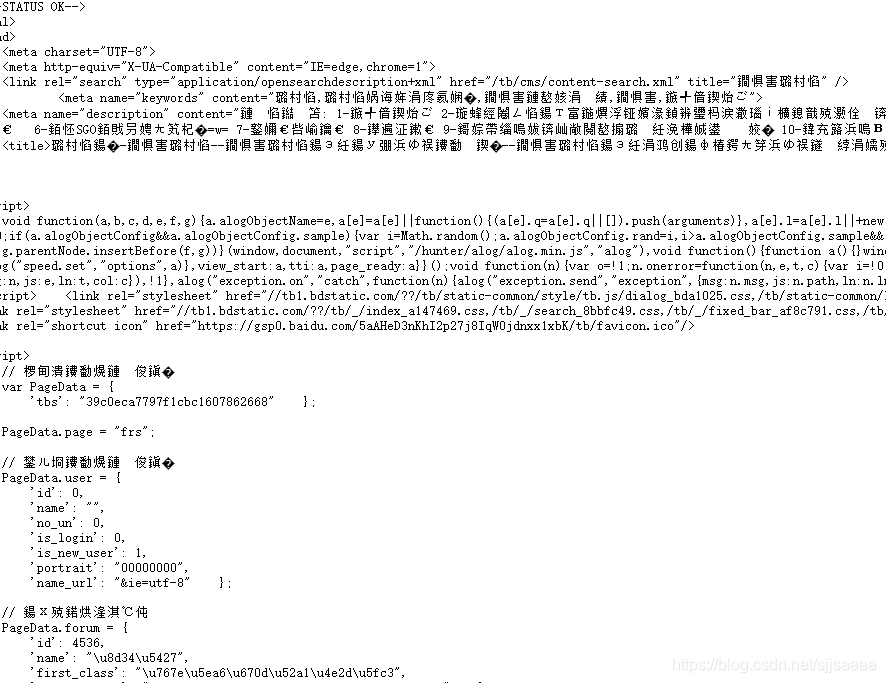

案例

爬取几个网页并保存

简单的爬取十页

#爬取贴吧

from fake_useragent import UserAgent

from urllib.request import Request,urlopen

from urllib.parse import quote

from urllib.parse import urlencode

headers = {"User-Agent":UserAgent().random}

jihe = []

for i in range(0,501,50):

url = "https://tieba.baidu.com/f?kw=%E5%B0%9A%E5%AD%A6%E5%A0%82&ie=utf-8&pn={}".format(i)

headers = {"User-Agent":UserAgent().random}

request = Request(url,headers=headers)

response = urlopen(request)

info = response.read().decode()

jihe.append(info)

print("第{}页保存成功!".format(int(i/50 +1)))

def get_html(url):

"""

获取网页函数

"""

headers = {"User-Agent":UserAgent().random}

request = Request(url,headers=headers)

response = urlopen(request)

return response.read()

def save_html(filename,html_bytes):

"""

保存网页函数

"""

with open("fliename","wb") as f:

f.write(html_bytes)

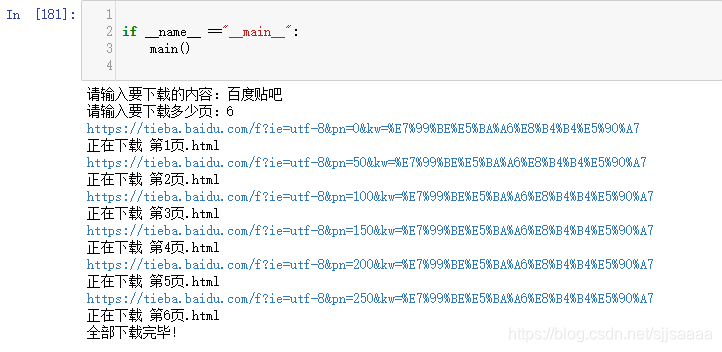

def main():

"""

主函数

"""

content = input("请输入要下载的内容:")

num = int(input("请输入要下载多少页:"))

base_url = "https://tieba.baidu.com/f?ie=utf-8&{}"

for pn in range(num):

args = {

"pn":pn * 50,

"kw":content

}

args = urlencode(args)

url = base_url.format(args)

print(url)

html_bytes = get_html(url)

filrname = "第{}页.html".format(pn+1)

save_html(filrname,html_bytes)

print("正在下载",filrname)

print("全部下载完毕!")

if __name__ =="__main__":

main()