Case Study: Muxing H.264 and AAC into FLV

Contents

- FFmpeg muxing flow
- FFmpeg function: avformat_write_header
- FFmpeg function: avformat_alloc_output_context2
- FFmpeg struct: AVOutputFormat
- FFmpeg function: avformat_new_stream
- FFmpeg function: av_interleaved_write_frame
- FFmpeg function: av_compare_ts
- FFmpeg timestamps in detail
- Code: muxing H.264 and AAC into FLV

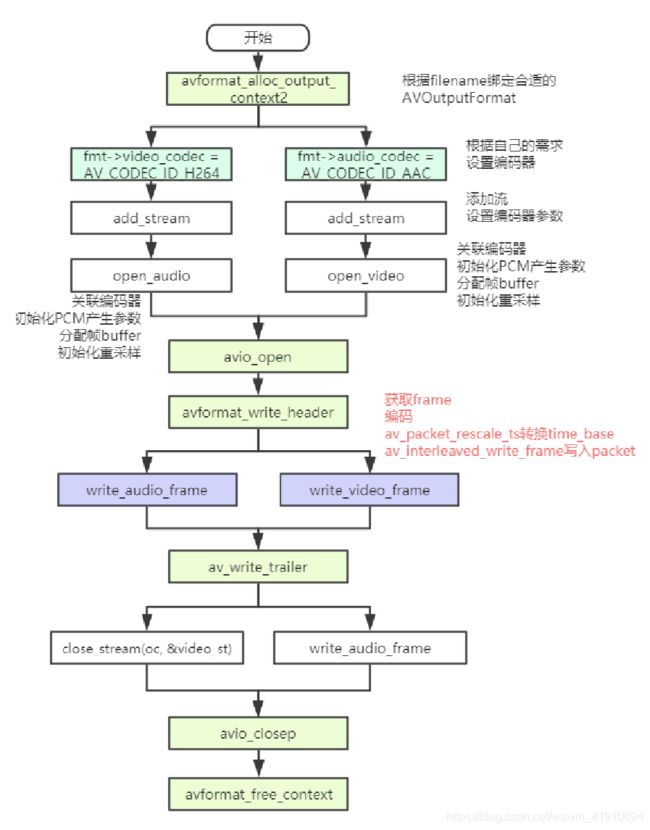

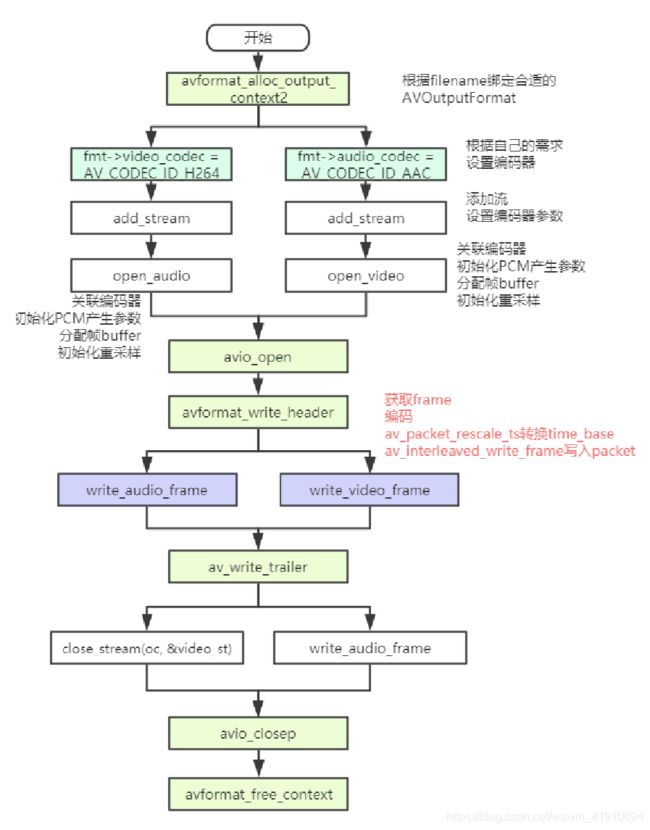

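1. FFmpeg muxing flow

- This example program generates synthetic audio and video streams, encodes them, and muxes them into an output file. The output format is guessed automatically from the file extension.

- The example's flowchart is shown in the figures above.

- FFmpeg muxing consists of three main steps:
  - avformat_write_header: write the file header
  - av_write_frame / av_interleaved_write_frame: write packets
  - av_write_trailer: write the file trailer

- avcodec_parameters_from_context: copies the stream parameters from an AVCodecContext into an AVCodecParameters (the example uses it to fill each AVStream's codecpar before the header is written). It is the inverse of avcodec_parameters_to_context.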

2. FFmpeg function: avformat_write_header

Implementation in libavformat/mux.c (FFmpeg 4.x):

```c
int avformat_write_header(AVFormatContext *s, AVDictionary **options)
{
    int ret = 0;
    int already_initialized = s->internal->initialized;
    int streams_already_initialized = s->internal->streams_initialized;

    /* run avformat_init_output() unless the caller already did */
    if (!already_initialized)
        if ((ret = avformat_init_output(s, options)) < 0)
            return ret;

    if (!(s->oformat->flags & AVFMT_NOFILE) && s->pb)
        avio_write_marker(s->pb, AV_NOPTS_VALUE, AVIO_DATA_MARKER_HEADER);

    /* dispatch to the muxer's own header writer, e.g. flv_write_header() */
    if (s->oformat->write_header) {
        ret = s->oformat->write_header(s);
        if (ret >= 0 && s->pb && s->pb->error < 0)
            ret = s->pb->error;
        if (ret < 0)
            goto fail;
        flush_if_needed(s);
    }

    if (!(s->oformat->flags & AVFMT_NOFILE) && s->pb)
        avio_write_marker(s->pb, AV_NOPTS_VALUE, AVIO_DATA_MARKER_UNKNOWN);

    if (!s->internal->streams_initialized) {
        if ((ret = init_pts(s)) < 0)
            goto fail;
    }

    return streams_already_initialized;

fail:
    deinit_muxer(s);
    return ret;
}
```

- It eventually calls the muxer's own write_header callback, for example the FLV muxer's (libavformat/flvenc.c):

```c
AVOutputFormat ff_flv_muxer = {
    .name           = "flv",
    .long_name      = NULL_IF_CONFIG_SMALL("FLV (Flash Video)"),
    .mime_type      = "video/x-flv",
    .extensions     = "flv",
    .priv_data_size = sizeof(FLVContext),
    .audio_codec    = CONFIG_LIBMP3LAME ? AV_CODEC_ID_MP3 : AV_CODEC_ID_ADPCM_SWF,
    .video_codec    = AV_CODEC_ID_FLV1,
    .init           = flv_init,
    .write_header   = flv_write_header,
    .write_packet   = flv_write_packet,
    .write_trailer  = flv_write_trailer,
    .check_bitstream= flv_check_bitstream,
    .codec_tag      = (const AVCodecTag* const []) {
                          flv_video_codec_ids, flv_audio_codec_ids, 0
                      },
    .flags          = AVFMT_GLOBALHEADER | AVFMT_VARIABLE_FPS |
                      AVFMT_TS_NONSTRICT,
    .priv_class     = &flv_muxer_class,
};
```

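3. FFmpeg function: avformat_alloc_output_context2

- Declaration in libavformat/avformat.h:

```c
int avformat_alloc_output_context2(AVFormatContext **ctx, ff_const59 AVOutputFormat *oformat,
                                   const char *format_name, const char *filename);
```

- Parameters:
  - ctx: receives the newly allocated context; it is set to NULL on failure.
  - oformat: the AVOutputFormat to use. If it is NULL, FFmpeg infers the format from the two following parameters, format_name and filename.
  - format_name: the container format name, e.g. "flv" or "mpeg". If it is NULL, the format is inferred from filename.
  - filename: the path of the output file. If both oformat and format_name are NULL, FFmpeg picks a suitable muxer from the file extension, e.g. xxx.flv selects the FLV muxer.
- Return value: >= 0 on success, a negative AVERROR code on failure.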

```c
int avformat_alloc_output_context2(AVFormatContext **avctx, ff_const59 AVOutputFormat *oformat,
                                   const char *format, const char *filename)
{
    AVFormatContext *s = avformat_alloc_context();
    int ret = 0;

    *avctx = NULL;
    if (!s)
        goto nomem;

    if (!oformat) {
        if (format) {
            /* an explicit format name takes precedence */
            oformat = av_guess_format(format, NULL, NULL);
            if (!oformat) {
                av_log(s, AV_LOG_ERROR, "Requested output format '%s' is not a suitable output format\n", format);
                ret = AVERROR(EINVAL);
                goto error;
            }
        } else {
            /* otherwise guess from the file name (extension) */
            oformat = av_guess_format(NULL, filename, NULL);
            if (!oformat) {
                ret = AVERROR(EINVAL);
                av_log(s, AV_LOG_ERROR, "Unable to find a suitable output format for '%s'\n",
                       filename);
                goto error;
            }
        }
    }

    s->oformat = oformat;
    /* allocate the muxer's private context (e.g. FLVContext) and apply its option defaults */
    if (s->oformat->priv_data_size > 0) {
        s->priv_data = av_mallocz(s->oformat->priv_data_size);
        if (!s->priv_data)
            goto nomem;
        if (s->oformat->priv_class) {
            *(const AVClass**)s->priv_data= s->oformat->priv_class;
            av_opt_set_defaults(s->priv_data);
        }
    } else
        s->priv_data = NULL;

    if (filename) {
#if FF_API_FORMAT_FILENAME
FF_DISABLE_DEPRECATION_WARNINGS
        av_strlcpy(s->filename, filename, sizeof(s->filename));
FF_ENABLE_DEPRECATION_WARNINGS
#endif
        if (!(s->url = av_strdup(filename)))
            goto nomem;
    }

    *avctx = s;
    return 0;

nomem:
    av_log(s, AV_LOG_ERROR, "Out of memory\n");
    ret = AVERROR(ENOMEM);
error:
    avformat_free_context(s);
    return ret;
}
```

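- As the code shows, the two key calls are avformat_alloc_context(), which allocates the context, and av_guess_format(), which obtains an AVOutputFormat from the format name or the file name.

- av_guess_format() compares short_name, filename and mime_type against every registered muxer, gives each one a score (+100 for a name match, +10 for a MIME type match, +5 for a file extension match), and returns the muxer with the highest score.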

```c
ff_const59 AVOutputFormat *av_guess_format(const char *short_name, const char *filename,
                                           const char *mime_type)
{
    const AVOutputFormat *fmt = NULL;
    AVOutputFormat *fmt_found = NULL;
    void *i = 0;
    int score_max, score;

    /* special case for numbered image sequences, e.g. "img%03d.png" */
#if CONFIG_IMAGE2_MUXER
    if (!short_name && filename &&
        av_filename_number_test(filename) &&
        ff_guess_image2_codec(filename) != AV_CODEC_ID_NONE) {
        return av_guess_format("image2", NULL, NULL);
    }
#endif
    score_max = 0;
    while ((fmt = av_muxer_iterate(&i))) {
        score = 0;
        if (fmt->name && short_name && av_match_name(short_name, fmt->name))
            score += 100;   /* format name match */
        if (fmt->mime_type && mime_type && !strcmp(fmt->mime_type, mime_type))
            score += 10;    /* MIME type match */
        if (filename && fmt->extensions &&
            av_match_ext(filename, fmt->extensions)) {
            score += 5;     /* file extension match */
        }
        /* strict '>' keeps the first muxer among equal scores */
        if (score > score_max) {
            score_max = score;
            fmt_found = (AVOutputFormat*)fmt;
        }
    }
    return fmt_found;
}
```
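To make the scoring concrete, here is a small stand-alone sketch that re-implements the same weighting (+100 for a short-name match, +10 for a MIME type match, +5 for a file-extension match) over a hypothetical table of three muxers. ToyMuxer, match_list(), guess_format() and demo_muxers are illustrative names, not FFmpeg APIs; the table entries are illustrative too, and the image2 special case is left out.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for AVOutputFormat: only the fields that are scored. */
typedef struct ToyMuxer {
    const char *name;        /* comma-separated short names */
    const char *mime_type;
    const char *extensions;  /* comma-separated, without dots */
} ToyMuxer;

static int equal_nocase(const char *a, const char *b, size_t n) {
    for (size_t i = 0; i < n; i++)
        if (tolower((unsigned char)a[i]) != tolower((unsigned char)b[i]))
            return 0;
    return 1;
}

/* 1 if `item` equals one entry of the comma-separated `list`, ignoring case
 * (the idea behind av_match_name()/av_match_ext()). */
static int match_list(const char *item, const char *list) {
    size_t len = strlen(item);
    while (list && *list) {
        const char *comma = strchr(list, ',');
        size_t n = comma ? (size_t)(comma - list) : strlen(list);
        if (n == len && equal_nocase(item, list, n))
            return 1;
        list = comma ? comma + 1 : NULL;
    }
    return 0;
}

/* Same weights as av_guess_format(); returns NULL when nothing scores. */
static const ToyMuxer *guess_format(const ToyMuxer *muxers, size_t count,
                                    const char *short_name,
                                    const char *filename,
                                    const char *mime_type) {
    const ToyMuxer *found = NULL;
    int score_max = 0;
    const char *ext = filename ? strrchr(filename, '.') : NULL;

    assert(muxers != NULL || count == 0);
    for (size_t i = 0; i < count; i++) {
        int score = 0;
        if (short_name && match_list(short_name, muxers[i].name))
            score += 100;
        if (mime_type && muxers[i].mime_type &&
            strcmp(mime_type, muxers[i].mime_type) == 0)
            score += 10;
        if (ext && match_list(ext + 1, muxers[i].extensions))
            score += 5;
        if (score > score_max) {   /* strict '>' keeps the first best match */
            score_max = score;
            found = &muxers[i];
        }
    }
    return found;
}

/* Illustrative entries only. */
static const ToyMuxer demo_muxers[] = {
    { "flv",    "video/x-flv", "flv" },
    { "mp4",    "video/mp4",   "mp4" },
    { "mpegts", "video/MP2T",  "ts,m2t,m2ts,mts" },
};
```

With no format name, "out.flv" resolves to flv by extension alone, while passing "mp4" as the short name wins even for a .flv file name, because a name match (100) outweighs an extension match (5). This is why format_name overrides the file extension in avformat_alloc_output_context2().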

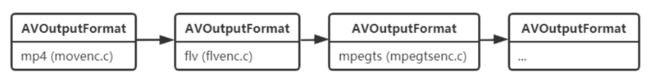

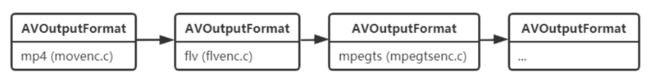

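4. FFmpeg struct: AVOutputFormat

1. Description

- AVOutputFormat describes an output container format. Its main contents are the format's name and description, codec information (default audio/video codecs and the codecs it can store), and callbacks that operate on the container (write_header, write_packet, write_trailer, etc.).

- FFmpeg supports many output formats, such as MP4, FLV and 3GP, and AVOutputFormat holds the information and common settings for each of them.

- Each container format corresponds to one AVOutputFormat. Older FFmpeg versions chained them into a linked list through the next field; since FFmpeg 4.0 muxers are enumerated with av_muxer_iterate(), as in av_guess_format() above.

2. Struct definition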

```c
typedef struct AVOutputFormat {
    const char *name;
    const char *long_name;
    const char *mime_type;
    const char *extensions;
    enum AVCodecID audio_codec;
    enum AVCodecID video_codec;
    enum AVCodecID subtitle_codec;
    int flags;
    const struct AVCodecTag * const *codec_tag;
    const AVClass *priv_class;
#if FF_API_AVIOFORMAT
#define ff_const59
#else
#define ff_const59 const
#endif
    ff_const59 struct AVOutputFormat *next;
    int priv_data_size;
    int (*write_header)(struct AVFormatContext *);
    int (*write_packet)(struct AVFormatContext *, AVPacket *pkt);
    int (*write_trailer)(struct AVFormatContext *);
    int (*interleave_packet)(struct AVFormatContext *, AVPacket *out,
                             AVPacket *in, int flush);
    int (*query_codec)(enum AVCodecID id, int std_compliance);
    void (*get_output_timestamp)(struct AVFormatContext *s, int stream,
                                 int64_t *dts, int64_t *wall);
    int (*control_message)(struct AVFormatContext *s, int type,
                           void *data, size_t data_size);
    int (*write_uncoded_frame)(struct AVFormatContext *, int stream_index,
                               AVFrame **frame, unsigned flags);
    int (*get_device_list)(struct AVFormatContext *s, struct AVDeviceInfoList *device_list);
    int (*create_device_capabilities)(struct AVFormatContext *s, struct AVDeviceCapabilitiesQuery *caps);
    int (*free_device_capabilities)(struct AVFormatContext *s, struct AVDeviceCapabilitiesQuery *caps);
    enum AVCodecID data_codec;
    int (*init)(struct AVFormatContext *);
    void (*deinit)(struct AVFormatContext *);
    int (*check_bitstream)(struct AVFormatContext *, const AVPacket *pkt);
} AVOutputFormat;
```

3. Common fields and their purpose

- const char *name; // short name of the muxer, e.g. "flv"

- const char *long_name; // human-readable descriptive name of the format

- enum AVCodecID audio_codec; // default audio codec

- enum AVCodecID video_codec; // default video codec

- enum AVCodecID subtitle_codec; // default subtitle codec

- Most muxers declare default codecs, so if you want a different codec you have to specify it yourself.

- For example, ff_flv_muxer (shown in full in Section 2) declares .audio_codec = CONFIG_LIBMP3LAME ? AV_CODEC_ID_MP3 : AV_CODEC_ID_ADPCM_SWF and .video_codec = AV_CODEC_ID_FLV1. To get H.264 video and AAC audio in an FLV file, the program in Section 9 therefore selects AV_CODEC_ID_H264 and AV_CODEC_ID_AAC explicitly.

- int (*write_header)(struct AVFormatContext *); // writes the container header

- int (*write_packet)(struct AVFormatContext *, AVPacket *pkt); // writes one packet. If AVFMT_ALLOW_FLUSH is set in flags, pkt may be NULL (flush).

- int (*write_trailer)(struct AVFormatContext *); // writes the container trailer

- int (*interleave_packet)(struct AVFormatContext *, AVPacket *out, AVPacket *in, int flush); // custom interleaving; if NULL, libavformat interleaves packets by dts

- int (*control_message)(struct AVFormatContext *s, int type, void *data, size_t data_size); // lets the application send messages to the device

- int (*write_uncoded_frame)(struct AVFormatContext *, int stream_index, AVFrame **frame, unsigned flags); // writes an uncoded AVFrame

- int (*init)(struct AVFormatContext *); // initializes the format. It may allocate data here and set any AVFormatContext or AVStream parameters that must be set before packets are sent.

- void (*deinit)(struct AVFormatContext *); // deinitializes the format

- int (*check_bitstream)(struct AVFormatContext *, const AVPacket *pkt); // sets up any necessary bitstream filtering and extracts any extra data needed for the global header
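These callbacks are what let one generic API serve every container: avformat_write_header(), av_interleaved_write_frame() and av_write_trailer() just follow the function pointers of s->oformat. The self-contained sketch below models that dispatch with a hypothetical toy muxer. ToyOutputFormat, ToyFormatContext and all toy_* functions are invented for illustration and are far simpler than the real structs (the output buffer stands in for the AVIOContext).

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

struct ToyFormatContext;

/* Hypothetical, heavily reduced AVOutputFormat: a name plus callbacks. */
typedef struct ToyOutputFormat {
    const char *name;
    int (*write_header)(struct ToyFormatContext *s);
    int (*write_packet)(struct ToyFormatContext *s, const char *payload);
    int (*write_trailer)(struct ToyFormatContext *s);
} ToyOutputFormat;

typedef struct ToyFormatContext {
    const ToyOutputFormat *oformat;
    char out[256];                 /* stands in for the output file */
} ToyFormatContext;

static int append(ToyFormatContext *s, const char *text) {
    size_t used = strlen(s->out);
    int n = snprintf(s->out + used, sizeof(s->out) - used, "%s", text);
    return (n < 0 || (size_t)n >= sizeof(s->out) - used) ? -1 : 0;
}

/* The "container": each callback records what it was asked to write. */
static int toy_write_header(ToyFormatContext *s) { return append(s, "[HDR]"); }
static int toy_write_packet(ToyFormatContext *s, const char *p) {
    return (append(s, "[PKT:") || append(s, p) || append(s, "]")) ? -1 : 0;
}
static int toy_write_trailer(ToyFormatContext *s) { return append(s, "[TRL]"); }

static const ToyOutputFormat toy_muxer = {
    .name          = "toy",
    .write_header  = toy_write_header,
    .write_packet  = toy_write_packet,
    .write_trailer = toy_write_trailer,
};

/* Generic layer: knows nothing about the container, only the table. */
static int generic_write_header(ToyFormatContext *s) {
    assert(s->oformat != NULL);
    return s->oformat->write_header ? s->oformat->write_header(s) : 0;
}
static int generic_write_packet(ToyFormatContext *s, const char *payload) {
    return s->oformat->write_packet(s, payload);
}
static int generic_write_trailer(ToyFormatContext *s) {
    return s->oformat->write_trailer ? s->oformat->write_trailer(s) : 0;
}

/* Usage: header, packets, trailer, in the same order as the real API. */
static const char *run_toy_mux(void) {
    static ToyFormatContext ctx;
    memset(&ctx, 0, sizeof ctx);
    ctx.oformat = &toy_muxer;
    if (generic_write_header(&ctx) < 0 ||
        generic_write_packet(&ctx, "v0") < 0 ||
        generic_write_packet(&ctx, "a0") < 0 ||
        generic_write_trailer(&ctx) < 0)
        return NULL;
    return ctx.out;
}
```

Swapping in a different ToyOutputFormat changes the bytes written without touching the generic functions, which is the same design that lets ff_flv_muxer plug flv_write_header() and friends into libavformat.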

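5. FFmpeg function: avformat_new_stream

- An AVStream is one stream (track) inside the container. For example, when storing an H.264 stream and an AAC stream in an MP4 file, the file needs two streams: one for the H.264 video and one for the AAC audio (assuming each input contains only a single stream).

```c
AVStream *avformat_new_stream(AVFormatContext *s, const AVCodec *c);
```

- avformat_new_stream() creates a new stream in the AVFormatContext.

1. Related structs

- AVFormatContext:
  - unsigned int nb_streams; the number of streams.
  - AVStream **streams; the array of streams.
- AVStream:
  - int index; the stream's index in AVFormatContext.streams.

- After avformat_new_stream() returns, the new AVStream has been appended to the AVFormatContext with its index already set. You can then fill in the stream's parameters, e.g. codec_id, format, bit_rate, width and height; these live in st->codecpar and are usually copied from the encoder with avcodec_parameters_from_context().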
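A simplified model of the bookkeeping described above, assuming only what this section states: the stream is appended to streams, nb_streams grows by one, and index records the position. ToyContext, ToyStream and toy_new_stream() are hypothetical; the real avformat_new_stream() does considerably more, such as allocating codecpar and setting defaults.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct ToyStream {
    int index;       /* position in ctx->streams, like AVStream.index */
    int codec_id;    /* filled in by the caller afterwards */
} ToyStream;

typedef struct ToyContext {
    unsigned int nb_streams;
    ToyStream **streams;
} ToyContext;

/* Append a zeroed stream and set its index; NULL on allocation failure. */
static ToyStream *toy_new_stream(ToyContext *s) {
    assert(s != NULL);
    ToyStream **grown = realloc(s->streams, (s->nb_streams + 1) * sizeof(*grown));
    if (!grown)
        return NULL;
    s->streams = grown;

    ToyStream *st = calloc(1, sizeof(*st));
    if (!st)
        return NULL;
    st->index = (int)s->nb_streams;        /* index is taken before the count grows */
    s->streams[s->nb_streams++] = st;
    return st;
}

static void toy_free_context(ToyContext *s) {
    for (unsigned int i = 0; i < s->nb_streams; i++)
        free(s->streams[i]);
    free(s->streams);
    s->streams = NULL;
    s->nb_streams = 0;
}

/* Usage: add a video stream, then an audio stream, as add_stream() does twice. */
static int demo_second_index(void) {
    ToyContext ctx = {0, NULL};
    ToyStream *video = toy_new_stream(&ctx);
    ToyStream *audio = toy_new_stream(&ctx);
    int result = (video && audio && ctx.nb_streams == 2 && video->index == 0 &&
                  ctx.streams[audio->index] == audio) ? audio->index : -1;
    toy_free_context(&ctx);
    return result;
}
```

This is also why the example can write ost->st->id = oc->nb_streams - 1 right after creating a stream: the new stream is always the last one in the array.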

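6. FFmpeg function: av_interleaved_write_frame

- Prototype: int av_interleaved_write_frame(AVFormatContext *s, AVPacket *pkt);

- Description: writes a packet to the output media file while ensuring correct interleaving (keeping packet dts increasing). The function buffers packets internally as needed so that the packets in the output file are interleaved in increasing dts order. If you do the interleaving yourself, call av_write_frame() instead.

1. Parameters

- s: the media file (muxer) context

- pkt: the packet to write
  - If the packet is reference-counted, this function takes ownership of the reference (think of it as moving the reference) and releases it internally when appropriate. After the function returns, the caller must not access the data through this reference. If the packet is not reference-counted, libavformat makes a copy.
  - This parameter may be NULL (at any time, not only at the end) to flush the interleaving queues.
  - The packet's stream_index field must be set to the index of the corresponding stream in s->streams.
  - The timestamps (pts, dts) must be set to correct values in the stream's time base (unless the output format is flagged with AVFMT_NOTIMESTAMPS, in which case they may be set to AV_NOPTS_VALUE).
  - The dts of subsequent packets in the same stream must be strictly increasing (unless the output format is flagged with AVFMT_TS_NONSTRICT, in which case they only need to be non-decreasing). duration should also be set if known.

- Return value: 0 on success, a negative AVERROR on error. libavformat always frees the packet, even if this function fails.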
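The ordering guarantee can be modeled as merging per-stream packet lists by dts converted to a common clock. The sketch below is a hypothetical illustration, not libavformat's buffering code: it assumes each stream's packets already arrive in increasing dts order and compares timestamps from different time bases by cross-multiplication, assuming the products fit in 64 bits. Rational, ToyPacket, cmp_ts() and interleave() are illustrative names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

typedef struct Rational { int num, den; } Rational;            /* like AVRational */
typedef struct ToyPacket { int stream_index; int64_t dts; } ToyPacket;

/* Sign of a*ta - b*tb; assumes no int64_t overflow. */
static int cmp_ts(int64_t a, Rational ta, int64_t b, Rational tb) {
    int64_t lhs = a * ta.num * (int64_t)tb.den;
    int64_t rhs = b * tb.num * (int64_t)ta.den;
    return (lhs > rhs) - (lhs < rhs);
}

/* Merge two dts-ordered lists into `out` in time order; ties go to list a. */
static size_t interleave(const ToyPacket *a, size_t na, Rational tba,
                         const ToyPacket *b, size_t nb, Rational tbb,
                         ToyPacket *out) {
    size_t i = 0, j = 0, k = 0;
    assert(out != NULL);
    while (i < na || j < nb) {
        if (j == nb || (i < na && cmp_ts(a[i].dts, tba, b[j].dts, tbb) <= 0))
            out[k++] = a[i++];
        else
            out[k++] = b[j++];
    }
    return k;
}

/* Usage: 3 video packets at 25 fps vs 5 AAC packets (1024 samples, 44.1 kHz). */
static const char *demo_interleave_order(void) {
    static char order[16];
    const ToyPacket video[] = {{0, 0}, {0, 1}, {0, 2}};
    const ToyPacket audio[] = {{1, 0}, {1, 1024}, {1, 2048}, {1, 3072}, {1, 4096}};
    ToyPacket out[8];
    size_t n = interleave(video, 3, (Rational){1, 25},
                          audio, 5, (Rational){1, 44100}, out);
    for (size_t k = 0; k < n; k++)
        order[k] = out[k].stream_index == 0 ? 'V' : 'A';
    order[n] = '\0';
    return order;
}
```

Video packets are 40 ms apart and AAC packets about 23.2 ms apart, so roughly two audio packets fall between consecutive video packets ("VAAVAAVA"), which is the kind of alternation to expect in the muxed FLV file.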

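7. FFmpeg function: av_compare_ts

```c
int av_compare_ts(int64_t ts_a, AVRational tb_a, int64_t ts_b, AVRational tb_b);
```

- Compares two timestamps that are expressed in different time bases. Return value:
  - -1: ts_a is before ts_b
  - 1: ts_a is after ts_b
  - 0: ts_a and ts_b refer to the same point in time

- In pseudocode, after converting both timestamps to seconds (a = ts_a * tb_a, b = ts_b * tb_b): return a == b ? 0 : a < b ? -1 : 1. FFmpeg does this with integer arithmetic rather than floating point.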
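A sketch of the comparison, assuming the products fit in 64 bits (the real av_compare_ts() falls back to av_rescale_rnd() when they might not). Rational stands in for AVRational, and frames_before() is a hypothetical helper that applies the same stop test the example's get_video_frame() and get_audio_frame() use. Note that the example passes STREAM_DURATION (5.0) to an int64_t parameter, so the limit is exactly 5 seconds.

```c
#include <assert.h>
#include <stdint.h>

typedef struct Rational { int num, den; } Rational;   /* stands in for AVRational */

/* Compare ts_a*tb_a with ts_b*tb_b by cross-multiplying:
 * -1 if a is earlier, 1 if later, 0 if equal. Assumes no int64_t overflow. */
static int compare_ts(int64_t ts_a, Rational tb_a, int64_t ts_b, Rational tb_b) {
    assert(tb_a.den > 0 && tb_b.den > 0);
    int64_t a = ts_a * tb_a.num * (int64_t)tb_b.den;
    int64_t b = ts_b * tb_b.num * (int64_t)tb_a.den;
    return (a > b) - (a < b);
}

/* Number of frames generated while next_pts (in time_base) is still
 * before duration_sec seconds, advancing pts by pts_step per frame. */
static int64_t frames_before(int64_t duration_sec, Rational time_base,
                             int64_t pts_step) {
    int64_t count = 0;
    for (int64_t pts = 0;
         compare_ts(pts, time_base, duration_sec, (Rational){1, 1}) < 0;
         pts += pts_step)
        count++;
    return count;
}
```

Assuming 25 fps video and 44.1 kHz AAC with 1024-sample frames, this gives 125 video frames and 216 audio frames: the last audio frame starts at 215 × 1024 = 220160 samples, which is still before 5 × 44100 = 220500.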

8. FFmpeg timestamps in detail

- https://www.cnblogs.com/leisure_chn/p/10584910.html
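In this example each packet crosses two time bases: the encoder's (1/25 for video, 1/sample_rate for audio) and the stream's. The stream time_base set before avformat_write_header() is only a hint that the muxer may overwrite (FLV stores timestamps in milliseconds, i.e. 1/1000), which is why write_frame() calls av_packet_rescale_ts() before av_interleaved_write_frame(). The sketch below reproduces av_rescale_q()'s arithmetic (round to nearest, halfway cases away from zero) for small values; rescale_q() and Rational are hypothetical stand-ins, and unlike FFmpeg's av_rescale_rnd() it does not guard against 64-bit overflow.

```c
#include <assert.h>
#include <stdint.h>

typedef struct Rational { int num, den; } Rational;   /* stands in for AVRational */

/* a * bq / cq, rounded to nearest with ties away from zero (AV_ROUND_NEAR_INF). */
static int64_t rescale_q(int64_t a, Rational bq, Rational cq) {
    int64_t num = a * bq.num * (int64_t)cq.den;
    int64_t den = (int64_t)bq.den * cq.num;
    assert(den > 0);
    if (num >= 0)
        return (num + den / 2) / den;
    return -((-num + den / 2) / den);   /* mirror the rounding for negatives */
}
```

For instance, video pts 3 in 1/25 becomes 120 in 1/1000 (120 ms), and audio pts 1024 in 1/44100 (about 23.2 ms) becomes 23, so the rounding error stays below one millisecond per packet.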

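9. Code: muxing H.264 and AAC into FLV

The program below targets the FFmpeg 4.x API (avcodec_encode_video2(), avcodec_encode_audio2(), AVCodecContext.channel_layout), which later FFmpeg releases deprecate or remove, and it needs an FFmpeg build that includes an H.264 encoder such as libx264.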

```c
/*
 * Generate 5 seconds of synthetic H.264 video and AAC audio and mux them into
 * the file named on the command line (FLV if the extension is not recognized).
 * Adapted from FFmpeg's doc/examples/muxing.c, FFmpeg 4.x API.
 *
 * Build, e.g.:
 *   gcc mux_flv.c -o mux_flv $(pkg-config --cflags --libs libavformat \
 *       libavcodec libswscale libswresample libavutil) -lm
 */
#include <stdlib.h>
#include <stdio.h>
#include <string.h>
#include <math.h>

#include <libavutil/avassert.h>
#include <libavutil/channel_layout.h>
#include <libavutil/opt.h>
#include <libavutil/mathematics.h>
#include <libavutil/timestamp.h>
#include <libavformat/avformat.h>
#include <libswscale/swscale.h>
#include <libswresample/swresample.h>

#define STREAM_DURATION   5.0                /* seconds of media to generate */
#define STREAM_FRAME_RATE 25                 /* 25 images/s */
#define STREAM_PIX_FMT    AV_PIX_FMT_YUV420P
#define SCALE_FLAGS       SWS_BICUBIC

/* Everything needed to generate and encode one output stream. */
typedef struct OutputStream {
    AVStream *st;
    AVCodecContext *enc;

    int64_t next_pts;     /* pts of the next generated frame, in enc->time_base */
    int samples_count;    /* audio samples sent to the encoder so far */

    AVFrame *frame;       /* frame in the encoder's format */
    AVFrame *tmp_frame;   /* source frame before conversion (S16 audio / YUV420P video) */

    float t, tincr, tincr2;   /* sine generator state (audio only) */

    struct SwsContext *sws_ctx;
    struct SwrContext *swr_ctx;
} OutputStream;

static void log_packet(const AVFormatContext *fmt_ctx, const AVPacket *pkt) {
    AVRational *time_base = &fmt_ctx->streams[pkt->stream_index]->time_base;

    printf("pts:%s pts_time:%s dts:%s dts_time:%s duration:%s duration_time:%s stream_index:%d\n",
           av_ts2str(pkt->pts), av_ts2timestr(pkt->pts, time_base),
           av_ts2str(pkt->dts), av_ts2timestr(pkt->dts, time_base),
           av_ts2str(pkt->duration), av_ts2timestr(pkt->duration, time_base),
           pkt->stream_index);
}

/* Rescale packet timestamps from the encoder time base to the stream time base
 * (which the muxer may have replaced in avformat_write_header(), e.g. 1/1000
 * for FLV), then hand the packet to the interleaving writer. */
static int write_frame(AVFormatContext *fmt_ctx, const AVRational *time_base,
                       AVStream *st, AVPacket *pkt) {
    av_packet_rescale_ts(pkt, *time_base, st->time_base);
    pkt->stream_index = st->index;

    log_packet(fmt_ctx, pkt);
    return av_interleaved_write_frame(fmt_ctx, pkt);
}

/* Find the encoder, create the AVStream and an encoder context with this
 * example's default parameters. */
static void add_stream(OutputStream *ost, AVFormatContext *oc,
                       AVCodec **codec, enum AVCodecID codec_id) {
    AVCodecContext *codec_ctx;
    int i;

    *codec = avcodec_find_encoder(codec_id);
    if (!(*codec)) {
        fprintf(stderr, "Could not find encoder for '%s'\n",
                avcodec_get_name(codec_id));
        exit(1);
    }

    ost->st = avformat_new_stream(oc, NULL);
    if (!ost->st) {
        fprintf(stderr, "Could not allocate stream\n");
        exit(1);
    }
    ost->st->id = oc->nb_streams - 1;

    codec_ctx = avcodec_alloc_context3(*codec);
    if (!codec_ctx) {
        fprintf(stderr, "Could not alloc an encoding context\n");
        exit(1);
    }
    ost->enc = codec_ctx;

    switch ((*codec)->type) {
    case AVMEDIA_TYPE_AUDIO:
        codec_ctx->codec_id = codec_id;
        codec_ctx->sample_fmt = (*codec)->sample_fmts ?
                                (*codec)->sample_fmts[0] : AV_SAMPLE_FMT_FLTP;
        codec_ctx->bit_rate = 64000;

        /* prefer 44100 Hz if the encoder lists it */
        codec_ctx->sample_rate = 44100;
        if ((*codec)->supported_samplerates) {
            codec_ctx->sample_rate = (*codec)->supported_samplerates[0];
            for (i = 0; (*codec)->supported_samplerates[i]; i++) {
                if ((*codec)->supported_samplerates[i] == 44100)
                    codec_ctx->sample_rate = 44100;
            }
        }

        /* prefer stereo if the encoder lists it */
        codec_ctx->channel_layout = AV_CH_LAYOUT_STEREO;
        if ((*codec)->channel_layouts) {
            codec_ctx->channel_layout = (*codec)->channel_layouts[0];
            for (i = 0; (*codec)->channel_layouts[i]; i++) {
                if ((*codec)->channel_layouts[i] == AV_CH_LAYOUT_STEREO)
                    codec_ctx->channel_layout = AV_CH_LAYOUT_STEREO;
            }
        }
        codec_ctx->channels = av_get_channel_layout_nb_channels(codec_ctx->channel_layout);

        /* one tick per sample; set explicitly because the pts math below uses it */
        codec_ctx->time_base = (AVRational){1, codec_ctx->sample_rate};
        ost->st->time_base = codec_ctx->time_base;
        break;

    case AVMEDIA_TYPE_VIDEO:
        codec_ctx->codec_id = codec_id;
        codec_ctx->bit_rate = 400000;
        codec_ctx->width = 352;                  /* must be a multiple of two */
        codec_ctx->height = 288;
        codec_ctx->max_b_frames = 1;
        /* time base 1/fps: pts advances by 1 per frame */
        ost->st->time_base = (AVRational){1, STREAM_FRAME_RATE};
        codec_ctx->time_base = ost->st->time_base;
        codec_ctx->gop_size = STREAM_FRAME_RATE; /* keyframe interval: 25 frames (1 s) */
        codec_ctx->pix_fmt = STREAM_PIX_FMT;
        break;

    default:
        break;
    }

    /* FLV sets AVFMT_GLOBALHEADER: codec headers (extradata) go into the
     * container header instead of being repeated in the stream. */
    if (oc->oformat->flags & AVFMT_GLOBALHEADER)
        codec_ctx->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;
}

static AVFrame *alloc_audio_frame(enum AVSampleFormat sample_fmt,
                                  uint64_t channel_layout,
                                  int sample_rate, int nb_samples) {
    AVFrame *frame = av_frame_alloc();
    int ret;

    if (!frame) {
        fprintf(stderr, "Error allocating an audio frame\n");
        exit(1);
    }

    frame->format = sample_fmt;
    frame->channel_layout = channel_layout;
    frame->sample_rate = sample_rate;
    frame->nb_samples = nb_samples;

    if (nb_samples) {
        ret = av_frame_get_buffer(frame, 0);
        if (ret < 0) {
            fprintf(stderr, "Error allocating an audio buffer\n");
            exit(1);
        }
    }

    return frame;
}

static void open_audio(AVFormatContext *oc, AVCodec *codec, OutputStream *ost,
                       AVDictionary *opt_arg) {
    AVCodecContext *codec_ctx = ost->enc;
    AVDictionary *opt = NULL;
    int nb_samples;
    int ret;

    av_dict_copy(&opt, opt_arg, 0);
    ret = avcodec_open2(codec_ctx, codec, &opt);
    av_dict_free(&opt);
    if (ret < 0) {
        fprintf(stderr, "Could not open audio codec: %s\n", av_err2str(ret));
        exit(1);
    }

    /* sine generator: starts at 110 Hz, pitch rises by 110 Hz per second */
    ost->t = 0;
    ost->tincr = 2 * M_PI * 110.0 / codec_ctx->sample_rate;
    ost->tincr2 = 2 * M_PI * 110.0 / codec_ctx->sample_rate / codec_ctx->sample_rate;

    /* AAC consumes fixed-size frames (frame_size, typically 1024 samples) */
    if (codec_ctx->codec->capabilities & AV_CODEC_CAP_VARIABLE_FRAME_SIZE)
        nb_samples = 10000;
    else
        nb_samples = codec_ctx->frame_size;

    ost->frame = alloc_audio_frame(codec_ctx->sample_fmt, codec_ctx->channel_layout,
                                   codec_ctx->sample_rate, nb_samples);
    ost->tmp_frame = alloc_audio_frame(AV_SAMPLE_FMT_S16, codec_ctx->channel_layout,
                                       codec_ctx->sample_rate, nb_samples);

    /* copy the encoder parameters into the stream for the muxer */
    ret = avcodec_parameters_from_context(ost->st->codecpar, codec_ctx);
    if (ret < 0) {
        fprintf(stderr, "Could not copy the stream parameters\n");
        exit(1);
    }

    /* resampler: only converts S16 to the encoder's sample format */
    ost->swr_ctx = swr_alloc();
    if (!ost->swr_ctx) {
        fprintf(stderr, "Could not allocate resampler context\n");
        exit(1);
    }

    av_opt_set_int(ost->swr_ctx, "in_channel_count", codec_ctx->channels, 0);
    av_opt_set_int(ost->swr_ctx, "in_sample_rate", codec_ctx->sample_rate, 0);
    av_opt_set_sample_fmt(ost->swr_ctx, "in_sample_fmt", AV_SAMPLE_FMT_S16, 0);
    av_opt_set_int(ost->swr_ctx, "out_channel_count", codec_ctx->channels, 0);
    av_opt_set_int(ost->swr_ctx, "out_sample_rate", codec_ctx->sample_rate, 0);
    av_opt_set_sample_fmt(ost->swr_ctx, "out_sample_fmt", codec_ctx->sample_fmt, 0);

    if ((ret = swr_init(ost->swr_ctx)) < 0) {
        fprintf(stderr, "Failed to initialize the resampling context\n");
        exit(1);
    }
}

/* Fill one frame of interleaved S16 samples, or return NULL once
 * STREAM_DURATION seconds have been generated. */
static AVFrame *get_audio_frame(OutputStream *ost) {
    AVFrame *frame = ost->tmp_frame;
    int j, i, v;
    int16_t *q = (int16_t *) frame->data[0];

    if (av_compare_ts(ost->next_pts, ost->enc->time_base,
                      STREAM_DURATION, (AVRational){1, 1}) >= 0)
        return NULL;

    for (j = 0; j < frame->nb_samples; j++) {
        v = (int) (sin(ost->t) * 10000);
        for (i = 0; i < ost->enc->channels; i++)
            *q++ = v;
        ost->t += ost->tincr;
        ost->tincr += ost->tincr2;
    }

    frame->pts = ost->next_pts;
    ost->next_pts += frame->nb_samples;

    return frame;
}

/* Encode one audio frame and write the resulting packet.
 * Returns 1 when encoding is finished, 0 otherwise. */
static int write_audio_frame(AVFormatContext *oc, OutputStream *ost) {
    AVCodecContext *codec_ctx = ost->enc;
    AVPacket pkt = {0};   /* data and size must be 0 */
    AVFrame *frame;
    int ret;
    int got_packet;
    int dst_nb_samples;

    av_init_packet(&pkt);

    frame = get_audio_frame(ost);

    if (frame) {
        /* same input and output rate, so the sample count does not change */
        dst_nb_samples = av_rescale_rnd(swr_get_delay(ost->swr_ctx, codec_ctx->sample_rate) + frame->nb_samples,
                                        codec_ctx->sample_rate, codec_ctx->sample_rate, AV_ROUND_UP);
        av_assert0(dst_nb_samples == frame->nb_samples);

        /* the encoder may still reference the previous frame's buffers */
        ret = av_frame_make_writable(ost->frame);
        if (ret < 0)
            exit(1);

        ret = swr_convert(ost->swr_ctx,
                          ost->frame->data, dst_nb_samples,
                          (const uint8_t **) frame->data, frame->nb_samples);
        if (ret < 0) {
            fprintf(stderr, "Error while converting\n");
            exit(1);
        }
        frame = ost->frame;

        frame->pts = av_rescale_q(ost->samples_count, (AVRational){1, codec_ctx->sample_rate},
                                  codec_ctx->time_base);
        ost->samples_count += dst_nb_samples;
    }

    /* a NULL frame flushes the encoder */
    ret = avcodec_encode_audio2(codec_ctx, &pkt, frame, &got_packet);
    if (ret < 0) {
        fprintf(stderr, "Error encoding audio frame: %s\n", av_err2str(ret));
        exit(1);
    }

    if (got_packet) {
        ret = write_frame(oc, &codec_ctx->time_base, ost->st, &pkt);
        if (ret < 0) {
            fprintf(stderr, "Error while writing audio frame: %s\n",
                    av_err2str(ret));
            exit(1);
        }
    }

    return (frame || got_packet) ? 0 : 1;
}

static AVFrame *alloc_picture(enum AVPixelFormat pix_fmt, int width, int height) {
    AVFrame *picture;
    int ret;

    picture = av_frame_alloc();
    if (!picture)
        return NULL;

    picture->format = pix_fmt;
    picture->width = width;
    picture->height = height;

    ret = av_frame_get_buffer(picture, 32);
    if (ret < 0) {
        fprintf(stderr, "Could not allocate frame data.\n");
        exit(1);
    }

    return picture;
}

static void open_video(AVFormatContext *oc, AVCodec *codec, OutputStream *ost,
                       AVDictionary *opt_arg) {
    int ret;
    AVCodecContext *codec_ctx = ost->enc;
    AVDictionary *opt = NULL;

    av_dict_copy(&opt, opt_arg, 0);
    ret = avcodec_open2(codec_ctx, codec, &opt);
    av_dict_free(&opt);
    if (ret < 0) {
        fprintf(stderr, "Could not open video codec: %s\n", av_err2str(ret));
        exit(1);
    }

    ost->frame = alloc_picture(codec_ctx->pix_fmt, codec_ctx->width, codec_ctx->height);
    if (!ost->frame) {
        fprintf(stderr, "Could not allocate video frame\n");
        exit(1);
    }

    /* a YUV420P source picture is only needed if the encoder wants another format */
    ost->tmp_frame = NULL;
    if (codec_ctx->pix_fmt != AV_PIX_FMT_YUV420P) {
        ost->tmp_frame = alloc_picture(AV_PIX_FMT_YUV420P, codec_ctx->width, codec_ctx->height);
        if (!ost->tmp_frame) {
            fprintf(stderr, "Could not allocate temporary picture\n");
            exit(1);
        }
    }

    /* copy the encoder parameters into the stream for the muxer */
    ret = avcodec_parameters_from_context(ost->st->codecpar, codec_ctx);
    if (ret < 0) {
        fprintf(stderr, "Could not copy the stream parameters\n");
        exit(1);
    }
}

/* Synthetic test pattern: gradients that shift with frame_index. */
static void fill_yuv_image(AVFrame *pict, int frame_index,
                           int width, int height) {
    int x, y, i;

    i = frame_index;

    /* Y */
    for (y = 0; y < height; y++)
        for (x = 0; x < width; x++)
            pict->data[0][y * pict->linesize[0] + x] = x + y + i * 3;

    /* Cb and Cr */
    for (y = 0; y < height / 2; y++) {
        for (x = 0; x < width / 2; x++) {
            pict->data[1][y * pict->linesize[1] + x] = 128 + y + i * 2;
            pict->data[2][y * pict->linesize[2] + x] = 64 + x + i * 5;
        }
    }
}

static AVFrame *get_video_frame(OutputStream *ost) {
    AVCodecContext *codec_ctx = ost->enc;

    /* stop after STREAM_DURATION seconds */
    if (av_compare_ts(ost->next_pts, codec_ctx->time_base,
                      STREAM_DURATION, (AVRational){1, 1}) >= 0)
        return NULL;

    /* the encoder may still reference the previous frame's buffers */
    if (av_frame_make_writable(ost->frame) < 0)
        exit(1);

    if (codec_ctx->pix_fmt != AV_PIX_FMT_YUV420P) {
        if (!ost->sws_ctx) {
            ost->sws_ctx = sws_getContext(codec_ctx->width, codec_ctx->height,
                                          AV_PIX_FMT_YUV420P,
                                          codec_ctx->width, codec_ctx->height,
                                          codec_ctx->pix_fmt,
                                          SCALE_FLAGS, NULL, NULL, NULL);
            if (!ost->sws_ctx) {
                fprintf(stderr,
                        "Could not initialize the conversion context\n");
                exit(1);
            }
        }
        fill_yuv_image(ost->tmp_frame, ost->next_pts, codec_ctx->width, codec_ctx->height);
        sws_scale(ost->sws_ctx, (const uint8_t *const *) ost->tmp_frame->data,
                  ost->tmp_frame->linesize, 0, codec_ctx->height, ost->frame->data,
                  ost->frame->linesize);
    } else {
        fill_yuv_image(ost->frame, ost->next_pts, codec_ctx->width, codec_ctx->height);
    }

    ost->frame->pts = ost->next_pts++;

    return ost->frame;
}

/* Encode one video frame and write the resulting packet.
 * Returns 1 when encoding is finished, 0 otherwise. */
static int write_video_frame(AVFormatContext *oc, OutputStream *ost) {
    int ret;
    AVCodecContext *codec_ctx = ost->enc;
    AVFrame *frame;
    int got_packet = 0;
    AVPacket pkt = {0};

    frame = get_video_frame(ost);

    av_init_packet(&pkt);

    /* a NULL frame flushes the encoder (delayed B-frames) */
    ret = avcodec_encode_video2(codec_ctx, &pkt, frame, &got_packet);
    if (ret < 0) {
        fprintf(stderr, "Error encoding video frame: %s\n", av_err2str(ret));
        exit(1);
    }

    if (got_packet) {
        ret = write_frame(oc, &codec_ctx->time_base, ost->st, &pkt);
    } else {
        ret = 0;
    }

    if (ret < 0) {
        fprintf(stderr, "Error while writing video frame: %s\n", av_err2str(ret));
        exit(1);
    }

    return (frame || got_packet) ? 0 : 1;
}

static void close_stream(AVFormatContext *oc, OutputStream *ost) {
    avcodec_free_context(&ost->enc);
    av_frame_free(&ost->frame);
    av_frame_free(&ost->tmp_frame);
    sws_freeContext(ost->sws_ctx);
    swr_free(&ost->swr_ctx);
}

int main(int argc, char **argv) {
    OutputStream video_st = {0}, audio_st = {0};
    const char *filename;
    const AVOutputFormat *fmt;
    AVFormatContext *oc;
    AVCodec *audio_codec, *video_codec;
    int ret;
    int have_video = 0, have_audio = 0;
    int encode_video = 0, encode_audio = 0;
    AVDictionary *opt = NULL;
    int i;

    if (argc < 2) {
        printf("usage: %s output_file\n"
               "API example program to output a media file with libavformat.\n"
               "This program generates a synthetic audio and video stream, encodes and\n"
               "muxes them into a file named output_file.\n"
               "The output format is automatically guessed according to the file extension.\n"
               "Raw images can also be output by using '%%d' in the filename.\n"
               "\n", argv[0]);
        return 1;
    }

    filename = argv[1];
    /* optional "-flags VALUE" / "-fflags VALUE" pairs are passed on as options */
    for (i = 2; i + 1 < argc; i += 2) {
        if (!strcmp(argv[i], "-flags") || !strcmp(argv[i], "-fflags"))
            av_dict_set(&opt, argv[i] + 1, argv[i + 1], 0);
    }

    /* guess the muxer from the file extension, falling back to FLV */
    avformat_alloc_output_context2(&oc, NULL, NULL, filename);
    if (!oc) {
        printf("Could not deduce output format from file extension: using flv.\n");
        avformat_alloc_output_context2(&oc, NULL, "flv", filename);
    }
    if (!oc)
        return 1;

    fmt = oc->oformat;

    /* The FLV muxer defaults to FLV1 video and MP3/ADPCM_SWF audio, so request
     * H.264 and AAC explicitly. Passing the codec IDs to add_stream() avoids
     * writing to *oc->oformat, which is FFmpeg's shared muxer description. */
    add_stream(&video_st, oc, &video_codec, AV_CODEC_ID_H264);
    have_video = 1;
    encode_video = 1;

    add_stream(&audio_st, oc, &audio_codec, AV_CODEC_ID_AAC);
    have_audio = 1;
    encode_audio = 1;

    /* open the encoders and allocate the frame buffers */
    if (have_video)
        open_video(oc, video_codec, &video_st, opt);
    if (have_audio)
        open_audio(oc, audio_codec, &audio_st, opt);

    av_dump_format(oc, 0, filename, 1);

    /* open the output file, unless the muxer does its own I/O */
    if (!(fmt->flags & AVFMT_NOFILE)) {
        ret = avio_open(&oc->pb, filename, AVIO_FLAG_WRITE);
        if (ret < 0) {
            fprintf(stderr, "Could not open '%s': %s\n", filename,
                    av_err2str(ret));
            return 1;
        }
    }

    /* step 1: write the file header */
    ret = avformat_write_header(oc, &opt);
    if (ret < 0) {
        fprintf(stderr, "Error occurred when opening output file: %s\n",
                av_err2str(ret));
        return 1;
    }

    /* step 2: always encode whichever stream is earliest in time, so packets
     * reach av_interleaved_write_frame() roughly in order */
    while (encode_video || encode_audio) {
        if (encode_video &&
            (!encode_audio || av_compare_ts(video_st.next_pts, video_st.enc->time_base,
                                            audio_st.next_pts, audio_st.enc->time_base) <= 0)) {
            printf("\nwrite_video_frame\n");
            encode_video = !write_video_frame(oc, &video_st);
        } else {
            printf("\nwrite_audio_frame\n");
            encode_audio = !write_audio_frame(oc, &audio_st);
        }
    }

    /* step 3: write the trailer (this also flushes packets still queued for interleaving) */
    av_write_trailer(oc);

    if (have_video)
        close_stream(oc, &video_st);
    if (have_audio)
        close_stream(oc, &audio_st);

    if (!(fmt->flags & AVFMT_NOFILE))
        avio_closep(&oc->pb);

    avformat_free_context(oc);
    av_dict_free(&opt);

    return 0;
}
```
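The audio generator in open_audio() and get_audio_frame() is easy to misread: tincr is the per-sample phase step of a 110 Hz sine, and tincr2 is added to tincr on every sample, so after one second (sample_rate samples) the step has grown by 2π·110/sample_rate, i.e. the pitch has risen by 110 Hz. Over the 5-second example the tone therefore sweeps from 110 Hz to roughly 660 Hz. The sketch below checks that arithmetic without generating samples; Tone and its functions are hypothetical, and it uses double where the example uses float.

```c
#include <assert.h>

#define TONE_PI 3.14159265358979323846   /* local constant; M_PI is not ISO C */

/* Hypothetical mirror of OutputStream's t, tincr and tincr2 fields. */
typedef struct Tone { double t, tincr, tincr2; } Tone;

static void tone_init(Tone *tone, int sample_rate) {
    assert(sample_rate > 0);
    tone->t = 0.0;
    tone->tincr = 2 * TONE_PI * 110.0 / sample_rate;                     /* 110 Hz */
    tone->tincr2 = 2 * TONE_PI * 110.0 / sample_rate / sample_rate;      /* sweep rate */
}

/* The same per-sample phase updates as get_audio_frame(), minus sin(). */
static void tone_advance(Tone *tone, long n) {
    for (long j = 0; j < n; j++) {
        tone->t += tone->tincr;
        tone->tincr += tone->tincr2;
    }
}

/* Instantaneous frequency in Hz implied by the current phase step. */
static double tone_frequency(const Tone *tone, int sample_rate) {
    return tone->tincr * sample_rate / (2 * TONE_PI);
}

/* Usage: frequency after running the generator for `seconds` seconds. */
static double demo_frequency_after(int seconds, int sample_rate) {
    Tone tone;
    tone_init(&tone, sample_rate);
    tone_advance(&tone, (long)seconds * sample_rate);
    return tone_frequency(&tone, sample_rate);
}
```

Because the increase is applied to the phase step rather than to the frequency directly, the sweep is linear in time, which gives an easily recognizable rising tone when checking the muxed file.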