A Simple Introduction to CUDA Programming: Advanced CUDA Programming Basics (C++ programming)

- Advanced CUDA Programming Basics (C++ programming)

- GPU Architecture

- CUDA Programming Basics

- Basic Code Structure

- CUDA Execution Model

- Case Study: Vector Add

- Optimization Examples

- Using SM Shared Memory

- Case Study: 1D Convolution

- Summary

Advanced CUDA Programming Basics (C++ programming)

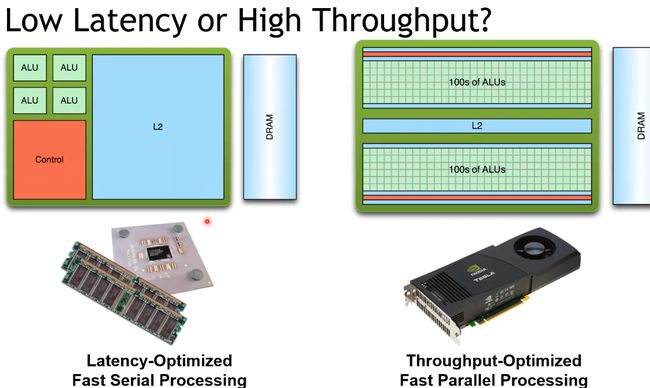

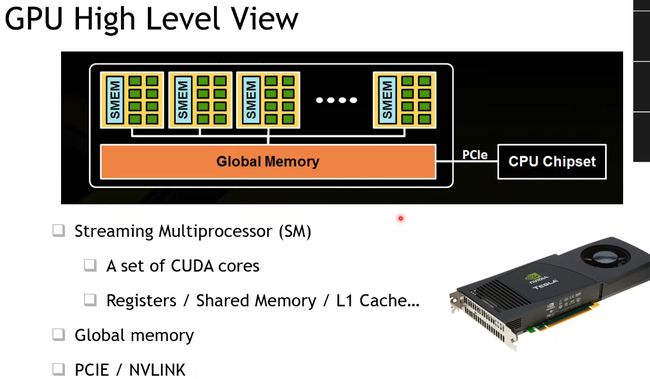

GPU Architecture

CUDA Programming Basics

Basic Code Structure

- The whole GPU executes the kernel function, and many threads run the same code;

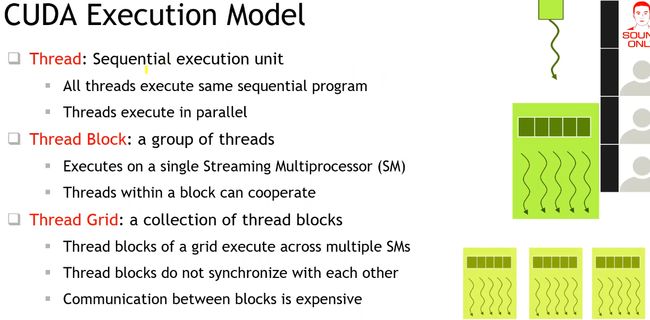

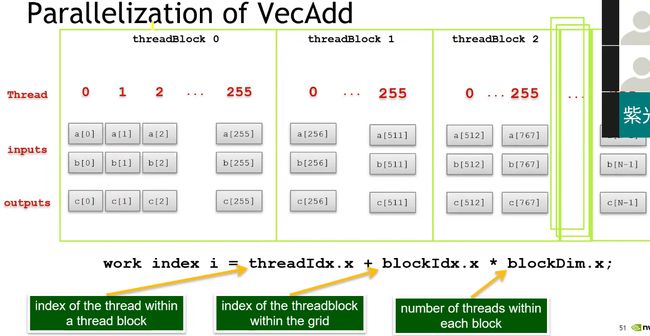

CUDA Execution Model

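- Different blocks execute completely asynchronously with respect to one another;

- Blocks can share data only through global device memory, which is comparatively expensive;

- All threads within a single block share the shared memory of a single SM, so they can cooperate;

- Each thread can obtain its global ID from predefined variables (blockIdx, blockDim, threadIdx);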
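As a minimal sketch of the last point, the kernel below computes each thread's global ID from the built-in variables `blockIdx`, `blockDim` and `threadIdx`. The names `writeGlobalId` and `globalIds` are hypothetical and exist only for illustration; the sketch assumes a 1D grid and `n > 0`.

```cuda
#include <vector>
#include <cuda_runtime.h>

// Each thread stores its own global ID at out[gid].
__global__ void writeGlobalId(int n, int *out) {
    // blockIdx, blockDim and threadIdx are built-in CUDA variables.
    int gid = blockIdx.x * blockDim.x + threadIdx.x;
    if (gid < n) out[gid] = gid;  // guard: the last block may be only partly used
}

// Hypothetical host helper: launches the kernel and returns the IDs.
// Assumes n > 0 and threadsPerBlock > 0.
std::vector<int> globalIds(int n, int threadsPerBlock) {
    std::vector<int> h_out(n, -1);
    int *d_out = nullptr;
    cudaMalloc(&d_out, n * sizeof(int));

    // Round up so that every element is covered by some thread.
    int blocks = (n + threadsPerBlock - 1) / threadsPerBlock;
    writeGlobalId<<<blocks, threadsPerBlock>>>(n, d_out);

    cudaMemcpy(h_out.data(), d_out, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d_out);
    return h_out;
}
```

Calling `globalIds(10, 4)` launches 3 blocks of 4 threads; the two surplus threads in the last block are masked out by the `gid < n` guard.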

Case Study: Vector Add

- The most important point: find the parts of the program that can be parallelized!

// file: vecAdd.cu

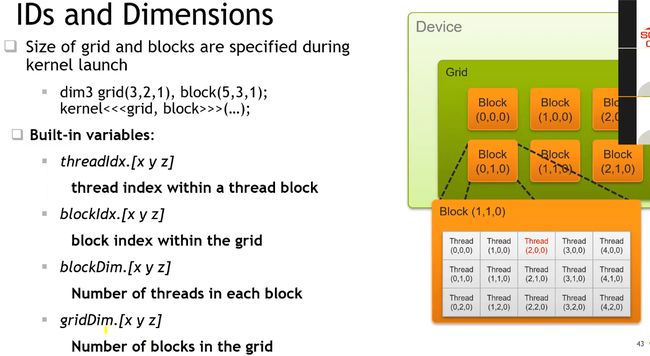

    #include <cstdio>

    // ... kernel definition and host-side setup ...

        // <<<...>>> specifies the launch configuration,
        // i.e. the number of thread blocks in the grid and the number of threads per block;
        // vecAdd<<<(N + 255) / 256, 256>>>(N, d_lhs, d_rhs, d_out);
        dim3 grid_conf{(N + 255) / 256, 1, 1}, block_conf{256, 1, 1};
        vecAdd<<<grid_conf, block_conf>>>(N, d_lhs, d_rhs, d_out);

        // Print any error reported for the launch
        cudaError_t err = cudaGetLastError();
        if (err != cudaSuccess)
            printf("%s\n", cudaGetErrorString(err));

        // Copy the result back to the CPU
        cudaMemcpy(h_out, d_out, N * sizeof(int), cudaMemcpyDeviceToHost);
        for (int i = 0; i < N; ++i) printf("%d\n", h_out[i]);
        return 0;
    }
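For reference, a complete vector-add round trip might look like the sketch below. It is a reconstruction under assumptions, not the original listing: the kernel body, the host wrapper `vecAddOnGpu`, and the use of `std::vector` are all hypothetical. Only the launch configuration, the error check, and the copy back follow the fragment above. It assumes both inputs are non-empty and of equal length.

```cuda
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// One thread per output element (assumed kernel body).
__global__ void vecAdd(int n, const int *lhs, const int *rhs, int *out) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) out[i] = lhs[i] + rhs[i];
}

// Hypothetical host wrapper: copy inputs to the GPU, launch, copy the result back.
std::vector<int> vecAddOnGpu(const std::vector<int> &lhs, const std::vector<int> &rhs) {
    const int N = static_cast<int>(lhs.size());
    const size_t bytes = N * sizeof(int);
    std::vector<int> h_out(N, 0);

    // cudaMalloc allocates in global device memory.
    int *d_lhs = nullptr, *d_rhs = nullptr, *d_out = nullptr;
    cudaMalloc(&d_lhs, bytes);
    cudaMalloc(&d_rhs, bytes);
    cudaMalloc(&d_out, bytes);
    cudaMemcpy(d_lhs, lhs.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_rhs, rhs.data(), bytes, cudaMemcpyHostToDevice);

    // Same launch configuration as in the fragment: 256 threads per block.
    dim3 grid_conf((N + 255) / 256, 1, 1), block_conf(256, 1, 1);
    vecAdd<<<grid_conf, block_conf>>>(N, d_lhs, d_rhs, d_out);

    // Report a launch error, if any.
    cudaError_t err = cudaGetLastError();
    if (err != cudaSuccess) printf("%s\n", cudaGetErrorString(err));

    // cudaMemcpy waits for the kernel to finish before copying.
    cudaMemcpy(h_out.data(), d_out, bytes, cudaMemcpyDeviceToHost);
    cudaFree(d_lhs);
    cudaFree(d_rhs);
    cudaFree(d_out);
    return h_out;
}
```

The wrapper uses parentheses rather than braces for `dim3` because `N` is not a compile-time constant here, and brace initialization would reject the narrowing conversion from `int` to `unsigned int`.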

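To compile:

nvcc vecAdd.cu -o vecAdd.exe

- Possible problems:

- The GPU results are all 0;

- The error "CUDA Error: no kernel image is available for execution on device" means the executable contains no code built for this GPU's architecture (compute capability), typically because the default target of the installed CUDA toolkit does not match the GPU;

- Solution:

- Specify the GPU architecture with the -arch option (replace sm_50 with the value that matches your GPU):

nvcc vecAdd.cu -o vecAdd.exe -arch=sm_50 -Wno-deprecated-gpu-targets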
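One way to find the right value for `-arch` is to ask the runtime for the device's compute capability. The sketch below uses `cudaGetDeviceProperties`; the helper name `archFlagForDevice` is hypothetical, and returning an empty string on failure is an arbitrary choice made for this example.

```cuda
#include <string>
#include <cuda_runtime.h>

// Hypothetical helper: builds an architecture name such as "sm_50"
// from the compute capability (major.minor) of the given device.
// Returns an empty string if the device cannot be queried.
std::string archFlagForDevice(int device) {
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, device) != cudaSuccess) return "";
    return "sm_" + std::to_string(prop.major) + std::to_string(prop.minor);
}
```

This query runs entirely on the host, so it should still work when kernels fail to launch because of an architecture mismatch. The returned string can be passed to nvcc as `-arch=<value>`.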

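Optimization Examples

Using SM Shared Memory

- cudaMalloc allocates in global device memory, whereas a thread's registers and a block's shared memory have far lower access latency than global memory;

- Note: all threads in a block share a single shared array a; there is one copy per block, not one per thread;

- The compiler keeps automatic (scalar) variables in registers whenever possible, rather than in stack space in off-chip device memory;

- Automatic variables that must be addressed (for example, arrays indexed at runtime) cannot be kept in registers either;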

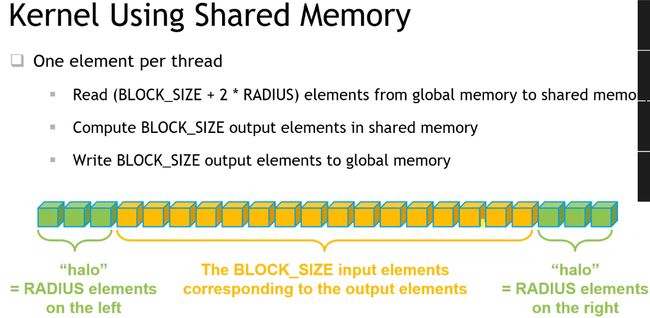

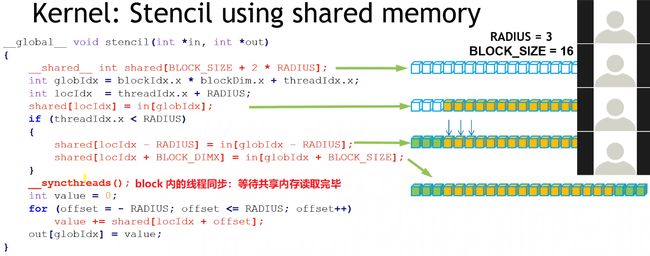
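The points above can be sketched with a small kernel that stages data in shared memory. This is an illustrative example, not code from the slides: the kernel `reverseInBlock`, the helper `reverseChunks`, and the value of `BLOCK_SIZE` are assumptions. It assumes the input length is a positive multiple of `BLOCK_SIZE`.

```cuda
#include <vector>
#include <cuda_runtime.h>

constexpr int BLOCK_SIZE = 256;  // assumed block size

// Reverses each BLOCK_SIZE-element chunk of data in place.
__global__ void reverseInBlock(int *data) {
    __shared__ int a[BLOCK_SIZE];        // one copy per block, shared by all its threads
    int t = threadIdx.x;                 // scalar automatic variable, normally held in a register
    int base = blockIdx.x * blockDim.x;  // first element handled by this block

    a[t] = data[base + t];               // global memory -> shared memory
    __syncthreads();                     // wait until the whole chunk is loaded
    data[base + t] = a[BLOCK_SIZE - 1 - t];  // read another thread's element from shared memory
}

// Hypothetical host helper; v.size() must be a positive multiple of BLOCK_SIZE.
std::vector<int> reverseChunks(std::vector<int> v) {
    const int n = static_cast<int>(v.size());
    int *d = nullptr;
    cudaMalloc(&d, n * sizeof(int));
    cudaMemcpy(d, v.data(), n * sizeof(int), cudaMemcpyHostToDevice);
    reverseInBlock<<<n / BLOCK_SIZE, BLOCK_SIZE>>>(d);
    cudaMemcpy(v.data(), d, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d);
    return v;
}
```

The `__syncthreads()` barrier is what makes the cooperation safe: no thread reads from `a` until every thread in the block has written its element.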

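Case Study: 1D Convolution

- Parallelism: each convolution output is independent of the others, so all outputs can be computed in parallel;

- Optimization: each thread reads (2*radius + 1) elements from device memory, but the elements touched by a whole block are just the gray region of in, so neighboring threads repeatedly read the same data; to speed up access, all the data a block needs can first be cached in that block's shared memory;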
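To make the redundancy concrete, here is a sketch of the unoptimized baseline in which every thread reads its whole window directly from global memory. The names `conv_1d_naive` and `conv1dNaive`, the value of `RADIUS`, and the choice of all-ones weights (a plain windowed sum) are assumptions for illustration. The input is assumed to be padded with `RADIUS` elements on each side.

```cuda
#include <vector>
#include <cuda_runtime.h>

constexpr int RADIUS = 3;  // assumed window radius

// Baseline: each thread issues 2*RADIUS + 1 global-memory loads.
// `in` points at the first valid element, so in[-RADIUS] is the first padded one.
__global__ void conv_1d_naive(int n, const int *in, int *out) {
    int gid = blockIdx.x * blockDim.x + threadIdx.x;
    if (gid >= n) return;
    int sum = 0;
    for (int off = -RADIUS; off <= RADIUS; ++off)
        sum += in[gid + off];  // neighboring threads re-read most of these values
    out[gid] = sum;
}

// Hypothetical host helper; `padded` holds nValid + 2*RADIUS elements, nValid > 0.
std::vector<int> conv1dNaive(const std::vector<int> &padded) {
    const int nValid = static_cast<int>(padded.size()) - 2 * RADIUS;
    std::vector<int> h_out(nValid);
    int *d_in = nullptr, *d_out = nullptr;
    cudaMalloc(&d_in, padded.size() * sizeof(int));
    cudaMalloc(&d_out, nValid * sizeof(int));
    cudaMemcpy(d_in, padded.data(), padded.size() * sizeof(int), cudaMemcpyHostToDevice);

    const int block = 256;
    // The input pointer is offset by RADIUS so that thread 0 is centered on the first valid element.
    conv_1d_naive<<<(nValid + block - 1) / block, block>>>(nValid, d_in + RADIUS, d_out);

    cudaMemcpy(h_out.data(), d_out, nValid * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d_in);
    cudaFree(d_out);
    return h_out;
}
```

With `RADIUS = 3`, two adjacent threads share six of their seven loads, which is the redundancy that the shared-memory version removes.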

    #include <cstdio>

    // ... kernel definition and host-side setup ...

        // <<<...>>> specifies the launch configuration,
        // i.e. the number of thread blocks in the grid and the number of threads per block;
        // Note: the start pointer d_in is offset by RADIUS elements
        conv_1d<<<(N_VALID + BLOCK_SIZE - 1) / BLOCK_SIZE, BLOCK_SIZE>>>(d_in + RADIUS, d_out);

        // Print any error reported for the launch
        cudaError_t err = cudaGetLastError();
        if (err != cudaSuccess)
            printf("%s\n", cudaGetErrorString(err));

        // Copy the result back to the CPU
        cudaMemcpy(h_out, d_out, N_VALID * sizeof(int), cudaMemcpyDeviceToHost);
        for (int i = 0; i < N_VALID; ++i) printf("%d ", h_out[i]);
        return 0;
    }
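A shared-memory version of the kernel could look like the sketch below. It is written under assumptions rather than recovered from the original: the weights are all ones, `RADIUS` and `BLOCK_SIZE` are example values, the host helper `conv1dShared` is hypothetical, and the kernel takes the output length `n` as an extra parameter (the launch above passes only two arguments). The kernel must be launched with exactly `BLOCK_SIZE` threads per block.

```cuda
#include <vector>
#include <cuda_runtime.h>

constexpr int RADIUS = 3;        // assumed window radius
constexpr int BLOCK_SIZE = 256;  // assumed threads per block

// `in` points at the first valid element; RADIUS padded elements precede and follow the data.
__global__ void conv_1d(int n, const int *in, int *out) {
    // The block's outputs plus a halo of RADIUS elements on each side.
    __shared__ int tile[BLOCK_SIZE + 2 * RADIUS];
    const int blockStart = blockIdx.x * blockDim.x;

    // Cooperative load: the threads stride over the tile, so each element is read once per block.
    for (int i = threadIdx.x; i < BLOCK_SIZE + 2 * RADIUS; i += blockDim.x) {
        const int src = blockStart - RADIUS + i;     // index relative to `in`
        tile[i] = (src < n + RADIUS) ? in[src] : 0;  // stay inside the padded input
    }
    __syncthreads();  // every thread reaches the barrier before any thread reads the tile

    const int gid = blockStart + threadIdx.x;
    if (gid < n) {
        int sum = 0;
        for (int k = 0; k <= 2 * RADIUS; ++k) sum += tile[threadIdx.x + k];
        out[gid] = sum;
    }
}

// Hypothetical host helper; `padded` holds nValid + 2*RADIUS elements, nValid > 0.
std::vector<int> conv1dShared(const std::vector<int> &padded) {
    const int nValid = static_cast<int>(padded.size()) - 2 * RADIUS;
    std::vector<int> h_out(nValid);
    int *d_in = nullptr, *d_out = nullptr;
    cudaMalloc(&d_in, padded.size() * sizeof(int));
    cudaMalloc(&d_out, nValid * sizeof(int));
    cudaMemcpy(d_in, padded.data(), padded.size() * sizeof(int), cudaMemcpyHostToDevice);
    conv_1d<<<(nValid + BLOCK_SIZE - 1) / BLOCK_SIZE, BLOCK_SIZE>>>(nValid, d_in + RADIUS, d_out);
    cudaMemcpy(h_out.data(), d_out, nValid * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d_in);
    cudaFree(d_out);
    return h_out;
}
```

The bounds check is deferred until after `__syncthreads()` on purpose: returning early from the surplus threads of the last block would leave part of the tile unloaded and skip the barrier.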