Integrating Hue 4.2.0 into Ambari 2.7.3


文章目录

- hue4.2.0在ambari2.7.3的集成

- 前言

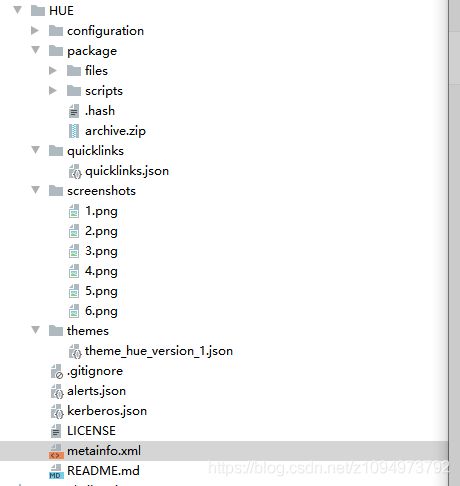

- 一、HUE代码的结构

- 二、代码引入

-

- 1.hue-env.xml

- 2.params.py

- 3.common.py

- 4.hue_server.py

- 5.pseudo-distributed.ini.xml

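Preface

The Hue-on-Ambari integrations available online target much older Ambari versions, and none of the code I tried deployed successfully. I therefore went through the relevant code, reorganized it, and after testing managed to integrate Hue into Ambari.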

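I. Structure of the Hue code

First, note two directories: configuration and package. The former holds the templates for the configuration options you need to set; the latter holds the code that carries out each action of a job.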

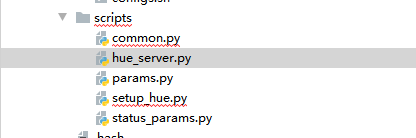

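The files under package/scripts are the core code that runs Hue's actions.

HueServer in hue_server.py is the core class. It inherits from Script and overrides methods such as install and start. Adjust these methods to your own production environment. Ambari provides Execute, which makes it easy to run shell commands, so operators can modify the steps to suit their needs.

params.py is a collection of parameters read from a dict; every parameter a job needs gets its value through this module.

The screenshot below shows one of the XML files in the configuration directory. Its contents appear on the Hue configuration page, under the matching file in the Advanced section. As the tag names suggest, name is the key, value is the value, and empty-value-valid states whether the value may be empty.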

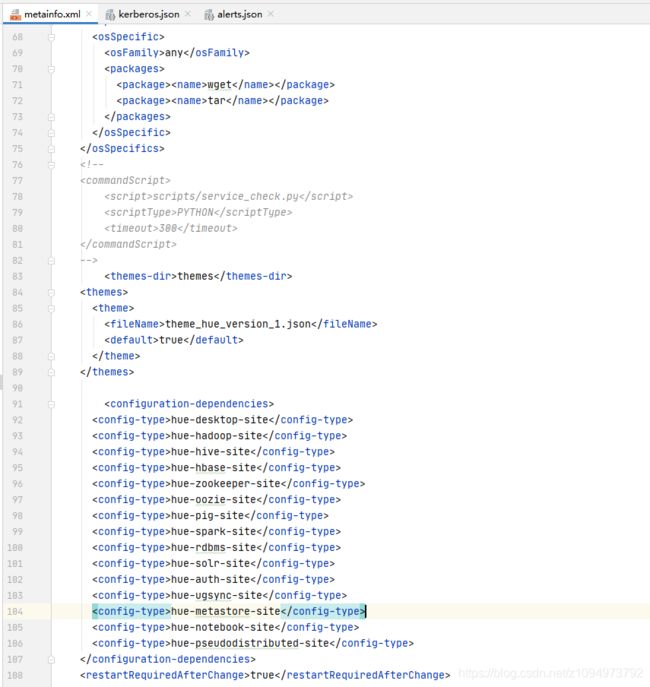
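To make the key/value reading of these files concrete, here is a minimal sketch that turns a configuration XML into a Python dict. The sample XML is a shortened, hypothetical file modelled on the hue_user property shown later, and `read_properties` is an illustrative helper, not part of Ambari.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample modelled on a configuration file; not copied from a real stack.
SAMPLE = """
<configuration>
  <property>
    <name>hue_user</name>
    <value>hue</value>
    <value-attributes>
      <empty-value-valid>false</empty-value-valid>
    </value-attributes>
  </property>
  <property>
    <name>hue_group</name>
    <value>hue</value>
  </property>
</configuration>
"""


def read_properties(xml_text):
    """Return {name: value} for every <property> directly under the root element."""
    root = ET.fromstring(xml_text.strip())
    result = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        # A missing or empty <value> is stored as an empty string.
        result[name] = prop.findtext("value") or ""
    return result


properties = read_properties(SAMPLE)
print(properties)
```

Only direct children of the root are read, so the nested property inside a user-groups block would not be mistaken for a top-level setting.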

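metainfo.xml records the service version, the other services it depends on, the Python scripts to run, the configuration files it depends on, and basic OS packages (for example wget and tar, which are installed with the package manager of the respective operating system).

Now for the key point. The key-value pairs from the XML files under configuration are passed to ambari-server. When a service needs to execute an action, the server writes all configuration variables of the current cluster into a command-*.json file, and ambari-agent reads this JSON file to obtain the variable values it needs to run the job handed over by the server. Because both Ambari and Hue change between versions, the number and names of the parameters Hue needs change too. The variable names and their number therefore have to be adapted to the versions in use, which means editing the files under Hue's configuration directory and the variables in params.py.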
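The lookup that params.py performs on this JSON can be pictured with a small sketch. The `lookup` function below only imitates the behaviour of a path-with-fallback helper; it is not Ambari's implementation, and the JSON is a heavily shortened, hypothetical stand-in for a real command-*.json file.

```python
import json

# Hypothetical, heavily shortened stand-in for a command-*.json file.
COMMAND_JSON = """
{
  "clusterLevelParams": {"dfs_type": "HDFS"},
  "clusterHostInfo": {"hive_server_hosts": ["node1.example.com"]},
  "configurations": {"hive-site": {"hive.server2.transport.mode": "binary"}}
}
"""


def lookup(config, path, fallback):
    """Walk a '/'-separated path through nested dicts; return fallback if a key is missing."""
    node = config
    for key in path.strip("/").split("/"):
        if not isinstance(node, dict) or key not in node:
            return fallback
        node = node[key]
    return node


config = json.loads(COMMAND_JSON)
dfs_type = lookup(config, "/clusterLevelParams/dfs_type", "HDFS")
hive_hosts = lookup(config, "/clusterHostInfo/hive_server_hosts", [])
# A path that does not exist in this sample simply yields the fallback.
absent = lookup(config, "/configurations/hue-site/missing", None)
print(dfs_type, hive_hosts, absent)
```

This also shows why a renamed key is easy to miss: a lookup with a fallback does not fail when the path is stale, it silently returns the fallback value.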

II. Code changes

The code in this article is based on another blogger's post, with modifications:

https://blog.csdn.net/zhouyuanlinli/article/details/83374416

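1. hue-env.xml

The added block ties the user and group to cluster-env. Without it, Ambari 2.7.3 treats the user as invalid during user validation and does not carry out user creation and related operations.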

<property>
  <name>hue_user</name>
  <value>hue</value>
  <display-name>Hue User</display-name>
  <property-type>USER</property-type>
  <description>hue user</description>
  <value-attributes>
    <type>user</type>
    <overridable>false</overridable>
    <!-- added: begin -->
    <user-groups>
      <property>
        <type>cluster-env</type>
        <name>user_group</name>
      </property>
    </user-groups>
    <!-- added: end -->
  </value-attributes>
  <on-ambari-upgrade add="true"/>
</property>

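2. params.py

The changes below are all needed because keys are no longer found at their old paths after the Ambari upgrade; without some of them the scripts fail outright.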

# Excerpt: `config` and `default` are assumed to be defined earlier in params.py.
dfs_type = default("/clusterLevelParams/dfs_type", "HDFS")

# The path used in the original code no longer finds the matching key in the dict.
zookeeper_hosts = default("/clusterHostInfo/zookeeper_server_hosts", [])
hive_server_hosts = default("/clusterHostInfo/hive_server_hosts", [])

if len(hive_server_hosts) > 0:
    hive_server_host = config['clusterHostInfo']['hive_server_hosts'][0]
    hive_transport_mode = config['configurations']['hive-site']['hive.server2.transport.mode']
    # HiveServer2 listens on a different port in HTTP transport mode.
    if hive_transport_mode.lower() == "http":
        hive_server_port = config['configurations']['hive-site']['hive.server2.thrift.http.port']
    else:
        hive_server_port = default('/configurations/hive-site/hive.server2.thrift.port', "10000")

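3. common.py

Adapt this function to your own situation. Most blog posts I found download and compile Hue over the public internet, which makes for a complicated environment, long build times, and frequent errors. I therefore packaged the environment as a Docker image, and the script takes the download URL for the image and the installation package from /etc/yum.repos.d/hdp.repo. This makes it easier to fit more scenarios.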

from resource_management.core.logger import Logger
from resource_management.core.resources.system import Execute


def explain(repo_file):
    """Parse a yum .repo file into {section name: [baseurl, ...]}."""
    groups_dict = {}
    key = None
    # Text mode, so the string comparisons below also work on Python 3.
    with open(repo_file, "r") as f:
        for line in f:
            line = line.strip()
            # Skip blank lines and comments.
            if not line or line.startswith("#") or line.startswith(";"):
                continue
            if line.startswith('[') and line.endswith(']'):
                key = line[1:-1]
                groups_dict[key] = []
            elif key is not None and line.startswith('baseurl') and '=' in line:
                # Split once so that an '=' inside the URL is preserved.
                groups_dict[key].append(line.split('=', 1)[1].strip())
    return groups_dict


def download_hue():
    """Download Hue to the installation directory."""
    import params

    # Clean up a previous installation. Any step may fail on a fresh host
    # (for example when the container does not exist yet), so failures are
    # ignored per command instead of aborting the remaining cleanup.
    for cmd in ('docker stop hue2',
                'docker rm hue2',
                'docker rmi hue4.2-kyv4:v1',
                'rm -rf /usr/local/hue',
                'rm -rf /usr/hdp/current/hue-server'):
        Execute(cmd, ignore_failures=True)

    # The HDP repo base URL also hosts the Docker image and the Hue tarball.
    base_url = "".join(explain('/etc/yum.repos.d/hdp.repo')['HDP-3.1'])
    Execute('wget -P /usr/local {0}/my_hue.tar'.format(base_url))
    Execute('wget -P /usr/local {0}/HUE.tar'.format(base_url))
    Execute('tar -xvf /usr/local/HUE.tar -C /usr/local')
    # Execute('{0} | xargs wget -O hue.tgz'.format(params.download_url))

    # Load the image, then start a container that shares the host's network,
    # user database and HDP directories.
    Execute('/usr/bin/docker load -i /usr/local/my_hue.tar && sleep 5 && rm -rf /usr/local/my_hue.tar')
    Execute('/usr/bin/docker run -td -u root --name hue2 --net=host -v /etc/hosts:/etc/hosts -v /usr/local/hue:/usr/local/hue -v /usr/hdp:/usr/hdp -v /etc/passwd:/etc/passwd -v /etc/shadow:/etc/shadow -v /etc/hive:/etc/hive --restart=always --privileged hue4.2-kyv4:v1 /bin/bash')
    Execute("/usr/bin/docker exec -u root hue2 /bin/bash -c 'mkdir /var/log/hue && chmod 777 /tmp'")
    Execute("/usr/bin/docker exec -u root hue2 /bin/bash -c 'touch /var/log/hue/{access.log,runcpserver.log,supervisor.log,error.log,kt_renewer.log} && chmod -R 777 /var/log'")
    Execute('chown -R {0}:{1} {2}'.format(params.hue_user, params.hue_group, params.hue_dir))
    Execute('ln -s {0} /usr/hdp/current/hue-server'.format(params.hue_dir))
    Logger.info("Hue Service is installed")
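As a cross-check of how the download URL is derived, the same information can be read with the standard library's configparser. This is a Python 3 sketch only (the Ambari 2.7.3 agent scripts run on Python 2, where the module is named ConfigParser). The repo content, host name and the `base_urls` helper are hypothetical placeholders.

```python
import configparser

# Hypothetical repo file content; host and paths are placeholders.
SAMPLE_REPO = """
[HDP-3.1]
name=HDP-3.1
baseurl=http://repo.example.com/hdp/3.1.0.0
enabled=1
gpgcheck=0

[HDP-UTILS-1.1.0.22]
name=HDP-UTILS
baseurl=http://repo.example.com/hdp-utils
enabled=1
"""


def base_urls(repo_text):
    """Return {section: baseurl} for every section that defines a baseurl."""
    # Interpolation is disabled so a literal '%' in a URL is not misread.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(repo_text)
    return {
        section: parser.get(section, "baseurl")
        for section in parser.sections()
        if parser.has_option(section, "baseurl")
    }


urls = base_urls(SAMPLE_REPO)
# The image is expected to sit next to the HDP packages on the same repo host.
image_url = urls["HDP-3.1"].rstrip("/") + "/my_hue.tar"
print(image_url)
```

If the section name in your repo file differs from HDP-3.1, the lookup key has to change accordingly.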

4. hue_server.py

Because Hue runs inside Docker, start and stop are performed through docker exec.

# Methods of the HueServer(Script) class
def start(self, env):
    import params
    self.stop(env)
    self.configure(env)
    # Launch the Hue supervisor inside the container, in the background.
    Execute(format("sudo docker exec -u root hue2 /bin/bash -c '/usr/local/hue/build/env/bin/supervisor' >> /var/log/hue/hue-install.log 2>&1 &"),
            environment={'JAVA_HOME': params.java_home,
                         'HADOOP_CONF_DIR': params.hadoop_conf_dir,
                         'SPARK_HOME': '/usr/hdp/current/spark-client',
                         'PATH': '$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin'
                         },
            user=params.hue_user
            )
    # Record the supervisor's PID in the pid file.
    Execute('ps -ef | grep "/usr/local/hue/build/env/bin/python2.7 /usr/local/hue/build/env/bin/supervisor" | grep supervisor | grep -v grep | awk \'{print $2}\' > ' + params.hue_server_pid_file, user=params.hue_user)

def stop(self, env):
    import params
    env.set_params(params)
    # Kill the Hue processes
    Execute('ps -ef | grep /usr/local/hue/build/env/bin | grep -v grep | awk \'{print $2}\' | xargs sudo kill -9', user=params.hue_user, ignore_failures=True)
    # Remove the stale pid file.
    File(params.hue_server_pid_file,
         action="delete",
         owner=params.hue_user
         )
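Hand-escaping the nested quotes in these docker exec strings is error-prone. One possible way to avoid it is to let shlex do the quoting; the sketch below is Python 3 only (Python 2 offers pipes.quote instead of shlex.quote), and `docker_exec_cmd` is a hypothetical helper, not something Ambari or Docker provides.

```python
import shlex


def docker_exec_cmd(container, inner_command, user="root"):
    """Build a `docker exec` command line that runs inner_command through bash -c."""
    return "docker exec -u {0} {1} /bin/bash -c {2}".format(
        shlex.quote(user), shlex.quote(container), shlex.quote(inner_command)
    )


cmd = docker_exec_cmd("hue2", "mkdir -p /var/log/hue && chmod 777 /tmp")
print(cmd)

# Inner commands that contain single quotes survive a round trip through the shell parser.
awk_cmd = docker_exec_cmd("hue2", "ps -ef | awk '{print $2}'")
print(shlex.split(awk_cmd)[-1])
```

The resulting string could then be handed to Execute in place of a manually escaped literal.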

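5. pseudo-distributed.ini.xml

Most configuration templates found online are based on hue.ini from Hue 3.*. This article targets Hue 4.2, so I matched the settings one by one, added the missing ones, and updated them to the new version. The setup is still being tested and some settings need further thought, so treat it as a reference only.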

<configuration>

<property>

<name>content</name>

<description>Hue Configure File</description>

<value>

[desktop]

secret_key={{desktop_secret_key}}

{% if desktop_secret_key.strip() == '' %}

secret_key_script={{desktop_secret_key_script}}

{% else %}

# secret_key_script=

{% endif %}

http_host=0.0.0.0

http_port={{http_port}}

is_hue_4=true

disable_hue_3=true

is_embedded=false

## hue_load_balancer=

time_zone={{desktop_time_zone}}

django_debug_mode={{desktop_django_debug_mode}}

dev=false

dev_embedded=false

database_logging={{desktop_database_logging}}

send_dbug_messages={{desktop_send_dbug_messages}}

http_500_debug_mode={{desktop_http_500_debug_mode}}

memory_profiler={{desktop_memory_profiler}}

instrumentation=false

django_server_email={{desktop_django_server_email}}

django_email_backend={{desktop_django_email_backend}}

use_cherrypy_server=true

## gunicorn_work_class=eventlet

## gunicorn_number_of_workers=None

server_user={{desktop_server_user}}

server_group={{desktop_server_group}}

default_user={{desktop_default_user}}

default_hdfs_superuser={{desktop_default_hdfs_superuser}}

enable_server={{desktop_enable_server}}

cherrypy_server_threads={{desktop_cherrypy_server_threads}}

sasl_max_buffer=10485760

{% if desktop_ssl_enable %}

ssl_certificate={{desktop_ssl_certificate}}

ssl_private_key={{desktop_ssl_private_key}}

ssl_certificate_chain={{desktop_ssl_certificate_chain}}

ssl_password={{desktop_ssl_password}}

{% if desktop_ssl_password.strip() == '' %}

ssl_password_script={{desktop_ssl_password_script}}

{% else %}

## ssl_password_script=

{% endif %}

secure_content_type_nosniff={{desktop_secure_content_type_nosniff}}

secure_browser_xss_filter={{desktop_secure_browser_xss_filter}}

secure_content_security_policy={{desktop_secure_content_security_policy}}

secure_ssl_redirect={{desktop_secure_ssl_redirect}}

secure_redirect_host={{desktop_secure_redirect_host}}

secure_redirect_exempt={{desktop_secure_redirect_exempt}}

secure_hsts_seconds={{desktop_secure_hsts_seconds}}

secure_hsts_include_subdomains={{desktop_secure_hsts_include_subdomains}}

ssl_cipher_list={{desktop_ssl_cipher_list}}

ssl_cacerts={{desktop_ssl_cacerts}}

{% else %}

## ssl_certificate=

## ssl_private_key=

## ssl_certificate_chain=

## ssl_password=

## ssl_password_script=

## secure_content_type_nosniff=true

## secure_browser_xss_filter=true

## secure_content_security_policy="script-src 'self' 'unsafe-inline' 'unsafe-eval' *.google-analytics.com *.doubleclick.net data:;img-src 'self' *.google-analytics.com *.doubleclick.net http://*.tile.osm.org *.tile.osm.org *.gstatic.com data:;style-src 'self' 'unsafe-inline' fonts.googleapis.com;connect-src 'self';frame-src *;child-src 'self' data: *.vimeo.com;object-src 'none'"

## secure_ssl_redirect=False

## secure_redirect_host=0.0.0.0

## secure_redirect_exempt=[]

## secure_hsts_seconds=31536000

## secure_hsts_include_subdomains=true

ssl_cipher_list=ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA:ECDHE-RSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-RSA-AES256-SHA256:DHE-RSA-AES256-SHA:ECDHE-ECDSA-DES-CBC3-SHA:ECDHE-RSA-DES-CBC3-SHA:EDH-RSA-DES-CBC3-SHA:AES128-GCM-SHA256:AES256-GCM-SHA384:AES128-SHA256:AES256-SHA256:AES128-SHA:AES256-SHA:DES-CBC3-SHA:!DSS:!DH:!ADH

## ssl_cacerts=/etc/hue/cacerts.pem

{% endif %}

ssl_validate={{desktop_validate}}

auth_username={{desktop_auth_username}}

auth_password={{desktop_auth_password}}

default_site_encoding={{desktop_default_site_encoding}}

collect_usage=false

leaflet_tile_layer={{desktop_leaflet_tile_layer}}

leaflet_tile_layer_attribution={{desktop_leaflet_tile_layer_attribution}}

leaflet_map_options='{}'

leaflet_tile_layer_options='{}'

http_x_frame_options={{desktop_http_x_frame_options}}

use_x_forwarded_host={{desktop_use_x_forwarded_host}}

secure_proxy_ssl_header={{desktop_secure_proxy_ssl_header}}

## middleware={{desktop_middleware}}

app_blacklist={{app_blacklist}}

# impala,pig,sqoop,oozie

cluster_id={{desktop_use_new_editor}}

use_new_editor={{desktop_use_new_editor}}

## enable_download=true

## enable_sql_syntax_check=true

## use_new_side_panels=false

## use_new_charts=false

editor_autocomplete_timeout={{desktop_editor_autocomplete_timeout}}

## use_default_configuration=false

audit_event_log_dir={{desktop_audit_event_log_dir}}

audit_log_max_file_size={{desktop_audit_log_max_file_size}}

## rest_conn_timeout=120

## log_redaction_file={{desktop_log_redaction_file}}

allowed_hosts={{desktop_allowed_hosts}}

[[django_admins]]

## [[[admin1]]]

## name=john

## email=john@doe.com

[[custom]]

## banner_top_html=

## login_splash_html=

## cacheable_ttl=864000000

## logo_svg=

[[auth]]

backend={{desktop_auth_backend}}

user_aug={{desktop_auth_user_aug}}

pam_service={{desktop_auth_pam_service}}

remote_user_header={{desktop_auth_remote_user_header}}

ignore_username_case={{desktop_auth_ignore_username_case}}

force_username_lowercase={{desktop_auth_force_username_lowercase}}

## force_username_uppercase=false

expires_after={{desktop_auth_expires_after}}

expire_superusers={{desktop_auth_expire_superusers}}

idle_session_timeout={{desktop_auth_idle_session_timeout}}

change_default_password={{desktop_auth_change_default_password}}

login_failure_limit={{desktop_auth_login_failure_limit}}

login_lock_out_at_failure={{desktop_auth_login_lock_out_at_failure}}

login_cooloff_time={{desktop_auth_login_cooloff_time}}

login_lock_out_use_user_agent={{desktop_auth_login_lock_out_use_user_agent}}

login_lock_out_by_combination_user_and_ip={{desktop_auth_login_lock_out_by_combination_user_and_ip}}

## behind_reverse_proxy={{desktop_auth_behind_reverse_proxy}}

## reverse_proxy_header={{desktop_auth_reverse_proxy_header}}

[[ldap]]

{% if usersync_enabled and usersync_source == 'ldap' %}

base_dn={{usersync_ldap_base_dn}}

ldap_url={{usersync_ldap_url}}

nt_domain={{usersync_ldap_nt_domain}}

ldap_cert={{usersync_ldap_cert}}

use_start_tls={{usersync_ldap_use_start_tls}}

bind_dn={{usersync_ldap_bind_dn}}

bind_password={{usersync_ldap_bind_password}}

{% if usersync_ldap_bind_password.strip() == '' %}

bind_password_script={{usersync_ldap_bind_password_script}}

{% else %}

## bind_password_script=

{% endif %}

ldap_username_pattern={{usersync_ldap_username_pattern}}

create_users_on_login = {{usersync_ldap_create_users_on_login}}

sync_groups_on_login={{usersync_ldap_sync_groups_on_login}}

ignore_username_case={{usersync_ldap_ignore_username_case}}

force_username_lowercase={{usersync_ldap_force_username_lowercase}}

force_username_uppercase={{usersync_ldap_force_username_uppercase}}

search_bind_authentication={{usersync_ldap_search_bind_authentication}}

subgroups={{usersync_ldap_subgroups}}

nested_members_search_depth={{usersync_ldap_nested_members_search_depth}}

follow_referrals={{usersync_ldap_follow_referrals}}

debug={{usersync_ldap_debug}}

debug_level={{usersync_ldap_debug_level}}

trace_level={{usersync_ldap_trace_level}}

[[[users]]]

user_filter={{usersync_ldap_user_searchfilter}}

user_name_attr={{usersync_ldap_user_name_attribute}}

[[[groups]]]

{% if usersync_ldap_group_searchenabled %}

group_filter={{usersync_ldap_group_searchfilter}}

group_name_attr={{usersync_ldap_group_name_attribute}}

group_member_attr={{usersync_ldap_group_member_attribute}}

{% else %}

## group_filter="objectclass=*"

## group_name_attr=cn

## group_member_attr=members

{% endif %}

[[[ldap_servers]]]

## [[[[mycompany]]]]

## base_dn="DC=mycompany,DC=com"

## ldap_url=ldap://auth.mycompany.com

## nt_domain=mycompany.com

## ldap_cert=

## use_start_tls=true

## bind_dn="CN=ServiceAccount,DC=mycompany,DC=com"

## bind_password=

## bind_password_script=

## ldap_username_pattern=

## search_bind_authentication=true

## follow_referrals=false

## debug=false

## debug_level=255

## trace_level=0

## [[[[[users]]]]]

## user_filter="objectclass=Person"

## user_name_attr=sAMAccountName

## [[[[[groups]]]]]

## group_filter="objectclass=groupOfNames"

## group_name_attr=cn

{% else %}

## base_dn="DC=mycompany,DC=com"

## ldap_url=ldap://auth.mycompany.com

## nt_domain=mycompany.com

## ldap_cert=

## use_start_tls=true

## bind_dn="CN=ServiceAccount,DC=mycompany,DC=com"

## bind_password=

## bind_password_script=

## ldap_username_pattern="uid=(username),ou=People,dc=mycompany,dc=com"

## create_users_on_login = true

## sync_groups_on_login=false

## ignore_username_case=true

## force_username_lowercase=true

## force_username_uppercase=false

## search_bind_authentication=true

## subgroups=suboordinate

## nested_members_search_depth=10

## follow_referrals=false

## debug=false

## debug_level=255

## trace_level=0

[[[users]]]

## user_filter="objectclass=*"

## user_name_attr=sAMAccountName

[[[groups]]]

## group_filter="objectclass=*"

## group_name_attr=cn

## group_member_attr=members

[[[ldap_servers]]]

## [[[[mycompany]]]]

## base_dn="DC=mycompany,DC=com"

## ldap_url=ldap://auth.mycompany.com

## nt_domain=mycompany.com

## ldap_cert=

## use_start_tls=true

## bind_dn="CN=ServiceAccount,DC=mycompany,DC=com"

# anonymous searches

## bind_password=

## bind_password_script=

## ldap_username_pattern="uid=username,ou=People,dc=mycompany,dc=com"

## search_bind_authentication=true

## follow_referrals=false

## debug=false

## debug_level=255

## trace_level=0

## [[[[[users]]]]]

## user_filter="objectclass=Person"

## user_name_attr=sAMAccountName

## [[[[[groups]]]]]

## group_filter="objectclass=groupOfNames"

## group_name_attr=cn

{% endif %}

[[vcs]]

## [[[git-read-only]]]

# remote_url=https://github.com/cloudera/hue/tree/master

# api_url=https://api.github.com

## [[[github]]]

# remote_url=https://github.com/cloudera/hue/tree/master

# api_url=https://api.github.com

# client_id=

# client_secret=

## [[[svn]]]

# remote_url=https://github.com/cloudera/hue/tree/master

# api_url=https://api.github.com

# client_id=

# client_secret=

[[database]]

{% if metastore_db_flavor == 'sqlite3' %}

## engine=sqlite3

## host=

## port=

## user=

## password=

## conn_max_age=0

## password_script=/path/script

## name=desktop/desktop.db

## options={}

{% else %}

engine={{metastore_db_flavor}}

host={{metastore_db_host}}

port={{metastore_db_port}}

user={{metastore_db_user}}

{% if metastore_db_password != '' %}

password={{metastore_db_password}}

## password_script={{metastore_db_password_script}}

{% else %}

## password={{metastore_db_password}}

password_script={{metastore_db_password_script}}

{% endif %}

name={{metastore_db_name}}

options={{metastore_db_options}}

## conn_max_age=0

{% endif %}

[[session]]

## cookie_name=sessionid

ttl={{desktop_session_ttl}}

secure={{desktop_session_secure}}

## http_only={{desktop_session_http_only}}

## expire_at_browser_close={{desktop_session_expire_at_browser_close}}

## concurrent_user_session_limit=0

[[smtp]]

host={{desktop_smtp_host}}

port={{desktop_smtp_port}}

user={{desktop_smtp_user}}

password={{desktop_smtp_password}}

tls={{desktop_smtp_tls}}

default_from_email={{desktop_smtp_default_from_email}}

[[kerberos]]

{% if security_enabled %}

# Path to Hue's Kerberos keytab file

hue_keytab={{hue_keytab}}

# Kerberos principal name for Hue

hue_principal={{hue_principal}}

# Path to kinit

kinit_path={{kinit_path}}

{% else %}

# Path to Hue's Kerberos keytab file

## hue_keytab=

# Kerberos principal name for Hue

## hue_principal=hue/hostname.foo.com

# Path to kinit

## kinit_path=/path/to/kinit

{% endif %}

## keytab_reinit_frequency=3600

## ccache_path=/var/run/hue/hue_krb5_ccache

## mutual_authentication="OPTIONAL" or "REQUIRED" or "DISABLED"

[[oauth]]

consumer_key={{desktop_oauth_consumer_key}}

consumer_secret={{desktop_oauth_consumer_secret}}

request_token_url={{desktop_oauth_request_token_url}}

access_token_url={{desktop_oauth_access_token_url}}

authenticate_url={{desktop_oauth_authenticate_url}}

[[metrics]]

enable_web_metrics={{desktop_metrics_enable_web_metrics}}

location={{desktop_metrics_location}}

collection_interval={{desktop_metrics_collection_interval}}

[notebook]

show_notebooks={{notebook_show_notebooks}}

# enable_external_statements=true

enable_batch_execute={{notebook_enable_batch_execute}}

# enable_sql_indexer=false

# enable_presentation=true

enable_query_builder={{notebook_enable_query_builder}}

enable_query_scheduling={{notebook_enable_query_scheduling}}

# enable_dbproxy_server=true

# dbproxy_extra_classpath=

# interpreters_shown_on_wheel=

[[interpreters]]

[[[hive]]]

name=Hive

interface=hiveserver2

[[[impala]]]

name=Impala

interface=hiveserver2

[[[sparksql]]]

name=SparkSql

interface=hiveserver2

[[[spark]]]

name=Scala

interface=livy

[[[pyspark]]]

name=PySpark

interface=livy

[[[r]]]

name=R

interface=livy

[[[jar]]]

name=Spark Submit Jar

interface=livy-batch

[[[py]]]

name=Spark Submit Python

interface=livy-batch

[[[text]]]

name=Text

interface=text

[[[markdown]]]

name=Markdown

interface=text

[[[mysql]]]

name = MySQL

interface=rdbms

[[[sqlite]]]

name = SQLite

interface=rdbms

[[[postgresql]]]

name = PostgreSQL

interface=rdbms

[[[oracle]]]

name = Oracle

interface=rdbms

[[[solr]]]

name = Solr SQL

interface=solr

# options='{"collection": "default"}'

[[[pig]]]

name=Pig

interface=oozie

[[[java]]]

name=Java

interface=oozie

[[[spark2]]]

name=Spark

interface=oozie

[[[mapreduce]]]

name=MapReduce

interface=oozie

[[[sqoop1]]]

name=Sqoop1

interface=oozie

[[[distcp]]]

name=Distcp

interface=oozie

[[[shell]]]

name=Shell

interface=oozie

# [[[mysql]]]

# name=MySql JDBC

# interface=jdbc

# options='{"url": "jdbc:mysql://localhost:3306/hue", "driver": "com.mysql.jdbc.Driver", "user": "root", "password": "root"}'

[dashboard]

## is_enabled=true

## has_sql_enabled=false

## has_query_builder_enabled=false

## has_report_enabled=false

## use_gridster=true

## has_widget_filter=false

## has_tree_widget=false

[[engines]]

# [[[solr]]]

## analytics=false

## nesting=false

# [[[sql]]]

## analytics=true

## nesting=false

[hadoop]

[[hdfs_clusters]]

[[[default]]]

fs_defaultfs={{namenode_address}}

{% if dfs_ha_enabled %}

logical_name=hdfs://{{logical_name}}

{% else %}

## logical_name=

{% endif %}

webhdfs_url={{webhdfs_url}}

{% if security_enabled %}

security_enabled={{security_enabled}}

{% else %}

## security_enabled=false

{% endif %}

## ssl_cert_ca_verify={{hadoop_ssl_cert_ca_verify}}

hadoop_conf_dir={{hadoop_conf_dir}}

[[yarn_clusters]]

[[[default]]]

resourcemanager_host={{resourcemanager_host1}}

resourcemanager_port={{resourcemanager_port}}

submit_to=True

{% if resourcemanager_ha_enabled %}

logical_name={{logical_name}}

{% else %}

## logical_name=

{% endif %}

{% if security_enabled %}

security_enabled={{security_enabled}}

{% else %}

## security_enabled=false

{% endif %}

resourcemanager_api_url={{resourcemanager_api_url1}}

proxy_api_url={{proxy_api_url1}}

history_server_api_url={{history_server_api_url}}

spark_history_server_url=http://localhost:18088

ssl_cert_ca_verify={{hadoop_ssl_cert_ca_verify}}

{% if resourcemanager_ha_enabled %}

[[[ha]]]

logical_name={{logical_name}}

submit_to=True

resourcemanager_api_url={{resourcemanager_api_url2}}

resourcemanager_host={{resourcemanager_host2}}

resourcemanager_port={{resourcemanager_port}}

proxy_api_url={{proxy_api_url2}}

history_server_api_url={{history_server_api_url}}

spark_history_server_url={{spark_history_server_url}}

{% else %}

# [[[ha]]]

## logical_name=my-rm-name

## submit_to=True

## resourcemanager_api_url=http://localhost:8088

{% endif %}

[beeswax]

hive_server_host={{hive_server_host}}

hive_server_port={{hive_server_port}}

hive_conf_dir={{hive_conf_dir}}

server_conn_timeout={{hive_server_conn_timeout}}

use_get_log_api={{hive_use_get_log_api}}

list_partitions_limit={{hive_list_partitions_limit}}

query_partitions_limit={{hive_query_partitions_limit}}

download_row_limit=100000

download_bytes_limit=-1

close_queries={{hive_close_queries}}

max_number_of_sessions=1

thrift_version={{hive_thrift_version}}

config_whitelist={{hive_config_whitelist}}

auth_username={{hive_auth_username}}

auth_password={{hive_auth_password}}

[[ssl]]

cacerts={{hive_ssl_cacerts}}

validate={{hive_ssl_validate}}

[metastore]

## enable_new_create_table=true

## force_hs2_metadata=false

[impala]

server_host=localhost

server_port=21050

impala_principal=impala/hostname.foo.com

impersonation_enabled=False

querycache_rows=50000

server_conn_timeout=120

close_queries=true

query_timeout_s=600

session_timeout_s=1800

auth_username=hue

auth_password=

config_whitelist=debug_action,explain_level,mem_limit,optimize_partition_key_scans,query_timeout_s,request_pool

impala_conf_dir=${HUE_CONF_DIR}/impala-conf

[[ssl]]

enabled=false

cacerts=/etc/hue/cacerts.pem

validate=true

[spark]

livy_server_host={{livy_server_host}}

livy_server_port={{livy_server_port}}

livy_server_session_kind={{livy_server_session_kind}}

sql_server_host={{spark_thriftserver_host}}

sql_server_port={{spark_hiveserver2_thrift_port}}

security_enabled=false

[oozie]

local_data_dir={{oozie_local_data_dir}}

sample_data_dir={{oozie_sample_data_dir}}

remote_data_dir={{oozie_remote_data_dir}}

oozie_jobs_count={{oozie_jobs_count}}

enable_cron_scheduling={{oozie_enable_cron_scheduling}}

enable_document_action={{oozie_enable_document_action}}

enable_oozie_backend_filtering=true

enable_impala_action=false

[filebrowser]

archive_upload_tempdir={{filebrowser_archive_upload_tempdir}}

show_download_button={{filebrowser_show_download_button}}

show_upload_button={{filebrowser_show_upload_button}}

enable_extract_uploaded_archive=true

[pig]

local_sample_dir={{pig_local_sample_dir}}

remote_data_dir={{pig_remote_data_dir}}

[sqoop]

server_url=http://localhost:12000/sqoop

sqoop_conf_dir=/etc/sqoop2/conf

[proxy]

whitelist=(localhost|127\.0\.0\.1):(50030|50070|50060|50075)

blacklist=

[hbase]

hbase_clusters={{hbase_cluster}}

hbase_conf_dir={{hbase_conf_dir}}

truncate_limit = {{hbase_truncate_limit}}

thrift_transport={{hbase_thrift_transport}}

[search]

solr_url={{solr_url}}

{% if security_enabled %}

security_enabled={{security_enabled}}

{% else %}

## security_enabled=false

{% endif %}

empty_query={{solr_empty_query}}

[libsolr]

ssl_cert_ca_verify={{solr_ssl_cert_ca_verify}}

solr_zk_path={{solr_zk_path}}

[indexer]

solrctl_path={{solr_solrctl_path}}

enable_new_indexer={{solr_enable_new_indexer}}

config_indexer_libs_path=/tmp/smart_indexer_lib

enable_new_importer=false

enable_sqoop=false

[jobsub]

local_data_dir={{jobsub_local_data_dir}}

sample_data_dir={{jobsub_sample_data_dir}}

[jobbrowser]

share_jobs={{jobbrowser_share_jobs}}

disable_killing_jobs={{jobbrowser_disable_killing_jobs}}

log_offset={{jobbrowser_log_offset}}

## max_job_fetch=500

## enable_v2=true

## enable_query_browser=true

[security]

## hive_v1=true

## hive_v2=false

## solr_v2=true

[zookeeper]

[[clusters]]

[[[default]]]

host_ports={{zookeeper_host_port}}

rest_url={{zookeeper_rest_url}}

{% if security_enabled %}

principal_name={{zk_principal}}

{% else %}

## principal_name=zookeeper

{% endif %}

[useradmin]

## home_dir_permissions=0755

## default_user_group=default

[[password_policy]]

## is_enabled=false

## pwd_regex="^(?=.*?[A-Z])(?=(.*[a-z]){1,})(?=(.*[\d]){1,})(?=(.*[\W_]){1,}).{8,}$"

## pwd_hint="The password must be at least 8 characters long, and must contain both uppercase and lowercase letters, at least one number, and at least one special character."

## pwd_error_message="The password must be at least 8 characters long, and must contain both uppercase and lowercase letters, at least one number, and at least one special character."

[liboozie]

oozie_url={{oozie_url}}

{% if security_enabled %}

security_enabled={{security_enabled}}

{% else %}

## security_enabled=false

{% endif %}

remote_deployement_dir={{oozie_remote_deployement_dir}}

[aws]

[[aws_accounts]]

## [[[default]]]

## access_key_id=

## secret_access_key=

## security_token=

## access_key_id_script=/path/access_key_id.sh

## secret_access_key_script=/path/secret_access_key.sh

## allow_environment_credentials=yes

## region=us-east-1

## host=

## proxy_address=

## proxy_port=8080

## proxy_user=

## proxy_pass=

## is_secure=true

[azure]

[[azure_accounts]]

[[[default]]]

## client_id=

## client_id_script=/path/client_id.sh

## client_secret=

## client_secret_script=/path/client_secret.sh

## tenant_id=

## tenant_id_script=/path/tenant_id.sh

[[adls_clusters]]

[[[default]]]

## fs_defaultfs={{namenode_address}}

## webhdfs_url={{webhdfs_url}}

[libsentry]

## hostname=localhost

## port=8038

## sentry_conf_dir=/etc/sentry/conf

## privilege_checker_caching=300

[libzookeeper]

ensemble={{zookeeper_host_port}}

{% if security_enabled %}

principal_name={{zk_principal}}

{% else %}

## principal_name=zookeeper

{% endif %}

[librdbms]

[[databases]]

{% if rdbms_sqlite_engine %}

[[[sqlite]]]

nice_name={{rdbms_sqlite_nice_name}}

name={{rdbms_sqlite_name}}

engine=sqlite

options={{rdbms_sqlite_options}}

{% endif %}

{% if rdbms_mysql_engine %}

[[[mysql]]]

nice_name={{rdbms_mysql_nice_name}}

name={{rdbms_mysql_name}}

engine=mysql

host={{rdbms_mysql_host}}

port={{rdbms_mysql_port}}

user={{rdbms_mysql_user}}

password={{rdbms_mysql_password}}

options={{rdbms_mysql_options}}

{% endif %}

{% if rdbms_postgresql_engine %}

[[[postgresql]]]

nice_name={{rdbms_postgresql_nice_name}}

name={{rdbms_postgresql_name}}

engine=postgresql

host={{rdbms_postgresql_host}}

port={{rdbms_postgresql_port}}

user={{rdbms_postgresql_user}}

password={{rdbms_postgresql_password}}

options={{rdbms_postgresql_options}}

{% endif %}

{% if rdbms_oracle_engine %}

[[[oracle]]]

nice_name={{rdbms_oracle_nice_name}}

name={{rdbms_oracle_name}}

engine=oracle

host={{rdbms_oracle_host}}

port={{rdbms_oracle_port}}

user={{rdbms_oracle_user}}

password={{rdbms_oracle_password}}

options={{rdbms_oracle_options}}

{% endif %}

[libsaml]

## xmlsec_binary=/usr/local/bin/xmlsec1

## entity_id="(base_url)/saml2/metadata/"

## create_users_on_login=true

## required_attributes=uid

## optional_attributes=

## metadata_file=

## key_file=

## cert_file=

## key_file_password=/path/key

## key_file_password_script=/path/pwd.sh

## user_attribute_mapping={'uid': ('username', )}

## authn_requests_signed=false

## logout_requests_signed=false

## username_source=attributes

## logout_enabled=true

</value>

<value-attributes>

<type>content</type>

<show-property-name>false</show-property-name>

</value-attributes>

<on-ambari-upgrade add="true"/>

</property>

</configuration>
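Since every placeholder in this template must match a variable defined in params.py, a rough consistency check can save a failed deployment. The sketch below is an assumption-laden helper, not part of Ambari: it only looks at the first identifier inside each `{{ ... }}` and after each `{% if`, so conditions joined with `and`/`or` or starting with `not` are only partially covered. The sample template is hypothetical and deliberately contains one name with a missing prefix.

```python
import re

# Matches {{ name }} or {{ name.attr }}, capturing only the leading identifier.
VAR_PATTERN = re.compile(r"\{\{\s*([A-Za-z_][A-Za-z0-9_]*)")
# Matches the first identifier after `{% if`.
IF_PATTERN = re.compile(r"\{%\s*if\s+([A-Za-z_][A-Za-z0-9_]*)")


def template_variables(template_text):
    """Collect variable names referenced by placeholders and if-conditions."""
    names = set(VAR_PATTERN.findall(template_text))
    names.update(IF_PATTERN.findall(template_text))
    return names


def missing_variables(template_text, defined_names):
    """Return the referenced names that are not in defined_names, sorted."""
    return sorted(template_variables(template_text) - set(defined_names))


# Hypothetical fragment; `ssl_password` lacks the `desktop_` prefix on purpose.
SAMPLE_TEMPLATE = """
[desktop]
http_port={{http_port}}
{% if desktop_ssl_enable %}
ssl_password={{desktop_ssl_password}}
{% if ssl_password.strip() == '' %}
ssl_password_script={{desktop_ssl_password_script}}
{% endif %}
{% endif %}
"""

defined = {"http_port", "desktop_ssl_enable", "desktop_ssl_password", "desktop_ssl_password_script"}
missing = missing_variables(SAMPLE_TEMPLATE, defined)
print(missing)
```

In practice `defined` would be filled from the names in params.py, for instance with `dir(params)`, which is again an assumption about how you load that module.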