Python novel downloader, version 2.0


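Previous version: link

I packaged the script into an exe with pyinstaller so it is easy to try: link (uploaded and currently under review, please wait)

The script uses the third-party library pyquery. For installation instructions, see the previous version's post.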

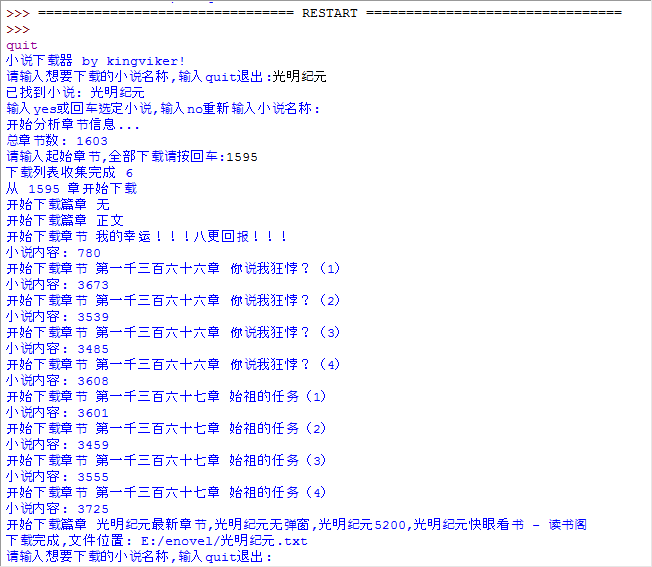

在上一版本的基础上做个改良.先上图:

上面是代码:

    # -*- coding:gbk -*-
    '''
    file desc:novel downloader
    author:kingviker
    email:[email protected]@gmail.com
    date:2013-05-31
    depends:python 2.7.4,pyquery
    '''
    import os,codecs,urllib,urllib2
    from pyquery import PyQuery as pq

    searchUrl = 'http://www.dushuge.net/modules/article/search.php'
    baseSavePath="E:/enovel/"
    print "Novel downloader by kingviker!"

    def searchNovel(novelName):
        # POST the novel name to the site's search page.
        values = urllib.urlencode({'searchkey' : novelName, 'searchtype' : 'articlename'})
        req = urllib2.Request(url=searchUrl,data=values)
        response = urllib2.urlopen(req)
        searchHtml_pq = pq(response.read())
        novelUrlHtml = searchHtml_pq("#content > .sf-mainbox > .head > h1 > a")
        #print novelUrlHtml
        if not novelUrlHtml :
            return
        # Return (title, url) of the matched novel.
        return (novelUrlHtml.eq(2).text(),novelUrlHtml.eq(2).attr("href"))

    def analyzeNovel(url):
        # Use pyquery to grab the page content and count the chapter links.
        html_pq = pq(url=url)
        #print html_pq("div.book_article_texttable").find(".book_article_texttext")
        totalChapters = len(html_pq("div.book_article_texttable").find(".book_article_listtext").find("a"))
        return totalChapters

    def fetchDownloadLinks(url):
        # pieceList alternates volume titles and chapter lists:
        # [title, [[name, href], ...], title, [[name, href], ...], ...]
        pieceList=[]
        chapterList=[]
        html_pq = pq(url=url)
        # Find the first volume ("piece") of the novel.
        piece = html_pq("div.book_article_texttable > div").eq(0)
        isPiece = True
        if piece.attr("class")=="book_article_texttitle":
            # Record the current volume's title.
            pieceList.append(piece.text())
            #print "piece Text:", piece
        else:
            # No volume title: use an ASCII placeholder so it can be
            # concatenated with the unicode chapter text later.
            isPiece = False
            pieceList.append("(none)")
        # Walk the sibling divs to collect volume titles and chapter links.
        nextPiece=False
        while not nextPiece:
            if isPiece:
                chapterDiv = piece.next()
            else:
                isPiece = True
                chapterDiv = piece
            #print "chapter div length:",chapterDiv.length
            piece = chapterDiv
            if chapterDiv.length==0:
                # No more siblings: flush the last chapter list and stop.
                pieceList.append(chapterList[:])
                del chapterList[:]
                nextPiece=True
            elif chapterDiv.attr("class")=="book_article_texttitle":
                # A new volume title: flush the chapters collected so far.
                pieceList.append(chapterList[:])
                del chapterList[:]
                pieceList.append(piece.text())
            else:
                chapterUrls = chapterDiv.find("a")
                for urlA in chapterUrls:
                    urlList_temp = [pq(urlA).text(),pq(urlA).attr("href")]
                    chapterList.append(urlList_temp)
        print "Download list collected",len(pieceList)
        return pieceList

    def downloadNovel(novel,startChapterNum):
        # if os.path.exists(baseSavePath+novel[0]) is not True:
        #     os.mkdir(baseSavePath+novel[0])
        # Based on the piece list, grab each chapter page and save its text.
        if os.path.exists(baseSavePath+novel[0]+".txt"):
            os.remove(baseSavePath+novel[0]+".txt")
        # Use codecs to create the output file, encoded as UTF-8.
        novelFile = codecs.open(baseSavePath+novel[0]+".txt","wb+","utf-8")
        pieceList = fetchDownloadLinks(novel[1])
        chapterTotal = 0
        print "Downloading from chapter",startChapterNum
        # Two nested loops: volumes at even indexes, their chapters at odd ones.
        for pieceNum in range(0,len(pieceList),2):
            piece = pieceList[pieceNum]
            print "Downloading volume",pieceList[pieceNum]
            chapterList = pieceList[pieceNum+1]
            for chapterNum in range(0,len(chapterList)):
                chapterTotal +=1
                # Skip chapters before the requested starting chapter.
                if startChapterNum > chapterTotal:
                    continue
                chapter = chapterList[chapterNum]
                print "Downloading chapter",chapter[0]
                chapterPage = pq(url=novel[1]+chapter[1])
                chapterContent = piece+" "+chapter[0]+"\r\n"
                chapterContent += chapterPage("#booktext").html().replace("<br />","\r\n")
                print "Chapter length:",len(chapterContent)
                novelFile.write(chapterContent+"\r\n"+"\r\n")
        novelFile.close()
        print "Download finished, file saved at:",baseSavePath+novel[0]+".txt"

    while(True):
        # Create the save directory if it does not exist yet.
        if os.path.exists(baseSavePath) is not True:
            os.mkdir(baseSavePath)
        name = raw_input("Enter the name of the novel to download, or quit to exit: ")
        if name =="quit":
            print "bye"
            break
        novel = searchNovel(name)
        if not novel :
            print "Novel not found:",name,"(or the name was entered incorrectly)"
        else:
            print "Found novel:",novel[0]
            result = raw_input("Enter yes or press Enter to confirm, no to enter another name: ")
            if not result or result=="yes":
                print "Analyzing chapter information..."
                totalChapters = analyzeNovel(novel[1])
                print "Total chapters:",totalChapters
                startChapterNum = raw_input("Enter the starting chapter, or press Enter to download everything: ")
                if not startChapterNum :
                    startChapterNum=0
                #print startChapterNum
                downloadNovel(novel,int(startChapterNum))
            elif result =="no":
                pass
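The list returned by fetchDownloadLinks is flat: volume titles sit at even indexes and the matching chapter lists at odd indexes. The sketch below shows one way to walk that layout and apply the same "skip chapters before the start" rule as downloadNovel. It is only an illustration: `iter_chapters` and the sample data are hypothetical names and values, and the code is written for Python 3 rather than the Python 2.7 the script targets.

```python
def iter_chapters(piece_list, start_chapter=0):
    """Yield (number, volume_title, chapter_title, href) from the flat list.

    piece_list alternates volume titles and chapter lists:
    [title0, [[name, href], ...], title1, [[name, href], ...], ...]
    """
    chapter_total = 0
    # Step by 2: index i is a volume title, index i + 1 its chapter list.
    for i in range(0, len(piece_list) - 1, 2):
        volume_title = piece_list[i]
        for name, href in piece_list[i + 1]:
            chapter_total += 1
            # Same rule as downloadNovel: skip chapters numbered below the start.
            if start_chapter > chapter_total:
                continue
            yield chapter_total, volume_title, name, href


# Hypothetical sample data, not taken from the real site.
sample = [
    "Volume 1", [["Chapter 1", "1.html"], ["Chapter 2", "2.html"]],
    "Volume 2", [["Chapter 3", "3.html"]],
]

for item in iter_chapters(sample, start_chapter=2):
    print(item)
```

Chapter numbers are counted across volumes, so a start chapter of 0 or 1 both mean "download everything".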

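The main updates in this version are:

1. The code is organized into functions.

2. An interactive prompt has been added.

3. Users can type any novel name to search for it and download it.

4. A starting chapter for the download can be chosen.

5. The per-chapter save mode has been removed for now.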
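The starting chapter is read as free text and passed straight to int(), so a typo would end the program with an error. A minimal sketch of a more forgiving parser is shown below. `parse_start_chapter` is a hypothetical helper, not part of the script, and it is written for Python 3.

```python
def parse_start_chapter(raw, total_chapters):
    """Return a 1-based start chapter, or None if the input is not usable."""
    text = raw.strip()
    if not text:
        # Empty input means "download everything", i.e. start at chapter 1.
        return 1
    try:
        number = int(text)
    except ValueError:
        # Not a number at all.
        return None
    if number < 1 or number > total_chapters:
        # Outside the range of chapters that exist.
        return None
    return number


print(parse_start_chapter("5", 10))
print(parse_start_chapter("abc", 10))
```

A caller could repeat the prompt while the result is None instead of letting the download loop crash.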

Goals for the next version:

1. Support fuzzy search.

2. Add a list of popular novels.

3. Improve the display.
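As a rough idea of what fuzzy search could look like, the sketch below matches a misspelled query against a list of known titles with the standard library's difflib. This is only one possible approach and makes a big assumption: that a local list of candidate titles is available, whereas the current script sends the query to the site's search page. `fuzzy_find` and `known_titles` are hypothetical names, and the code is written for Python 3.

```python
import difflib


def fuzzy_find(query, titles, limit=3, cutoff=0.5):
    """Return up to `limit` titles that look similar to `query`, best first."""
    # get_close_matches ranks candidates by similarity ratio and
    # drops anything scoring below `cutoff`.
    return difflib.get_close_matches(query, titles, n=limit, cutoff=cutoff)


# Hypothetical candidate list; the real script has no such list yet.
known_titles = [
    "Journey to the West",
    "Dream of the Red Chamber",
    "Water Margin",
]

print(fuzzy_find("Journy to the West", known_titles))
```

The cutoff trades recall for precision: a lower value tolerates more typos but returns more unrelated titles.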
