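I usually browse cnBeta and Baidu Tieba, so I reused the code from my earlier Baidu Tieba client to write a cnBeta reader.

Previous post: Writing a Baidu Tieba client in Python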

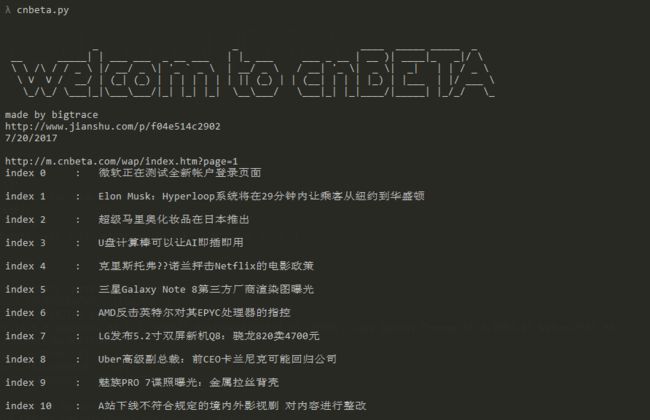

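The desktop site of cnBeta (http://www.cnbeta.com/) carries so many ads that reading the news and the comments is very time-consuming. So I use Python to scrape the mobile version (http://m.cnbeta.com/wap) instead, which makes reading much easier.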
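The scraping step itself is small. `read_shouye` in the code below downloads `http://m.cnbeta.com/wap/index.htm?page=N` and, for each `<div class="list">`, takes the text and `href` of the first link. The sketch below reproduces that extraction using Python 3's standard-library `html.parser` in place of lxml, so it can run offline. The sample HTML is invented to match the XPath in the code; the real page layout is an assumption and may have changed.

```python
from html.parser import HTMLParser

BASE = "http://m.cnbeta.com"  # same prefix the reader adds to relative links


class NewsListParser(HTMLParser):
    """Collect (title, absolute URL) of the first <a> in each <div class="list">."""

    def __init__(self):
        super().__init__()
        self.items = []
        self.div_depth = 0      # > 0 while inside a div.list (counts nested divs)
        self.in_link = False    # currently inside the first <a> of a div.list
        self.took_link = False  # first <a> of the current div.list already taken
        self.href = ""
        self.text = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "div":
            if self.div_depth:
                self.div_depth += 1
            elif attrs.get("class") == "list":  # exact match, like @class='list'
                self.div_depth = 1
                self.took_link = False
        elif tag == "a" and self.div_depth and not self.took_link:
            self.in_link = True
            self.took_link = True
            self.href = attrs.get("href") or ""
            self.text = []

    def handle_endtag(self, tag):
        if tag == "a" and self.in_link:
            self.in_link = False
            # title text (stripped of surrounding whitespace) and absolute URL
            self.items.append(("".join(self.text).strip(), BASE + self.href))
        elif tag == "div" and self.div_depth:
            self.div_depth -= 1

    def handle_data(self, data):
        if self.in_link:
            self.text.append(data)


def parse_news_list(html):
    parser = NewsListParser()
    parser.feed(html)
    parser.close()
    return parser.items


sample = """
<div class="list"><a href="/wap/view/633687.htm">Title one</a></div>
<div class="list"><a href="/wap/view/633621.htm"><b>Title</b> two</a></div>
<div class="other"><a href="/wap/view/1.htm">not a news item</a></div>
"""

for title, url in parse_news_list(sample):
    print(title, url)
```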

Its features and interface are very similar to those of my earlier Baidu Tieba client in Python.

When the program starts, it shows the latest news from the front page. To see page 2 of the news, type `s 2`, and so on.
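To line up the index and title columns of that list, `read_shouye` pads with `str.format`, which counts characters, while the console draws each Chinese character two columns wide. Its `wide_chars` method counts the characters whose `unicodedata.east_asian_width` is `'W'` (wide) or `'F'` (fullwidth) and subtracts that count from the field width. Here is a Python 3 sketch of the same idea. `display_width` and `pad` are hypothetical helper names, and the `max(..., 0)` guard against a negative width is an addition.

```python
import unicodedata


def wide_chars(s):
    """Number of characters that occupy two console columns (East Asian Wide/Fullwidth)."""
    return sum(unicodedata.east_asian_width(ch) in ("F", "W") for ch in s)


def display_width(s):
    """Console columns needed to show s: wide characters count twice."""
    return len(s) + wide_chars(s)


def pad(s, width):
    """Left-align s in `width` console columns, like the u'{0:<N}' formats in read_shouye."""
    fmt = "{0:<%d}" % max(width - wide_chars(s), 0)
    return fmt.format(s)


print("[" + pad("Hello", 10) + "]")     # [Hello     ]
print("[" + pad("你好世界", 10) + "]")  # [你好世界  ]
```

Both lines take ten columns on screen, even though the second string is only six characters long after padding.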

To read a particular article, type `t index`. For example, `t 1` shows the article with index 1.

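You don't have to open the comments yourself. The program fetches them (the first page of comments) and prints them directly below the article.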
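The comments are on a separate mobile page whose address is derived from the article's. In the code below, `view_comment` takes the article id (the first run of digits in the article URL) and requests `http://m.cnbeta.com/wap/comment/<id>.htm?page=1`. The following Python 3 sketch shows the mapping. It matches `/view/<id>.htm` instead of any digits, which is a stricter choice made for this sketch. The `page` argument only mirrors the `?page=` suffix the code builds; whether later pages return further comments is an untested assumption.

```python
import re


def comment_url(article_url, page=1):
    """Map .../wap/view/<id>.htm to .../wap/comment/<id>.htm?page=<page>."""
    match = re.search(r"/view/(\d+)\.htm", article_url)
    if match is None:
        raise ValueError("not a cnBeta mobile article URL: %r" % article_url)
    return "http://m.cnbeta.com/wap/comment/%s.htm?page=%d" % (match.group(1), page)


print(comment_url("http://m.cnbeta.com/wap/view/633621.htm"))
# http://m.cnbeta.com/wap/comment/633621.htm?page=1
```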

Type `b` to return to the news list.
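The main loop at the end of the code accepts `s N`, `t N`, `b`, `c` (clear the screen), `e` (exit) and `pic`, and tests each one with `startswith` and `re.search`. An alternative is a table-driven parser that keeps all the patterns in one place. Here is a Python 3 sketch of that approach. `parse_command` and the action names are hypothetical and do not appear in the original program.

```python
import re

# (pattern, action) for each command the reader accepts
COMMANDS = [
    (re.compile(r"s\s+(\d+)"), "page"),   # s N: show page N of the news list
    (re.compile(r"t\s+(\d+)"), "read"),   # t N: read article N of the current list
    (re.compile(r"b"), "back"),           # b: back to the news list
    (re.compile(r"c"), "clear"),          # c: clear the screen
    (re.compile(r"e"), "exit"),           # e: quit
    (re.compile(r"pic"), "pictures"),     # pic: open the picture viewer
]


def parse_command(line):
    """Return (action, int argument or None); (None, None) if the command is unknown."""
    line = line.strip()
    for pattern, action in COMMANDS:
        match = pattern.fullmatch(line)
        if match:
            arg = int(match.group(1)) if match.groups() else None
            return action, arg
    return None, None


for line in ["s 2", "t 1", "b", "pic", "s two"]:
    print(repr(line), "->", parse_command(line))
```

The loop can then dispatch on the action name, and an unknown command falls through to a single "type correct command" branch.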

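Since I don't like posting comments myself, I didn't add a feature for commenting on an article. Adding one would be simple; see my article on the Baidu Tieba client.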

New feature: picture preview

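Type `pic` to open a small window, written with PyQt, that previews the pictures in the current article. The Previous and Next buttons page through them.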
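The Previous and Next buttons wrap around: after the last picture comes the first, and before the first comes the last (`fun_next` and `fun_prev` in the `Example` class). The same index arithmetic can be written without Qt using the modulo operator. Here is a small Python 3 sketch. The `PictureCursor` class is hypothetical and stands in for the widget's state. Its empty-list check is an addition, because the original widget assumes there is at least one picture.

```python
class PictureCursor(object):
    """Current picture index with wrap-around, like fun_next/fun_prev."""

    def __init__(self, urls):
        if not urls:
            raise ValueError("no pictures to show")
        self.urls = list(urls)
        self.index = 0

    def next(self):
        # after the last picture, go back to the first one
        self.index = (self.index + 1) % len(self.urls)
        return self.urls[self.index]

    def prev(self):
        # before the first picture, jump to the last one (Python's % never goes negative here)
        self.index = (self.index - 1) % len(self.urls)
        return self.urls[self.index]

    def current(self):
        return self.urls[self.index]


cursor = PictureCursor(["a.png", "b.png", "c.png"])
print(cursor.prev())  # c.png
print(cursor.next())  # a.png
```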

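Here is the code. It is written for Python 2 and needs pycurl, lxml, PyQt4, colorama and termcolor. Because it clears the screen with `os.system('cls')`, it targets the Windows console.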

```python
# coding=utf-8
# cnBeta reader (Python 2): browse m.cnbeta.com in the Windows console
import os
import re
import sys
import time
import unicodedata
from StringIO import StringIO

import lxml.html
import pycurl
from PyQt4 import QtGui
from colorama import init
from termcolor import colored

# colorama must be initialised so that termcolor's ANSI colour codes
# are shown as colours in the Windows console
init()


# ---------------- picture viewer ----------------
class Example(QtGui.QWidget):
    """PyQt4 window showing one picture at a time, with Previous/Next buttons."""

    def __init__(self, all_pic_list):
        super(Example, self).__init__()
        self.url_list = all_pic_list
        self.current_pic_index = 0
        self.initUI()

    def initUI(self):
        QtGui.QToolTip.setFont(QtGui.QFont('Test', 10))
        self.setToolTip('This is a QWidget widget')

        # label that holds the current picture
        self.pic = QtGui.QLabel(self)
        self.pic.setGeometry(0, 0, 600, 500)
        self.show_pic(self.current_pic_index)

        btn_next = QtGui.QPushButton('Next', self)
        btn_next.setToolTip('This is a QPushButton widget')
        btn_next.resize(btn_next.sizeHint())
        btn_next.clicked.connect(self.fun_next)
        btn_next.move(300, 50)

        btn_prev = QtGui.QPushButton('Previous', self)
        btn_prev.setToolTip('This is a QPushButton widget')
        btn_prev.resize(btn_prev.sizeHint())
        btn_prev.clicked.connect(self.fun_prev)
        btn_prev.move(50, 50)

        self.setGeometry(300, 300, 500, 500)
        self.setWindowTitle('ImgViewer')
        self.show()

    def retrieve_from_url(self, pic_url):
        """Download a picture and return its raw bytes."""
        c = pycurl.Curl()
        # the author's corporate NTLM proxy: delete these three lines
        # if you connect to the internet directly
        c.setopt(pycurl.PROXY, 'http://192.168.87.15:8080')
        c.setopt(pycurl.PROXYUSERPWD, 'LL66269:')
        c.setopt(pycurl.PROXYAUTH, pycurl.HTTPAUTH_NTLM)
        buffer = StringIO()
        c.setopt(pycurl.URL, pic_url)
        c.setopt(c.WRITEDATA, buffer)
        c.perform()
        c.close()
        return buffer.getvalue()

    def show_pic(self, index):
        """Download picture number `index` and display it."""
        pixmap = QtGui.QPixmap()
        pixmap.loadFromData(self.retrieve_from_url(self.url_list[index]))
        self.pic.setPixmap(pixmap)

    def fun_next(self):
        # after the last picture, wrap around to the first
        if self.current_pic_index < len(self.url_list) - 1:
            self.current_pic_index += 1
        else:
            self.current_pic_index = 0
        self.show_pic(self.current_pic_index)

    def fun_prev(self):
        # before the first picture, wrap around to the last
        if self.current_pic_index > 0:
            self.current_pic_index -= 1
        else:
            self.current_pic_index = len(self.url_list) - 1
        self.show_pic(self.current_pic_index)


# ---------------- cnBeta reader ----------------
class Browser_cnbeta(object):

    def __init__(self):
        # one curl handle, reused for every page request
        self.c = pycurl.Curl()
        self.current_thread_pic_list = []  # picture URLs of the open article
        self.qt_app = None                 # created on the first `pic` command
        os.system('cls')                   # clear the screen (Windows only)
        print """
    welcome to cnBETA

    made by bigtrace
    http://www.jianshu.com/p/f04e514c2902
    7/20/2017
"""
        time.sleep(2)
        self.read_shouye(1)

    def wide_chars(self, s):
        # number of characters that take two console columns (East Asian
        # Wide/Fullwidth, e.g. Chinese); used to correct the column padding
        if isinstance(s, str):
            s = s.decode('utf-8')
        return sum(unicodedata.east_asian_width(x) in ('F', 'W') for x in s)

    def read_shouye(self, index):
        """Fetch page `index` of the mobile front page and print the news list."""
        os.system('cls')
        # the author's corporate NTLM proxy: delete these three lines
        # if you connect to the internet directly
        self.c.setopt(pycurl.PROXY, 'http://192.168.87.15:8080')
        self.c.setopt(pycurl.PROXYUSERPWD, 'LL66269:')
        self.c.setopt(pycurl.PROXYAUTH, pycurl.HTTPAUTH_NTLM)
        USER_AGENT = ('Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36')
        self.c.setopt(self.c.FOLLOWLOCATION, 1)
        self.c.setopt(pycurl.VERBOSE, 0)
        self.c.setopt(pycurl.FAILONERROR, True)
        self.c.setopt(pycurl.USERAGENT, USER_AGENT)

        url_tbs = 'http://m.cnbeta.com/wap/index.htm?page=' + str(index)
        print colored(url_tbs, 'blue')
        print colored("\n---------------------", 'green')

        buffer = StringIO()
        self.c.setopt(pycurl.URL, url_tbs)
        self.c.setopt(self.c.WRITEDATA, buffer)
        self.c.perform()
        body = buffer.getvalue().decode('utf-8', 'ignore')
        doc = lxml.html.fromstring(body)

        # every news item is a <div class="list">; its first <a> links to
        # the article, e.g. /wap/view/633687.htm
        news_list = doc.xpath("//div[@class='list']")
        Header_list = []
        link_list = []
        display_shouye = []
        self.header_max_width = 12
        self.title_max_width = 70
        for i, each_news in enumerate(news_list):
            link_url = "http://m.cnbeta.com" + each_news.xpath(".//a/@href")[0]
            title = each_news.xpath(".//a")[0].text_content()
            Header = "index " + colored(str(i), 'yellow')
            each_title = ": " + title
            Header_list.append(title)
            link_list.append(link_url)
            # fixed-width columns, one column narrower per wide character
            Header_fmt = u'{0:<%s}' % (self.header_max_width - self.wide_chars(Header))
            Title_fmt = u'{0:<%s}' % (self.title_max_width - self.wide_chars(each_title))
            try:
                # GB18030 (a superset of GBK) for a Chinese Windows console
                each_display = (Header_fmt.format(Header) + Title_fmt.format(each_title)).encode("gb18030")
            except Exception:
                each_display = (Header_fmt.format(Header) + "Title can't be displayed").encode("gb18030")
            print each_display
            print ""
            display_shouye.append(each_display)

        # remembered for the `t N` and `b` commands
        self.tiezi_link = link_list
        self.shouye_titles = Header_list
        self.display_shouye_list = display_shouye
        print colored("\n---------------------", 'green')

    def read_each_news(self, index):
        """Print article `index` of the current list, followed by its comments."""
        os.system('cls')
        link = self.tiezi_link[int(index)]
        title = self.shouye_titles[int(index)]
        print "===================================================\n\n\n"
        print colored(title, 'magenta') + colored(" <" + link + "> \n", 'blue')

        buffer = StringIO()
        self.c.setopt(pycurl.URL, link)
        self.c.setopt(self.c.WRITEDATA, buffer)
        self.c.perform()
        body = buffer.getvalue().decode('utf-8', 'ignore')
        doc = lxml.html.fromstring(body)

        # publication time and source, spread over several <span>s
        # (named time_spans so that the `time` module is not shadowed)
        time_spans = doc.xpath("//div[@class='time']/span")
        time_subtitle = u"".join(span.text_content() for span in time_spans)
        print ""
        print colored(time_subtitle.encode("gb18030"), 'cyan')
        print ""

        # article body: print each paragraph and collect the picture URLs for `pic`
        self.current_thread_pic_list = []
        for each_paragraph in doc.xpath("//div[@class='content']/p"):
            print ""
            # replace non-breaking spaces (\xa0) with ordinary spaces
            print each_paragraph.text_content().replace(u'\xa0', u' ')
            for each_img in each_paragraph.xpath(".//img/@src"):
                print colored("![](" + each_img + ")", 'yellow')
                self.current_thread_pic_list.append(each_img)

        # quoted passages
        blockquotes = doc.xpath("//div[@class='content']/blockquote")
        for j, each_blockquote in enumerate(blockquotes, 1):
            print "blockquote <" + str(j) + "> ~~~~~~~~~~~\n"
            print each_blockquote.text_content()
            print "~~~~~~~~~~~~~~~~~~~~~~~~~~\n"

        self.view_comment(link)

    def Get_Back_To_shouye(self):
        """Reprint the last news list without downloading it again."""
        os.system('cls')
        for each_display in self.display_shouye_list:
            print each_display

    def exit(self):
        self.c.close()
        os.system('cls')
        print "\n    Bye bye!\n"
        time.sleep(1)
        os.system('cls')

    def view_comment(self, url):
        """Print the first page of comments of the article at `url`."""
        # article  http://m.cnbeta.com/wap/view/633621.htm
        # comments http://m.cnbeta.com/wap/comment/633621.htm?page=1
        tid = re.search(r"(\d+)", url).group(1)
        comment_url = "http://m.cnbeta.com/wap/comment/" + tid + ".htm?page="
        buffer = StringIO()
        self.c.setopt(pycurl.URL, comment_url + "1")
        self.c.setopt(self.c.WRITEDATA, buffer)
        self.c.perform()
        body = buffer.getvalue().decode('utf-8', 'ignore')
        doc = lxml.html.fromstring(body)
        comment_all = doc.xpath("//div[@class='content']")[0].text_content()
        print colored("\n--------------- comment ---------------", 'green')
        print comment_all
        print colored("--------------- finished ---------------", 'green')

    def view_image(self):
        """Open the picture viewer on the pictures of the current article."""
        if not self.current_thread_pic_list:
            print "no pictures in the current article (open one with t N)"
            return
        print "launch picture viewer..."
        # Qt allows a single QApplication per process: create it once, then reuse it
        if self.qt_app is None:
            self.qt_app = QtGui.QApplication(sys.argv)
        viewer = Example(self.current_thread_pic_list)
        # runs until the viewer window is closed, then returns to the prompt
        self.qt_app.exec_()


if __name__ == '__main__':
    app = Browser_cnbeta()
    while True:
        print "\n"
        nb = raw_input('Give me your command: \n').strip()
        try:
            if nb.startswith('s '):
                # s N: page N of the news list
                app.read_shouye(re.search(r"s\s+(\d+)", nb).group(1))
            elif nb.startswith('t '):
                # t N: read article N of the current list
                app.read_each_news(re.search(r"t\s+(\d+)", nb).group(1))
            elif nb == "b":
                app.Get_Back_To_shouye()  # back to the news list
            elif nb == "c":
                os.system('cls')          # clear the screen (Windows)
            elif nb == "e":
                break                     # quit
            elif nb == "pic":
                app.view_image()          # picture viewer
            else:
                print "type correct command"
        except Exception as e:
            # bad index, network error, unexpected page layout, ...
            print "error: %r" % e
    app.exit()
```
