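To make learning Elasticsearch easier, this post uses Docker Compose to set up an environment quickly. If you have not installed Docker and Docker Compose yet, see the post on installing Docker and Docker Compose first.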

Tip: do not use the root user; it is better to create a separate user.

Directory structure

    /home/docker
    ├── docker-compose.yml
    ├── elasticsearch
    │   ├── Dockerfile
    │   ├── config
    │   │   └── elasticsearch.yml
    │   ├── data
    │   ├── logs
    │   └── plugins
    └── kibana
        ├── Dockerfile
        └── config
            └── kibana.yml
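You can also create this layout from the shell. Below is a minimal sketch. create_es_layout is a hypothetical helper name, and /home/docker is just the default taken from the tree above. The helper creates only the directories, not the Dockerfiles or .yml files.

```shell
# Hypothetical helper: create the directory layout shown above under a base
# directory (defaults to /home/docker). It only creates directories; the
# Dockerfiles and the .yml files still have to be written afterwards.
create_es_layout() {
  base="${1:-/home/docker}"
  mkdir -p \
    "$base/elasticsearch/config" \
    "$base/elasticsearch/data" \
    "$base/elasticsearch/logs" \
    "$base/elasticsearch/plugins" \
    "$base/kibana/config"
}
```

For example, run create_es_layout /home/docker as the (non-root) user that will own these files. Then put the configuration files described below into place.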

Configuration

Dockerfile for ES

For reference, see the Dockerfile for elasticsearch.

    FROM docker.elastic.co/elasticsearch/elasticsearch:7.3.0@sha256:8c7075b17918a954e34d71ab1661ea14737429426376d045a23e79c3632346ab

docker-compose.yml

    version: "3"
    services:
      elasticsearch:
        build: ./elasticsearch
        container_name: "elasticsearch"
        ports:
          - "9200:9200"
          - "9300:9300"
        volumes:
          - "./elasticsearch/data:/usr/share/elasticsearch/data:rw"
          - "./elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml"
          - "./elasticsearch/logs:/usr/share/elasticsearch/logs:rw"
          - "./elasticsearch/plugins/ik:/usr/share/elasticsearch/plugins/ik"
        # bootstrap.memory_lock: true in elasticsearch.yml only takes effect
        # if the container is allowed to lock memory
        ulimits:
          memlock:
            soft: -1
            hard: -1
      kibana:
        build: ./kibana
        container_name: kibana
        ports:
          - "5601:5601"
        volumes:
          - ./kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml
        depends_on:
          - "elasticsearch"
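Before starting the stack, check that every host-side path in the volumes section actually exists. With this short volume syntax, Docker creates a missing host path as an empty directory. A missing elasticsearch.yml or kibana.yml therefore becomes a directory that cannot be mounted over the file in the image, and the container fails to start.

Below is a minimal sketch. check_mounts is a hypothetical helper name, and the path list is copied from the compose file above. The Dockerfiles are included because build: needs them.

```shell
# Hypothetical pre-flight check for the paths used by docker-compose.yml.
# Prints every missing path to stderr; returns 1 if anything is missing.
check_mounts() {
  base="${1:-/home/docker}"
  missing=0
  # single files: a missing one would be auto-created as a directory
  for f in elasticsearch/config/elasticsearch.yml kibana/config/kibana.yml \
           elasticsearch/Dockerfile kibana/Dockerfile; do
    if [ ! -f "$base/$f" ]; then
      echo "missing file: $base/$f" >&2
      missing=1
    fi
  done
  # directories mounted into the elasticsearch container
  for d in elasticsearch/data elasticsearch/logs elasticsearch/plugins/ik; do
    if [ ! -d "$base/$d" ]; then
      echo "missing directory: $base/$d" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, run check_mounts /home/docker before docker-compose up -d and fix whatever it reports.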

elasticsearch.yml

    discovery.type: "single-node"
    bootstrap.memory_lock: true
    network.host: 0.0.0.0
    http.port: 9200
    transport.tcp.port: 9300
    path.logs: /usr/share/elasticsearch/logs
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    xpack.security.audit.enabled: true

Dockerfile for kibana

For reference, see the Dockerfile for kibana.

    FROM docker.elastic.co/kibana/kibana:7.3.0@sha256:f283049e917e678e2cf160e28f0739abf562fe02e9a61b318f840e70c45135de

kibana.yml

    # URL Kibana uses to reach ES; change it to match your own setup
    elasticsearch.hosts: "http://elasticsearch:9200"
    server.name: kibana
    server.host: "0"

Once all the configuration files are in place, change into the directory containing docker-compose.yml:

    cd /home/docker

Then run:

    docker-compose build
    docker-compose up -d
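docker-compose up -d returns as soon as the containers have started. Elasticsearch itself may need some more seconds before it answers on port 9200. Below is a minimal sketch for polling it, assuming curl is installed on the host. wait_for_http is a hypothetical helper name.

```shell
# Hypothetical helper: poll an HTTP endpoint once per second until it answers
# with a success status, or give up after a timeout (in seconds).
wait_for_http() {
  url="$1"
  timeout="${2:-60}"
  elapsed=0
  while [ "$elapsed" -lt "$timeout" ]; do
    # -s: no progress output; -f: treat HTTP error statuses as failure
    if curl -sf "$url" > /dev/null; then
      return 0
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
  return 1
}
```

For example: wait_for_http http://localhost:9200 120 && echo "Elasticsearch is up".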

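Notes

1. On startup you may get java.nio.file.AccessDeniedException.

Fix: this happens because the corresponding directories lack the required permissions. Change their owner recursively with:

    chown -R <linux-username> <elasticsearch-directory>

You need to switch to root (or use sudo) to run this command.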
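The official Elasticsearch image runs the Elasticsearch process as UID 1000 (GID 0). The bind-mounted data and logs directories must therefore be writable by UID 1000. Changing the owner to your Linux user only helps if that user's UID is 1000 (check with id -u).

Below is a minimal sketch for spotting files with a different owner. find_not_owned_by is a hypothetical helper name, and it relies on find's -user test accepting a numeric ID.

```shell
# Hypothetical helper: list every path under a directory whose owner is not
# the given numeric UID (default 1000, the UID Elasticsearch runs as in the
# official image).
find_not_owned_by() {
  dir="$1"
  uid="${2:-1000}"
  find "$dir" ! -user "$uid"
}
```

For example, find_not_owned_by /home/docker/elasticsearch 1000 lists every offending path. If your UID is not 1000, one fix is to give the directories to UID 1000 instead. As root, run: chown -R 1000:0 /home/docker/elasticsearch/data /home/docker/elasticsearch/logs.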

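2. Startup fails with: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

Fix: on the Docker host, append the following line to the end of /etc/sysctl.conf:

    vm.max_map_count=262144

Then run sysctl -p as root so the new value takes effect without a reboot.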

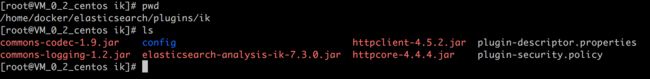
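You may want to check the current value before and after editing sysctl.conf. Below is a small sketch that reads the live kernel setting, which on Linux is exposed as /proc/sys/vm/max_map_count. max_map_count_ok is a hypothetical helper name. Its optional file argument exists only so you can try it against a test file.

```shell
# Hypothetical helper: succeed if vm.max_map_count is at least 262144, the
# minimum named in the Elasticsearch error message above.
max_map_count_ok() {
  file="${1:-/proc/sys/vm/max_map_count}"
  value=$(cat "$file") || return 2
  [ "$value" -ge 262144 ]
}
```

For example: max_map_count_ok || echo "vm.max_map_count is still below 262144". Running sysctl vm.max_map_count prints the same value.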

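Installing the IK Chinese analysis plugin

Download the zip file from the IK analysis plugin download page and unzip it into the ik folder under plugins. That folder is elasticsearch/plugins/ik, which docker-compose.yml mounts into the container. Pick the release whose version matches Elasticsearch exactly (7.3.0 here), because Elasticsearch refuses to load plugins built for a different version.

Note that the Elasticsearch instance must be restarted afterwards.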
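Every Elasticsearch plugin ships a plugin-descriptor.properties file that records, among other things, the elasticsearch.version it was built for. Below is a hedged sketch that does two things:

- checks that the unzipped IK files sit directly in plugins/ik rather than in a nested subfolder;
- prints that version so you can compare it with 7.3.0.

ik_plugin_version is a hypothetical helper name.

```shell
# Hypothetical helper: print the Elasticsearch version an unzipped plugin was
# built for, or fail if the descriptor is not at the top of the folder.
ik_plugin_version() {
  dir="${1:-/home/docker/elasticsearch/plugins/ik}"
  desc="$dir/plugin-descriptor.properties"
  if [ ! -f "$desc" ]; then
    echo "no plugin-descriptor.properties in $dir (zip unpacked into a subfolder?)" >&2
    return 1
  fi
  grep '^elasticsearch.version=' "$desc" | cut -d= -f2
}
```

For example:

1. Unzip the downloaded archive with unzip <ik-zip-file> -d /home/docker/elasticsearch/plugins/ik.
2. Check that ik_plugin_version prints 7.3.0.
3. Restart with docker-compose restart elasticsearch.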

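Testing

Open Kibana at http://localhost:5601 if you are on the Docker host, per the port mapping above. In Dev Tools, check whether the Chinese analysis plugin works: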

    GET /_analyze
    {
      "analyzer": "ik_max_word",
      "text": "你好,我是中国人"
    }

Response:

    {
      "tokens" : [
        {
          "token" : "你好",
          "start_offset" : 0,
          "end_offset" : 2,
          "type" : "CN_WORD",
          "position" : 0
        },
        {
          "token" : "我",
          "start_offset" : 3,
          "end_offset" : 4,
          "type" : "CN_CHAR",
          "position" : 1
        },
        {
          "token" : "是",
          "start_offset" : 4,
          "end_offset" : 5,
          "type" : "CN_CHAR",
          "position" : 2
        },
        {
          "token" : "中国人",
          "start_offset" : 5,
          "end_offset" : 8,
          "type" : "CN_WORD",
          "position" : 3
        },
        {
          "token" : "中国",
          "start_offset" : 5,
          "end_offset" : 7,
          "type" : "CN_WORD",
          "position" : 4
        },
        {
          "token" : "国人",
          "start_offset" : 6,
          "end_offset" : 8,
          "type" : "CN_WORD",
          "position" : 5
        }
      ]
    }
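Without Kibana, you can send the same _analyze request from the Docker host with curl. Elasticsearch accepts both GET and POST for _analyze, and curl -d sends a POST.

Below is a hedged sketch with these assumptions:

- es_analyze and the ES_URL variable are hypothetical names.
- The default URL assumes the 9200 port mapping above.
- The text is pasted into the JSON body without escaping, so it must not contain double quotes or backslashes.

```shell
# Hypothetical helper: run the _analyze API from the command line.
# Usage: es_analyze TEXT [ANALYZER]; ES_URL overrides the default endpoint.
es_analyze() {
  text="$1"
  analyzer="${2:-ik_max_word}"
  url="${ES_URL:-http://localhost:9200}"
  # NOTE: $text is not JSON-escaped; avoid quotes and backslashes in it
  curl -sf -H 'Content-Type: application/json' \
    "$url/_analyze?pretty" \
    -d "{\"analyzer\": \"$analyzer\", \"text\": \"$text\"}"
}
```

For example, es_analyze '你好,我是中国人' should print a token list in the same format as the Dev Tools response above.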

That's it: we now have a simple Elasticsearch and Kibana environment.