Hive Exercises (Part 2)

1. Create the orders table

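CREATE TABLE orders (

order_id string COMMENT 'order ID',

user_id string COMMENT 'user ID',

eval_set string COMMENT 'marks whether the order belongs to the training set or the prediction set',

order_number string COMMENT 'sequence number of the order for this user',

order_dow string COMMENT 'day of the week, 0-6',

order_hour_of_day string COMMENT 'hour of the day (24-hour clock)',

days_since_prior_order string COMMENT 'days since the previous order')

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS TEXTFILE;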

Load the data:

LOAD DATA LOCAL INPATH '/home/wl/hive/data/data/orders.csv' OVERWRITE INTO TABLE orders;

2. Create the trains table

CREATE TABLE trains (

order_id string,

product_id string,

add_to_cart_order int,

reordered int)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS TEXTFILE;

Load the data:

LOAD DATA LOCAL INPATH '/home/wl/hive/data/data/order_products__train.csv' OVERWRITE INTO TABLE trains;

Command to kill a Hadoop job (on newer Hadoop versions `hadoop job` is deprecated in favor of `mapred job`):

hadoop job -kill job_1553218998062_0015

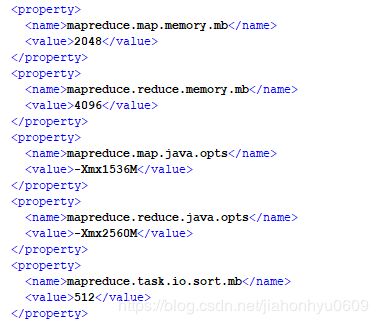

To change the JVM memory settings, edit mapred-site.xml under hadoop/etc/hadoop:

-- Using the data in the two tables above, find which products each user purchased

Method 1:

select user_id,product_id

from

(select order_id,user_id from orders)ord

join

(select order_id,product_id from trains)tra

on ord.order_id=tra.order_id

limit 10;

Method 2:

select user_id,product_id

from orders join trains

on orders.order_id=trains.order_id

limit 2;
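Both methods are an inner join on order_id. As a rough illustration of what that join does, here is a minimal Python sketch on made-up rows. The sample values and the helper name `inner_join` are assumptions for illustration, and the sketch assumes order_id is unique in orders.

```python
# Toy rows standing in for the orders and trains tables (made-up values).
orders = [
    {"order_id": "1", "user_id": "u1"},
    {"order_id": "2", "user_id": "u2"},  # has no products in trains
    {"order_id": "3", "user_id": "u1"},
]
trains = [
    {"order_id": "1", "product_id": "p10"},
    {"order_id": "1", "product_id": "p11"},
    {"order_id": "3", "product_id": "p10"},
    {"order_id": "9", "product_id": "p99"},  # no matching order
]


def inner_join(orders, trains):
    """Return (user_id, product_id) pairs for rows whose order_id matches."""
    # Assumes each order_id appears once in orders.
    user_by_order = {o["order_id"]: o["user_id"] for o in orders}
    return [
        (user_by_order[t["order_id"]], t["product_id"])
        for t in trains
        if t["order_id"] in user_by_order  # unmatched rows are dropped
    ]


pairs = inner_join(orders, trains)
print(pairs)
```

Rows without a match on either side (order 2 and order 9 here) do not appear in the result, which is the behavior of a plain `join` in the queries above.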

### 1. How many orders does each user have?

select user_id,count(1) as orders_cnt

from orders group by user_id limit 10;

### 2. On average, how many products are in each of a user's orders?

select ord.user_id,avg(pri.products_cnt) as avg_prod

from

(select order_id,user_id from orders)ord

join

(select order_id,count(1) as products_cnt from priors group by order_id)pri

on ord.order_id=pri.order_id

group by ord.user_id

limit 10;

### 3. Distribution of each user's orders across the days of the week

set hive.cli.print.header=true;

select

user_id,

sum(case order_dow when '0' then 1 else 0 end) as dow_0,

sum(case order_dow when '1' then 1 else 0 end) as dow_1,

sum(case order_dow when '2' then 1 else 0 end) as dow_2,

sum(case order_dow when '3' then 1 else 0 end) as dow_3,

sum(case order_dow when '4' then 1 else 0 end) as dow_4,

sum(case order_dow when '5' then 1 else 0 end) as dow_5,

sum(case order_dow when '6' then 1 else 0 end) as dow_6

from orders

group by user_id

limit 20;
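The `sum(case ... end)` pattern pivots one row per order into one column per weekday. A minimal Python sketch of the same counting logic follows. The sample rows and the name `dow_distribution` are assumptions for illustration.

```python
from collections import defaultdict

# (user_id, order_dow) pairs; order_dow is a string '0'..'6' as in the table.
rows = [("u1", "0"), ("u1", "0"), ("u1", "3"), ("u2", "6")]


def dow_distribution(rows):
    """Count orders per user for each day of the week (index 0..6)."""
    counts = defaultdict(lambda: [0] * 7)
    for user_id, order_dow in rows:
        # Equivalent to adding 1 to the matching dow_N column.
        counts[user_id][int(order_dow)] += 1
    return dict(counts)


dist = dow_distribution(rows)
print(dist)
```

Each list position corresponds to one of the dow_0 ... dow_6 columns in the query.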

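### 4. Average number of products purchased per purchase day

For example, suppose a user bought products on two days:

>day 1: two orders, order 1 with 10 products and order 2 with 15 products, 25 in total

day 2: one order with 12 products

day 3: no purchase

>This user's average number of products per purchase day is:

(25+12)/2. Note that there are **2 days** with purchases, not **3 days**.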

select ord.user_id,sum(pri.products_cnt)/ count(distinct ord.days_since_prior_order) as avg_prod

from

(select order_id,user_id, if(days_since_prior_order='',0,days_since_prior_order) as days_since_prior_order from orders)ord

join

(select order_id,count(1) as products_cnt from priors group by order_id)pri

on ord.order_id=pri.order_id

group by ord.user_id

limit 10;
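The worked example can be checked with a few lines of Python. This sketch assumes each order carries an explicit purchase-day label, which is a simplification: the query above uses the distinct values of days_since_prior_order as a stand-in for distinct purchase days. The name `avg_products_per_purchase_day` is hypothetical.

```python
from collections import defaultdict

# (user_id, purchase_day, products_in_order), matching the example:
# day 1 has two orders (10 and 15 products), day 2 has one order (12).
order_rows = [
    ("u1", "day1", 10),
    ("u1", "day1", 15),
    ("u1", "day2", 12),
]


def avg_products_per_purchase_day(order_rows):
    """Total products divided by the number of distinct days with a purchase."""
    totals = defaultdict(int)
    days = defaultdict(set)
    for user_id, day, n_products in order_rows:
        totals[user_id] += n_products
        days[user_id].add(day)  # a set keeps each purchase day only once
    return {u: totals[u] / len(days[u]) for u in totals}


avg = avg_products_per_purchase_day(order_rows)
print(avg)
```

Day 3 never appears in the input, so it does not enter the denominator, which mirrors the "2 days, not 3" point in the example.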

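### 5. What are each user's three favorite products? The final result can be three columns or a single string

For example, suppose user_1 frequently buys:

>product_1: purchased 10 times

product_2: purchased 4 times

product_3: purchased 2 times

product_4: purchased 1 time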

select user_id, collect_list(product_id) as pro_top3,size(collect_list(product_id)) as top_size

from

(

select user_id,product_id, row_number() over(partition by user_id order by top_cnt desc) as row_num

from

(select ord.user_id as user_id,pri.product_id as product_id,count(1) as top_cnt

from orders ord

join

(select * from priors limit 1000)pri on ord.order_id=pri.order_id

group by ord.user_id,pri.product_id

)t

)t1 where row_num <=3 group by user_id limit 10;
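The intent of the query (count purchases per user and product, rank within each user, keep the top three) can be sketched in Python with the example data above. The function name `top_products` is an assumption for illustration.

```python
from collections import Counter, defaultdict

# One (user_id, product_id) pair per purchase, matching the example counts.
purchases = (
    [("user_1", "product_1")] * 10
    + [("user_1", "product_2")] * 4
    + [("user_1", "product_3")] * 2
    + [("user_1", "product_4")] * 1
)


def top_products(purchases, n=3):
    """Return each user's n most frequently purchased products."""
    counts = defaultdict(Counter)
    for user_id, product_id in purchases:
        counts[user_id][product_id] += 1  # one count per (user, product)
    # most_common sorts by count, descending, like row_number() over top_cnt desc.
    return {u: [p for p, _ in c.most_common(n)] for u, c in counts.items()}


top3 = top_products(purchases)
print(top3)
```

The key point is that counting must collapse to one row per (user, product) before ranking; otherwise the same product can fill all three slots.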

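Buckets are mainly used for sampling:

There are as many output files as there are reducers,

because each reducer writes one file.

4 buckets, 4 reducers => what would have been one file is split into 4 files.

With y = 16: only 4*(1/16) = 1/4 of the first bucket's data needs to be read.

Sampling is also used when dealing with data skew.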

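With only 1 reducer:

all map output lands on that single reducer, which increases the load on that reducer node.

For better parallelism, with 3 reducers:

the map output is distributed across the 3 reducers, which speeds up the reduce phase.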
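How map output is spread over reducers can be illustrated with a small sketch. Hadoop's default partitioner assigns a key to reducer `hash(key) % num_reducers`; here `zlib.crc32` is used as a stand-in hash (an assumption, chosen only so the result is reproducible), and the keys are made up.

```python
import zlib
from collections import Counter


def reducer_for(key, num_reducers):
    """Pick a reducer index for a key by hashing it modulo the reducer count."""
    # crc32 is a stand-in for the key's hash code in a real job.
    return zlib.crc32(key.encode("utf-8")) % num_reducers


keys = [f"user_{i}" for i in range(1000)]  # hypothetical map output keys

one = Counter(reducer_for(k, 1) for k in keys)    # everything goes to reducer 0
three = Counter(reducer_for(k, 3) for k in keys)  # spread over reducers 0..2

print(dict(one))
print(dict(three))
```

With one reducer every key maps to index 0; with three reducers the same keys are divided among the indices 0, 1 and 2, so each reducer handles only part of the data.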

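With 4 buckets:

y is a multiple (16): each sample takes 4*(1/16) = 1/4 of a bucket => only a quarter of one bucket file is needed

y is a factor (2): each sample takes 4*(1/2) = 2 buckets

buckets=64, y=32

each of the y samples contains 64*(1/32) = 2 buckets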

tablesample(bucket x out of y)

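y must be a multiple or a factor of the table's total number of buckets; Hive determines the sampling ratio from y. For example, if the table has 64 buckets, then y=32 samples 64/32 = 2 buckets of data, and y=128 samples 64/128 = 1/2 of a bucket. x specifies which bucket to start sampling from.

For example, if the table has 32 buckets, tablesample(bucket 1 out of 16) samples 32/16 = 2 buckets in total: bucket 1 and bucket 1+16 = 17.

Both bucket numbers leave the same remainder modulo 16:

1 % 16 = 1

17 % 16 = 1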
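The bucket arithmetic above can be written out as a small Python helper. This is a sketch of the rule as described in these notes, not Hive's implementation; the function name `sampled_buckets` and its return shape (bucket numbers plus the fraction of each bucket that is read) are assumptions for illustration.

```python
from fractions import Fraction


def sampled_buckets(total_buckets, x, y):
    """Return (bucket numbers read, fraction of each bucket read)
    for tablesample(bucket x out of y) on a table with total_buckets buckets."""
    if not 1 <= x <= y:
        raise ValueError("x must satisfy 1 <= x <= y")
    if total_buckets % y == 0:
        # y is a factor: read total_buckets / y whole buckets, stepping by y.
        return list(range(x, total_buckets + 1, y)), Fraction(1)
    if y % total_buckets == 0:
        # y is a multiple: read only part of a single bucket.
        bucket = (x - 1) % total_buckets + 1
        return [bucket], Fraction(total_buckets, y)
    raise ValueError("y must be a multiple or a factor of total_buckets")


print(sampled_buckets(32, 1, 16))   # two whole buckets: 1 and 17
print(sampled_buckets(64, 1, 128))  # half of bucket 1
print(sampled_buckets(4, 1, 16))    # a quarter of bucket 1
```

The three calls reproduce the cases discussed in the notes: 32 buckets with y=16, 64 buckets with y=128, and 4 buckets with y=16.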

timestamp

881250949 1997/12/4 23:55:49
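The pair above is a Unix timestamp (seconds since 1970-01-01 UTC) and its human-readable form. A minimal Python sketch of the conversion follows; it assumes the wall-clock time shown is in UTC+8, which is the offset under which the two values agree.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the readable time in the notes is expressed in UTC+8.
UTC8 = timezone(timedelta(hours=8))


def to_local(ts, tz=UTC8):
    """Convert a Unix timestamp in seconds to a timezone-aware datetime."""
    return datetime.fromtimestamp(ts, tz)


dt = to_local(881250949)
print(dt.strftime("%Y/%m/%d %H:%M:%S"))  # 1997/12/04 23:55:49
```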

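Whenever a user generates an event, the timestamp is used to determine the order in which the events occurred.

user, product, timestamp: which user viewed which product, and when.

Requirement:

In the recommendation data, what is the most recent time, and what is the earliest?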

select max(`timestamp`) as max_t,min(`timestamp`) as min_t from udata; (the quote marks around the column name are backticks, the key to the left of 1 on the keyboard)

893286638 874724710

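Take the most recent time point (`893286638`) as the reference. We can then compute how many days each record lies before the most recent one. (The most recent time is necessarily the largest numerically.)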

select user_id ,collect_list(cast(days as int)) as day_list

from (

select user_id, (cast(893286638 as bigint) - cast(timestamps as bigint))/(60*60*24) as days from udata )t

group by user_id

limit 10;
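The day computation in the query is a difference in seconds divided by the number of seconds in a day. A small Python sketch using the two timestamps returned earlier (the function name `days_before_reference` is an assumption for illustration):

```python
SECONDS_PER_DAY = 60 * 60 * 24
REFERENCE_TS = 893286638  # most recent timestamp in the data


def days_before_reference(ts, reference=REFERENCE_TS):
    """Number of days (possibly fractional) between ts and the reference time."""
    return (reference - ts) / SECONDS_PER_DAY


oldest = days_before_reference(874724710)  # earliest timestamp in the data
print(int(oldest))  # truncated to whole days, like cast(days as int)
```

The reference point itself maps to 0 days, and the earliest record is a little under 215 days before it, so the truncated value is 214.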

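Time decay:

A user has many events; in behavior analysis, the more recent an event is, the more weight it should carry.

Time distribution: [1,3,5,6] days. We need a decay function such as exp(-t/2).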

sum([exp(-t/2)*score for t, score in zip(days, score_list)])

select user_id,sum(exp(-(cast(893286638 as bigint)-cast(`timestamps` as bigint))/(60*60*24)/2)*rating) as decayed_score from badou.udata

group by user_id

limit 10;
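The time-decayed sum can be tried out on the [1,3,5,6] day example with a short Python sketch. The ratings used here are hypothetical (all 1), and the names `decayed_score` and `tau` are assumptions for illustration; `tau=2` corresponds to the exp(-t/2) function above.

```python
from math import exp


def decayed_score(days, scores, tau=2.0):
    """Sum of scores, each weighted by exp(-t / tau) for its age t in days."""
    # zip pairs each age with its own score; older events get smaller weights.
    return sum(exp(-t / tau) * s for t, s in zip(days, scores))


days = [1, 3, 5, 6]
scores = [1, 1, 1, 1]  # hypothetical ratings, one per event

total = decayed_score(days, scores)
print(round(total, 4))
```

An event from today (t = 0) keeps its full score, while the event from 6 days ago contributes only about 5% of its score.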